Experiment Log

Some of our code is available in our GitLab Repository.

Based on our research, requirements and prototyping, we carried out the following experiments:

| Number | Title | Details | Results | Done By | Date |

|---|---|---|---|---|---|

| 1. | Testing the robot out | ||||

| 1.1. | Setting up the robot | We set up the Eclipse IDE for the robot and tried connecting to it and uploading one of the code samples available online. | We managed to set up the environment with a reliable connection. | Shanice | 11/12/16 |

| 1.2. | Facial Tracking Feature | We used Sota’s facial tracking feature to check that it could identify a face anywhere in the room. | Sota was successful in identifying the first face it comes across in every test. | Shanice | 11/12/16 |

| 1.3. | Taking a Picture | We tried to take a picture using SOTA’s built-in camera; it looks for a person who is smiling, then takes a picture of them. | It takes pictures of medium quality but gives a memory error after running it several times. We are trying to find the cause of and solve this problem. | Jaro | 11/12/16 |

| 1.4. | Making an Audio Recording | We made Sota record a brief voice sample using its built-in microphone, then repeat that recording through its speakers. | The audio recorded contained a lot of background noise. We are looking into using external APIs to filter out some background noise such that speech is audible. | Shanice | 11/12/16 |

| 1.5. | Text-to-Speech (TTS) Synthesis | We tried to make SOTA speak using the built-in Text To Speech API. | It worked with the Japanese words but not with English words. | Jaro | 11/12/16 |

| 1.6. | Motion Sample | We ran sample code to test Sota’s arm movements. | Sota could perform the Motion Sample code without errors every time we ran it. | Jaro | 11/12/16 |

| 2. | Testing External APIs | ||||

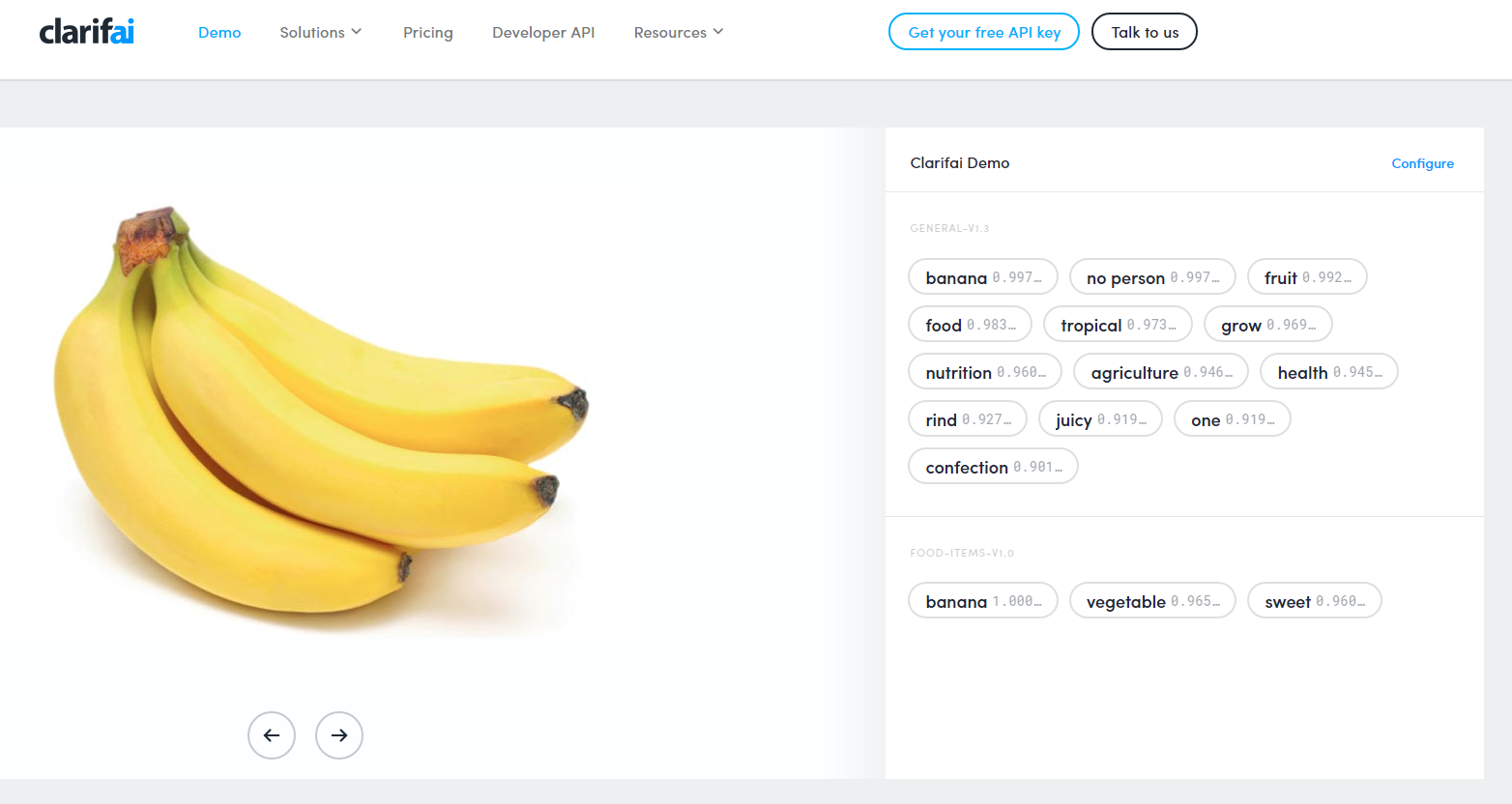

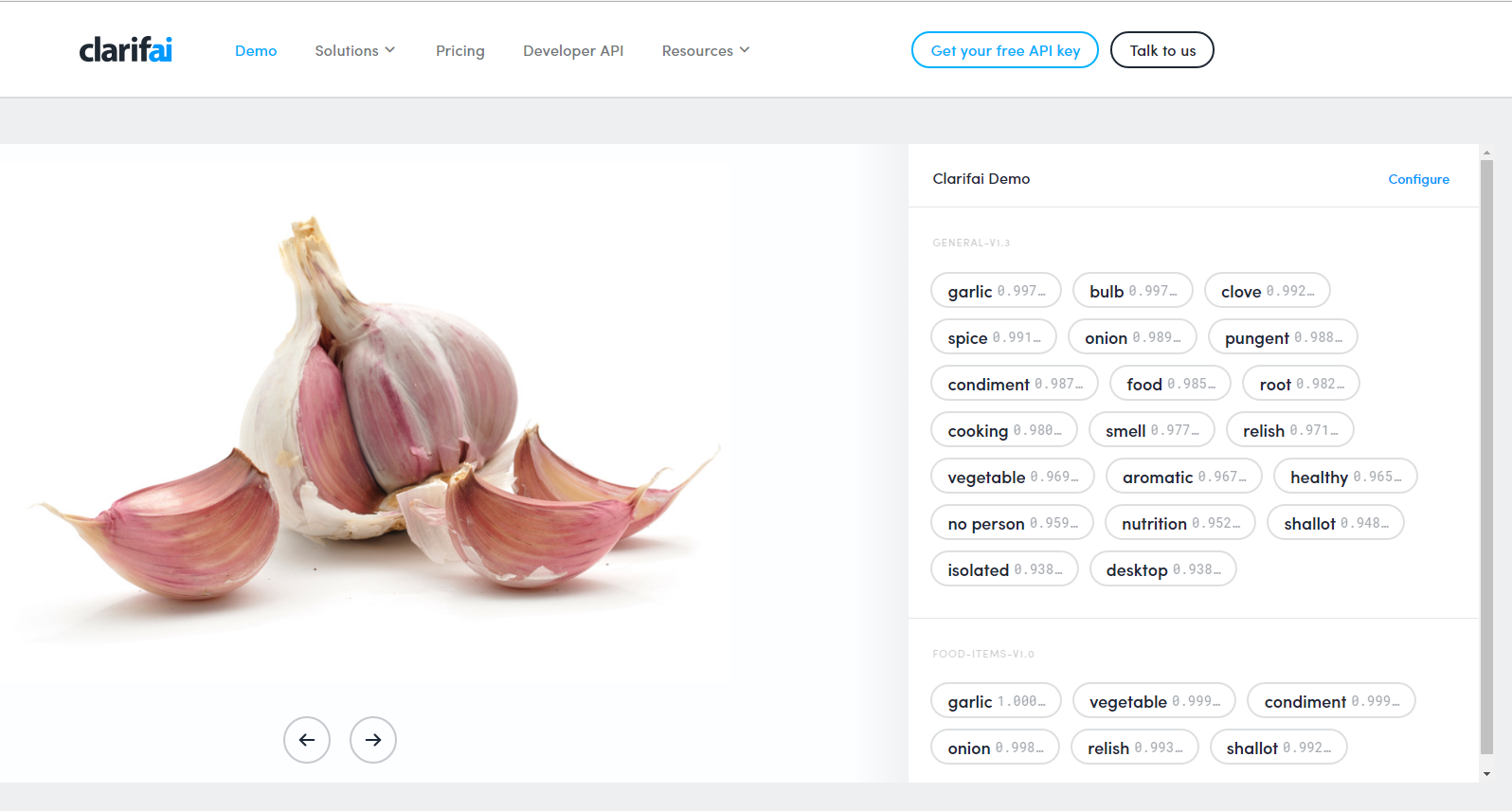

| 2.1. | Clarif.ai Food Recognition | We used Clarif.ai’s online demo to try to identify images containing fruits and vegetables. Furthermore, it shows the probability of each tag, allowing us to filter out items with above a particular percentage to improve accuracy of our ingredient recognition feature. | The accuracy overall was flawless and their image recognition model always identified the food in the picture. | Jaro | 11/12/16 |

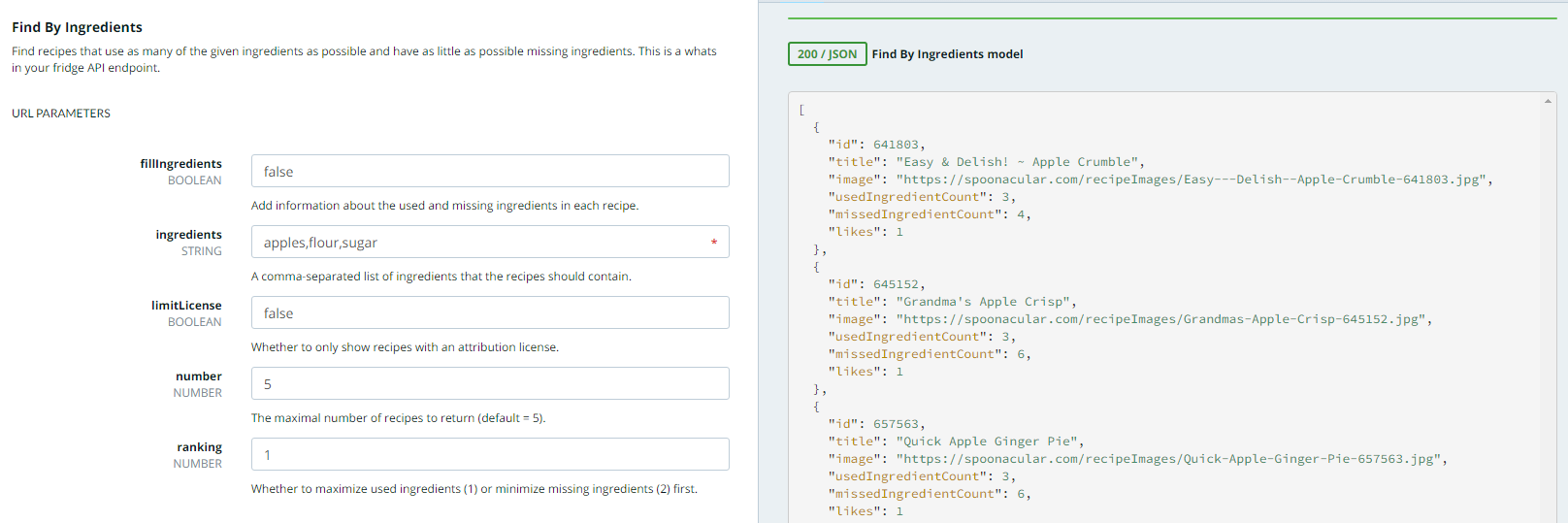

| 2.2. | Spoonacular API | We experimented on Spoonacular’s API through its online demo to find recipes according to ingredients, e.g. apples, flour and sugar. | Spoonacular’s API was easy to use and the results returned were relevant to the ingredients. | Jaro | 11/12/16 |

Videos of Experiments

While carrying out the experiments, we recorded videos of experiments 1.2, 1.3 and 1.6.

Video 1 - Sota looking for a face, then taking a picture (Experiments 1.2 and 1.3)

Video 2 - Sota moving its arms (Experiment 1.6)

Images Taken (Experiment 1.3)

These are images taken by Sota in experiment 1.3.

Image 1 - A picture taken by Sota

Image 2 - A picture taken by Sota

The pictures were of good quality; however, they looked dark despite good lighting.

Clarif.ai Food Recognition (Experiment 2.1)

Image 3 - A screenshot of the result of testing the Clarif.ai image recognition demo with a picture of a banana.

Image 4 - A screenshot of the result of testing the Clarif.ai image recognition demo with a picture of garlic.
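The per-tag probabilities mentioned in experiment 2.1 could be used to keep only confident ingredient tags. The sketch below shows that filtering step in Java, assuming the tags have already been extracted from the Clarif.ai response into a map from tag name to probability (parsing is not shown). The class `TagFilter`, the method `filterTags`, the 0.90 threshold and the sample values are all hypothetical and chosen for illustration; they are not taken from our screenshots.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: keeps only recognition tags whose probability is at or
// above a chosen threshold. Assumes the tag -> probability pairs have already
// been extracted from the Clarif.ai response.
public class TagFilter {

    public static Map<String, Double> filterTags(Map<String, Double> tags, double threshold) {
        // LinkedHashMap preserves the original tag order in the result.
        Map<String, Double> kept = new LinkedHashMap<>();
        for (Map.Entry<String, Double> entry : tags.entrySet()) {
            // Drop low-confidence tags so they are not treated as ingredients.
            if (entry.getValue() >= threshold) {
                kept.put(entry.getKey(), entry.getValue());
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // Illustrative values only, not real Clarif.ai output.
        Map<String, Double> tags = new LinkedHashMap<>();
        tags.put("banana", 0.99);
        tags.put("fruit", 0.97);
        tags.put("yellow", 0.85);
        tags.put("apple", 0.40);

        Map<String, Double> confident = filterTags(tags, 0.90);
        System.out.println(confident.keySet()); // prints [banana, fruit]
    }
}
```

A higher threshold trades recall for precision: fewer wrong ingredients are passed on to the recipe search, at the cost of occasionally dropping a correct but less certain tag.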

Spoonacular API (Experiment 2.2)

Image 5 - A screenshot of the result of testing the Spoonacular recipe API demo with three ingredients: apple, flour and sugar.

The API demo returned three recipes that use the ingredients that we specified.
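Outside the demo, the same query would be sent as an HTTP request. The Java sketch below only builds the request URL for the ingredients used in experiment 2.2; it does not send anything. The base URL and the parameter names `ingredients`, `number` and `apiKey` are assumptions based on Spoonacular's public "find recipes by ingredients" endpoint and should be checked against the current API documentation. The class `RecipeQuery` and method `buildUrl` are hypothetical.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.List;

// Sketch: builds a request URL for Spoonacular's "find recipes by ingredients"
// endpoint. The base URL and parameter names are ASSUMPTIONS taken from
// Spoonacular's public API docs; no network request is made here.
public class RecipeQuery {

    private static final String BASE_URL =
            "https://api.spoonacular.com/recipes/findByIngredients";

    public static String buildUrl(List<String> ingredients, int maxRecipes, String apiKey) {
        // The endpoint expects a comma-separated list of ingredients.
        String joined = String.join(",", ingredients);
        return BASE_URL
                + "?ingredients=" + URLEncoder.encode(joined, StandardCharsets.UTF_8)
                + "&number=" + maxRecipes
                + "&apiKey=" + URLEncoder.encode(apiKey, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // Same ingredients as experiment 2.2; "YOUR_API_KEY" is a placeholder.
        String url = buildUrl(List.of("apples", "flour", "sugar"), 3, "YOUR_API_KEY");
        System.out.println(url);
    }
}
```

Setting `number` to 3 matches the three recipes the demo returned; the JSON response would still need to be parsed before the recipes could be presented to the user.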