This page summarises our HCI considerations and research for LeChefu. These findings contribute to improving overall User Experience.

Prototype

For prototyping, we created storyboards showing how LeChefu would interact with a user while carrying out key tasks. We also created an architecture diagram showing how we intend to link all the components to implement the key features. Since we are not building a website or application, we do not have wireframes for a user interface.

Sketches and Mockups

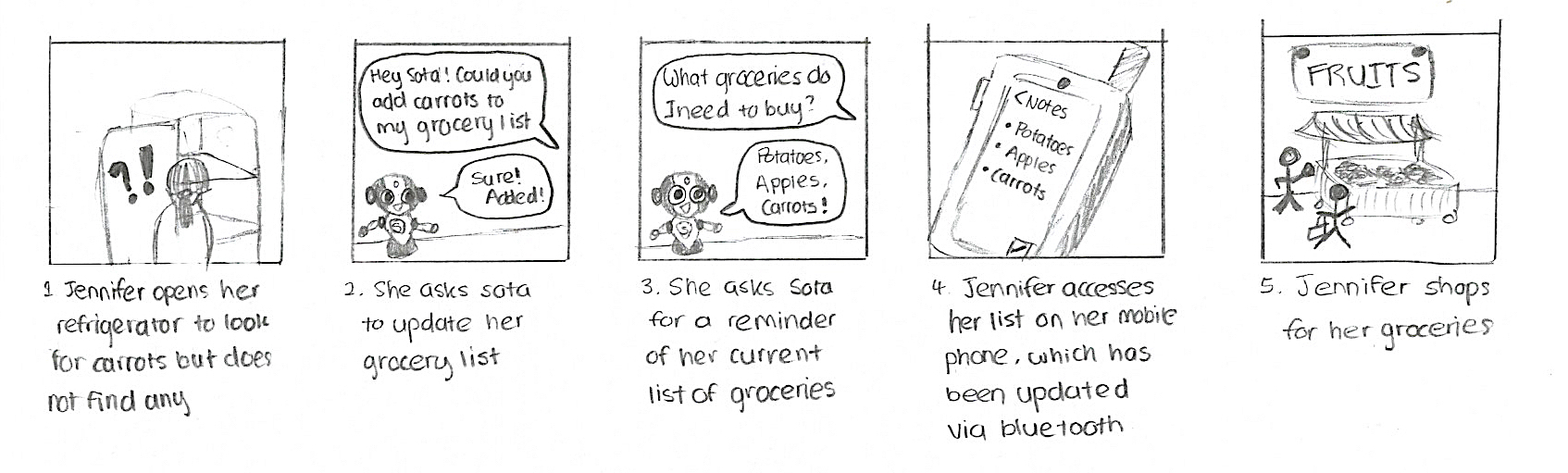

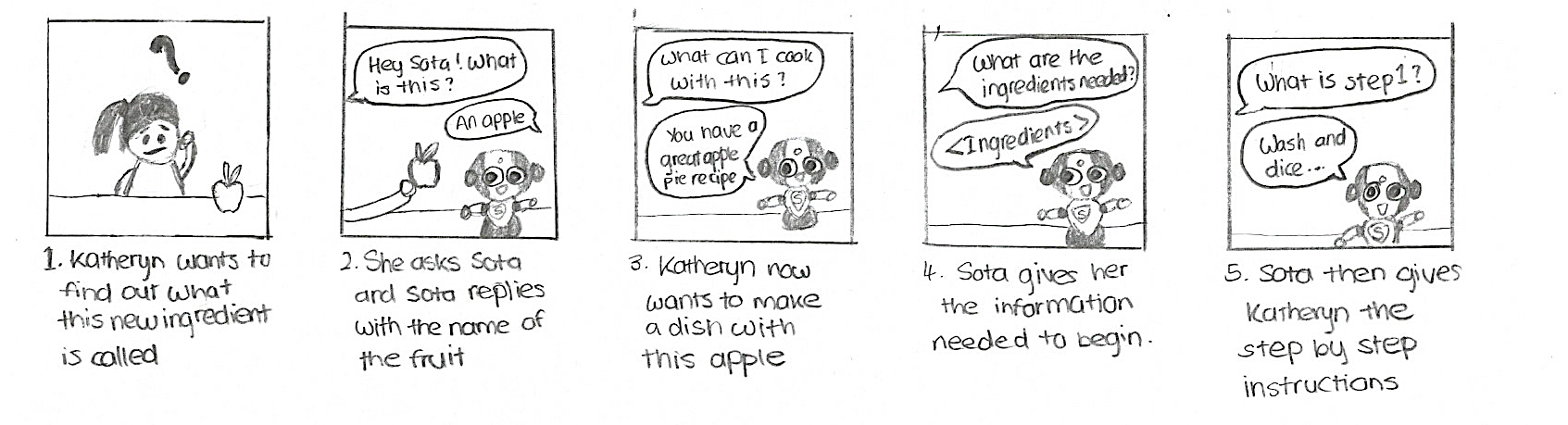

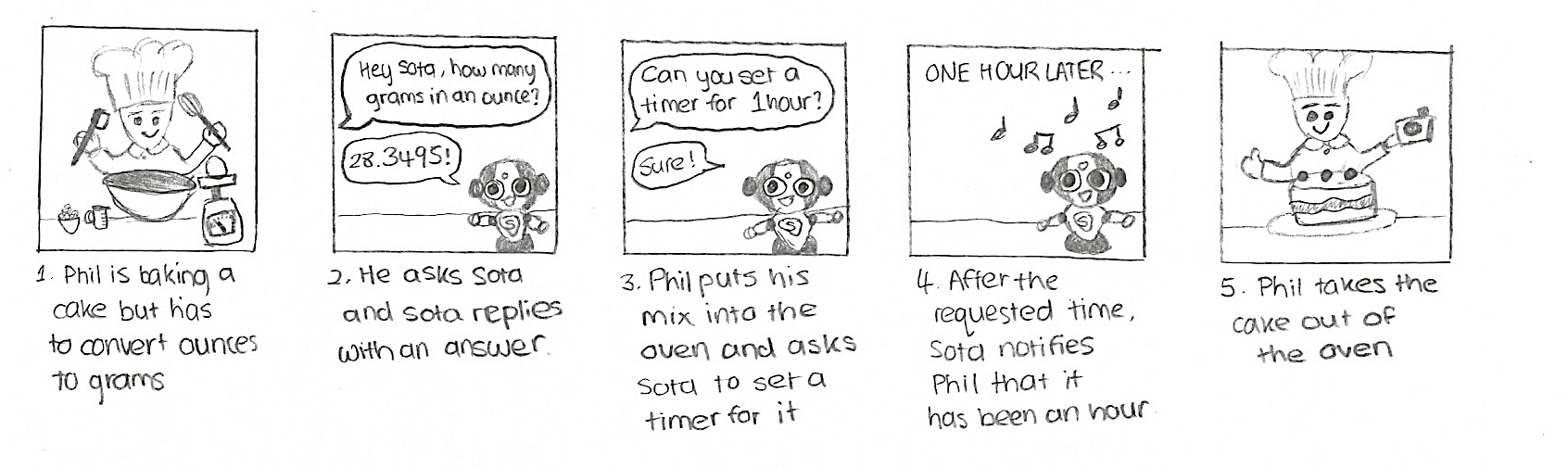

We have created several storyboards for our chosen use case, the kitchen assistant. These storyboards highlight our program's key features by illustrating several user stories and how Sota would interact with the user:

- Updating the user’s groceries list

- Identifying ingredients and accessing recipe information

- Simple, straightforward interaction and setting a timer

Architecture Diagram (Proposed)

User Interface

Most of our user interface is speech-based, since the main role of LeChefu is to be a hands-free cooking assistant. We have identified a set of activation words for each of the main features and included them in the user manual.
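The following is a minimal sketch of how activation words could route a transcribed query to a feature. The phrases are hypothetical placeholders; the actual activation words are listed in the user manual. The sketch also assumes that speech has already been converted to text.

```python
from typing import Dict, Optional, Tuple

# Hypothetical activation phrases; the real ones are listed in the LeChefu user manual.
# Dicts keep insertion order, so features are checked in the order written here.
ACTIVATION_WORDS: Dict[str, Tuple[str, ...]] = {
    "food_list": ("update my food list", "food list"),
    "timer": ("set a timer", "timer"),
    "convert": ("convert",),
    "identify": ("what is this", "identify"),
}


def match_feature(transcript: str) -> Optional[str]:
    """Return the first feature whose activation phrase occurs in the transcript."""
    text = transcript.lower()
    for feature, phrases in ACTIVATION_WORDS.items():
        if any(phrase in text for phrase in phrases):
            return feature
    # No activation word detected: LeChefu should ask the user to try again.
    return None
```

Returning `None` when nothing matches corresponds to the missed-activation-word case discussed under error recovery. In that case LeChefu cannot tell what the user wants and has to ask them to repeat the request.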

Human Computer Interaction

Our client has highlighted that, although Sota was not built to hold natural conversations with users, we should make user interaction as natural as possible.

Throughout development, we will use tools such as a Natural Language Processing API to give our software a better understanding of sentence structure. We will also use our User Acceptance Tests to identify factors such as the most commonly used phrases.

One potential problem we have found with relying on a speech UI is signing into Google Drive: users will most likely prefer not to say their email address and password aloud.

Research on Voice User Experience

As our system relies on voice as the only means of interaction with the user, we have looked into how we can make it more robust, accessible and user-friendly.

In a voice interaction, one participant is a human and the other is a machine, yet several basic expectations of human-to-human conversation still apply. Both sides expect the “use of a common language, the other to be cooperative and that the other will possess basic cognitive faculties like intelligence, short-term memory, and an attention span, which are artificial in the case of the machine, but are nonetheless expected” [1]. We therefore take this into account as much as possible, both when designing our user interface and when testing with users. To make our system as user-friendly, comprehensible and human-centered as possible, we looked into the significant factors in Voice User Experience that determine a system's usability.

We do not try to make our system more “conversational” by making users feel that they are talking to a human. Instead, we focus on linguistic familiarity to enhance user comfort, and on carefully designed responses to improve comprehension.

Error Recovery and Prevention

Our system should both prevent errors from occurring and help users recover when errors do occur. Three of our main problems in this area are:

- Errors in natural-language understanding due to the quality of the recording used and the amount of background noise present, especially in a setting like a kitchen.

- Failure to detect the activation word. This is a significant problem for our system, since each function is triggered by its own set of activation words. If an activation word is missed altogether, LeChefu cannot understand the request and the user has to try again.

- Lack of accuracy in picking up the user's voice due to the distance between the user and Sota. Devices like the Amazon Echo solve this issue by using seven built-in microphones to distinguish voice commands from background noise [2]. This problem is unlikely to arise in a kitchen, where space is limited, and the solutions mostly involve hardware, so we have decided not to prioritise it.

We are also trying to prevent errors by writing a user manual that is concise and simple to understand. A clear manual encourages users to use the product correctly [3].

Flexibility and Efficiency

If tasks become too inefficient, the inefficiency outweighs the convenience of using a social robot as a cooking assistant. Offering flexibility is harder in our case because there is no screen to display long and complicated information, unlike the phone screens used by Siri or Google Now.

A screen lets a system show the current activity visually, indicating when the user can talk and whether the previous query is still being processed. To provide similar visual feedback without a screen, we give our robot distinct, consistent actions and eye colors for each of three states:

- Idle / Talking: LED blue

- Processing Information: LED yellow

- Listening for command: LED green
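One way to keep these LED conventions consistent is to derive the color from a single state enum, so that no feature sets a color by hand. This is a sketch only. `set_eye_color` is a hypothetical hook standing in for whatever call actually drives Sota's eye LEDs.

```python
from enum import Enum
from typing import Callable


class RobotState(Enum):
    """The three states LeChefu signals through its eye LEDs."""
    IDLE_OR_TALKING = "blue"
    PROCESSING = "yellow"
    LISTENING = "green"


class EyeIndicator:
    """Keeps the eye LED color in sync with the robot's current state."""

    def __init__(self, set_eye_color: Callable[[str], None]) -> None:
        # set_eye_color is a hypothetical hook for the real LED call.
        self._set_eye_color = set_eye_color
        self.state = RobotState.IDLE_OR_TALKING
        self._set_eye_color(self.state.value)

    def transition(self, new_state: RobotState) -> None:
        # Only touch the LEDs when the state actually changes.
        if new_state is not self.state:
            self.state = new_state
            self._set_eye_color(new_state.value)
```

A typical query cycle moves from idle to listening, then to processing, and back to talking. Redundant transitions leave the LEDs unchanged.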

With the consistent use of these colors across the system, users grow familiar with the conventions of LeChefu more quickly, improving their User Experience with the system.

To tackle the problem of knowing when to talk, one solution we have considered is the push-to-talk method, similar to Siri's main activation method. When the robot is switched on, users push its button to start giving it queries. To make communication with Sota more seamless and rely wholly on its voice user interface, we have been trying throughout the project to devise a way for Sota to depend only on its microphone to observe the user's needs and intentions.

To improve efficiency, one method we have considered is making the robot listen continuously for an activation word. This ensures that the user can ask the robot anything at any time. An example of this in industry is Amazon's Echo, which listens continuously for its wake word, "Alexa". One notable problem with this approach is privacy. Not all users would accept a device that listens to and processes every word it hears, and some would see it as a threat to their privacy.
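If continuous listening were adopted, one way to reduce the privacy concern would be to discard every utterance that does not begin with the wake word. The sketch below illustrates that gating logic on already-transcribed text. The wake word `lechefu` is a hypothetical choice. Real devices detect the wake word in the audio itself rather than in transcripts.

```python
from typing import Iterable, Iterator

# Hypothetical wake word; per the comparison table, LeChefu currently uses a button push.
WAKE_WORD = "lechefu"


def commands_after_wake_word(
    utterances: Iterable[str], wake_word: str = WAKE_WORD
) -> Iterator[str]:
    """Yield only the command that follows the wake word in each utterance.

    Utterances that do not start with the wake word are skipped and never stored.
    """
    for utterance in utterances:
        words = utterance.strip().split()
        if words and words[0].lower().strip(",.!?") == wake_word:
            command = " ".join(words[1:])
            if command:  # a bare wake word with no request is ignored
                yield command
```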

Throughout development, we try to improve the efficiency of robot-user conversation by looking at common scenarios and taking different possibilities into account. For example, we tried to solve the following problem, which occurs with the Echo [2]:

User: "Alexa, put sugar, flour, and salt on the shopping list."

Alexa: "I’ve added sugar flour salt to your shopping list."

Alexa treats the whole phrase as a single item. This forces the user to add items to their shopping list one command at a time, which is very inconvenient. Our system supports adding several items to the grocery list at once by looking for every occurrence of the activation words "add" and "delete" throughout the query.

User: "Update my food list, add sugar, add flour, and add salt."

LeChefu: "All items have been added."

My_food_list.txt

Sugar

Flour

Salt

Saying "add" before every item is still tedious. However, it avoids having to wait for LeChefu to process one item before issuing the command for the next. This method also lets users both add and delete items in one command, making the user interface more flexible.

User: "Update my food list, add apples, delete flour, and add candy."

LeChefu: "All items have been added."

My_food_list.txt

Sugar

Salt

Apples

Candy

This method also helps prevent errors. A list of separately marked items is less likely to be misinterpreted. If one item is missed or misidentified, the items after it are less likely to be affected.
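The add/delete parsing described above could be sketched with a regular expression that treats every "add" or "delete" as the start of a new operation. This is an illustrative sketch, not the project's code. The function names are hypothetical, the food list is kept in memory rather than in My_food_list.txt, and items are assumed to be plain words separated by commas, "and", or the next activation word.

```python
import re
from typing import List, Tuple

# Each operation starts at "add" or "delete"; the item runs until a comma, a full stop,
# the word "and", the next activation word, or the end of the query.
OPERATION = re.compile(
    r"\b(add|delete)\s+([a-z0-9 ]+?)(?=,|\.|\band\b|\badd\b|\bdelete\b|$)"
)


def parse_list_update(query: str) -> List[Tuple[str, str]]:
    """Split a food-list query into (action, item) pairs, in the order spoken."""
    operations = []
    for action, item in OPERATION.findall(query.lower()):
        item = item.strip()
        if item:
            operations.append((action, item))
    return operations


def apply_operations(
    food_list: List[str], operations: List[Tuple[str, str]]
) -> List[str]:
    """Return a new list with the operations applied; unknown deletions are ignored."""
    updated = list(food_list)
    for action, item in operations:
        name = item.capitalize()
        if action == "add" and name not in updated:
            updated.append(name)
        elif action == "delete" and name in updated:
            updated.remove(name)
    return updated
```

Running both example queries in sequence reproduces the list shown above: Sugar, Salt, Apples, Candy. Each operation is extracted independently. A misrecognised item, such as "flower" instead of "flour", therefore produces only one wrong pair and leaves the operations around it intact.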

Conversational Design

To reduce the frustration our system might cause, we are looking into improving its conversational design by making it smarter and closer to human interaction. A common problem in Voice User Interface design is that the system expects exactly one turn at a time. After each answer it starts the conversation over, so every question is handled in isolation [4]. To mitigate this unnatural conversation style, we let the user make another query without prompting them for it, so users can carry on at their own pace. We also build our system to keep a recent history of what it has said. Specifically, we save the robot's previous response so that users can ask LeChefu to repeat it if they did not catch it.

User: "Convert 10 milliliters to teaspoons."

LeChefu: "2 teaspoons."

User: "Repeat that line."

LeChefu: "2 teaspoons."
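The following is a minimal sketch of the "repeat" behaviour, assuming LeChefu stores only its most recent response. The repeat phrases and the `ConversationMemory` class are hypothetical. The conversion handler assumes a 5 mL metric teaspoon, which matches the example's answer of 2 teaspoons for 10 mL. A US teaspoon is about 4.93 mL.

```python
import re
from typing import Optional

# Assumption: a metric teaspoon of 5 mL (a US teaspoon is about 4.93 mL).
ML_PER_TEASPOON = 5.0
# Hypothetical phrases that ask LeChefu to repeat itself.
REPEAT_PHRASES = {"repeat that", "repeat that line", "say that again"}
CONVERT_QUERY = re.compile(r"convert (\d+(?:\.\d+)?) milliliters to teaspoons")


class ConversationMemory:
    """Remembers LeChefu's previous response so the user can ask to hear it again."""

    def __init__(self) -> None:
        self.last_response: Optional[str] = None

    def respond(self, query: str) -> str:
        text = query.lower().strip(" .?!")
        if text in REPEAT_PHRASES:
            # Answer from memory without overwriting the stored response.
            return self.last_response or "I have not said anything yet."
        response = self._answer(text)
        self.last_response = response
        return response

    def _answer(self, text: str) -> str:
        match = CONVERT_QUERY.fullmatch(text)
        if match:
            teaspoons = float(match.group(1)) / ML_PER_TEASPOON
            unit = "teaspoon" if teaspoons == 1 else "teaspoons"
            return f"{teaspoons:g} {unit}."
        return "Sorry, I did not understand that."
```

A repeat request is answered from memory and does not replace the stored response, so the user can ask for it several times in a row.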

Limiting the Amount of Information Provided

Unlike visual content, verbal content does not let users go back to information they overlooked [5]. To minimise the need to do so, we must keep the information we provide concise and simple. Even though users can ask LeChefu to repeat a response, long, convoluted responses will be hard to follow, especially in a noisy environment. This matters most when listing out instructions. A long list of options read out by a phone operator annoys callers, and in the same way Sota should not list its options every time it is switched on. Since we have no screen to show them on, we rely on the user manual instead. The manual lists exactly which options are available and how to access them, minimising both confusion and frustration.
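Keeping responses short also suits the recipe walkthrough feature listed in the comparison table, where SOTA helps users go through a recipe step by step. The sketch below reads out one step at a time rather than the whole recipe. The class and method names are hypothetical, and each method is assumed to be triggered by a matching voice command such as "next step" or "repeat step".

```python
from typing import List


class RecipeWalker:
    """Reads a recipe one step at a time instead of listing every instruction at once."""

    def __init__(self, steps: List[str]) -> None:
        if not steps:
            raise ValueError("a recipe needs at least one step")
        self.steps = steps
        self.index = -1  # no step has been read yet

    def next_step(self) -> str:
        if self.index + 1 >= len(self.steps):
            return "That was the last step."
        self.index += 1
        return f"Step {self.index + 1}: {self.steps[self.index]}"

    def repeat_step(self) -> str:
        if self.index < 0:
            return "We haven't started yet. Say next step to begin."
        return f"Step {self.index + 1}: {self.steps[self.index]}"
```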

Intuitive and Consistent Design

While an entirely new layout may sound exciting and innovative, our goal is to make users feel at home when using LeChefu. This is essential for users to feel comfortable interacting with it. It also gives users who already know devices such as Amazon Echo and Google Home the advantage of instant familiarity. To achieve this, we have followed several conventions of well-known home assistant devices.

SOTA vs Google Home vs Amazon Echo Comparison Table

| Feature | SOTA | Google Home | Amazon Echo |
|---|---|---|---|
| Responds to voice commands | Yes | Yes | Yes |
| Always listening | No | Yes | Yes |
| Wake-up mechanism | Button push | Wake word: "Hey Google" | Wake word: "Alexa" |
| Customizable appearance | No | No | Yes |
| Assistant highlights | Search for recipes by ingredient and have SOTA walk you through them step by step. Add groceries to a shopping list. Set timers. Use SOTA to identify ingredients you are unsure about. | Get a personalized daily briefing. Acts more like a personal assistant. Check traffic, check calendar, check flight status, track a package. | Add items to calendar, create shopping and to-do lists, set timers, check flight status, track a package. |

Key Screenshots of Final Delivery

References

[1] Cohen, M.H., Giangola, J.P. and Balogh, J. (2004) Voice User Interface Design. Addison-Wesley.

[2] Whitenton, K. (2016) The most important design principles of voice UX. Available at: https://www.fastcodesign.com/3056701/the-most-important-design-principles-of-voice-ux (Accessed: 1 March 2017).

[3] Benefit from a good user manual (no date) Available at: http://technicalwriting.eu/benefit-from-a-good-user-manual/ (Accessed: 1 March 2017).

[4] Pearl, C. (2016) Basic principles for designing voice user interfaces. Available at: https://www.oreilly.com/ideas/basic-principles-for-designing-voice-user-interfaces (Accessed: 4 March 2017).

[5] Mortensen, D. (2016) How to Design Voice User Interfaces. Available at: https://www.interaction-design.org/literature/article/how-to-design-voice-user-interfaces