We have summarised our research for Sota on this page. As our project focusses on creating new ideas and use cases for the Sota robot, most of our research is conducted in the process of evaluating ideas and identifying user needs.

Infrastructure

To implement our Cooking Assistant idea, we require three different types of APIs for the following functions:

- Speech recognition

- Image recognition

- Recipes

As Sota runs on Java, we decided to develop in Java using the Eclipse IDE, matching the IDE used at NTT Data.

In this section, we look at the research we conducted into several APIs for each area and our justification for choosing these APIs.

Speech API

Google Cloud Speech API [source]

- convert audio to text using neural network models

- easy to use

- recognizes over 80 languages and variants

- enable command-and-control through voice

- accurate in noisy environments

Microsoft Cognitive Services Bing Speech API [source]

- Various add-ons, such as voice authentication

- Can be directed to turn on and recognize audio coming from the microphone in real-time

API.AI [source]

API.AI provides Natural Language Understanding tools and can be integrated with external apps, with Alexa and Cortana, and with messaging platforms.

- Useful to build command applications.

- Supports 14 languages

Conclusion - For the Speech API we decided to use Google Cloud Speech API. Although Microsoft offers several add-ons, such as voice authentication, we feel that these are not core requirements for our project. Google Cloud Speech API offers the widest variety of languages, which would benefit our client if they decide to expand the market for this product in the future. It is described as being “accurate in noisy environments”, which is important in a kitchen. Furthermore, reports suggest that Google Cloud Speech API is the best speech API on the market.

Image Recognition API

Google Cloud Vision API

This API includes “image labeling, face and landmark detection, optical character recognition (OCR), and tagging of explicit content.” [source]

Clarifai [source]

- Has a dedicated “Food Recognition Model”

- “Create a custom model that fits perfectly with your unique use case.”

- Automatically tags images and videos to allow quick search through content

Conclusion - For the image recognition API we chose Clarifai over the Google Cloud Vision API, since Clarifai has a dedicated “Food Recognition Model”, which is the most important image recognition feature that we need. Moreover, Clarifai helps to determine how healthy food is based on a photo, which would make Sota even more helpful to users. Although the Google Cloud Vision API offers many different image recognition abilities, most are not needed for our program. Clarifai’s ability to automatically tag images also makes scanning Sota’s environment quicker and more accurate.

Recipe API

Spoonacular API

- Searching for Recipes

  - find recipes to use ingredients you already have (“what’s in your fridge” search) ✓

  - find recipes based on nutritional requirements ✓

  - semantically search recipes ✓

  - find similar recipes ✓

- Extract/Compute

  - extract recipes from any website ✓

  - classify a recipe cuisine ✓

  - convert ingredient amounts ✓

  - compute an entire meal plan ✓

  - map ingredients to products ✓

- Get Information

  - get the price of a recipe ✓

  - get nutritional information for ingredients ✓

  - get in-depth recipe and product information ✓

Yummly API

Yummly API uses the world’s largest and most powerful recipe search site. It includes:

- Weight Loss/Tracking

- Allergy

- Shopping List

- Cooking and meal planning

- Food Brands

- Social Apps

Conclusion - For the Recipe API we decided to use Spoonacular API. This API offers numerous features, as listed above, and is considered one of the best recipe APIs. Yummly API gathers its data from a single site, while Spoonacular uses multiple sites, which enables a more extensive search and returns a more diverse collection of recipes.
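To illustrate the “what’s in your fridge” search, the sketch below builds (but does not send) a request URL for a search by ingredients. The endpoint path and the parameter names `ingredients`, `number` and `apiKey` are assumptions based on Spoonacular's public documentation and should be checked against the current version; `RecipeQuery` and `buildUrl` are hypothetical names of our own.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical helper: builds a "search by ingredients" URL without sending it.
class RecipeQuery {
    // Assumed endpoint; verify against the current Spoonacular documentation.
    static final String ENDPOINT = "https://api.spoonacular.com/recipes/findByIngredients";

    static String buildUrl(List<String> ingredients, int maxResults, String apiKey) {
        // Encode each ingredient on its own so the separating commas stay literal.
        String joined = ingredients.stream()
                .map(i -> URLEncoder.encode(i, StandardCharsets.UTF_8))
                .collect(Collectors.joining(","));
        return ENDPOINT
                + "?ingredients=" + joined
                + "&number=" + maxResults
                + "&apiKey=" + URLEncoder.encode(apiKey, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // YOUR_API_KEY is a placeholder, not a real key.
        System.out.println(buildUrl(List.of("sweet corn", "flour"), 5, "YOUR_API_KEY"));
    }
}
```

Keeping URL construction separate from the HTTP call makes the query logic easy to unit test without network access.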

Natural Language Processing (NLP)

Problem: In order to parse strings accurately and allow more natural conversations between Sota and the user, we needed to analyse the sentence to enable the program to understand the request.

For example, when parsing a request for ingredients to use in an API query, some ingredients consist of two words. A parser that keeps only the nouns loses part of the ingredient name:

“Find a recipe using sweet corn and flour” -> “corn” and “flour”

This is not ideal, as adjectives can be significant to the description of an ingredient.

Solution: We used an NLP API to recognise the parts of speech that each word belongs to and use these tags in the logic of our functions.

For example, in the previous case, we used NLP to recognise parts of speech and avoid dropping adjectives that come right before nouns in the output.

“Find a recipe using sweet corn and flour” -> “sweet corn” and “flour”
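The rule above can be sketched as follows. This is a minimal illustration rather than our production code: it assumes the sentence has already been tokenised and tagged with Penn Treebank style tags (`JJ` for adjectives, `NN` for nouns), which is the kind of output a part-of-speech tagger produces. The tags are hard-coded here, and the class name, method name and the trigger word “using” are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: keeps adjectives that directly precede a noun.
class IngredientExtractor {

    // tokens[i] carries the part-of-speech tag tags[i].
    // Only words after the trigger word (e.g. "using") are considered.
    static List<String> extract(String[] tokens, String[] tags, String trigger) {
        List<String> ingredients = new ArrayList<>();
        StringBuilder phrase = new StringBuilder();
        boolean afterTrigger = false;

        for (int i = 0; i < tokens.length; i++) {
            if (!afterTrigger) {
                afterTrigger = tokens[i].equalsIgnoreCase(trigger);
                continue;
            }
            if (tags[i].startsWith("JJ")) {
                append(phrase, tokens[i]);          // hold the adjective for the next noun
            } else if (tags[i].startsWith("NN")) {
                append(phrase, tokens[i]);          // the noun completes the ingredient
                ingredients.add(phrase.toString());
                phrase.setLength(0);
            } else {
                phrase.setLength(0);                // adjective without a noun: discard it
            }
        }
        return ingredients;
    }

    private static void append(StringBuilder phrase, String word) {
        if (phrase.length() > 0) {
            phrase.append(' ');
        }
        phrase.append(word);
    }

    public static void main(String[] args) {
        String[] tokens = {"Find", "a", "recipe", "using", "sweet", "corn", "and", "flour"};
        String[] tags   = {"VB", "DT", "NN", "VBG", "JJ", "NN", "CC", "NN"};
        System.out.println(extract(tokens, tags, "using")); // prints [sweet corn, flour]
    }
}
```

This simple version treats every noun as a separate ingredient, so a compound such as “olive oil” (two nouns) would need an additional rule.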

While exploring the idea of using NLP, we looked at several NLP APIs.

Apache Open NLP [source]

Open NLP is an Apache-licensed machine learning based toolkit for “the processing of natural language text”. It supports part-of-speech tagging, which is the NLP feature that we require. As it is open source, we are able to use it with no additional costs to the project.

Three Pillars

As our client has specified the Three Pillars that their company focuses on, we have researched these pillars and how they are used in products similar to the NTT Sota.

Internet of Things

Definition

Sensors and actuators embedded in physical objects—from roadways to pacemakers—are linked through wired and wireless networks, often using the same Internet Protocol (IP) that connects the Internet [source].

Applications

- Information & Analysis - use the information gathered from sensors in IoT devices to find patterns and enhance the decision-making process

- Tracking behavior (e.g. ZipCar - renting out cars on the spot)

- Enhanced situational awareness (e.g. video, audio & vibration in security to detect unauthorized access)

- Sensor-driven decision analytics (e.g. monitor patterns of people shopping in retail)

- Automation & Control - converting the data and analysis from IoT devices to feed back the instructions into actuators controlling the systems. (closed-loop systems, e.g. autonomous robots)

- Process optimization (e.g. separating cucumbers on a production line) [source]

- Optimized resource consumption (e.g. smart electricity meters in households)

- Complex autonomous systems (e.g. automatic braking systems in high-end cars when the situation is dangerous)

- Fragmentation - differences between software & hardware, lack of technical [source]; possible issues with proprietary IoT devices, since their protocols might become obsolete.

- Security - most IoT devices are exposed to the Internet without any protection (e.g. firewalling, subnet restriction), allowing outside users to access and potentially hack or abuse them.
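The closed-loop idea described under Automation & Control (sensor reading in, actuator command out) can be sketched with a simple heating example. This is an illustrative assumption of ours and not taken from any of the products mentioned; the class name, the method name and the use of a tolerance band are all hypothetical.

```java
// Hypothetical closed-loop sketch: a temperature reading decides the heater state.
class ClosedLoopDemo {

    // Returns the next heater state. Inside the tolerance band the current
    // state is kept, so the heater does not switch on and off rapidly.
    static boolean nextHeaterState(double readingCelsius, double targetCelsius,
                                   double band, boolean heaterOn) {
        if (readingCelsius < targetCelsius - band) {
            return true;   // too cold: switch the heater on
        }
        if (readingCelsius > targetCelsius + band) {
            return false;  // too warm: switch the heater off
        }
        return heaterOn;   // within the band: leave the actuator as it is
    }

    public static void main(String[] args) {
        double[] readings = {18.0, 20.8, 21.2, 22.0, 21.0}; // simulated sensor data
        boolean heaterOn = false;
        for (double reading : readings) {
            heaterOn = nextHeaterState(reading, 21.0, 0.5, heaterOn);
            System.out.println(reading + " C -> heater " + (heaterOn ? "on" : "off"));
        }
    }
}
```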

Agent AI

Definition

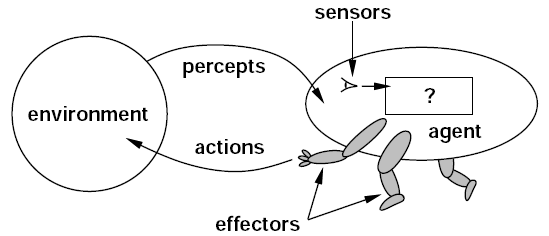

- An AI Agent acts in the environment of an AI system, which may contain other Agents. It perceives its environment through its sensors and reacts to the environment through effectors. A robotic agent, like Sota, uses cameras and infrared range finders for its sensors with motors and actuators as its effectors. [source]

- A rational agent is an agent that does the right thing. To determine how successful an agent is, we use an objective performance measure. An agent’s rationality depends on its percept sequence and the actions that it is able to perform. An agent’s behavior can be based on both its experience and its built-in knowledge. [source]

Diagram 1 - A visualisation of how an Agent interacts with its environment [link]
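The perceive and react cycle described above can be sketched as a small interface. This is a minimal illustration under our own assumptions: the `Percept` fields, the `GreeterAgent` class and the 1.5 metre range are hypothetical and do not come from the Sota SDK.

```java
// Hypothetical sketch of an agent that maps a percept (sensor input) to an action.
class ReflexAgentDemo {

    enum Action { GREET_USER, WAIT }

    // One snapshot of what the sensors report.
    static final class Percept {
        final double distanceMetres;  // e.g. from an infrared range finder
        final boolean faceDetected;   // e.g. from the camera

        Percept(double distanceMetres, boolean faceDetected) {
            this.distanceMetres = distanceMetres;
            this.faceDetected = faceDetected;
        }
    }

    // The agent turns what it perceives into an action for its effectors.
    interface Agent {
        Action act(Percept percept);
    }

    // Simple reflex agent: greets only when a face is seen within range.
    static final class GreeterAgent implements Agent {
        private final double greetingRangeMetres;

        GreeterAgent(double greetingRangeMetres) {
            this.greetingRangeMetres = greetingRangeMetres;
        }

        @Override
        public Action act(Percept percept) {
            boolean closeEnough = percept.distanceMetres <= greetingRangeMetres;
            return (percept.faceDetected && closeEnough) ? Action.GREET_USER : Action.WAIT;
        }
    }

    public static void main(String[] args) {
        Agent agent = new GreeterAgent(1.5);
        System.out.println(agent.act(new Percept(0.8, true)));  // prints GREET_USER
        System.out.println(agent.act(new Percept(3.0, true)));  // prints WAIT
    }
}
```

A simple reflex agent like this reacts only to the current percept. An agent that uses its percept sequence, as described above, would also keep a history of earlier percepts.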

Applications

- Home assistants - Amazon’s Echo and Google’s Google Home are examples of devices that use their sensors to become more aware of their surroundings. When a user has multiple Echo devices, Echo uses its spatial perception to decide which device is closest to the user and, therefore, should respond.

- Air Traffic Control - the agents monitor and interact with the dynamic situation of flights around an airport tower. They detect and process possible collisions and landing requests to assist the human air traffic controller. These agents assume greater autonomy when the human operators do not take action. The agent decides when the situation has become critical and it has to take action. [source]

- Data Mining - Multi-Agent systems are used in Distributed Data Mining to reduce complexity needed in training the data while ensuring high quality results. Distributed Data Mining provides better scalability, possible increase in security and better response time, compared to a centralised model. [source]

- For our use cases, the robot would work in a continuous, dynamic and nondeterministic environment. [source]

- Continuous environment - as Sota is going to interact with users rather than with software or data alone, states of the environment are not distinct or clearly defined. Therefore, our solution has to allow Sota to continuously analyse its environment for changes.

- Dynamic Environment - While Sota is carrying out a task, the environment may still change. This means that Sota needs to decide when to switch from one task to another when necessary.

- Nondeterministic Environment - The next state of the environment is not completely determined by the actions of the agent in the current state. Therefore, the agent considers the outcome of the decision within this state instead of the state that this decision can lead to.

Voice UI

Definition

A voice-user interface (VUI) makes human interaction with computers possible through a voice/speech platform in order to initiate an automated service or process. The elements of a VUI include prompts, grammars, and dialog logic (also referred to as call flow). The prompts, or system messages, are all the recordings or synthesized speech played to the user during the dialog.
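The three elements can be illustrated with a very small dialog for setting a cooking timer. This is a sketch under our own assumptions rather than part of any VUI framework: the states, prompts and accepted phrases are hypothetical, and the user's speech is assumed to have already been converted to text.

```java
import java.util.Locale;

// Hypothetical sketch of a timer dialog: prompts, a grammar and dialog logic.
class TimerDialog {

    enum State { ASK_DURATION, CONFIRM, DONE }

    // Prompts: what the system says to the user in each state.
    static String prompt(State state) {
        switch (state) {
            case ASK_DURATION: return "How long should I set the timer for?";
            case CONFIRM:      return "Shall I start the timer?";
            default:           return "Timer started.";
        }
    }

    // Grammar and dialog logic: which utterances are accepted in each state
    // and which state follows. Unrecognised input repeats the current state.
    static State next(State state, String utterance) {
        String text = utterance.trim().toLowerCase(Locale.ROOT);
        switch (state) {
            case ASK_DURATION:
                return text.matches("\\d+ minutes?") ? State.CONFIRM : State.ASK_DURATION;
            case CONFIRM:
                if (text.equals("yes")) return State.DONE;
                if (text.equals("no")) return State.ASK_DURATION;
                return State.CONFIRM;
            default:
                return State.DONE;
        }
    }

    public static void main(String[] args) {
        State state = State.ASK_DURATION;
        for (String utterance : new String[] {"soon", "10 minutes", "Yes"}) {
            System.out.println("Sota: " + prompt(state));
            System.out.println("User: " + utterance);
            state = next(state, utterance);
        }
        System.out.println("Sota: " + prompt(state));
    }
}
```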

Applications

- Perform searches with your voice - Siri, Cortana and OK Google are examples of voice-activated technologies. These voice-activated technologies keep getting better and easier to use. They need less “manual training” than earlier generations of speech recognition apps. They seem to learn how to understand your voice and what you’re asking for intelligently and on the fly.

- Control software apps - You can use voice commands to control various apps on your devices. The command list of things you can do is long and getting longer all the time.

- Translate spoken words into other languages - An example is Skype translator. It will translate voices in Skype conversations in near real time - and give you an on-screen transcript, too.

- Proofread Documents - Voice technology is not limited to commanding a device with your voice. In recent years great strides have been made in text-to-speech conversion. In other words, some programs can transform text into the spoken word. This is great if you need to proofread a document. It’s the best way to catch missing words, grammar issues and other errors in writing.

Advantages

- The user does not need to be trained in how to use the interface.

- More flexible than a dialogue interface.

- Suitable for physically handicapped people.

- Increase customer satisfaction.

Disadvantages

- Reliability remains an issue - the interface can only respond to commands that have been programmed

- Highly complex to program and so only warrants this kind of interface where other types of interface are unsuitable.

- Not widely available as other forms of interface are often superior.

- A voice interface might need training in order to get the software to recognise what the user is saying.

Competitors

The following are a few of the competitors that we have identified for the Sota robot and, specifically, our use cases.

- Samsung Fridge Hub - This fridge updates a grocery shopping list and shares it with Wi-Fi-connected devices, suggests recipes based on available ingredients, and has a built-in electronic timetable and a post-it notes board.

- Jibo Robot - (still in production) updates a grocery shopping list and shares it with Wi-Fi-connected devices, informs the user of text messages, and helps with converting measurements when needed.

Conclusion and Decisions

Following evaluation of our research and using the Decision Criteria detailed in the Requirements section, we have decided, as a team, on one use case and have chosen JUnit as our main framework for unit testing. We have decided to prioritise unit testing and user acceptance testing, as we feel these tests would add the most value in identifying flaws in our project and improving it. Furthermore, we have used our research into external APIs to narrow our list of APIs down to Google Cloud Speech API, Clarifai and Spoonacular for implementing the voice user interface, image recognition and connection to recipes respectively.

References

[1] Whitenton, K. (2016) The most important design principles of voice UX. Available at: https://www.fastcodesign.com/3056701/the-most-important-design-principles-of-voice-ux (Accessed: 1 March 2017).

[2] Benefit from a good user manual (no date) Available at: http://technicalwriting.eu/benefit-from-a-good-user-manual/ (Accessed: 1 March 2017).

[3] Pearl, C. (2016) Cathy Pearl. Available at: https://www.oreilly.com/ideas/basic-principles-for-designing-voice-user-interfaces (Accessed: 4 March 2017).