System

Evaluation

Bug List

Given the size of our project, we could not rely on peer review to uncover issues in another group member's code, as each of us had too much work of our own. Instead, we aimed to push code to the repository only once it had been thoroughly tested, both manually and automatically.

When we began to integrate our project, we used the GitHub issue tracker to keep track of bugs and fixing progress.

Now that the project is complete, we can say there are no known bugs in our system, although some may arise in the technologies we depend on, which are outside our control.

Below is a screenshot of the GitHub issues tab, taken on Friday 26th March 2020 @ 12:10pm.

Admin Web App

The admin web app does not have any known bugs, but it may show slight latency because our cloud database is hosted in Dallas (the London-based IBM DB2 servers were completely down during development).

Android App

The app itself does not have any bugs per se: all functionality works correctly. However, there were a few bugs in the technology we used to develop the app which are worth discussing.

1. Android Studio Bugs

There were a few bugs with Android Studio:

- Running all the unit tests together caused them all to fail, whereas running them individually worked.

- The instrumentation tests failed for no apparent reason when ANDROID_TEST_ORCHESTRATOR was used, as described in the Testing section.

- When running a Gradle build and installing it on a device from Android Studio, error messages were sometimes displayed, again for no apparent reason. Building and installing the app again often solved the issue, but sometimes the entire cache had to be cleared via File > Invalidate Caches/Restart. In some cases the errors stopped the build or installation, and in others they did not. We believe this was purely an Android Studio issue rather than an issue with our code, as shown by the fact that simply rebuilding the project or clearing the cache resolved it.

2. Fetching user's location (latitude and longitude) using Google's PlayServicesLocation

For some reason, this only seems to work if location data for the user already exists (for example, if they have already used Google Maps). Manually requesting the user's location from Android also did not seem to work. We cannot ascertain the user's location ourselves, as this would be a project in its own right (a dedicated program would be needed).
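The behaviour described above is consistent with how a last-known-location lookup generally works: it returns a cached fix and yields nothing when no app has requested the location recently. One common way to handle this is to fall back to an explicit request for a fresh fix. The sketch below shows only that fallback logic in plain Java. `LocationFallback`, `LatLng` and the two suppliers are hypothetical names for illustration, not the project's code and not part of the Play Services API. In an Android app the suppliers would presumably wrap `FusedLocationProviderClient.getLastLocation()` and a fresh request such as `getCurrentLocation()`; that mapping is an assumption.

```java
import java.util.Optional;
import java.util.function.Supplier;

// Hypothetical sketch: prefer a cached location, otherwise ask for a fresh one.
final class LocationFallback {

    /** Simple latitude/longitude pair (illustrative type, not an Android class). */
    static final class LatLng {
        final double latitude;
        final double longitude;

        LatLng(double latitude, double longitude) {
            this.latitude = latitude;
            this.longitude = longitude;
        }
    }

    /**
     * Returns the cached location if one exists; otherwise calls the
     * fresh-fix supplier. The fresh-fix supplier is only invoked when
     * the cache is empty.
     */
    static Optional<LatLng> resolve(Supplier<Optional<LatLng>> lastKnown,
                                    Supplier<Optional<LatLng>> freshFix) {
        Optional<LatLng> cached = lastKnown.get();
        if (cached.isPresent()) {
            return cached;
        }
        return freshFix.get();
    }

    public static void main(String[] args) {
        // Simulate a device with no cached location: the fresh fix is used.
        Optional<LatLng> result = resolve(
                () -> Optional.empty(),
                () -> Optional.of(new LatLng(51.5, -0.13)));
        result.ifPresent(p ->
                System.out.println("Resolved " + p.latitude + ", " + p.longitude));
    }
}
```

If neither source yields a location, `resolve` returns an empty `Optional`, so the caller still needs a path for the case where no location is available.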

3. Alarm Timings

Depending on the Android API level, an alarm set by the user may not go off at the exact time they chose. As we use built-in Android APIs to schedule notifications, there is little we can do when this happens: we are limited to the capabilities of the Android version on the user's device. If the API level is above 19, the alarm should go off at the exact time.
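The dependence on API level can be made explicit in code. The sketch below is a plain-Java illustration, not the project's implementation: `ExactAlarmChooser` and `chooseAlarmCall` are hypothetical names, and the method simply returns the name of the `AlarmManager` call that is available for a one-shot alarm at a given `Build.VERSION.SDK_INT`. It relies on `setExact()` having been added at API 19 and `setExactAndAllowWhileIdle()` at API 23; below API 19 only `set()` is available.

```java
// Hypothetical helper: picks which AlarmManager method to call for a
// one-shot alarm, given the device's API level (Build.VERSION.SDK_INT).
// Only the returned method names are real Android APIs.
final class ExactAlarmChooser {
    static final int KITKAT = 19;      // setExact() was added at API 19
    static final int MARSHMALLOW = 23; // setExactAndAllowWhileIdle() was added at API 23

    static String chooseAlarmCall(int sdkInt) {
        if (sdkInt >= MARSHMALLOW) {
            // Also fires while the device is in Doze mode.
            return "setExactAndAllowWhileIdle";
        }
        if (sdkInt >= KITKAT) {
            return "setExact";
        }
        // Older devices only offer set() for one-shot alarms.
        return "set";
    }

    public static void main(String[] args) {
        for (int sdk : new int[] {16, 19, 23, 30}) {
            System.out.println("API " + sdk + " -> AlarmManager."
                    + chooseAlarmCall(sdk) + "()");
        }
    }
}
```

In an Android app the returned name would be replaced by the actual call on an `AlarmManager` instance, guarded by the same `SDK_INT` checks.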

Achievements

MoSCoW List Status

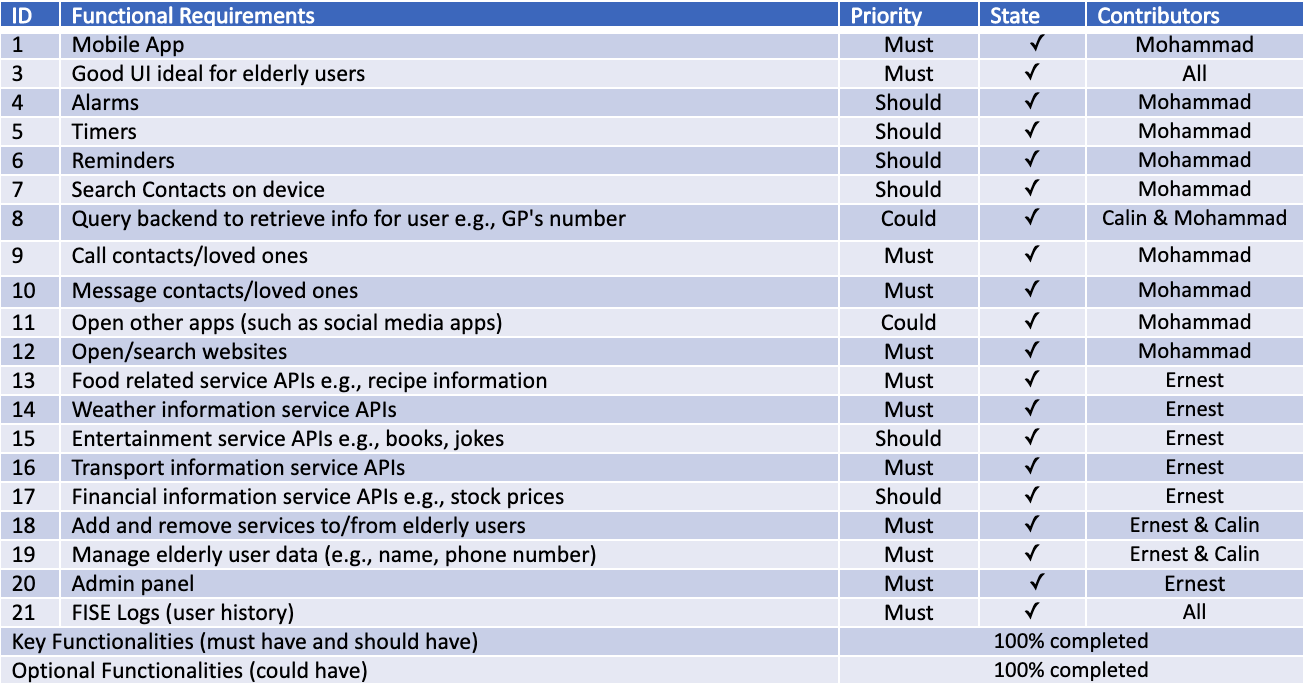

Functional Requirements

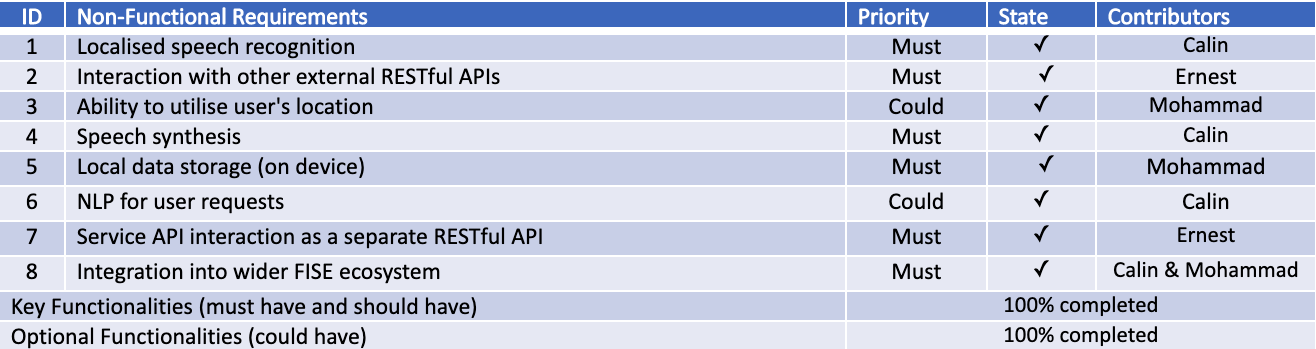

Non-Functional Requirements

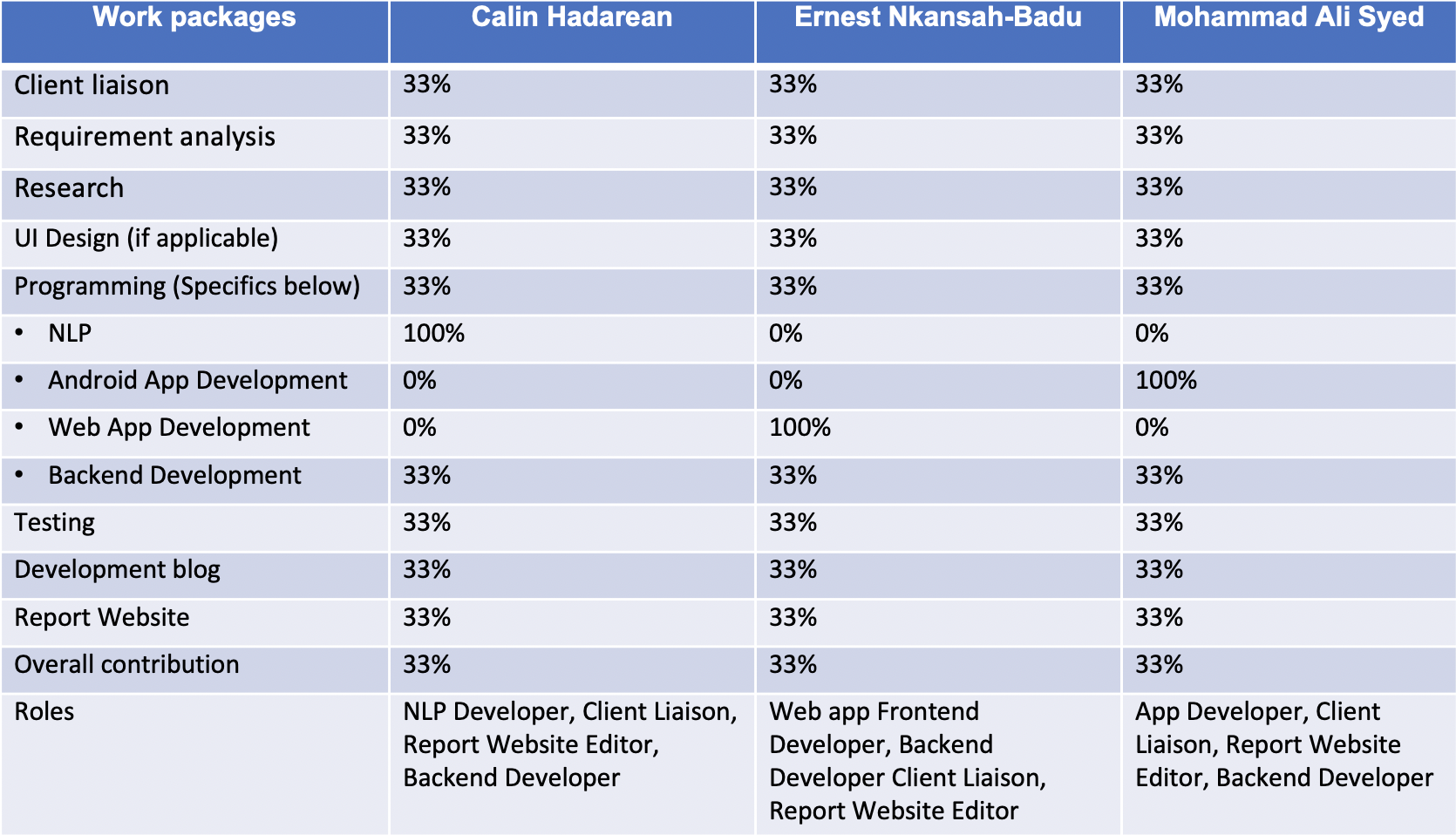

Individual Contributions

Critical Evaluation

As part of our evaluation we considered and investigated different aspects of the system and reviewed them individually.

User Interface and Experience

Throughout development, our UI and user experience were an integral part of our system. As such, we went through multiple design iterations. With each iteration we received feedback from our clients and users to further refine our design. Additionally, we strove to make our UIs as intuitive and easy to use as possible. Where there might be uncertainty (e.g. which voice commands are available for the voice assistant), we provided instructions to help users.

Overall, we would rate our UI and user experience as Good.

Functionality

The system we produced achieved 100% of both our functional and non-functional MoSCoW requirements. All of these requirements were also tested to confirm that they work as intended. Additionally, our clients were very pleased with the quality and scope of our system.

Overall, we were very satisfied with our functionality and would rate it as Excellent.

Stability

Our team performed extensive testing on both our Android app and our web app, achieving a high percentage of code coverage for each. We also tested our subsystems, in particular the AskBob Concierge API, which we were able to test extensively despite the challenges involved.

As such, in terms of stability we would rate our system as Excellent.

Efficiency

We performed performance testing on both the web app and the Android app. The Android app used minimal computational resources, rarely exceeding 128MB of RAM. This was very important to both us and our clients, as it means that older or less powerful devices are still able to run the app.

Our web app received Google Lighthouse scores of 91 and 69 for desktop and mobile devices respectively. This showed that our web app was able to function well on both types of device.

Therefore, in terms of efficiency we would rate our system as Good.

Compatibility

One of our goals throughout development was to make our system accessible on as many devices as possible. As we developed for Android API level 16, our app is compatible with 98% of all Android devices. Additionally, we tested our application on both mobile phones and tablets.

Our admin website works on most modern browsers, the only exception being Safari, as supporting it would require us to acquire a digital certificate for our web application.

Our backend, which supports both the Android app and the web app, is also packaged with Docker. This makes it easy to deploy and compatible with any machine that can run Docker.

In summary, we would rate our compatibility as Good.

Maintainability

Our system has been explicitly designed to be extensible. Future developers building upon our existing system should therefore be able to do so with relative ease, as long as they use our interface. In addition, our codebase is well documented, with each key subsystem described in enough detail for developers to understand how it works.

Therefore, in terms of maintainability we would rate our work as Good.

Project Management

As a team of three, we worked together very fluidly. We used Microsoft Teams and WhatsApp as our main methods of communication and file transfer. Additionally, we used Trello and GitHub Issues where applicable to plan our next steps throughout development. Every team member attended all client and team meetings and made their fair share of contributions to the project as a whole.

Overall, we would rate our project management as Excellent.

Future Work

In the future, our system could be extended in the following key areas:

- Extend NLP training data

  Extending the training set for our plugins would let us achieve a higher degree of accuracy in recognising a user's intent and extracting the relevant information. This could further increase user satisfaction.

- Add new services

  This would be a natural expansion point for our system, as additional services would give users more ways to interact with it.

- Extend app pages

  Alongside our voice assistant, our app contains a variety of pages, some of which provide utility features such as timers and alarms. Our app is designed so that future developers can add further utility pages if they choose to do so.

- Further integration with the FISE ecosystem

  At the moment, our system is partially integrated into the FISE ecosystem through our use of the AskBob NLP framework. In the future, our app could be integrated more fully into the ecosystem. For example, the Concierge app could be integrated into Team 38's Video Conferencing Web App.

- More natural speech synthesis

  Currently, our speech synthesis can sometimes be challenging to understand because it sounds robotic. One area for potential improvement would therefore be to adopt a more advanced speech synthesiser that sounds more natural.