System

Testing

Here we discuss how we tested the different parts of our system to ensure everything worked as it should.

Testing Strategy

Given the scale of our project, tests were critical to ensuring the system worked correctly: the codebase was simply too large for us to have complete assurance without them.

For each main component (described in the sections below) we used a subset of the following types of tests:

Fully-Automated

- Unit Testing

- Integration Testing

- Performance Testing

- Stress Testing

- Instrumented Testing

Semi-Automated

- Responsive Design Testing

- Compatibility Testing

- User Acceptance Testing

- Resource Usage Testing

Note that the backend was tested solely through integration testing with the Android app and the admin web app.

Admin Web App

Several different testing mechanisms were used to provide assurance that the web app functioned correctly and provided a consistent user experience across different browsers and devices.

Technology Used

Testing a website can seem unintuitive at times, but it allowed us to uncover issues with the web app that were not picked up during the initial implementation phase.

We used the popular testing framework Jest to test the web app. It allows us to mock components and to test individual components in isolation without building the entire application; we can therefore test specific pages and components without having to start at the homepage and sign in (or sign up) at the beginning of every test.

Human interaction (e.g. button clicks and typing) can be simulated accurately in Jest tests, making it a very versatile testing technology.

Unit Testing

Unit testing allows us to focus on and test components in isolation. Individual components (such as buttons, dropdowns and text inputs) were unit tested to ensure they were usable across many different contexts and provided the necessary functionality. These components were tested first to confirm that the foundation of the web app was in working order.

The components pass all the unit tests; their results are included in the coverage report for the integration tests in the section below.

Integration Testing

Integration testing was used to test multiple components together with the backend.

Given the nature of the web app, a sizeable portion of the system could not be tested in isolation. Integration testing was used with the backend to ensure that the web app communicated correctly with the database and to provide assurance that the backend API and its endpoints (and their enclosing logic) were all in working order. A single test suite can therefore assure us that both the web app and the backend (more specifically, the portion of the backend serving the web app) are functioning correctly.

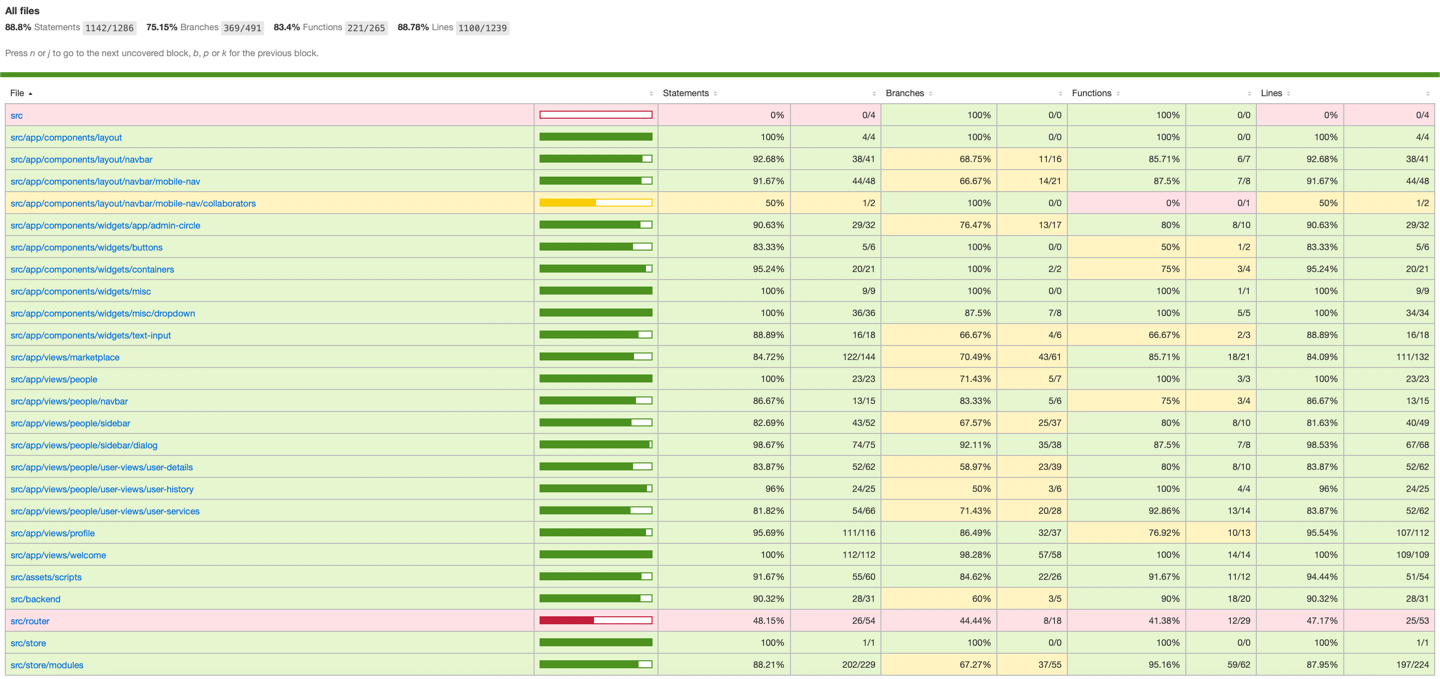

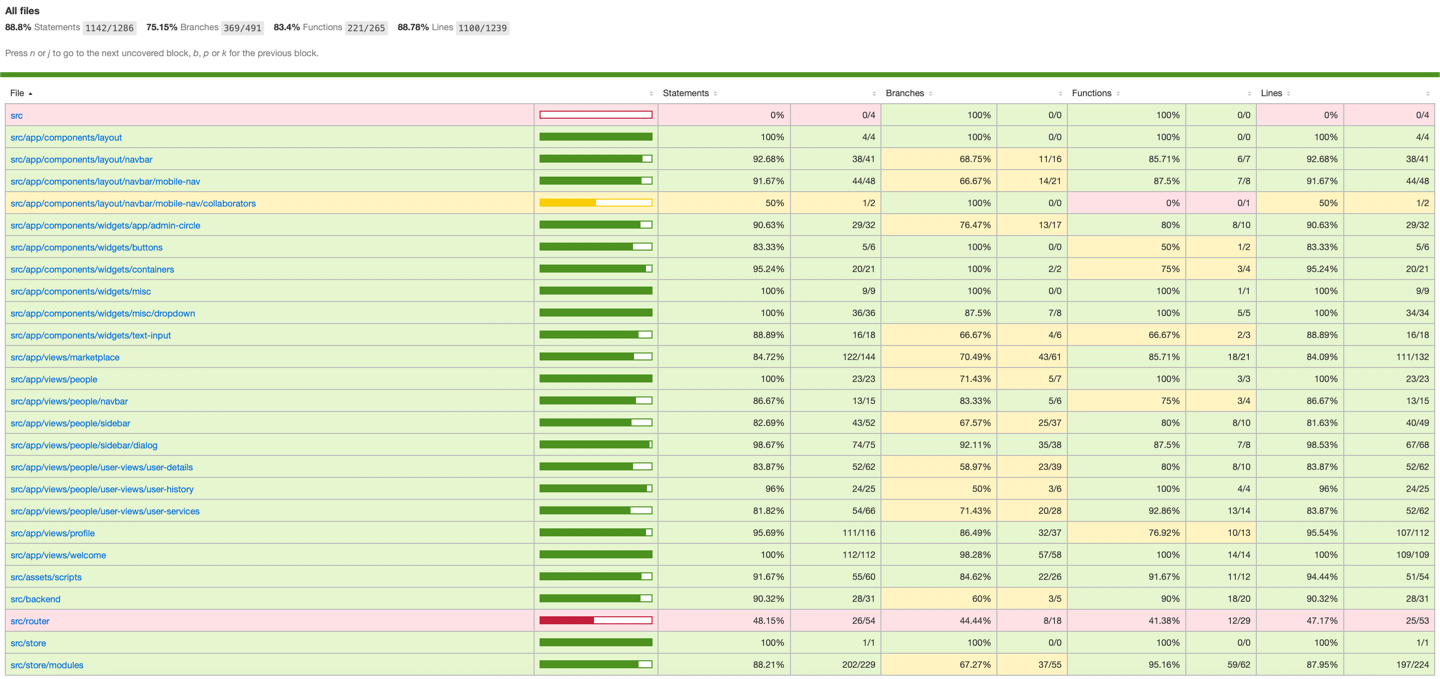

We can report that all integration tests passed, and the coverage report is shown below:

It is important to note that some components could not be tested; some examples are given below:

- Some Vue components exist to reduce repetitive typing (e.g. images). Jest does not allow the local filesystem to be used in tests, so these components would throw an error if included in tests.

- Some code branches exist to assist with responsive design, e.g. the grid shown for services in the marketplace is implemented using several branches that monitor the window width. These branches cannot be tested because of the limitations of the virtual DOM used by Jest, outlined below.

The virtual DOM used by Jest does not implement the following:

- Programmatic scrolling.

- Navigation between pages (we can only test whether the method to change the page was called, but not if it navigated to the correct page with the correct parameters).

- Window alerts and other popup messages.

- Page reloading.

- Setting a specific window size (width or height).

As a result, the line and branch coverage is not as high as we had hoped.

To circumvent these limitations, these components and aspects were tested manually during development. Line coverage on its own is not the be-all and end-all of testing, and this manual testing still gives us assurance that the web app functions correctly.

In total, there are 139 unit and integration tests.

Responsive Design Testing

We expect the admin web app to be used in several different browsers, on several different types of devices with different screen sizes, resolutions and pointer precisions. Because of this, the responsive design test is best presented as a video (6 min) recorded using Safari’s Responsive Design Mode:

In short, through mobile-first design, the web app adapts to any device size and pointer precision (or lack thereof).

Compatibility Testing

Web apps do not need to be assessed for platform compatibility, given that they run on any platform with a JavaScript-enabled browser and an internet connection. Instead, this section describes the visual appearance of the web app in different browsers.

As previously mentioned, the web app will be used in different browsers, and we can confirm that it appears as expected in the four main browsers: Chrome, Edge, Safari and Firefox.

During implementation, the tool CanIUse was used to determine which CSS properties were available in which browsers. It also gives a breakdown of the popularity of each browser, allowing us to decide whether it was worth supporting a particular browser that did not implement a CSS property. As a result, the web app is also likely to appear the same in more obscure browsers.

Performance Testing

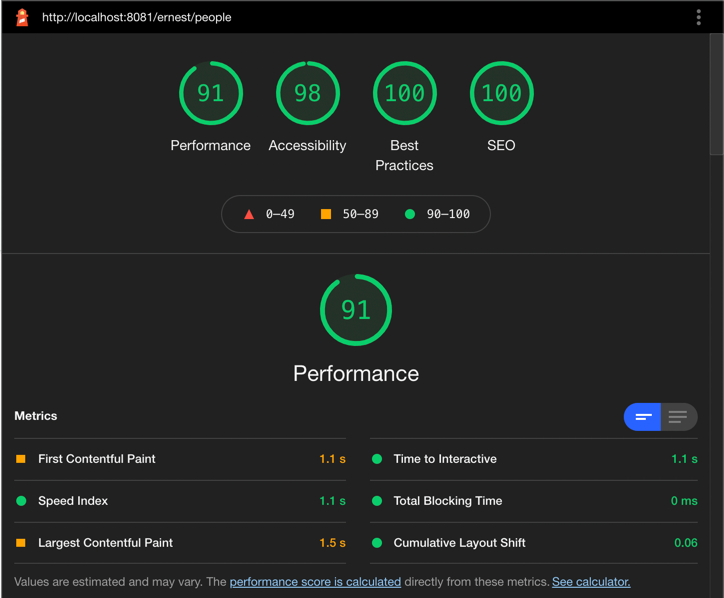

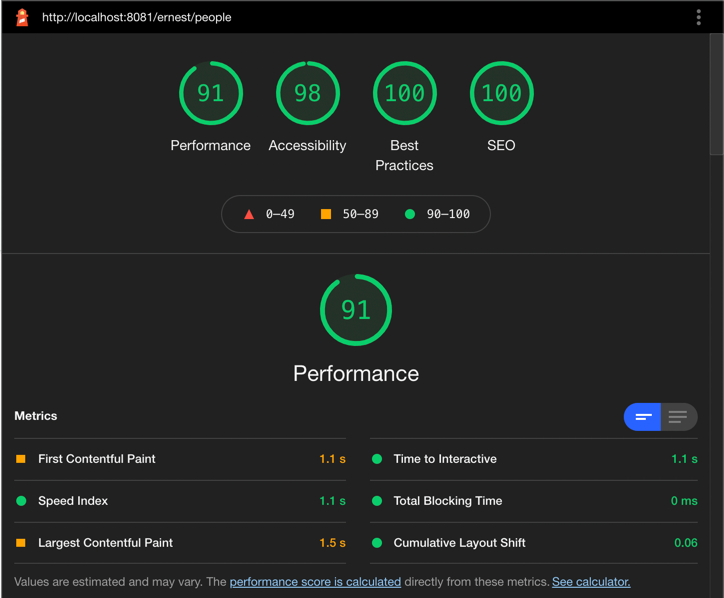

Google Lighthouse was used to gauge the performance of the web app (among its accessibility and search engine optimisation); this is a simple, minimal-effort way of getting a performance report without requiring more advanced performance testing frameworks which are unnecessary given the project scale, deadline and expected load.

The Lighthouse report for the Concierge web app for desktop (left) and mobile devices (right) is shown below:

It is important to note that Google Lighthouse is only a rough guide to improving websites and should be taken with a grain of salt.

User Acceptance Testing

To gain an unbiased overview and find out about potential improvements to the admin web app, we asked four people from various backgrounds to test the web app and recorded their feedback, as shown below.

Tasks

Test Case 1

Testers were given a test account with no members added and were asked to add a new member to their circle.

Test Case 2

Testers were given a test account with an app user who had previously used the Concierge Android app, so that user's history should be visible in the history view.

Test Case 3

Testers were given a test account and were asked to go to the service marketplace to find a service using both the dropdown to filter by category and the search bar.

Test Case 4

Testers were given a test account with a pre-existing member and were asked to use the marketplace to add a service to that member.

Feedback

| Case | User | Feedback |
| --- | --- | --- |
| 1 | 1 | Adding a new member is simple enough. |
|  | 2 | If I entered details incorrectly, the website gave meaningful messages. Opening the newly added member also saves me some time. |
|  | 3 | It is quick and easy to add new members. |
|  | 4 | Opening the added member’s account after creating their account is very useful and saves a few extra clicks. |
| 2 | 1 | Viewing full service usage history is a useful feature. It was not difficult to navigate to this page. |
|  | 2 | Sorting by date is very useful. The items could be separated by month or week etc. |
|  | 3 | The usage history is arranged well and is not cluttered with information. Sorting history by date is a nice touch. |
|  | 4 | Clicking on an item and then opening the service in the marketplace is a very useful feature. |
| 3 | 1 | The search functionality was very useful. Filtering by category also helps me find what I need quicker. |
|  | 2 | Searching seemed very familiar. Filtering by category is a nice feature to have. |
|  | 3 | I can see the filtering options being very useful when more services are added, but for now it’s a nice feature but not a necessity. |
|  | 4 | I like how search results are returned as I type, instead of forcing me to click a button. Filtering by category is nice but there were not many different categories. |
| 4 | 1 | Adding services was very easy. Maybe the admin should be taken to view the service in a user’s account instead of returning back to the marketplace. |
|  | 2 | Being able to add a service to multiple users at the same time saves a lot of extra clicks. |
|  | 3 | Adding a new service to multiple members at the same time is a very useful feature. |
|  | 4 | It was very quick and easy to add new services. |

Android App

Details of the different types of tests we conducted to evaluate our app are given below.

Unit & Integration Testing

We wrote over 200 unit and integration tests, achieving an overall branch coverage of roughly 90%. These tested our app's functionality, from whether buttons opened the correct activity to whether AskBob responses were parsed correctly.

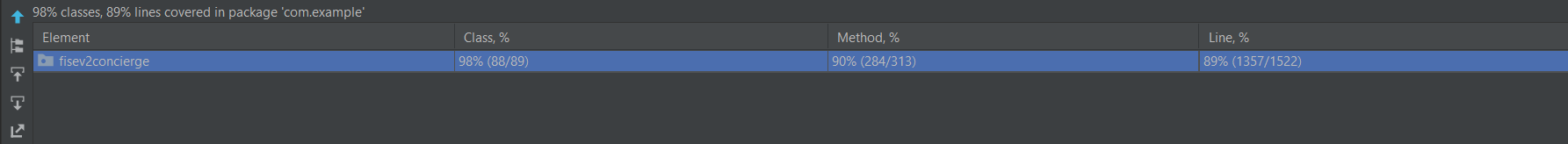

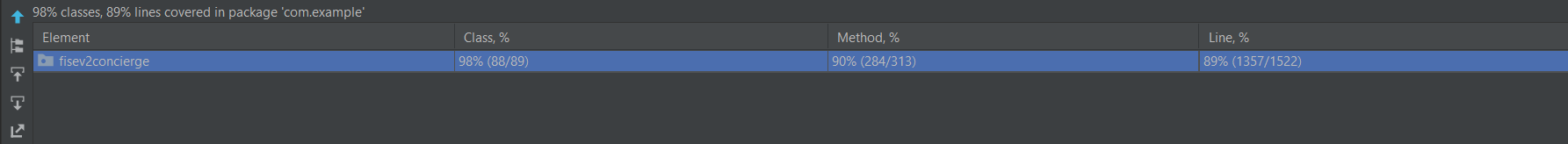

Overall:

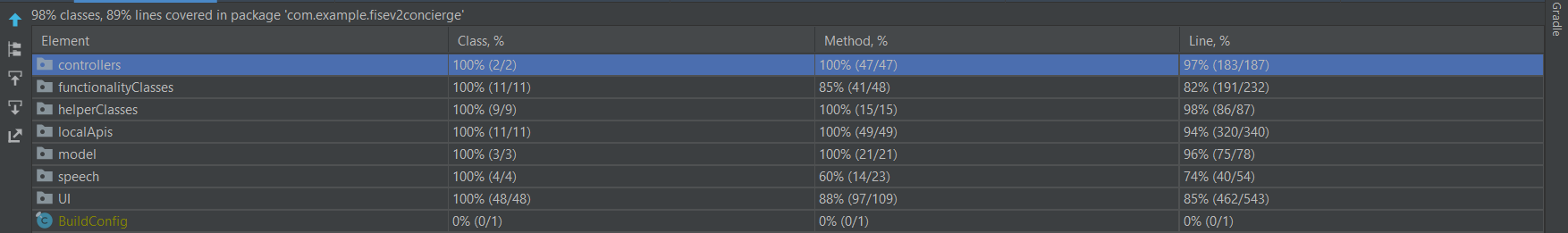

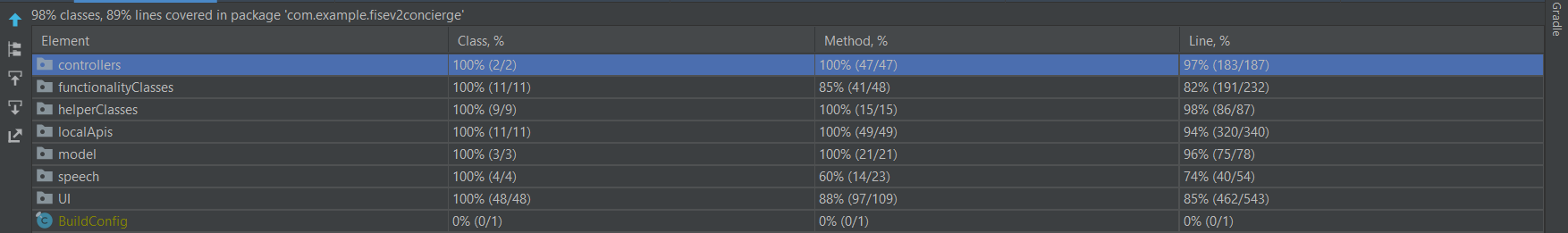

By Package:

Please note that the only class we didn't test was 'BuildConfig', which is generated automatically at build time and doesn't require testing.

Unit and integration tests are important as they provide a quick way of checking locally whether our code works. This is more reliable than testing the app by hand, as metrics such as branch coverage show exactly how much of our code is being tested. Testing the app by hand every time is also time-consuming and error-prone. See the screenshots below for the overall branch coverage and the branch coverage per package (provided by Android Studio).

Unit tests tested individual classes, whereas the integration tests tested interactions between multiple classes. Most integration tests focused on the MainController and AskBobResponseController classes, since these are the two main classes that interact with other classes.

Tests were split into different classes, each corresponding to the class under test. The 'test' folder structure is identical to the structure of the 'main' folder, which makes it easy to navigate and quickly find the test class we need.

The tests were developed through test-driven development (TDD), which helped us ensure the code we wrote was correct and minimal, saving us development time. The tests now act as a regression test suite: whenever we add new code, we can run these tests to ensure no existing functionality has been broken. This will be very useful for future developers when they add their own features to the app.

As the tests used Android APIs and real-time system features, we needed a testing framework that would allow us to 'mock' these API and system calls. We therefore used Robolectric, which provides powerful APIs for testing this kind of code.

We chose Robolectric because it is robust, well tested, has an active developer community and provided the APIs we needed to test our code. We researched alternatives such as Mockito and UI Automator but found Robolectric the easiest to use, and it provided all the functionality we needed.

However, a downside is that to run these tests, the targetSdkVersion must be set to 29 or lower, as Robolectric did not support Android SDK versions above 29 at the time of writing. This in no way hinders the reliability of the tests, as all the code written is intended for devices with Android SDK version 16 or higher. However, it did mean that the targetSdkVersion had to be changed every time before running the tests, which is inconvenient.

Please note that, aside from changing the targetSdkVersion, each test class has to be run individually, as running all the tests in the 'test' package at once causes them to fail. This is caused by an Android Studio bug rather than by our tests [see Bugs under Evaluation]. Also, Robolectric was used even for tests that did not use Android API calls, because it provides a sandbox environment that simulates a real Android runtime, allowing us to test other features (such as making HTTP requests to our server) that we could not test in standard unit tests.
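As a general reference, not specific to Android Studio's report layout, branch coverage counts the outcomes of each decision point (for example, both the true and false paths of an if statement) rather than individual lines:

$$
\text{branch coverage} = \frac{\text{number of branches executed by at least one test}}{\text{total number of branches}} \times 100\%
$$

For instance, a method containing a single if/else has two branches, so a test suite that only ever takes the if path achieves $1/2 = 50\%$ branch coverage for that method.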

Instrumented Tests

Instrumented tests are UI tests that run on an emulator or device with AndroidJUnitRunner. We wrote these integration and functional UI tests to automate user interaction, e.g. testing whether all the buttons worked.

We used the Espresso framework to write these tests, as recommended by Android. As well as using the standard features and APIs, we also used the 'assertions' feature to assert that key UI components such as the mic icon were present.

Instrumented tests are important as they let us check that the UI works correctly, which is essential for an app. They also allowed us to quickly test our changes to the UI to ensure we hadn't broken any of the existing UI. For example, if during a UI redesign we accidentally deleted a key UI component, the tests would point this out quickly and precisely, saving us development time.

As well as writing certain tests ourselves, we also used the built-in Espresso Test Recorder, which allowed us to perform actions on the device (such as clicking a button) and automatically converted these interactions into test code.

This made writing tests much easier and quicker. We did not have to write every test by hand or get bogged down learning the framework from scratch, as we could use this tool to generate our tests automatically in an intuitive manner. It also reduced the chance of bugs in the tests. This matters to us as developers with very limited time: tools that reduce the effort of writing tests without affecting their quality are essential.

However, a downside was that the tests produced by Espresso Test Recorder contained some deprecated methods. Therefore, after each test was produced, we manually replaced these deprecated methods with the appropriate current ones. Overall, we still saved a lot of time compared with learning the framework and writing the tests from scratch.

Please note that the tests fail if ANDROID_TEST_ORCHESTRATOR is used as the execution mode under testOptions in the Gradle file. This is again an Android Studio bug and not caused by our code [please see Bugs under Evaluation]. The current Gradle file already comments out the use of ANDROID_TEST_ORCHESTRATOR. If it is re-enabled in the future, it must be commented out again before running the instrumented tests.

The combined use of unit tests and instrumented tests provided a powerful test suite, in which unit tests covered functionality and logic, and instrumented tests provided high-level UI tests.

Stress Testing

Stress testing is an important quality assurance step to ensure the app can handle high pressure and large volumes of user input.

We used the Monkey tool for this. Monkey is a form of automated testing that randomly generates events, such as button clicks, text input and screen rotations, and injects them into our app. The number of events to generate is specified on the command line. We could also specify which types of input we wanted; however, we kept the default (all types of input), as this gives a comprehensive view of how much load our app can handle.

We started with 500 events and doubled this each time (1,000, 2,000 and so on). Our target was for the app to withstand 5,000 events before crashing. We are pleased to report that the app only crashed at 16,000 events. Given that our app is intended for elderly users and its nature does not call for regular, high-volume user input (as a game might), we are highly confident that it can take the strain of regular user input without crashing.

Please note that, unlike our other tests, Monkey tests are not formally written: we have to run a command in the terminal each time we want to run one. The command we used was "adb shell monkey -p com.example.fisev2concierge -v [number of events]". Please make sure the device is connected to your computer and that ADB is installed and available on the computer.
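The doubling procedure above can be scripted so it does not have to be typed by hand for each run. The sketch below is a hypothetical helper, not part of the project: it reuses the package name and command quoted above, doubles the event count from 500 up to 16,000, and stops at the first run whose output reports a crash. Detecting a crash by searching Monkey's output for the string "CRASH" is an assumption about its verbose log format.

```python
import subprocess
from typing import List, Optional

# Package name taken from the Monkey command quoted above.
PACKAGE = "com.example.fisev2concierge"


def event_schedule(start: int = 500, limit: int = 16_000) -> List[int]:
    """Event counts for the stress test: start at `start` and double each run."""
    counts = []
    n = start
    while n <= limit:
        counts.append(n)
        n *= 2
    return counts


def monkey_command(events: int, package: str = PACKAGE) -> List[str]:
    """Build the 'adb shell monkey -p <package> -v <events>' command as an argument list."""
    return ["adb", "shell", "monkey", "-p", package, "-v", str(events)]


def run_until_crash(start: int = 500, limit: int = 16_000) -> Optional[int]:
    """Run Monkey with doubling event counts; return the first count that crashed, else None.

    Assumption: a crash is detected by the text "CRASH" appearing in Monkey's
    verbose output. Both stdout and stderr are checked because the device-side
    output may arrive on either stream depending on the adb version.
    """
    for events in event_schedule(start, limit):
        result = subprocess.run(monkey_command(events), capture_output=True, text=True)
        if "CRASH" in result.stdout + result.stderr:
            return events
    return None
```

With a device connected and ADB on the PATH, calling run_until_crash() would repeat the 500, 1,000, 2,000 ... schedule described above. Because Monkey's events are pseudo-random, adding its "-s <seed>" option to the command would make a particular run repeatable.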

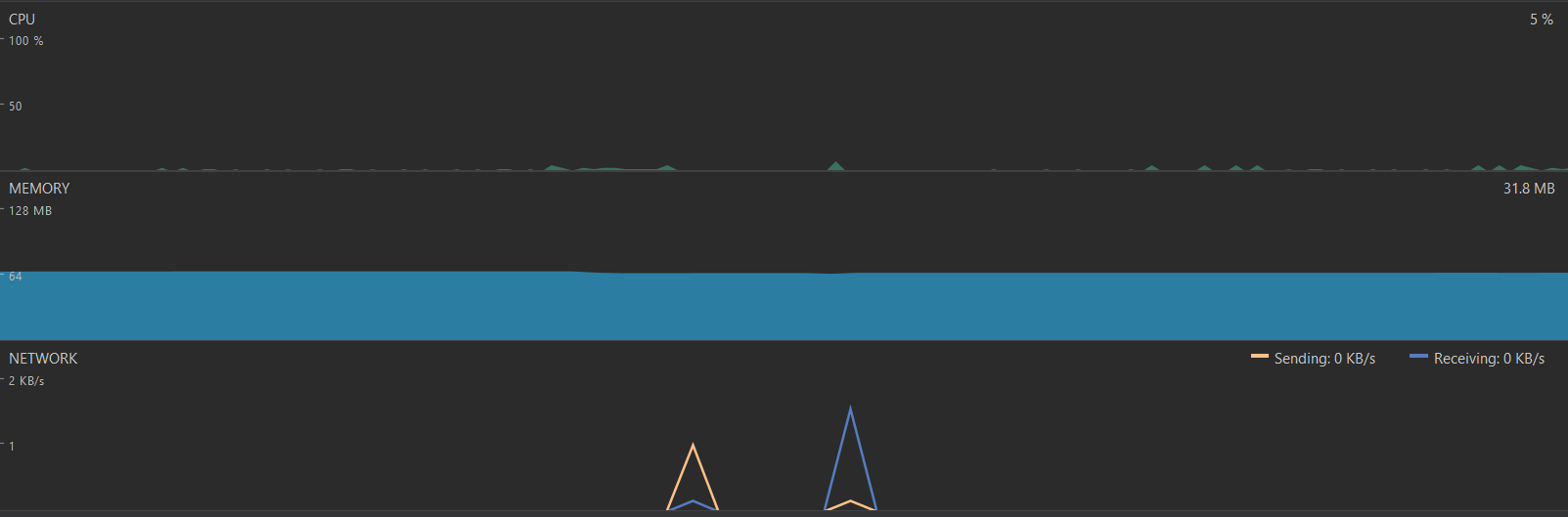

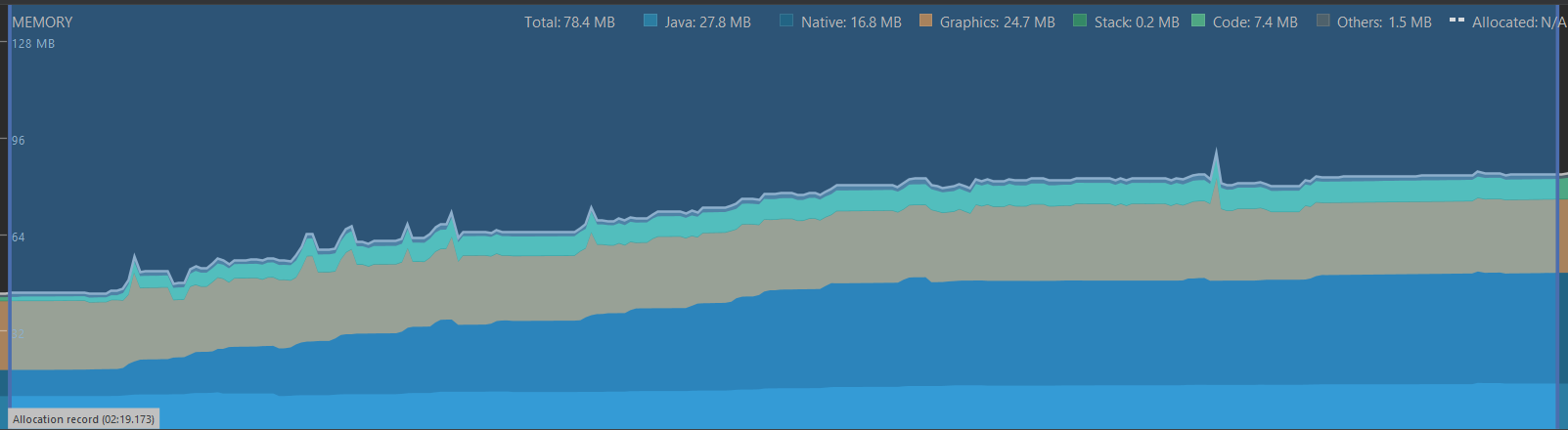

Resource Usage Testing

Using the built-in Android Studio Profiler, we were able to monitor the system resource usage of our app on a tablet (Octa Core, 4GB RAM, 64GB ROM).

We performed a range of tasks that we would expect our users to carry out, such as issuing voice commands and adding reminders. The average CPU usage during this time was under 10%, and the average RAM usage was roughly 80MB. This is very good, as it means our app uses minimal system resources. Although we have not tested the app on low-powered devices, this suggests it could run on such devices without any code changes. The Profiler was also set to track low-level method calls to ensure we got an accurate and detailed picture of the app's system resource usage. See the screenshots of resource usage from the Profiler below.

CPU usage during general usage, including using the voice assistant:

RAM usage during general usage:

Please note that Android only recommends formal speed testing for tasks that use large amounts of CPU. As our app doesn't use much CPU for any of its tasks, we did not conduct formal speed tests, though future developers may decide to do so as they add new functionality to the app.

Compatibility Testing

We want our app to be usable on a variety of devices, old and new, so it was important to make sure that it is compatible with different Android API levels.

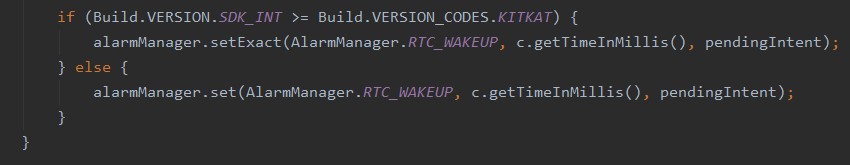

We did not conduct formal compatibility testing, as we did not see a need for it: Android Studio itself warns us if we use a system feature that requires an API level above our minSdkVersion. This happened when setting an alarm notification, where Android Studio alerted us that the system call being used was above our minSdkVersion. It then suggested a possible fix, which we used. See the screenshot of this below.

Furthermore, all our Android API calls are basic, such as calling and messaging. We also do not interact with low-level system features or the Hardware Abstraction Layer (HAL). Where possible, we also used libraries that had been tested for backward compatibility. For instance, Google Play Services (used to obtain the user's latitude and longitude) has been tested for backward compatibility back to Android 4.1 (API level 16, which is also our minSdkVersion). We therefore felt that formal compatibility testing was not necessary at this stage. It should also be noted that we tested the app on a tablet running Android 8 (nearly four years old) and on a phone running a newer Android version, and both worked without issue.

User Acceptance Testing

We asked some elderly people that we know to test our app and provide us with feedback.

The app has a lot of features, so we split our test cases into four parts.

The first part was using the voice assistant along with all the available services (APIs and device services).

The second part was getting them to connect to the admin and reuse some of the APIs.

The third part was using the other features (Alarms, Reminders and Timers).

The fourth part was just navigating to all the different pages and assessing the UI.

The idea was to test the design, functionality and ease of use of our app with our test users.

Phase One

Users were given phrases for the different services and asked to use them with our voice assistant. We noted how many successes and failures there were and then asked for feedback at the end.

See the table below for the results:

| Service | User 1 command | User 2 command | User 3 command | User 4 command | Successes | Failures |
| --- | --- | --- | --- | --- | --- | --- |
| Weather | What is the weather in Leeds | Tell me what the weather is like in Bristol | Give me the weather in Liverpool | How hot is it in New York | All | None |
| Air Quality | Give me the air quality in London | What is the air quality in New York | Tell me what the air quality is like in Manchester | Could you tell me what the air quality is like in Brooklyn | All | None |
| Dictionary | Could you tell me the meaning of the word spring | What does the word sunshine mean | What is the search definition of the word enhance | Tell me the meaning of the word lake | All | None |
| Thesaurus | What are some synonyms of the word colony | Give me synonyms of trivial | Tell me some synonyms of the word powerful | Please give me synonyms of good | All | None |
| Jokes | Tell me a joke | Give me a science joke | Could you tell me a pun | Could you tell me a food pun | All | None |
| Search book | Tell me about the story Pride and Prejudice | Give me information about the story the Scarlet Letter | Could you tell me about the novel Peter Pan | Tell me about the novel Alice’s Adventure in Wonderland | All | None |
| Search book by category | Tell me about a horror story | Give me information about a classic book | What is a good mystery book | Give me a summary about a fantasy book | All | None |
| Read book | Tell me about the story Pride and Prejudice -> please read the book | Give me information about the story the Scarlet Letter -> give me that book | Could you tell me about the novel Peter Pan -> I want to read the book | Tell me about the novel Alice’s Adventure in Wonderland -> read the book out | All | None |
| News | Give me some news about Elon Musk | Tell me news about Boris Johnson | Could you give me some news about London | Please tell me news about pandas | All | None |
| Find nearest bus stop/train station | Tell me where I can find the nearest train station | Tell me where the nearest train station is | Give me the location of my closest bus station | Could you show me where I can get the bus | All | None |
| Search for bus stop/train station | I would like the location where I can find the Oxford Street bus | Please show me where I can find Kensington bus stop | Tell me where I can find Euston train station | Give me the location of Canary Wharf | All | None |
| Stocks | What is the stock value for IBM | Tell me the current stock value for NKE | Give me the current stock value of AMD | Could you give me stock value of Tesla | All | None |
| Search charity | Give me some information about the charity Oxfam | What is the charity WWF | Tell me a short summary about the charity British Heart Foundation | Give me a summary about the charity Bernardo’s | All | None |
| Search charity by location | Give me the name of a charity in London | Tell me the name of a charity in the city of New York | What is the name of a charity which is based in the city Edinburgh | Are there any charities in Brooklyn | All | None |
| Search recipe by name | Tell me a recipe for Ramen | Give me a recipe for Tacos | Could you give me a recipe for roast chicken | I would like a recipe for cookies | All | None |
| Search recipe by ingredient | I would like a recipe using chocolate | Tell me a recipe using tomatoes | Give me a recipe utilising fish | Could you give me a recipe using pasta | All | None |
| Get random recipe | Tell me a recipe | Give me a recipe | Please tell me a recipe | Could you provide me with a recipe | All | None |
| Read out recipe | Tell me a recipe for Ramen. -> Read the recipe | Give me a recipe for Tacos -> Could you read the recipe | Could you give me a recipe for roast chicken -> please read the instructions to that recipe | I would like a recipe for cookies -> read out the instructions | All | None |
| Call contact | Call Bob | Can you call Mike | Please call Dan Dixon | I would like to speak to Bob Rogers | All | None |
| SMS contact | Can you message Steve | Can you send Selena an sms | I would like to message Doris | Send Emma a message | All | None |
| Open app on phone | Can you open Facebook | Open Youtube | Please open Messages | I would like to open the camera application | All | None |
| Navigate to page on app | Go to the History page | Navigate to Instructions | I would like to go to Alarms | Can you please go to Reminders | All | None |
| Search for item on e-commerce site | Can you search Amazon for baked beans | Can you open Amazon and search for whiskey | Search Google for Jam | Please open Amazon and buy trainers | All | None |
| Search for services on Yell | Can you find me a plumber | Get me a carpenter from Yell | I would like to find a nearby vet | Can you please get me a florist from Yell | All | None |

We are pleased to say that all commands worked. See the table below for the extra feedback received.

| Feedback |
| --- |
| + Wide range of services |
| + Existing services are informative and useful |
| + Phone services are particularly useful |
| + Natural speech input is required |
| + Good that responses are spoken |
| - Speech synthesis could be more natural |
| - Messages could be better |

Phase Two

We set up an admin account, created accounts for all the users and added each user's favourite service from Phase One to their account. We then asked the users to use their favourite service and their least favourite service (which should be rejected by the voice assistant, since it had not been added to their account) and to check their history for the service. The feedback collected can be seen below.

| Feedback |
| --- |
| + Very easy and simple process |
| + Very useful feature (connecting to Admin) |
| + Love that the history is then displayed |
| + Idea of customisation is very good |
| + Removes all the stress, could never do this [Admin tasks] myself |
| + Puts me at ease knowing they [Admin] can help |
| + It's good you don’t have to do this multiple times / no logging out |
| - You should have description on page what to do |

Phase Three

Users were then asked to conduct the following tasks for the other features.

| Feature | Task Description |
| --- | --- |
| Alarms | Add and view alarm |
|  | Edit the alarm |
|  | Delete the alarm |
| Reminders | Add and view reminder |
|  | Edit the reminder |
|  | Delete the reminder |
| Timers | Set a timer |
|  | Start the timer |
|  | Pause the timer |

We received the following feedback.

| Feature | Feedback |
| --- | --- |
| Alarms | + Very useful; + Easy to use; + Intuitive; + Simple; - When editing an alarm, it would be better if the existing date and time would show up |
| Reminders | + Very useful; + Easy to use; + Intuitive; + Simple; - Actual text for reminder could be bigger |
| Timers | + Very useful; + Easy to use; + Intuitive; + Simple; - Numbers in number picker could be bigger |
| Overall | + Good colour scheme; + Big text; + Big buttons; + Easy to read fonts and colours; + Clear instructions; + Easy to use and navigate; + Easy to understand; - Some text/items could be bigger |

Phase Four

Users had the following feedback on the UI:

| Page | Feedback |
| --- | --- |
| Home | + Nice; + love green background when holding mic; + love the clear instructions; + love the big buttons and text |
| Instructions | + love the big text; + very useful; - so many instructions can be overwhelming |
| History | + Simple; + Minimal; + Easy to use |
| View Alarms | + nice and clean; - default text if no alarms have been added should be there |
| Add, Edit Alarms | + love big buttons; + love big text; - text for date and time could be bigger |
| View Reminders | + nice and clean; - default text if no reminders have been added should be there |
| Add, Edit Reminders | + big buttons; + big text; + very simple |
| Timers | + nice and simple; + big buttons; + easy to use; - numbers should be bigger |
| Register | + big text; + big buttons; - have description of what to do on the page |

Overall, the feedback was very positive and exactly what we hoped the users would feel.

We will take the negative points on board. All of these are small issues that can easily be resolved in the future; they occurred mainly due to lack of time. For example, with more time, we could implement custom number pickers with larger numbers.

As a proof of concept, this is very encouraging.

AskBob Integration

Testing the AskBob API presented certain challenges that limited how thoroughly it could be tested. However, we were still able to test the AskBob APIs to a certain extent.

To do this, we used the Python testing framework pytest, which allows us to easily write tests in Python that are easy to read and understand.

Unit Testing

Due to the nature of the API, we found that unit testing was not feasible. This is because AskBob is built on Rasa, which makes it very difficult to separate the components of a chatbot (or, in our case, a voice assistant), as the code behind it is tightly intertwined. For example, we were unable to separate AskBob's intent recognition from its actions component, which meant that unit testing was not possible.

Integration Testing


The integration tests were organised by the plugin they are intended to test. Within each plugin, every branch of the actions code that a user could feasibly reach is exercised. This provides almost complete branch coverage, with a few exceptions where a branch is realistically unreachable because the service APIs return a default response. Additionally, each service is tested with a variety of phrasings to check the robustness of the AskBob API's natural language processing.

One thing to note is that, due to discrepancies that may arise between training sessions of the NLP model behind the AskBob API, there is a chance that a small number of these tests will fail. Which tests fail will vary between different instances of the AskBob API.
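Keeping the phrasings in a table, and collecting every mismatch instead of stopping at the first, makes the "variety of phrasings" approach easy to extend and makes occasional model-dependent failures visible in a single run. The sketch below is hypothetical and not the project's test code: `classify` stands in for whatever call sends a query to a running AskBob instance and returns the recognised plugin, the plugin keys are invented labels, and only the example phrasings are taken from the Phase One user acceptance tests above.

```python
from typing import Callable, Dict, List, Tuple

# Example phrasings per plugin, taken from the Phase One user tests.
# The keys are hypothetical labels, not AskBob's real plugin names.
PHRASINGS: Dict[str, List[str]] = {
    "weather": [
        "What is the weather in Leeds",
        "Tell me what the weather is like in Bristol",
        "How hot is it in New York",
    ],
    "dictionary": [
        "Could you tell me the meaning of the word spring",
        "What does the word sunshine mean",
    ],
    "stocks": [
        "What is the stock value for IBM",
        "Give me the current stock value of AMD",
    ],
}

Failure = Tuple[str, str, str]  # (expected plugin, phrasing, actual plugin)


def check_phrasings(
    classify: Callable[[str], str],
    phrasings: Dict[str, List[str]] = PHRASINGS,
) -> List[Failure]:
    """Send every phrasing to `classify` and collect all mismatches.

    Every phrasing is tried even after a failure, so one run reports all
    phrasings the current model instance gets wrong.
    """
    failures: List[Failure] = []
    for expected, queries in phrasings.items():
        for query in queries:
            actual = classify(query)
            if actual != expected:
                failures.append((expected, query, actual))
    return failures


def failure_rate(failures: List[Failure], phrasings: Dict[str, List[str]] = PHRASINGS) -> float:
    """Fraction of all phrasings that were misclassified."""
    total = sum(len(queries) for queries in phrasings.values())
    return len(failures) / total
```

With pytest itself, the same table could instead be passed to `pytest.mark.parametrize`, so that each phrasing is reported as a separate test case.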

Performance Testing

As with unit testing, we were unable to perform any meaningful performance testing on the AskBob API. This is largely due to the Concierge Service API, which the AskBob API uses to retrieve the data required for a given request. Since the Concierge Service API is made up of a collection of APIs, its performance varies: these APIs are hosted in different locations, which results in different amounts of latency.

Services API

Because our API interaction used external APIs serving live information, we had no way of accurately testing that they were functioning correctly: the information returned by each API is likely to change between test runs. Additionally, some services return random and/or location- or time-dependent information, which means we cannot write a test suite that is consistent across different time periods and locations.

As a result, we assumed these APIs were tested by their respective developers and elected to test only our JSON schema system and response parsing.

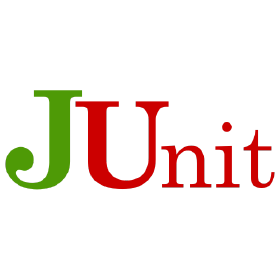

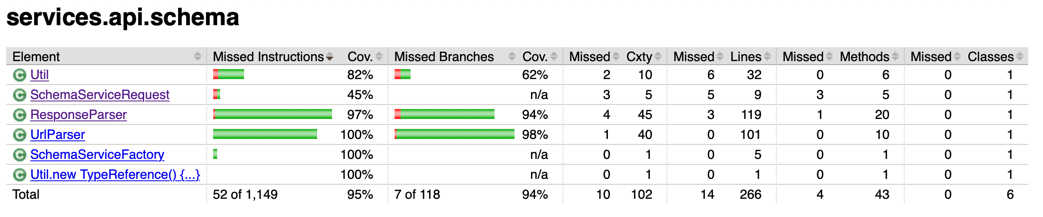

JUnit, "the programmer-friendly testing framework for Java and the JVM" (JUnit 5, 2021), was used to write the integration tests for the API plugin system.

We wrote unit tests for different schemas (both valid and malformed) for different APIs, and tested the response parser with different JSON response objects.

All tests pass, providing us with the assurance that we can use our API plugin system to represent any API as a JSON schema and turn the API response into a natural language string.
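To illustrate the two behaviours these tests cover (rejecting malformed schemas and turning a fixed JSON response into a sentence), the sketch below uses a hypothetical schema layout with "name", "url" and "response" fields and dotted placeholders such as {main.temp}. The project's plugin system runs on the JVM and was tested with JUnit; this Python version, its field names and its function names are assumptions made purely for illustration. Because the response is a fixed string rather than a live API call, tests written this way stay deterministic, which sidesteps the problem with live APIs described above.

```python
import json
import re
from typing import Any, Dict, List

# Hypothetical schema layout: required field name -> expected Python type.
REQUIRED_FIELDS = {"name": str, "url": str, "response": str}
# Placeholders such as {main.temp} or {list.0.title} in the response template.
PLACEHOLDER = re.compile(r"\{([A-Za-z0-9_.]+)\}")


def validate_schema(schema: Dict[str, Any]) -> List[str]:
    """Return a list of problems with the schema; an empty list means it is valid."""
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in schema:
            errors.append(f"missing field: {field}")
        elif not isinstance(schema[field], expected_type):
            errors.append(f"field {field} must be {expected_type.__name__}")
    url = schema.get("url")
    if isinstance(url, str) and not url.startswith(("http://", "https://")):
        errors.append("url must start with http:// or https://")
    return errors


def lookup(data: Any, path: str) -> Any:
    """Follow a dotted path such as 'main.temp' or 'list.0.title' through parsed JSON."""
    for key in path.split("."):
        data = data[int(key)] if isinstance(data, list) else data[key]
    return data


def render_response(schema: Dict[str, Any], raw_json: str) -> str:
    """Fill the schema's response template with values from the API's JSON response."""
    data = json.loads(raw_json)
    return PLACEHOLDER.sub(lambda m: str(lookup(data, m.group(1))), schema["response"])
```

For example, a weather schema with the template "It is {main.temp} degrees in {name}." and the response {"name": "Leeds", "main": {"temp": 12.5}} renders as "It is 12.5 degrees in Leeds."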

The coverage report is shown below:

Continuous Integration

A continuous integration (CI) pipeline gives us some assurance that we are not breaking existing code when pushing changes to our repository.

Continuous integration also encourages the development of higher-quality, testable code.

We did not feel the need for a fully-fledged CI service such as Travis CI, as GitHub Actions (our repository is already hosted on GitHub) was sufficient for our use case.

GitHub Actions can run tasks on every push or pull request, and we used this to run all of our tests after each repository update.

A 'develop' branch was used to introduce new features, which were manually merged into the 'main' branch if they did not introduce any new issues.

If we had worked in a much larger group, we would have set up the workflow to merge pull requests automatically; this feature is unnecessary for a group of our size.

Below is the (recent) history of the CI workflow runs:

References

Junit.org. 2021. JUnit 5. [online] Available at: <https://junit.org/junit5/> [Accessed 26 March 2021].

Robolectric.org. 2021. Robolectric. [online] Available at: <http://robolectric.org/> [Accessed 26 March 2021].

Espresso. 2021. Espresso. [online] Available at: <https://developer.android.com/training/testing/espresso/> [Accessed 26 March 2021].

Monkey. 2021. Monkey. [online] Available at: <https://developer.android.com/studio/test/monkey/> [Accessed 26 March 2021].

Docs.pytest.org. 2021. pytest: helps you write better programs — pytest documentation. [online] Available at: