Deployment

We deployed our service on Linode by dockerising the frontend and the backend and running the database in a Postgres container. The three containers run on a Docker network, with NGINX acting as a reverse proxy that routes web requests to the frontend and the API.

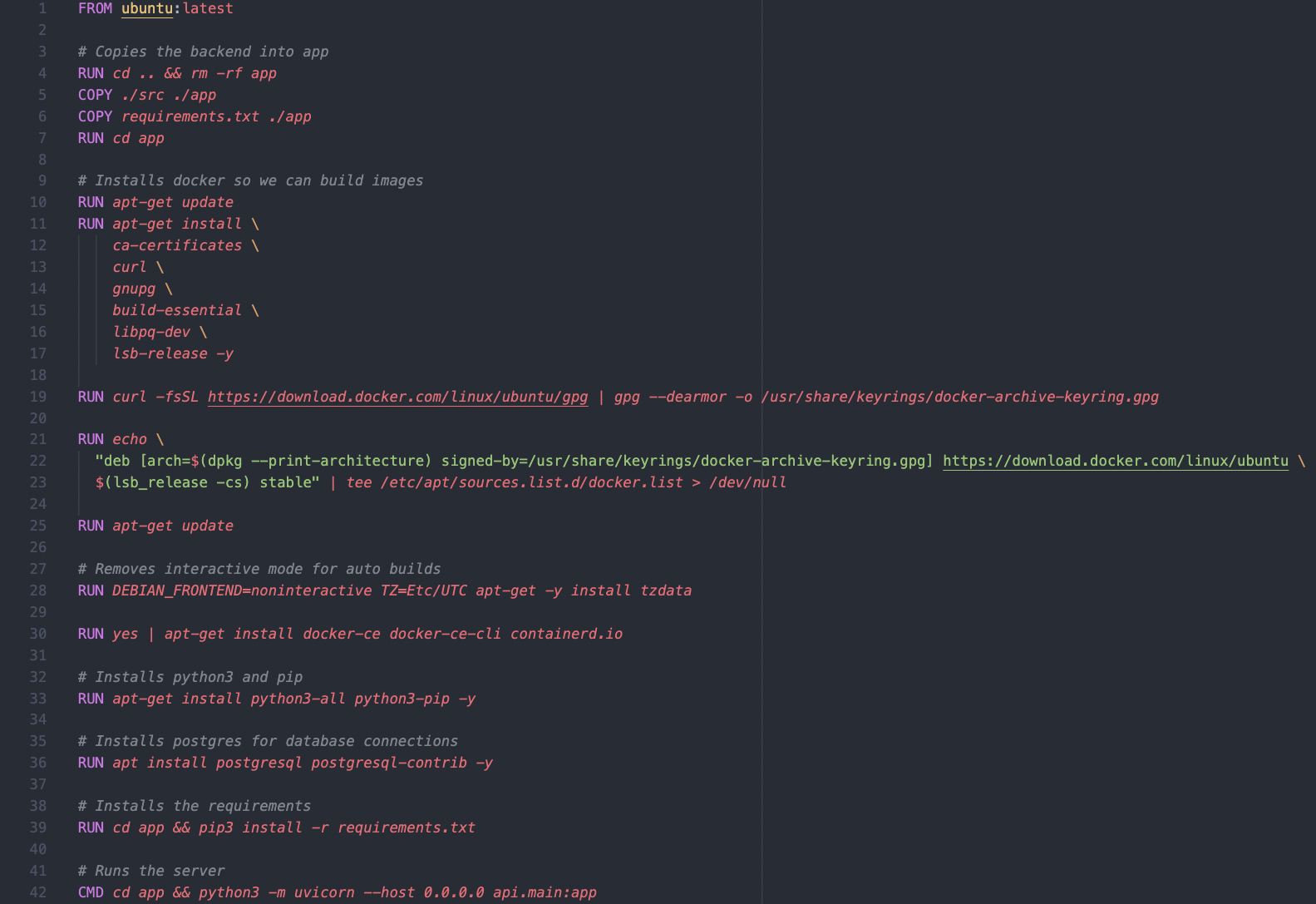

Backend

To deploy the backend, we build from the Ubuntu base image and copy over our source files and requirements.

Since our backend builds Docker images, we need to be able to use Docker inside the backend container, so lines 19 to 30 install Docker onto the image.

We also install Python and pip, Postgres for connecting to our database, and finally the project's requirements.

When a container is started from this image, it serves the API with uvicorn on port 8000.

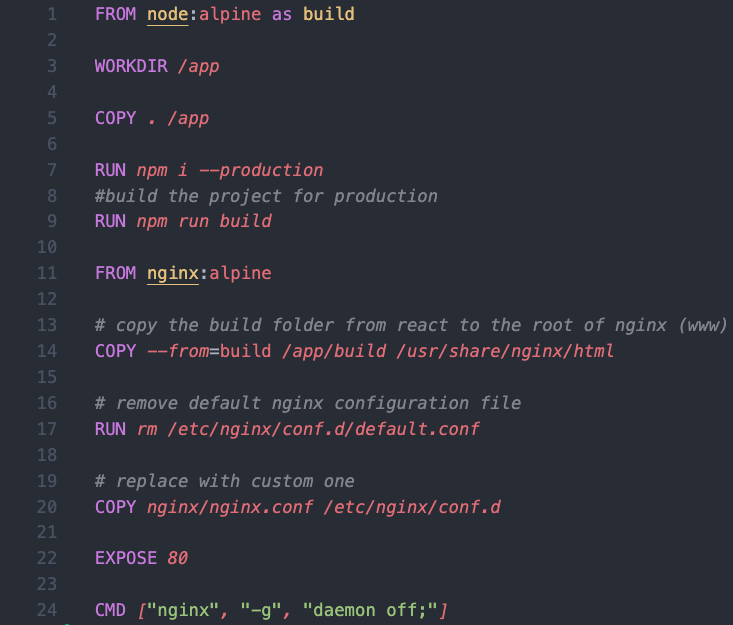

Frontend

For the frontend, we use a multi-stage build that first creates a production build of our frontend and then serves it with NGINX, which also acts as the reverse proxy.

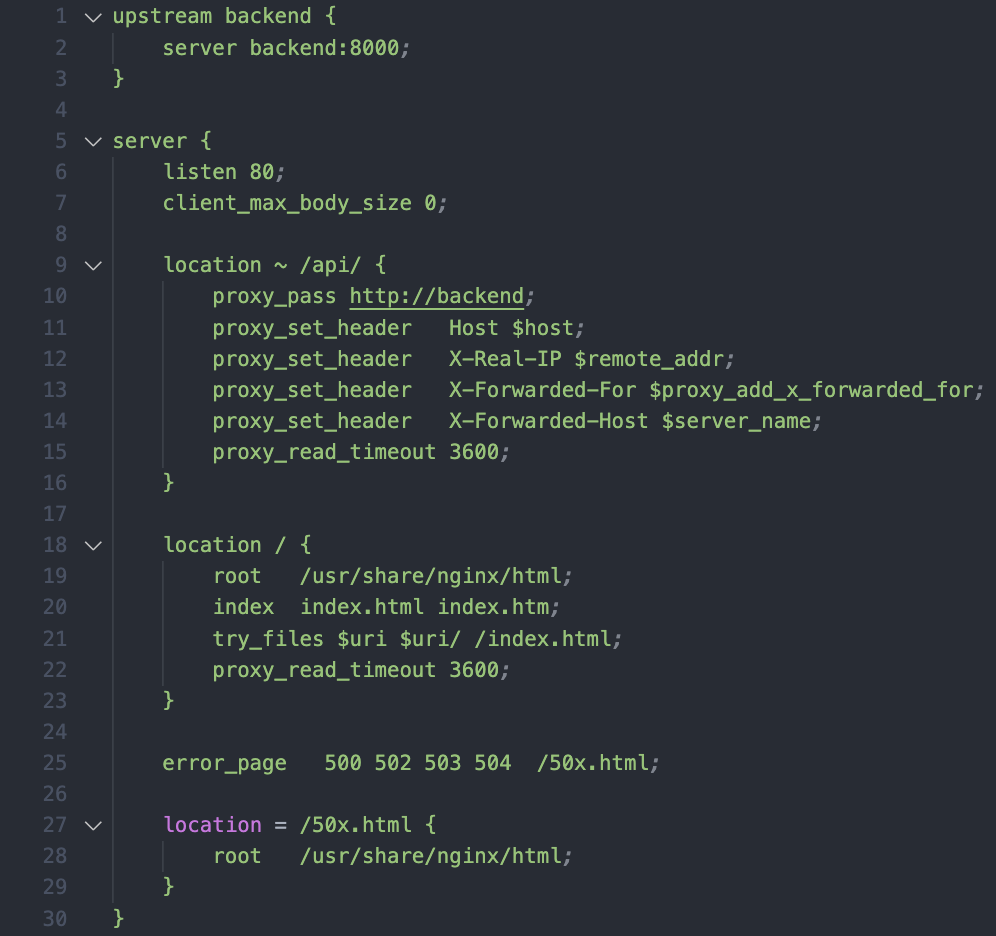

Here is the required NGINX config file, which proxies requests to either the frontend or the API:
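As a rough illustration of this kind of routing, the sketch below writes a minimal NGINX config to a file. The service name `backend`, the `/api/` path prefix, and the static root `/usr/share/nginx/html` are assumptions made for the example and are not taken from the project.

```shell
# Write a minimal NGINX config for the frontend container.
# Assumed (hypothetical): backend service name "backend", API prefix /api/,
# and the production build copied to /usr/share/nginx/html.
conf_file="${CONF_FILE:-nginx.conf}"

cat > "$conf_file" <<'EOF'
server {
    listen 80;

    # Serve the production build of the frontend.
    location / {
        root /usr/share/nginx/html;
        index index.html;
        # Fall back to index.html so client-side routes resolve.
        try_files $uri $uri/ /index.html;
    }

    # Forward API calls to the backend container (uvicorn on port 8000).
    # The request path is passed through unchanged, so this assumes the
    # backend routes themselves start with /api/.
    location /api/ {
        proxy_pass http://backend:8000;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
    }
}
EOF
```

The name `backend` resolves only because both containers sit on the same Docker network, where container or service names act as hostnames.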

Building and running the images

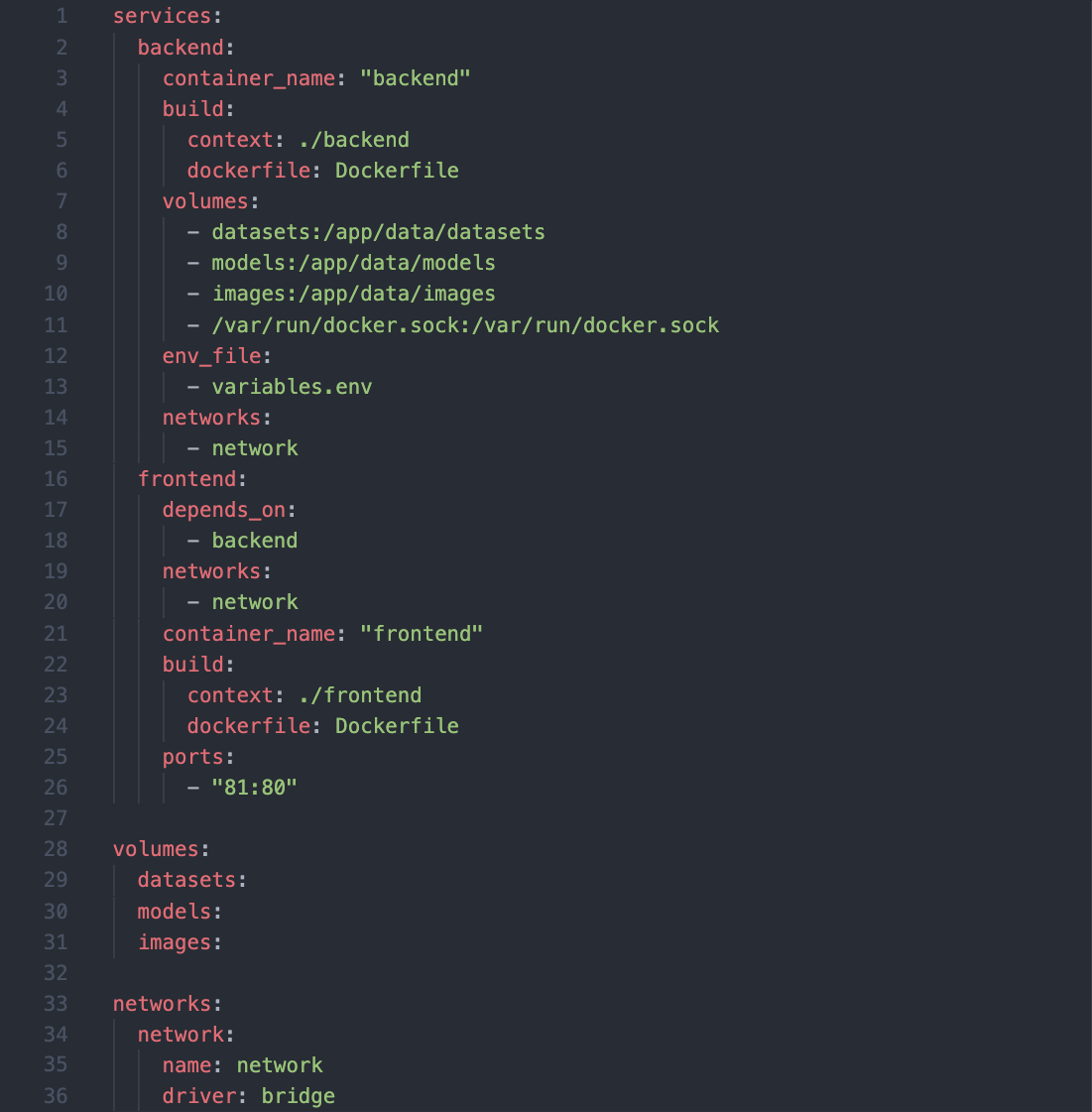

To run the service, we have the following docker-compose file:

This builds the two images, runs them as containers on a Docker network, and publishes port 81 for the frontend.

The backend has three volumes. The first two are for the models and datasets directories, so that if the container goes down or is stopped, we don't lose those files and the data persists when the container is run again. The third volume connects the container to the host's Docker socket, allowing us to access the host's Docker daemon and build Docker images from within the container.
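A compose file with the properties described above could look roughly like the sketch below, written out here with a shell heredoc. The build paths, the in-container paths `/app/models` and `/app/datasets`, and the network name `app-net` are hypothetical placeholders; only port 81, the three backend volumes, and the shared network come from the description.

```shell
# Write a minimal docker-compose file matching the description above.
# Hypothetical: ./backend and ./frontend build contexts, /app/... paths,
# and the network name "app-net".
compose_file="${COMPOSE_OUT:-docker-compose.yml}"

cat > "$compose_file" <<'EOF'
services:
  backend:
    build: ./backend
    volumes:
      # Persist models and datasets across container restarts.
      - ./models:/app/models
      - ./datasets:/app/datasets
      # Share the host's Docker socket so the backend can build images.
      - /var/run/docker.sock:/var/run/docker.sock
    networks:
      - app-net

  frontend:
    build: ./frontend
    ports:
      # Host port 81 maps to NGINX on port 80 inside the container.
      - "81:80"
    depends_on:
      - backend
    networks:
      - app-net

networks:
  app-net:
    # Fixed name so other containers can be attached to it later.
    name: app-net
EOF
```

Giving the network an explicit name avoids the project-prefixed default name that compose would otherwise generate, which makes it easier to attach a separately managed container to it.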

We run these two containers on a Docker network, and once the database container has been built and started, we connect it to the backend by adding it to the same network.

The reason I did not add the database to the docker-compose file is that re-deployments usually involve changes to the backend and frontend images, which then need to be rebuilt, whereas the database image rarely needs rebuilding because revisions can be applied while the container is running. This way, we can stop the frontend and backend containers as often as we like and re-run the docker-compose file without affecting the database container. To support this, we set up and run the database container separately first. After running docker compose, we add the database container to the Docker network and then apply the revisions as explained in the database section.

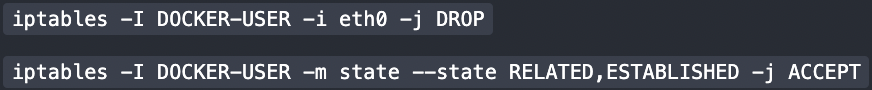
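The workflow described above can be sketched as two small shell functions. The image name `our-db-image`, the container name `db`, and the network name `app-net` are hypothetical, and the sketch assumes the `docker compose` plugin form of the command; the real setup may differ.

```shell
# Hypothetical names: adjust to the real image, container, and network.
DB_IMAGE="${DB_IMAGE:-our-db-image}"
DB_CONTAINER="${DB_CONTAINER:-db}"
NETWORK="${NETWORK:-app-net}"

# One-off step: start the database container on its own, outside compose.
start_database() {
    docker run -d --name "$DB_CONTAINER" "$DB_IMAGE"
}

# Repeatable step: rebuild and restart the frontend and backend, then
# attach the long-lived database container to their network.
redeploy() {
    docker compose up --build -d
    # Reports an error if the container is already attached to the network.
    docker network connect "$NETWORK" "$DB_CONTAINER"
}
```

Calling `start_database` once and `redeploy` after each code change keeps the database container untouched across redeployments. When the database is already attached, the final `docker network connect` fails without affecting the running containers.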

Once all three containers are running, the revisions have been applied to the database, and the first user has been added, we are ready to allow access to our service from the internet. One thing I learnt in my research is that Docker by default publishes container ports on all of the host's network interfaces, so they are publicly reachable from the internet, and an adversary could exploit this to inject code. To prevent this, I ran the following commands, which block access to the Docker containers from anywhere except localhost:

Source: https://stackoverflow.com/a/66630577/15962925
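One way to get this effect, which may differ from the commands in the linked answer, is to add rules to the `DOCKER-USER` iptables chain that Docker reserves for user-defined filtering. The sketch below assumes the server's public interface is called `eth0`, which is a guess and should be checked with `ip addr`.

```shell
# Assumption: eth0 is the server's public network interface.
EXT_IF="${EXT_IF:-eth0}"

restrict_docker_ports() {
    # -I inserts at the top of the chain, so the rule added last is
    # evaluated first.
    # 1) Drop traffic arriving on the public interface for any container.
    iptables -I DOCKER-USER -i "$EXT_IF" -j DROP
    # 2) Ends up above the DROP rule: keep accepting replies to
    #    connections that the containers themselves opened.
    iptables -I DOCKER-USER -i "$EXT_IF" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
}
```

`restrict_docker_ports` needs to run as root. Connections from the host itself do not arrive on the public interface, so a process on the server can still reach the published port. Rules added this way are not persistent and would need to be reapplied after a reboot.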

Finally, to serve this to users, I installed NGINX on the Linode server and set up a reverse proxy that forwards traffic from port 80 to port 81.
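A host-level reverse proxy of this kind can be sketched as follows. The output file name `reverse-proxy.conf` and the catch-all `server_name _` are assumptions for illustration; only ports 80 and 81 come from the setup described here.

```shell
# Write a minimal NGINX site config for the host: port 80 -> port 81.
# Hypothetical file name; the catch-all server_name is also an assumption.
site_file="${SITE_FILE:-reverse-proxy.conf}"

cat > "$site_file" <<'EOF'
server {
    listen 80;
    server_name _;

    location / {
        # Forward everything to the frontend container's published port.
        proxy_pass http://127.0.0.1:81;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
EOF
```

After copying the file into the NGINX configuration directory (commonly `/etc/nginx/conf.d/`), `nginx -t` checks the syntax and `nginx -s reload` applies it. Proxying to `127.0.0.1` keeps working even when port 81 is closed to external traffic.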

You can see this in action here: http://176.58.116.160/