Model Download

Once the user uploads a model, we have a Docker image that serves an API and lets us call the predict function on the trained model. However, we still need to provide the user with a frontend. To do this, we built a simple React frontend. When it starts, it calls the API endpoint ‘/model/data’ and displays information about the model, such as its name, description, inputs, and outputs. It also calls the ‘/model/predict’ endpoint, which invokes the trained model’s predict function.

The frontend displays the model data on load and provides a file input and a predict button. Clicking the predict button calls the ‘/model/predict’ endpoint with the uploaded file as input and displays the trained model's result as JSON.

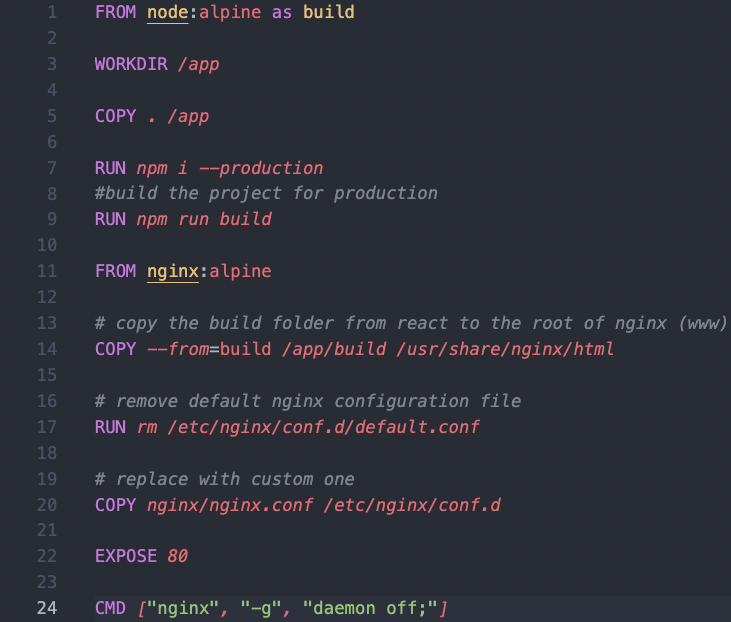
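
The two calls the frontend makes can also be exercised from a script, which is handy for checking the API without the React app. The following is a minimal sketch, not the frontend's actual code. It assumes the NGINX proxy forwards ‘/model/*’ requests on http://localhost to the model API, that ‘/model/predict’ accepts a POST with a multipart form field named `file`, and that both endpoints return JSON. The function names are hypothetical.

```python
import json
import urllib.request
import uuid
from pathlib import Path

# Assumption: NGINX on port 80 forwards /model/* to the model API.
BASE_URL = "http://localhost"


def build_multipart(field: str, filename: str, payload: bytes) -> tuple[bytes, str]:
    """Encode a single file as multipart/form-data; returns (body, content_type)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + payload + tail, f"multipart/form-data; boundary={boundary}"


def get_model_data(base_url: str = BASE_URL) -> dict:
    """Fetch the model's name, description, inputs, and outputs."""
    with urllib.request.urlopen(f"{base_url}/model/data") as resp:
        return json.load(resp)


def predict(path: str, base_url: str = BASE_URL, field: str = "file") -> dict:
    """Upload a file to /model/predict and return the JSON result.

    The form field name "file" is an assumption about the API.
    """
    file_path = Path(path)
    body, content_type = build_multipart(field, file_path.name, file_path.read_bytes())
    request = urllib.request.Request(
        f"{base_url}/model/predict",
        data=body,
        headers={"Content-Type": content_type},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)
```

With the containers running, `get_model_data()` followed by `predict("sample_input.csv")` would mirror what the page does on load and on clicking the predict button (the file name here is only an illustration).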

Building the frontend

We built the frontend with React. A Dockerfile creates a production build of the frontend and serves it to the user on port 80 behind an NGINX reverse proxy, similar to how we deploy the main application's frontend.

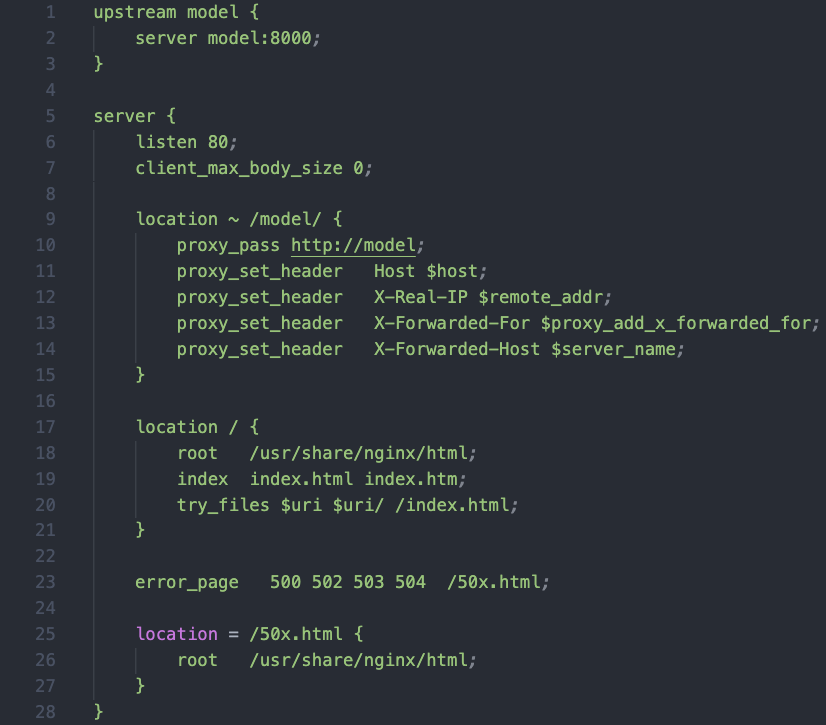

Here is the NGINX config used to proxy requests to either the frontend or the model API:

Serving to the user

To serve this to the user, we pre-build the frontend Docker image at deployment and save it as a .tar file, as we do for the backend model. It is stored in the directory ‘src/data/model_download’ along with a docker-compose.yml and a readme.md.

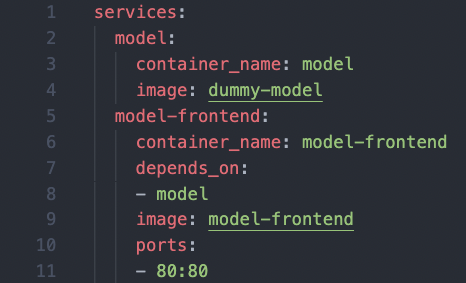

Here is the docker-compose file:

This allows the frontend and backend Docker images to run together on a Docker network, so the frontend can send requests to the model API at ‘http://backend:8000’. The frontend is exposed on port 80, so the user can view the model frontend at http://localhost.

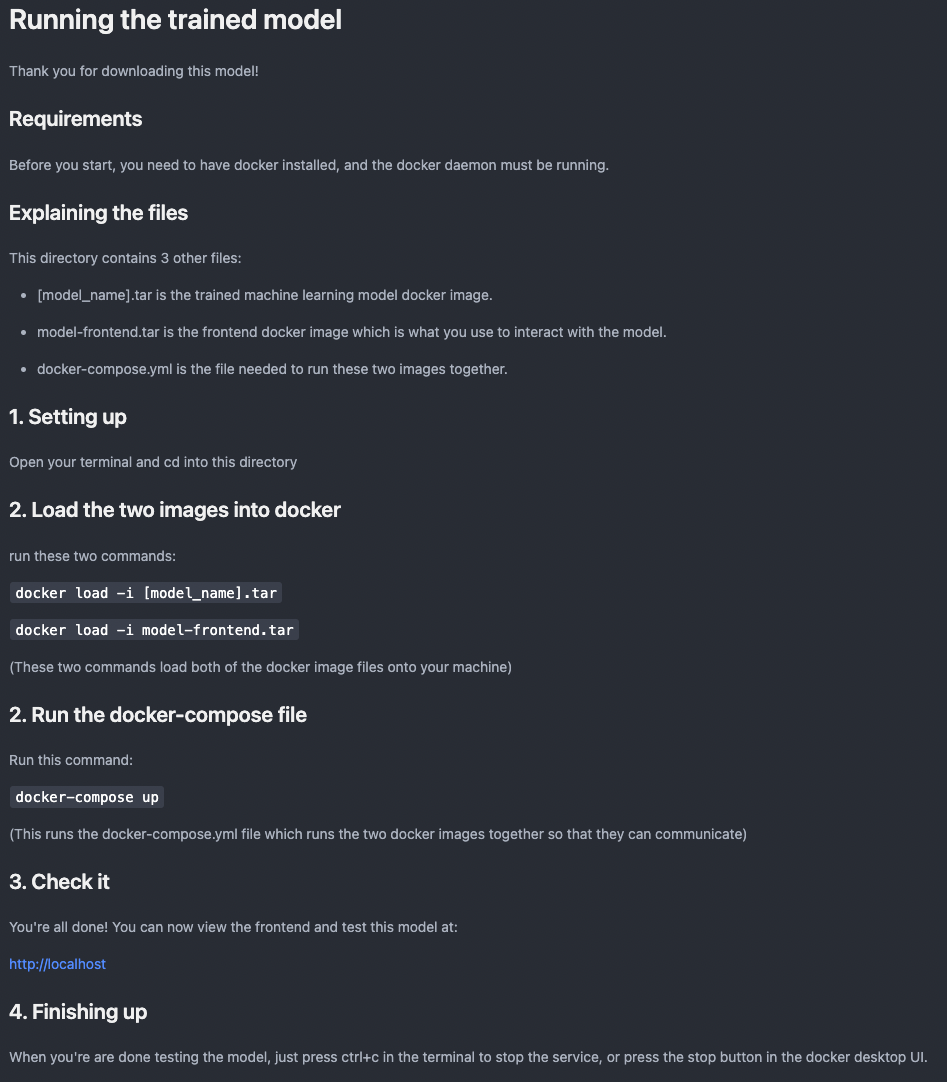

The final file in model_download is a readme, which contains the instructions for loading and running the two images. It looks like this:

The first two commands load the two images into Docker, and the final command runs the Docker Compose file.

Generating a download

In our main application's API, when the ‘/models/{model_id}/download’ endpoint is called, the three files in model_download are copied into the temporary directory ‘src/data/temp/[model_name]-download’. The .tar file of the requested trained model's Docker image is also copied into this temporary directory from 'src/data/models', where it is stored.

We edit the readme file and the docker-compose file in this temporary directory so that the model name in both matches that of the docker image the user is requesting.
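
One simple way to make this edit is placeholder substitution. The sketch below is an illustration under assumptions, not the project's actual code: it assumes both template files contain a literal token, here called `MODEL_NAME` (a hypothetical marker), wherever the image name should appear. The function name `personalise` is also hypothetical.

```python
from pathlib import Path

# Hypothetical token; the real template files may mark the model name differently.
PLACEHOLDER = "MODEL_NAME"


def personalise(download_dir: Path, model_name: str,
                filenames=("docker-compose.yml", "readme.md")) -> None:
    """Replace the placeholder with the requested model's name in each file."""
    for name in filenames:
        path = download_dir / name
        text = path.read_text(encoding="utf-8")
        path.write_text(text.replace(PLACEHOLDER, model_name), encoding="utf-8")
```

Because only the copies in the temporary directory are rewritten, the originals in model_download stay generic and can be reused for the next request.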

This temporary directory is zipped, then deleted. The zip file is served to the user and is itself deleted once the download has completed.
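
The staging, zipping, and cleanup steps could look roughly like the sketch below, which uses only the Python standard library. It is an illustration rather than the project's implementation: the function name `build_download`, its parameters, and the `<model_name>.tar` file naming are assumptions, and the step that rewrites the readme and docker-compose file is omitted for brevity.

```python
import shutil
from pathlib import Path


def build_download(template_dir: Path, models_dir: Path, temp_root: Path,
                   model_name: str) -> Path:
    """Stage the download files, zip them, and remove the staging directory."""
    staging = temp_root / f"{model_name}-download"

    # Copy the shared files (frontend .tar, docker-compose.yml, readme.md).
    # copytree raises an error if the staging directory already exists.
    shutil.copytree(template_dir, staging)

    # Add the requested model's image. The "<model_name>.tar" naming is an assumption.
    shutil.copy2(models_dir / f"{model_name}.tar", staging)

    # make_archive appends ".zip" to the base name and returns the archive path.
    # base_dir keeps the "<model_name>-download/" folder as the top level of the zip.
    archive = shutil.make_archive(str(staging), "zip",
                                  root_dir=temp_root, base_dir=staging.name)

    # The unzipped staging directory is no longer needed.
    shutil.rmtree(staging)
    return Path(archive)
```

The caller would then serve the returned zip and remove it (for example with `Path.unlink()`) after the response has been sent.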

The end user just needs to unzip this file, cd into the directory, and run the three commands in the readme.

They can then test the model with the provided frontend at http://localhost.