Development Blog - COMP0016 Team 20

BHF Key Statistics Chatbot

This is the development blog for Team 20, written as part of UCL’s COMP0016 Systems Engineering module 2021-22. We are building a chatbot for the British Heart Foundation (BHF). The chatbot will help researchers, policy makers and the BHF team find statistical data easily in the Excel sheets that hold BHF’s data.

Meet the team

Neil Badal

Client Liaison, Programmer, Bot Developer, Website Designer, Video Editor

Ivan Varbanov

Client Liaison, Programmer, Bot Developer, Researcher, Report Editor

Maheem Imran

Client Liaison, Programmer, Bot Developer, Tester, Development Blog Editor

Week 1 (18th Oct - 25th Oct)

- We met within our team and had introductions

- Outlined the problem

- Contacted the client and had our first meeting with them

- Briefly discussed the BHF data compendium

- Asked the client about their expectations and requirements (semi-structured interview)

- Agreed check-in days/times for weekly meetings with the client

We also set up our GitHub repository and started planning and creating templates to gather user requirements.

Week 2 (25th Oct - 1st Nov)

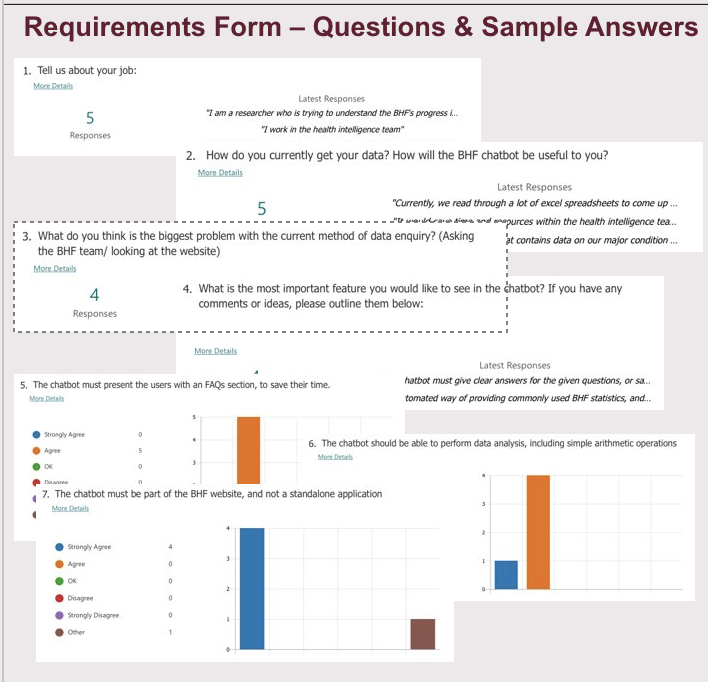

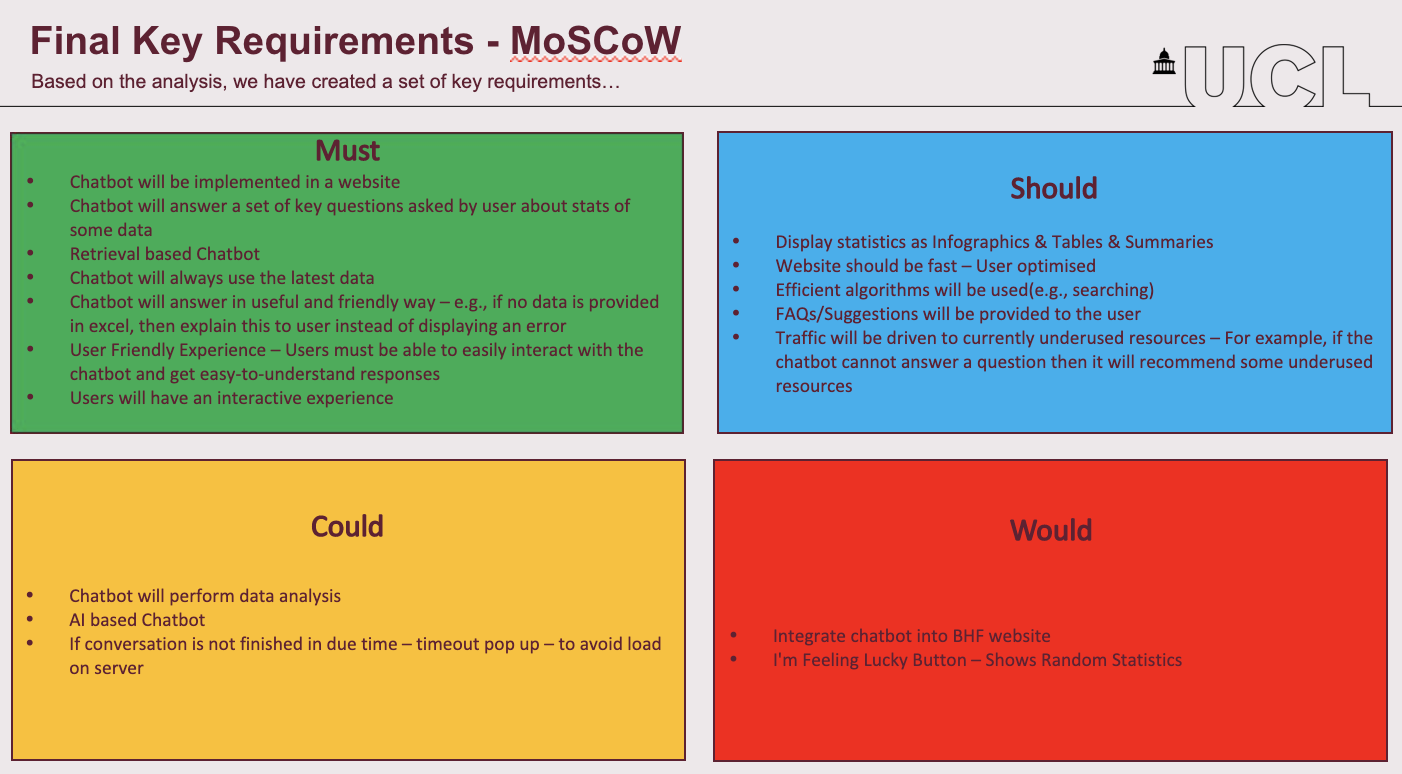

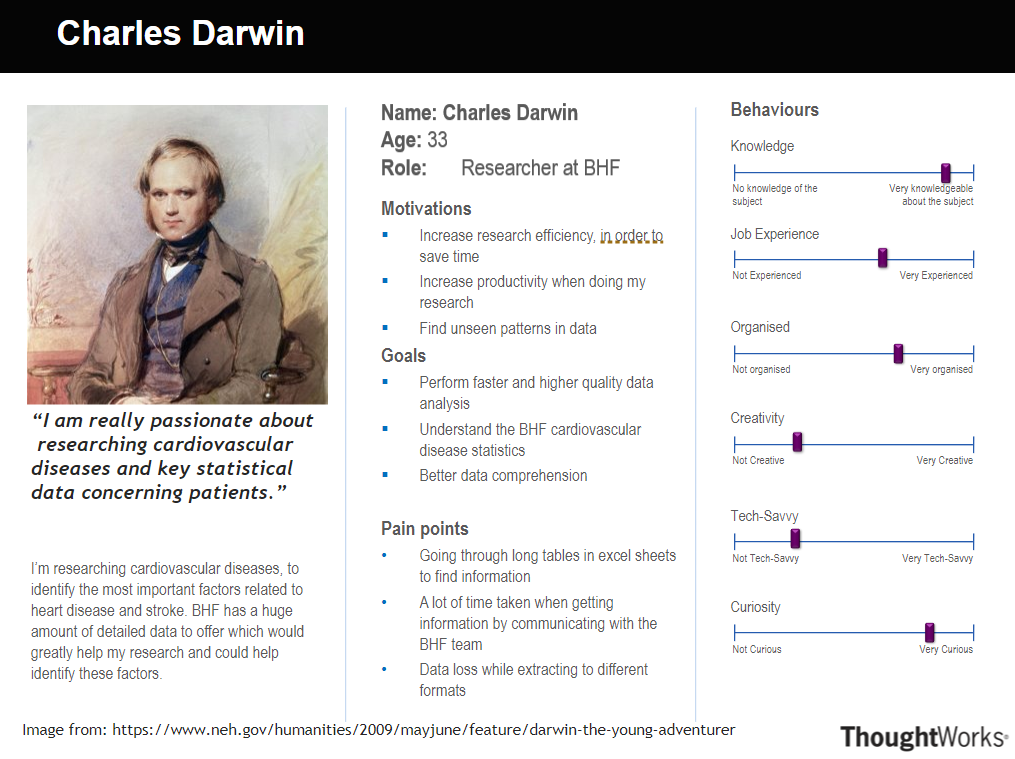

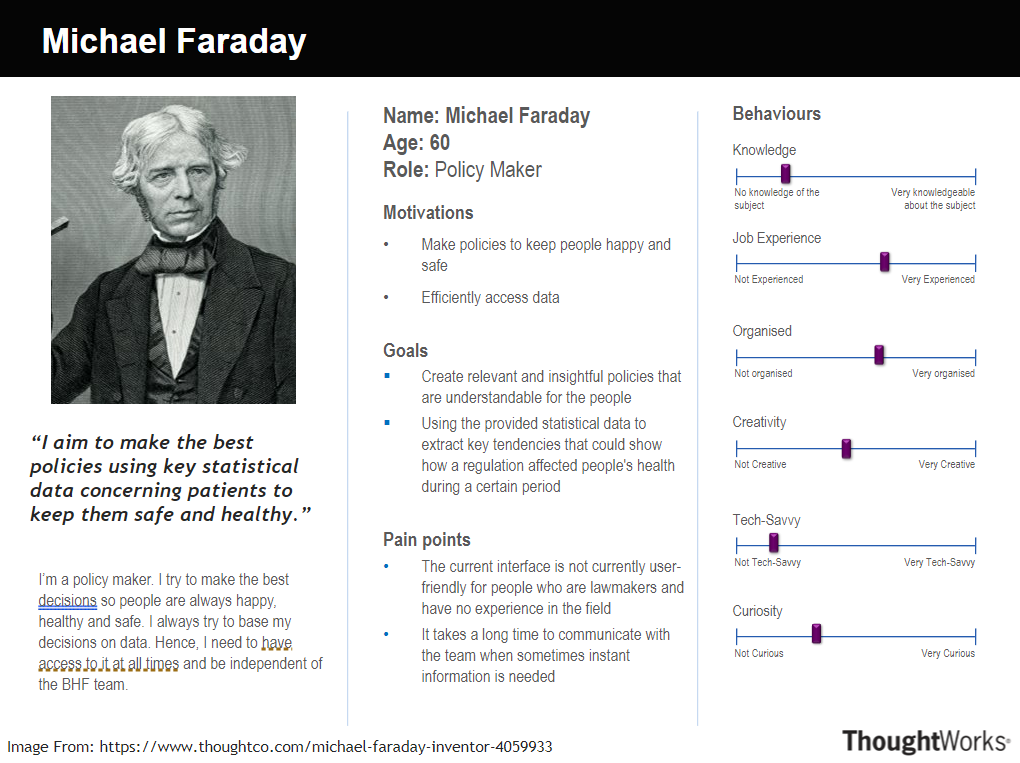

This week, we sent out a form to our classmates, who acted as pseudo users in the scenario and helped us gather user requirements for the chatbot. We also interviewed our clients and the BHF team. After gathering user requirements, we came up with a set of key requirements using the MoSCoW technique. Using these requirements we then made some personas and scenarios to help us better understand the users.

Week 3 (1st Nov - 8th Nov)

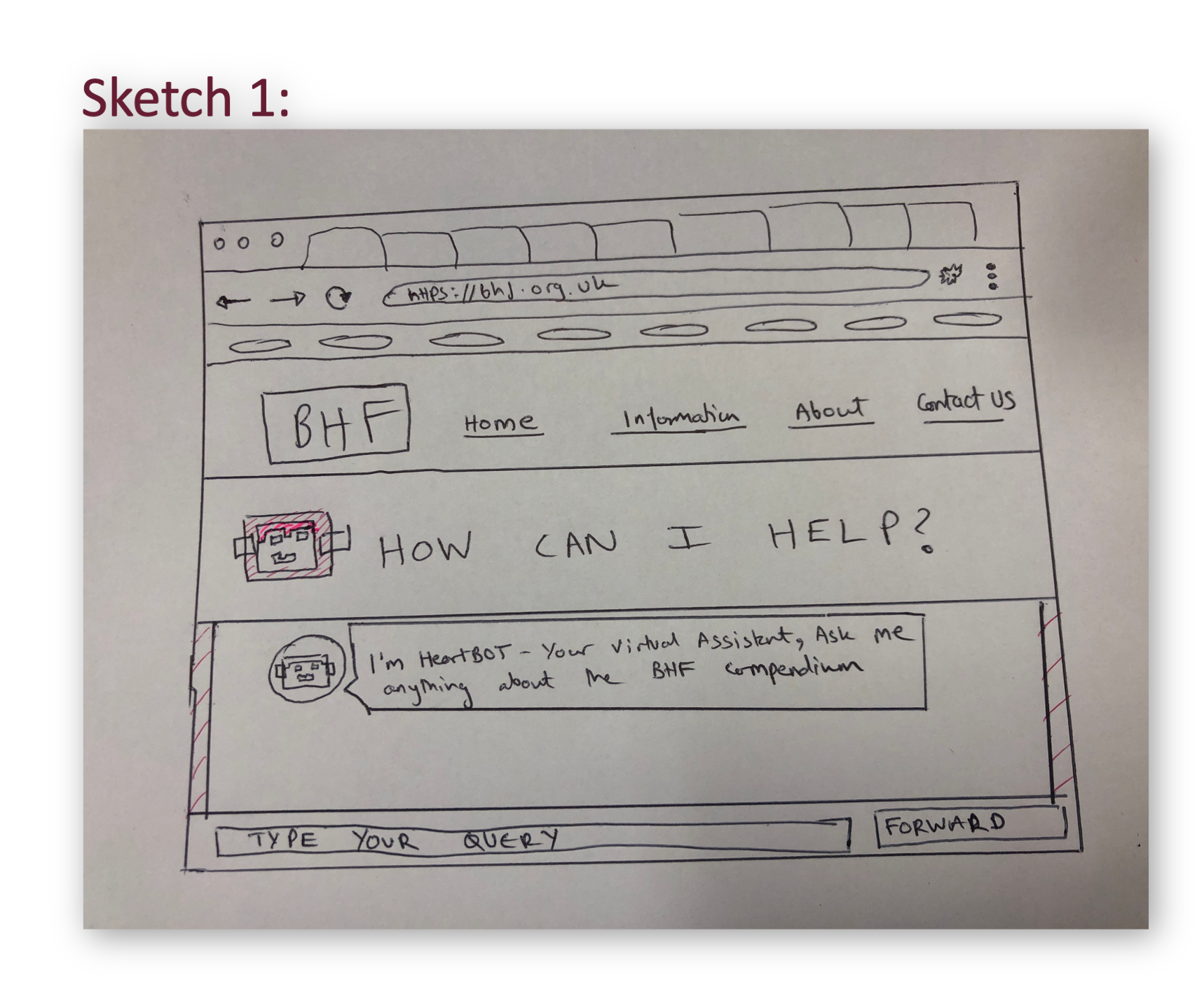

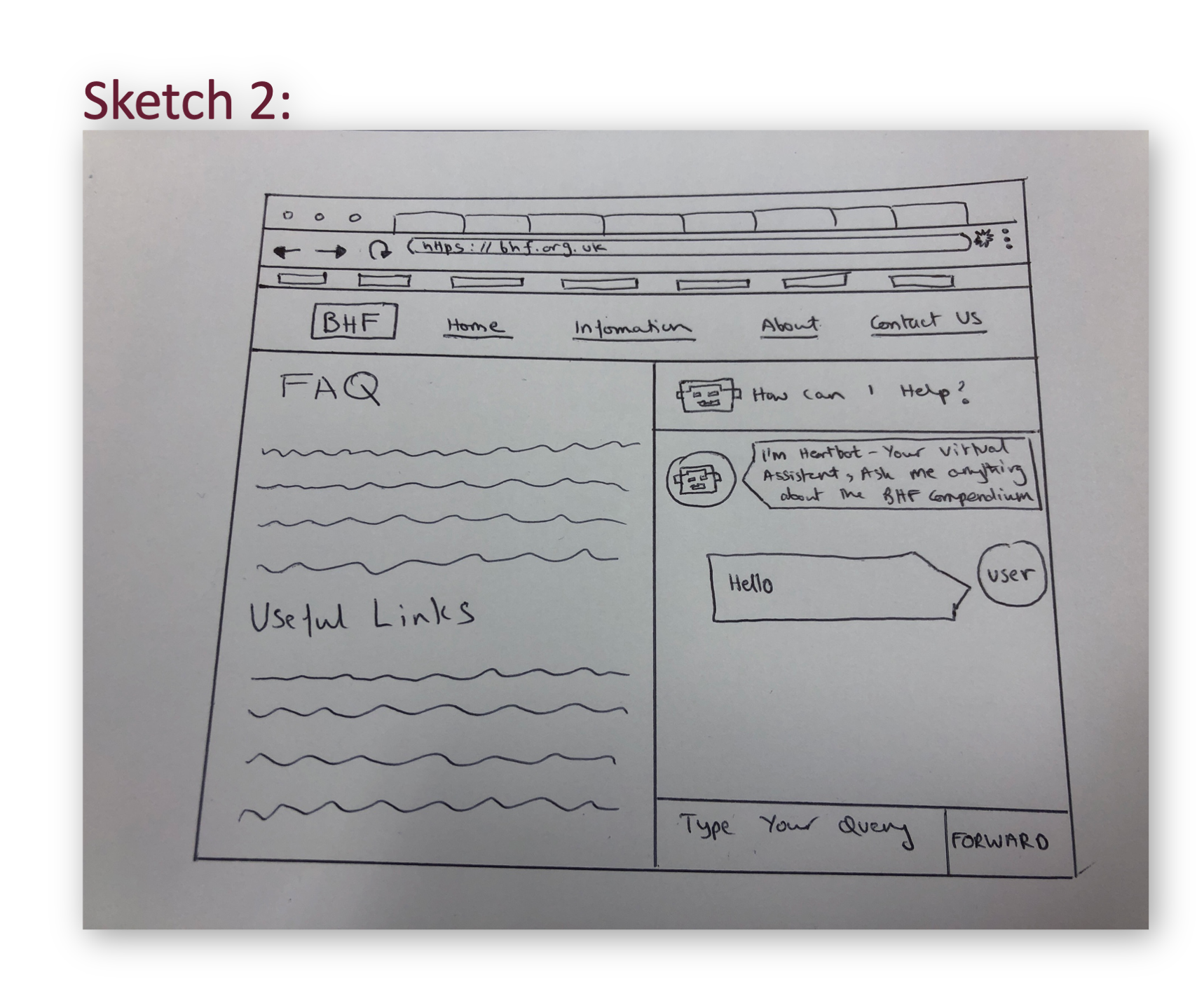

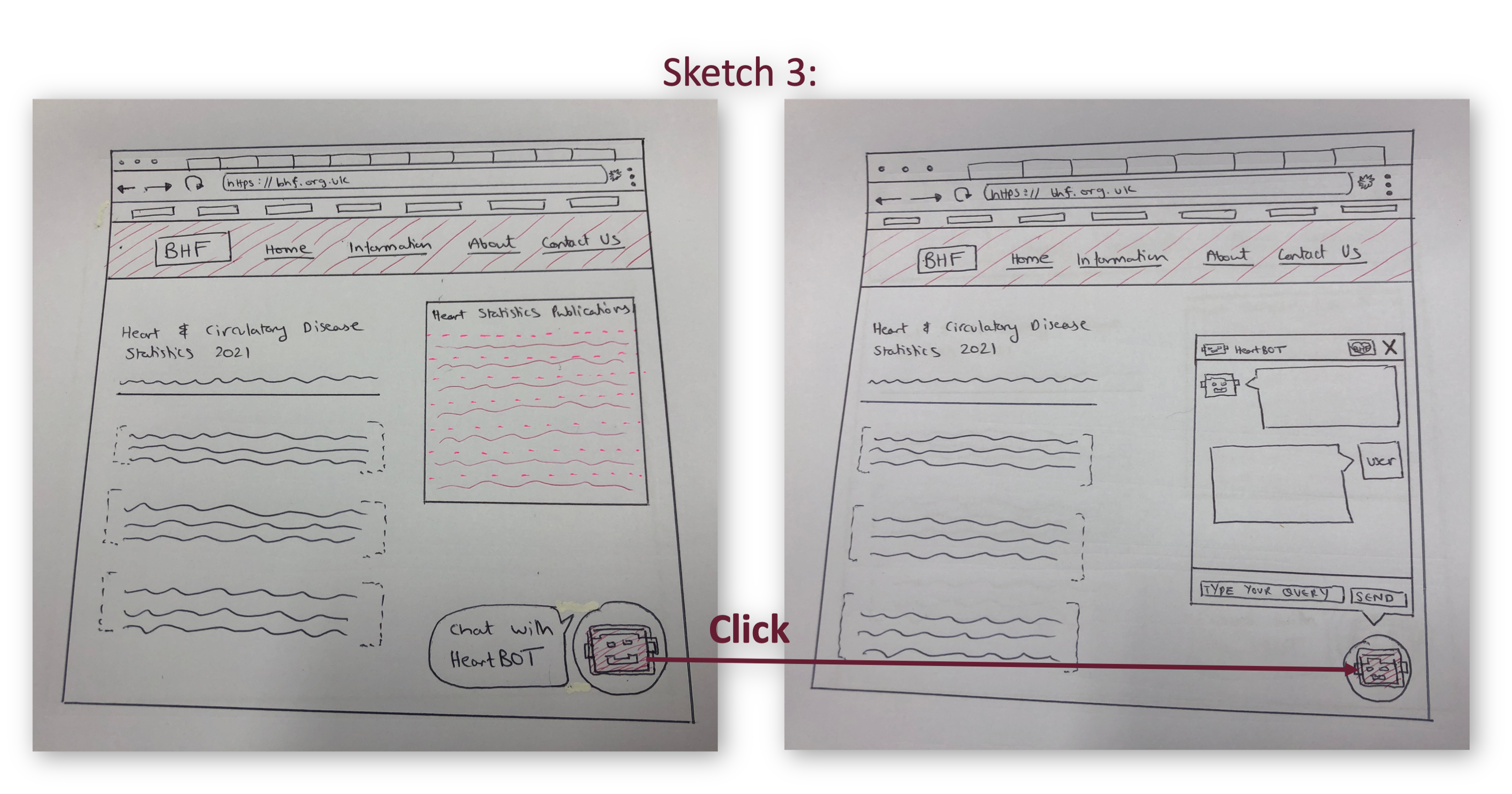

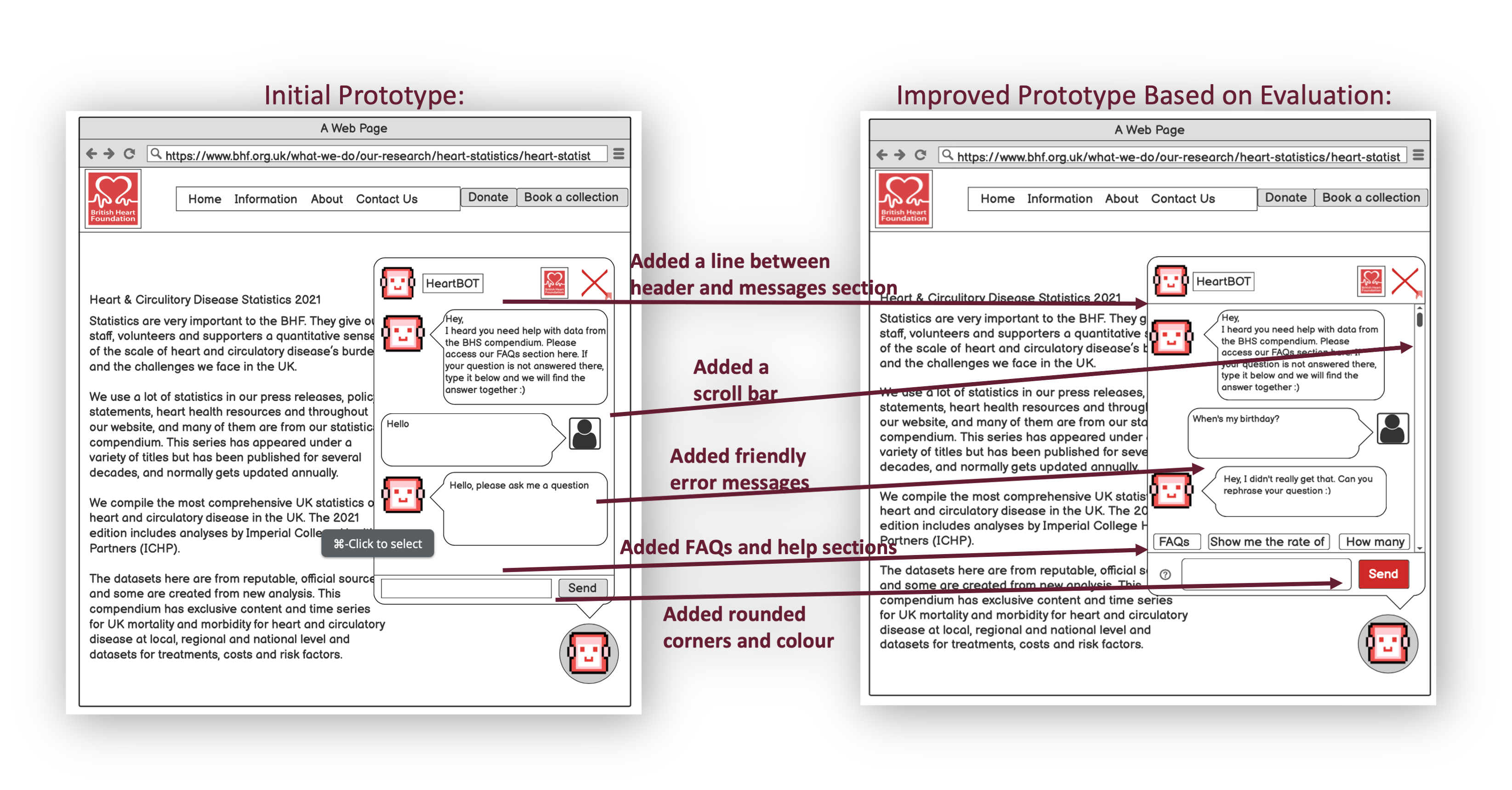

This week we started working on sketches. We sketched out some possible user interfaces that we could have and then showed them to our classmates/pseudo users. They preferred one of them over the others. We used that sketch (sketch number 3) to make a prototype using Balsamiq. We then showed this to our pseudo users and our client and then used their feedback and heuristics to improve our prototype.

Week 4 (8th Nov - 15th Nov)

This week we worked on our HCI (Human Computer Interaction) report.

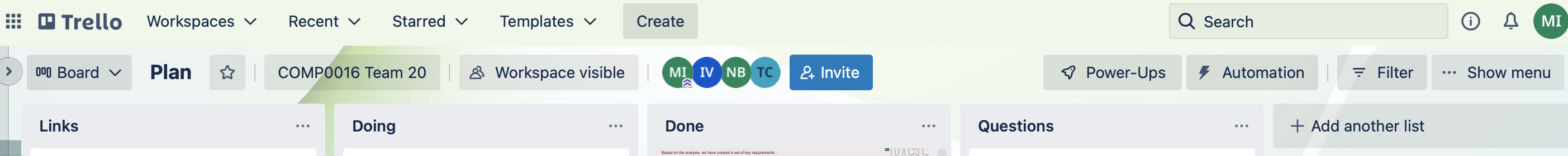

We made a Trello board to keep track of tasks and roles within the team, and we researched libraries, websites and chatbots.

Week 5 (15th Nov - 22nd Nov)

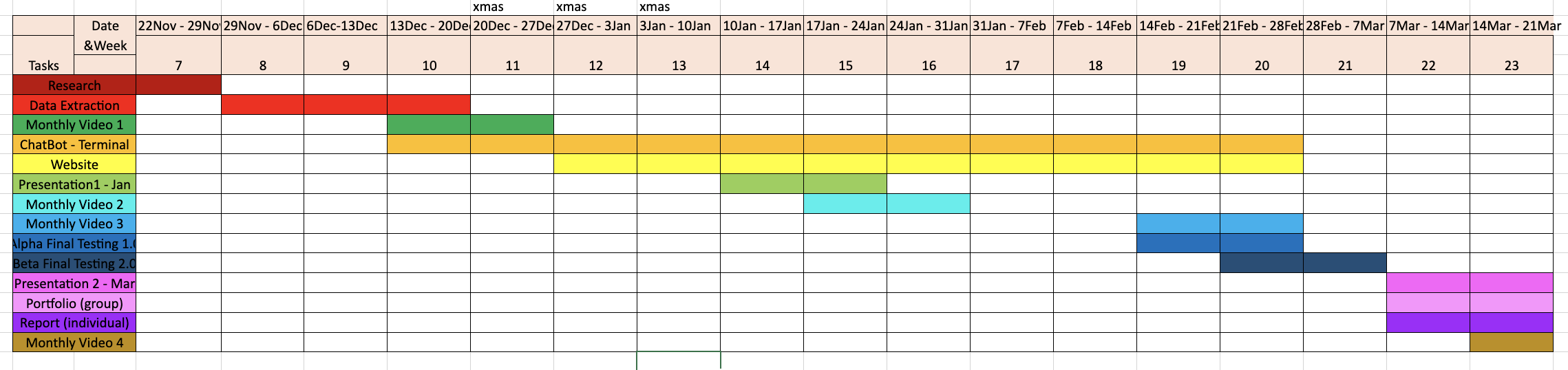

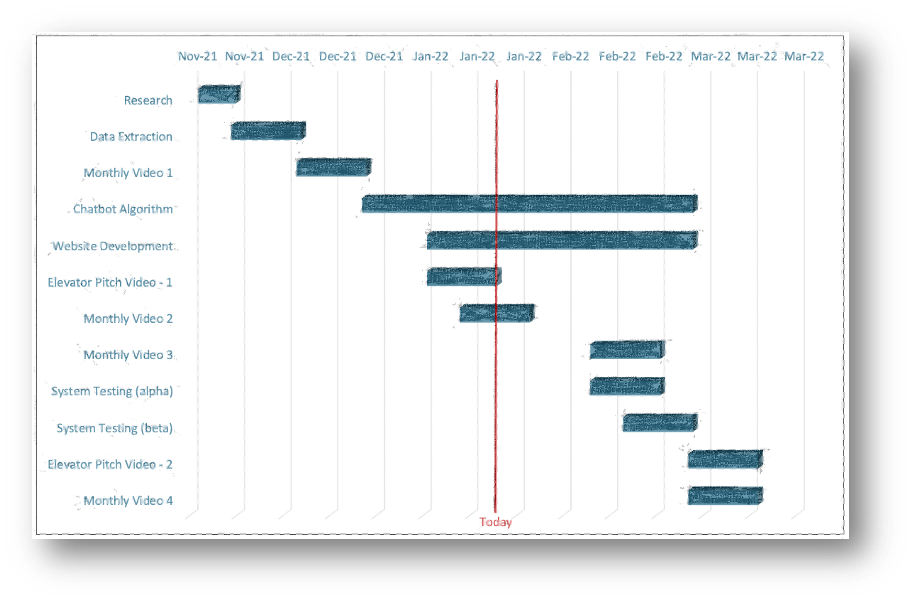

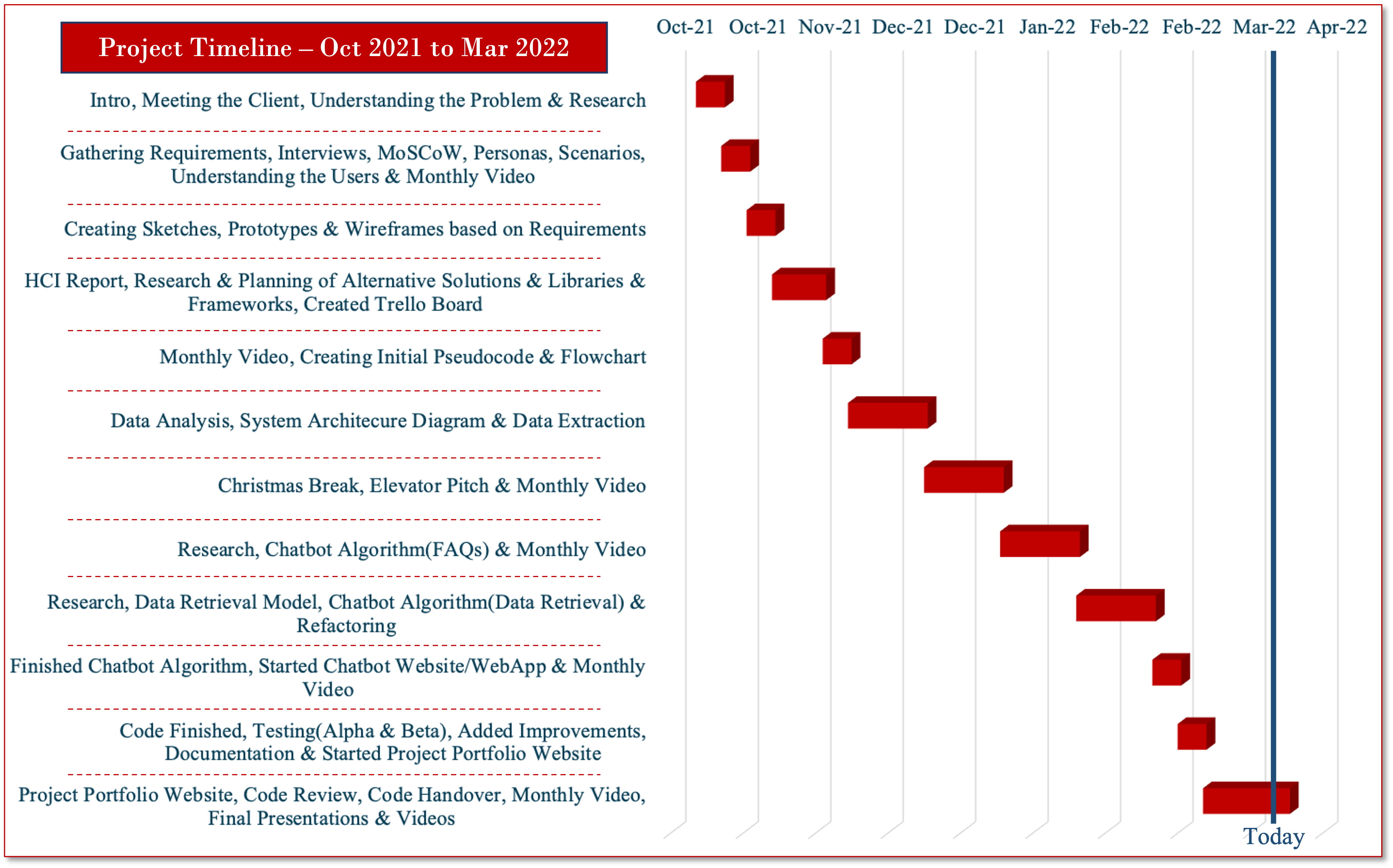

We made a Gantt chart to plan our project ahead and make the most of the time we have by splitting it up into different tasks. This helped us determine how long to give each task, effectively divide tasks and manage dependencies between different tasks.

Week 6 (22nd Nov - 29th Nov)

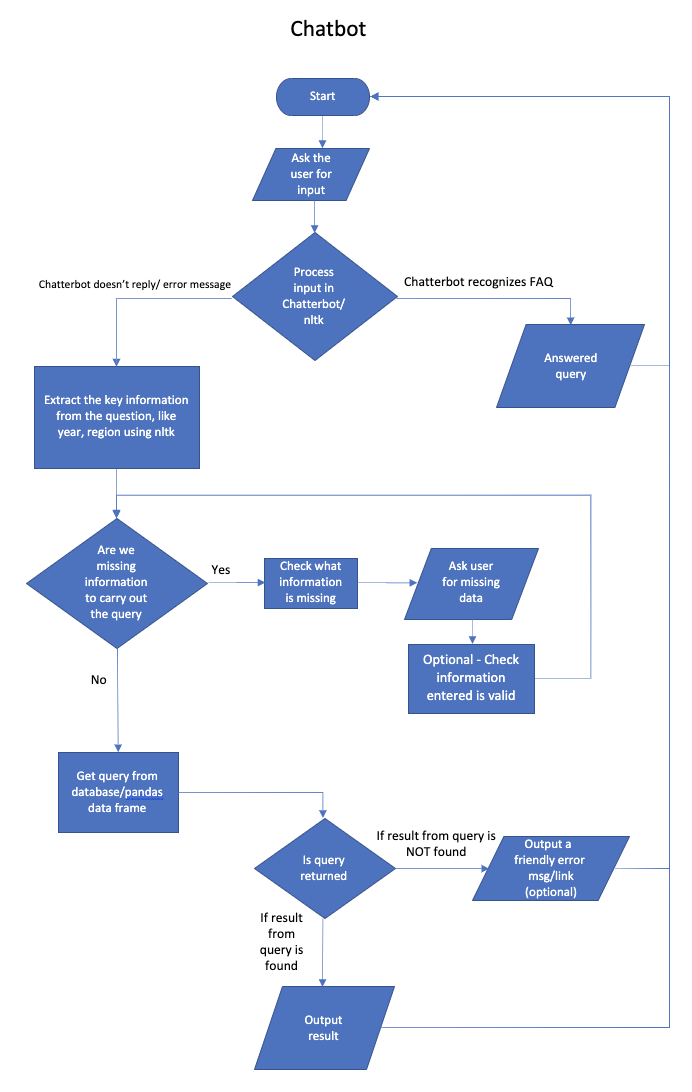

This week we started writing pseudocode and making flowcharts for different functions of the chatbot. This helped us visualise our code and how the data will flow through the program, determine some potential problem points and better understand the code.

Week 7 (29th Nov - 6th Dec)

We started to analyse the data compendium in detail to better understand it. During this process, we found that the data was not standardised, which meant we could not parse it in our code. We researched and experimented with different techniques to standardise the data but could not find a straightforward solution in the limited time we had. Therefore, we decided to contact our client.

Week 8 (6th Dec - 13th Dec)

We had a meeting with our client about the data and fortunately they said they have all the data in a standardised table format which solved our problem. They provided us with some sample data and FAQs for us to start working on.

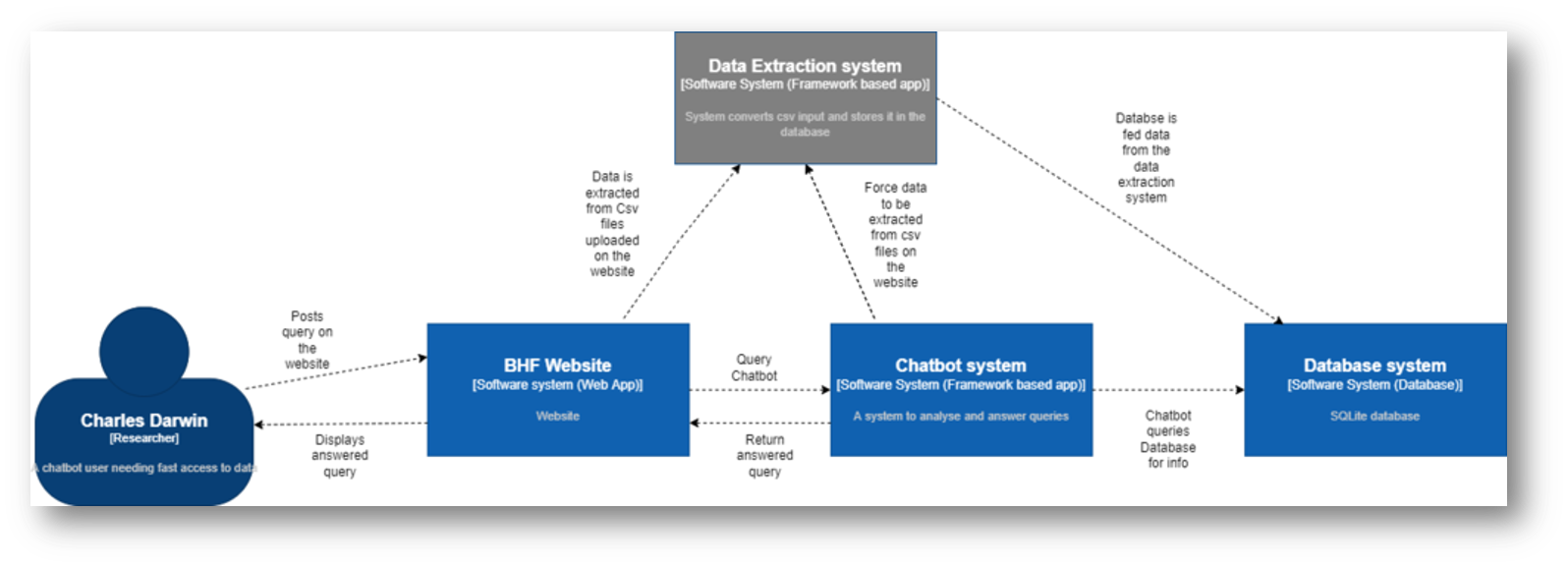

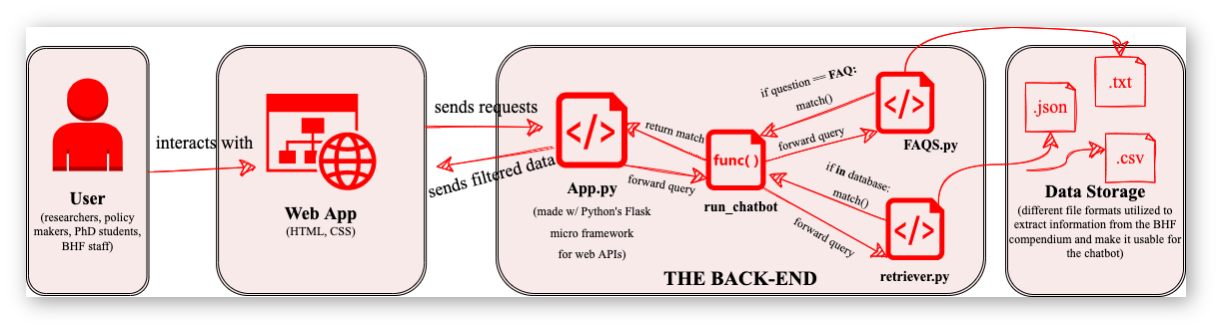

Since we now had everything we needed, we started to work on an architecture diagram. This helps abstract our system and shows us the relationships, constraints and boundaries between the different components in our code.

Week 9 (13th Dec - 20th Dec)

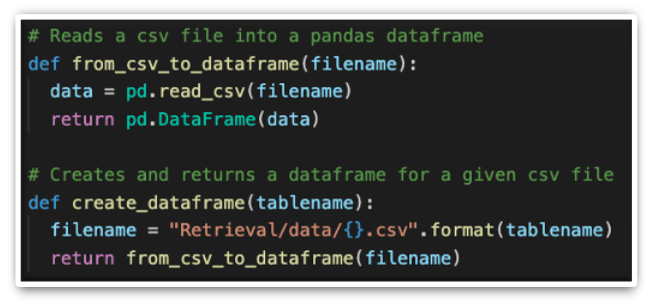

Since we now had the sample data from our client, we started working on data extraction. We implemented this by converting the Excel data into CSV files. Then we loaded these CSV files into pandas data frames and wrote the contents of each data frame to a sqlite3 database. We can now retrieve data from this database using SQL queries.
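
A minimal sketch of this pipeline is shown here. It is not our project code: the table name, column names and numbers are made up for illustration, and the CSV text stands in for a file exported from an Excel sheet.

```python
import io
import sqlite3

import pandas as pd

# Made-up sample standing in for one CSV file exported from the Excel workbook.
CSV_TEXT = """region,year,deaths
England,2019,100
Scotland,2019,20
Wales,2019,10
"""


def load_csv_into_sqlite(csv_source, table_name, connection):
    """Read a CSV into a pandas data frame and copy it into a SQLite table."""
    frame = pd.read_csv(csv_source)
    # if_exists="replace" keeps the sketch re-runnable; index=False skips the row index.
    frame.to_sql(table_name, connection, if_exists="replace", index=False)
    return frame


connection = sqlite3.connect(":memory:")  # in-memory database, for the example only
load_csv_into_sqlite(io.StringIO(CSV_TEXT), "deaths_by_region", connection)

# Retrieve data with an ordinary parameterised SQL query.
rows = connection.execute(
    "SELECT region, deaths FROM deaths_by_region WHERE deaths > ? ORDER BY deaths DESC",
    (15,),
).fetchall()
print(rows)
```

In practice `csv_source` would be a path to a CSV file on disk rather than an in-memory string.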

Week 10, 11 & 12 (20th Dec - 10th Jan)

We started working on our elevator pitch to present what we’ve done so far in this project. In coordination with the client, we revised our Gantt chart to reflect the current progress and situation of the project.

Week 13 (10th Jan - 17th Jan)

We finished our elevator pitch presentation slides and video. We started writing the chatbot algorithm and experimenting with Chatterbot. We recorded a monthly video to show the progress we’ve made this month and what we’re planning to do next month.

Week 14 (17th Jan - 24th Jan)

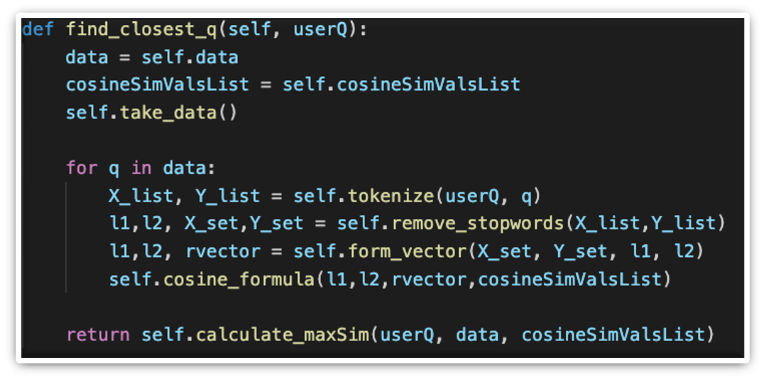

We found that the ChatterBot library was not a good match for our project, specifically for the FAQ chatbot algorithm that we were planning to create. Therefore, we created a modified version of the Chat class in the NLTK library for our algorithm. We also started planning and implementing our FAQ algorithm using natural language processing.
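
To give an idea of the pattern/response approach, here is a small sketch written with only the standard library. It is loosely modelled on the idea behind the NLTK Chat class (a list of regular-expression patterns paired with responses) and is not our modified class; the class name `PatternChat`, the patterns and the replies are all hypothetical.

```python
import re


class PatternChat:
    """Tiny pattern/response matcher: the first pattern that matches wins."""

    def __init__(self, pairs):
        # Each pair is (regular expression, response text).
        self._pairs = [(re.compile(pattern, re.IGNORECASE), reply) for pattern, reply in pairs]

    def respond(self, text):
        for pattern, reply in self._pairs:
            if pattern.search(text):
                return reply
        return None  # no pattern matched; the caller decides what to do next


# Hypothetical conversation pairs, for illustration only.
PAIRS = [
    (r"\b(hi|hello|hey)\b", "Hello! Ask me about heart statistics."),
    (r"\b(bye|goodbye)\b", "Goodbye!"),
]

bot = PatternChat(PAIRS)
print(bot.respond("Hello there"))
print(bot.respond("asdf"))
```

Returning `None` when nothing matches makes it easy to hand the question on to another component.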

Week 15 (24th Jan - 31st Jan)

We finished implementing the FAQ chatbot algorithm using NLTK, following the approach described in week 14. In addition to the NLTK Chat class, we created a best match class that finds the best matching FAQ using cosine similarity, since users may ask the same question in more than one way. Next week we plan to start designing a model to answer data-specific questions.
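
The idea behind the best match class can be sketched as follows. This is an illustration under assumptions, not our actual class: it uses simple word counts as vectors, the 0.5 threshold is arbitrary, and the FAQ entries and answers are placeholders.

```python
import math
import re
from collections import Counter


def vectorise(text):
    """Turn text into a bag-of-words vector (word -> count)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_faq(question, faqs, threshold=0.5):
    """Return the answer of the most similar FAQ, or None if nothing is close enough."""
    query = vectorise(question)
    best_answer, best_score = None, 0.0
    for known_question, answer in faqs.items():
        score = cosine_similarity(query, vectorise(known_question))
        if score > best_score:
            best_answer, best_score = answer, score
    return best_answer if best_score >= threshold else None


# Placeholder FAQ entries, for illustration only.
FAQS = {
    "What is the BHF?": "(answer about the BHF)",
    "Where does the data come from?": "(answer about data sources)",
}

print(best_faq("what is bhf", FAQS))
print(best_faq("tell me a joke", FAQS))
```

The threshold stops the bot from answering with an unrelated FAQ when the question shares only a word or two with it.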

Week 16 (31st Jan - 7th Feb)

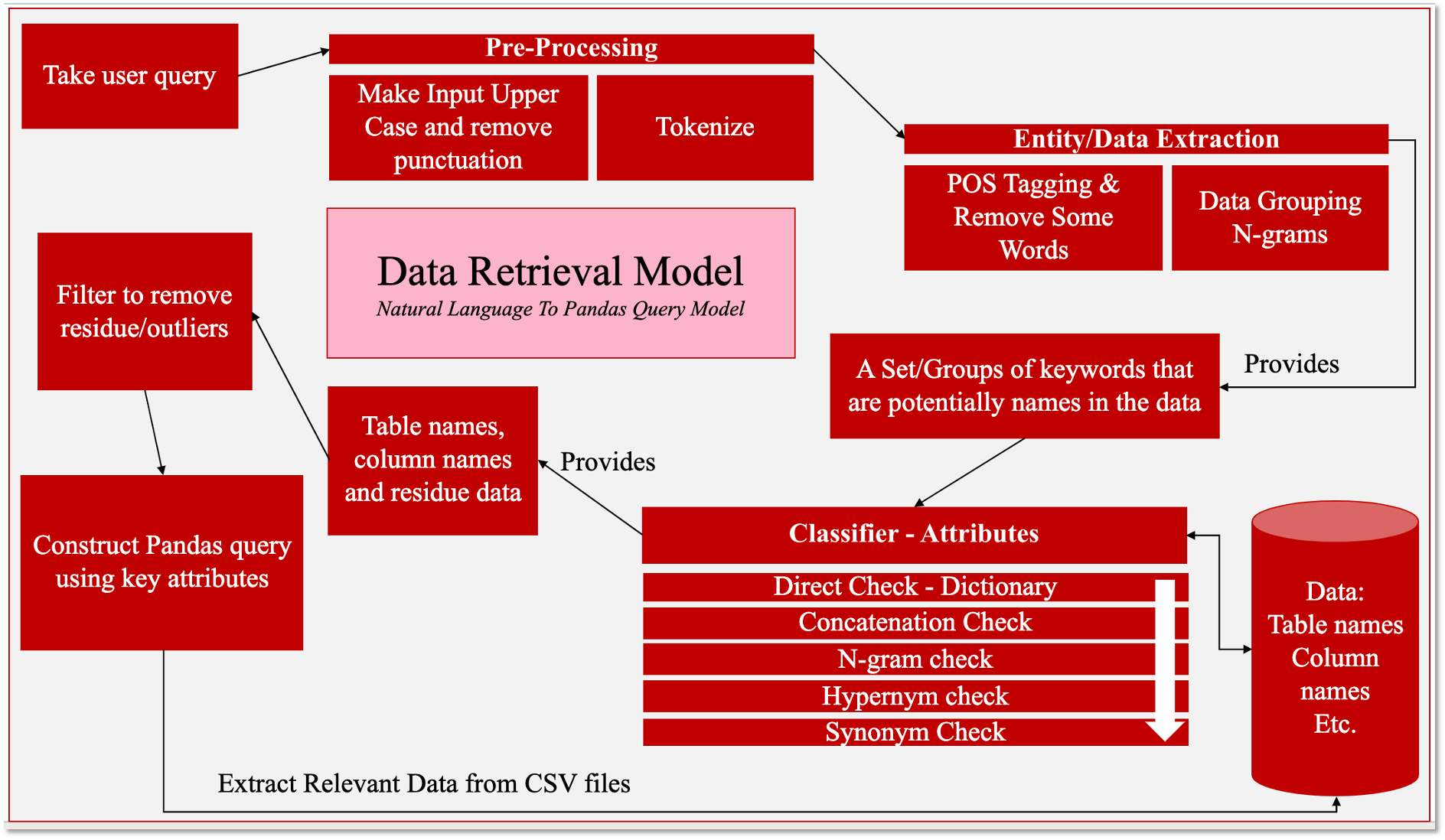

We researched different methods that could be used to convert questions written in natural language to SQL queries in order to build on our chatbot.

Week 17 (7th Feb - 14th Feb)

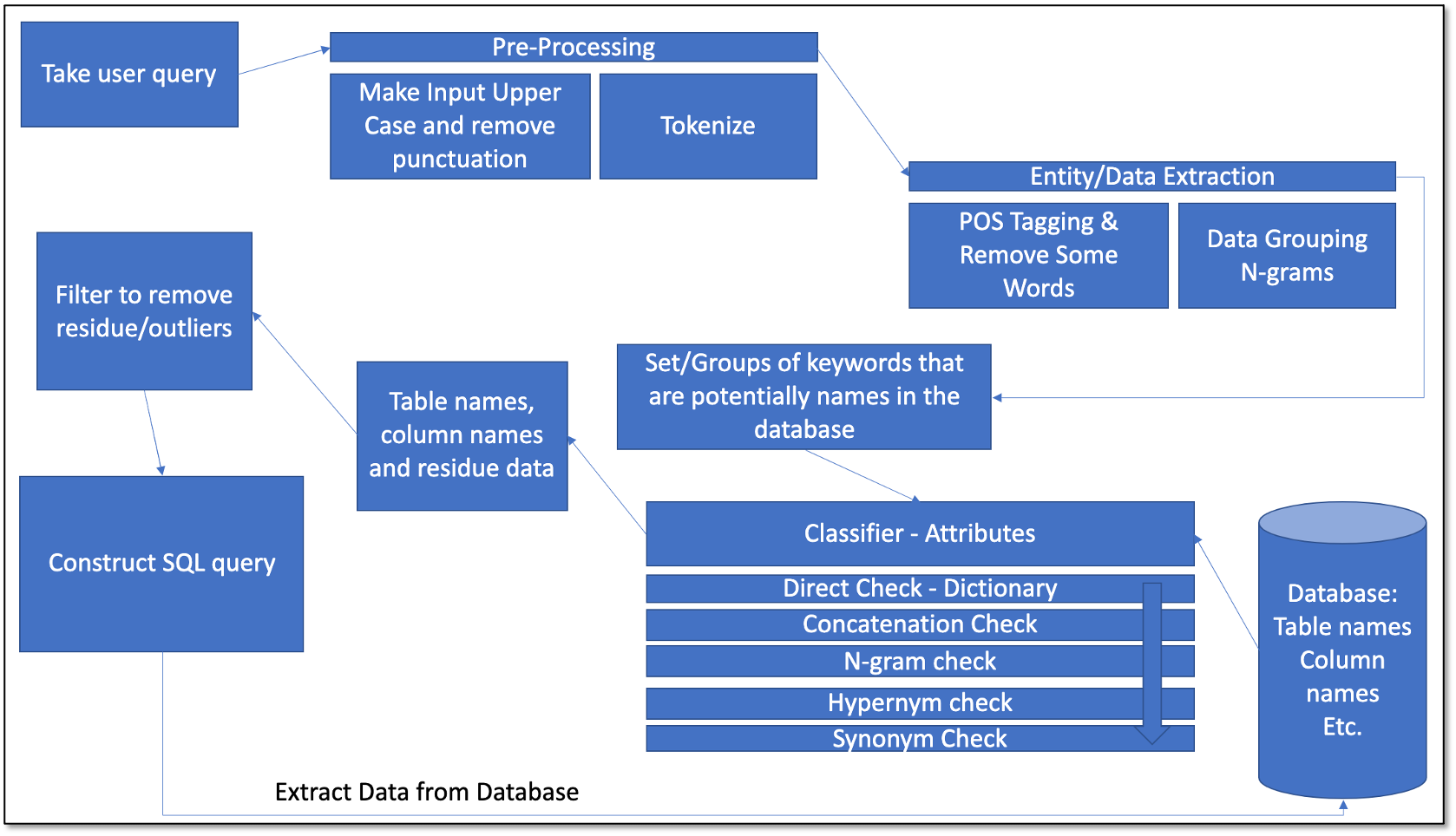

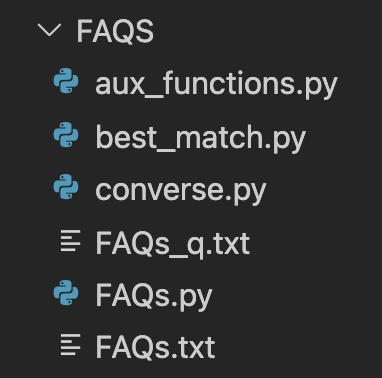

After extensive research, we created a custom model to convert users’ questions into SQL queries. We refactored the code we had for the FAQs section and added different classes in order to organise our code better.

Week 18 (14th Feb - 21st Feb)

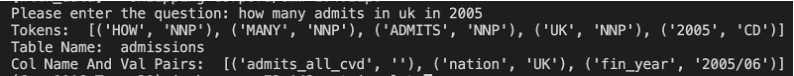

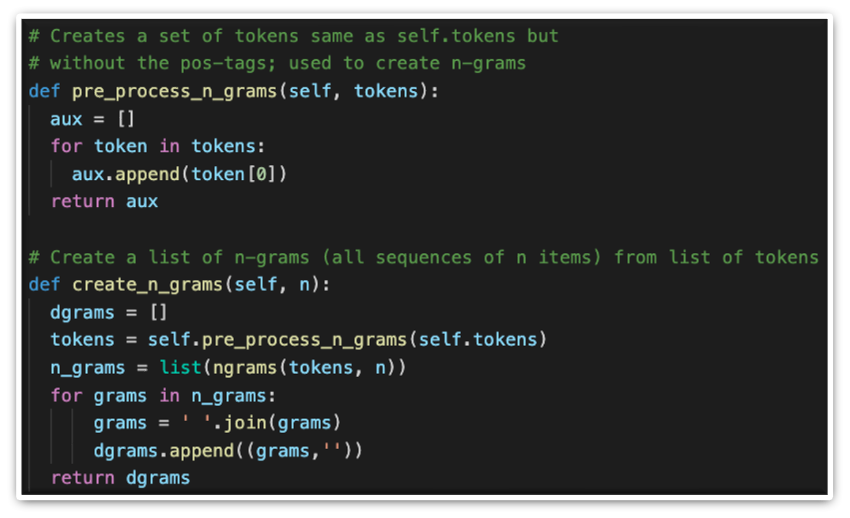

We started implementing the model we created. This week we began by taking the user query and pre-processing it: we standardised the input, split it into tokens and then removed stop words and punctuation, which are uninformative. Additionally, we added POS tagging to each token to identify the individual token types. While we do not plan to use this in our current implementation, it might be useful for future development and hence is included in the code to make our project extendable.
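
The pre-processing steps can be sketched like this. To keep the example self-contained it uses a small hand-written stop word set (an assumption; a library list would be much longer) and leaves out POS tagging, which in NLTK needs extra tagger data to be downloaded.

```python
import string

# Small illustrative stop word set; not the list used in our project.
STOP_WORDS = {
    "a", "an", "the", "of", "in", "for", "is", "are", "was", "were",
    "what", "how", "many", "show", "me", "to", "there",
}


def preprocess(question):
    """Lowercase the question, strip punctuation, tokenise and drop stop words."""
    lowered = question.lower()
    without_punctuation = lowered.translate(str.maketrans("", "", string.punctuation))
    tokens = without_punctuation.split()
    return [token for token in tokens if token not in STOP_WORDS]


print(preprocess("How many deaths were there in Scotland in 2019?"))
```

The remaining tokens are the ones the later steps try to match against table names, column names and column values.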

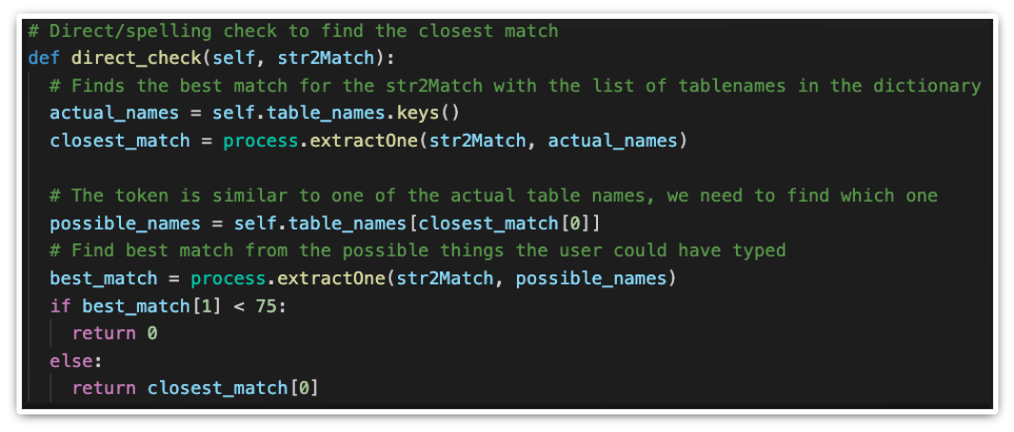

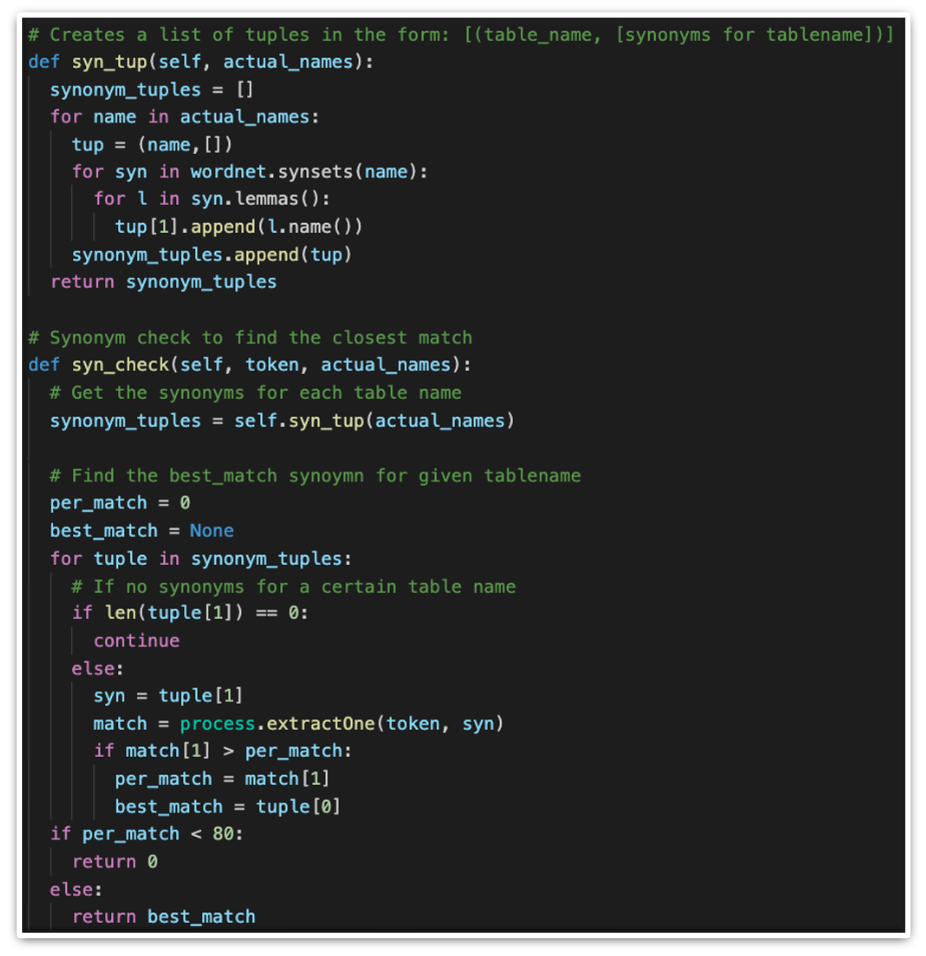

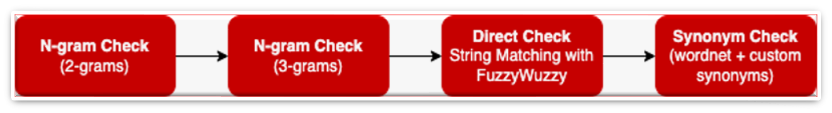

We continued implementing the model by extracting a table name from the tokens so that we can filter through the tables in our data. To do this, we created a layered classifier which uses a library called fuzzywuzzy to find the best match table name. In addition to this, the classifier also checks for synonyms.
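
A sketch of a layered classifier of this kind follows. It is only an illustration: it uses `difflib` from the standard library as a stand-in for fuzzywuzzy, the order of the layers (synonyms first, then fuzzy matching) and the threshold of 80 are assumptions, and the table names and synonyms are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical table names and synonyms, for illustration only.
TABLES = ["deaths", "prevalence", "hospital admissions"]
SYNONYMS = {"mortality": "deaths", "fatalities": "deaths"}


def similarity(a, b):
    """Similarity score from 0 to 100 (difflib stand-in for a fuzzy ratio)."""
    return SequenceMatcher(None, a, b).ratio() * 100


def find_table(tokens, threshold=80):
    """Return the best matching table name for the tokens, or None."""
    # Layer 1: a token that is a known synonym maps straight to its table.
    for token in tokens:
        if token in SYNONYMS:
            return SYNONYMS[token]
    # Layer 2: fuzzy match every token against every table name.
    best_name, best_score = None, 0.0
    for token in tokens:
        for name in TABLES:
            score = similarity(token, name)
            if score > best_score:
                best_name, best_score = name, score
    return best_name if best_score >= threshold else None


print(find_table(["mortality", "scotland"]))
print(find_table(["prevalance", "england"]))  # misspelling still matches
print(find_table(["xyz"]))
```

The fuzzy layer is what lets a misspelt word such as "prevalance" still find the right table.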

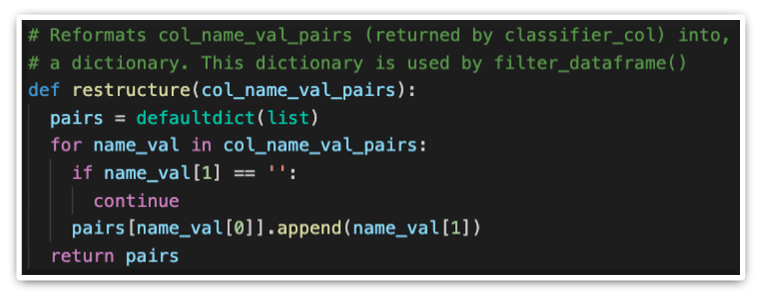

We also implemented a classifier, similar to the table name extractor, for the column names and column values in the tokens in order to identify what the user has specified. In addition to best match and synonyms, we also added an n-gram check to ensure that column names/values made up of more than one word match the right tokens. We refactored all of this code into separate classes.
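
The n-gram check can be illustrated with the sketch below. It tries the longest word sequences first so that a multi-word value is matched as a whole before any of its words can match on their own. The function name, the set of known values and the maximum length of 3 are assumptions made for the example.

```python
def match_values(tokens, known_values, max_n=3):
    """Match token n-grams against known values, longest n-grams first."""
    matches = []
    used = set()  # indices of tokens already claimed by a longer match
    for n in range(max_n, 0, -1):
        for start in range(len(tokens) - n + 1):
            span = range(start, start + n)
            if any(index in used for index in span):
                continue
            candidate = " ".join(tokens[start:start + n])
            if candidate in known_values:
                matches.append(candidate)
                used.update(span)
    return matches


# Hypothetical column values, for illustration only.
KNOWN_VALUES = {"northern ireland", "ireland", "heart failure", "2019"}

tokens = ["heart", "failure", "northern", "ireland", "2019"]
print(match_values(tokens, KNOWN_VALUES))
```

Without the longest-first rule, "ireland" would also be reported as a separate match even though the user asked about "northern ireland".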

Week 19 (21st Feb - 28th Feb)

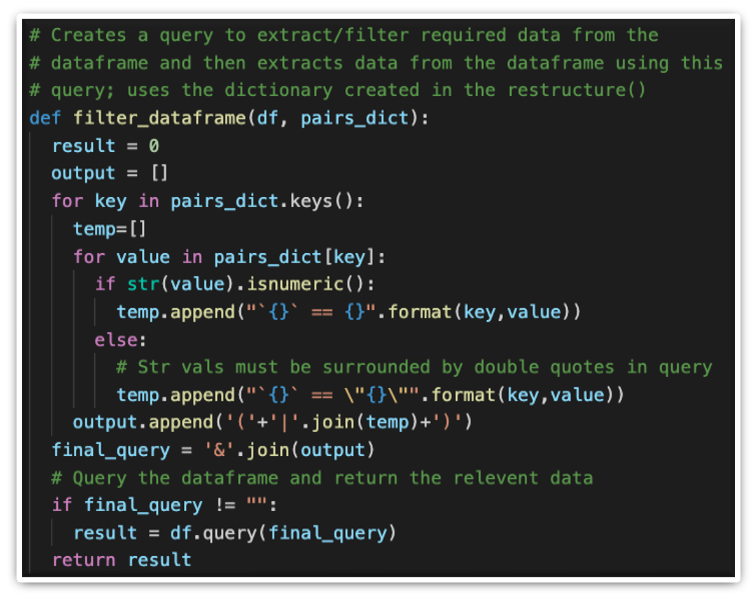

This week, since we had extracted the key information from our tokens, we were ready to construct the query. From the start of the project we had planned to use SQL queries and the SQLite database. However, we found that it would be better to use pandas data frames along with pandas queries. Hence, we took the key information extracted from the user question and constructed a pandas query, which returns a filtered pandas data frame with the information the user asked for. Note: The data retrieval model was slightly changed here.
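
As a rough sketch of this step, the extracted column/value pairs can be joined into a query string and passed to `DataFrame.query`. The data frame contents, the `filters` dictionary and the helper names are made up for the example, and the string building is deliberately simple (it assumes plain column names and values without quotes in them).

```python
import pandas as pd

# Made-up table standing in for one of the standardised data tables.
TABLE = pd.DataFrame(
    {
        "region": ["England", "Scotland", "Wales", "England"],
        "year": [2019, 2019, 2019, 2020],
        "deaths": [100, 20, 10, 110],
    }
)


def build_query(filters):
    """Join column/value pairs into a pandas query string."""
    return " and ".join(f"{column} == {value!r}" for column, value in filters.items())


def retrieve(frame, filters):
    """Return the rows of the frame that satisfy every filter."""
    if not filters:
        return frame
    return frame.query(build_query(filters))


filters = {"region": "Scotland", "year": 2019}  # as if extracted from the question
print(build_query(filters))
result = retrieve(TABLE, filters)
print(result)
```

Working on data frames directly means the same filtered frame can be handed to the front-end for display without an extra database round trip.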

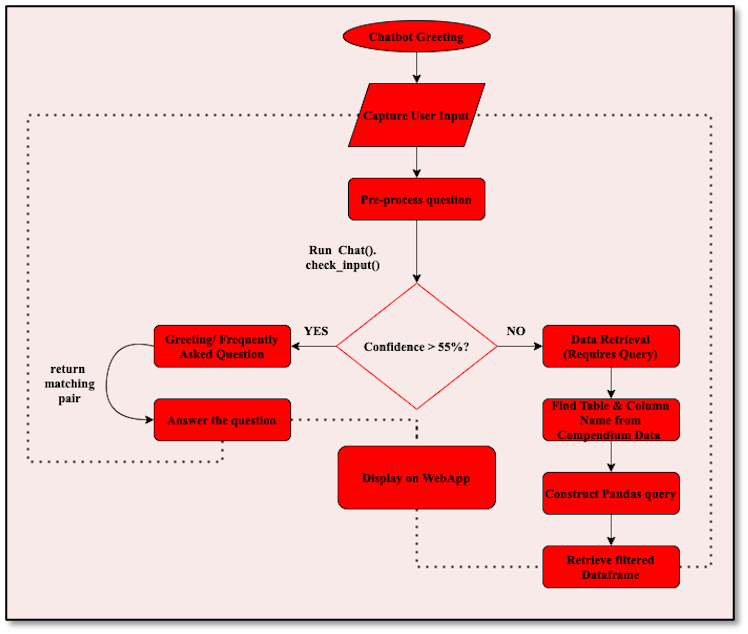

After we had both the FAQs and retrieval algorithms working correctly, we integrated them together. Now, the program works by first checking if the query is a FAQ/general greeting and if it is not, it runs the retrieval algorithm. If no match is found by the retrieval algorithm, a friendly error message is printed. If a match is found, a filtered data frame is printed.
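
The control flow described above can be sketched as a small dispatcher. The two lookup functions here are hypothetical stand-ins for the FAQ and retrieval algorithms, and the wording of the error message is invented.

```python
FALLBACK_MESSAGE = "Sorry, I could not find that. Could you try rephrasing your question?"


def answer(question, faq_lookup, data_lookup):
    """Try the FAQ matcher first, then data retrieval, then fall back to an error message."""
    faq_answer = faq_lookup(question)
    if faq_answer is not None:
        return faq_answer
    data_answer = data_lookup(question)
    if data_answer is not None:
        return data_answer
    return FALLBACK_MESSAGE


# Hypothetical stand-ins for the two algorithms, for illustration only.
def fake_faq_lookup(question):
    return "Hello!" if "hello" in question.lower() else None


def fake_data_lookup(question):
    return "(filtered data frame)" if "deaths" in question.lower() else None


print(answer("Hello", fake_faq_lookup, fake_data_lookup))
print(answer("Deaths in Scotland", fake_faq_lookup, fake_data_lookup))
print(answer("asdf", fake_faq_lookup, fake_data_lookup))
```

Passing the two lookups in as arguments keeps the dispatcher easy to test on its own.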

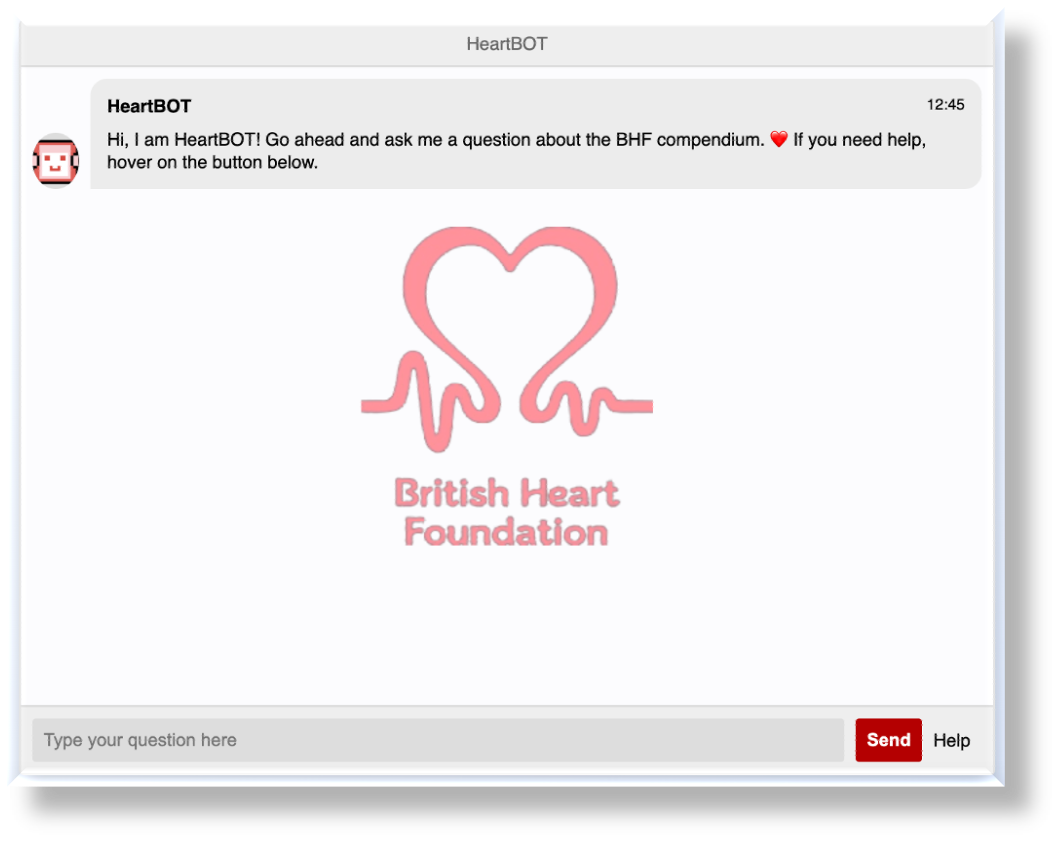

Once all the back-end code was completed, we started implementing the front-end of our project, which is a website which runs the chatbot. This is important because it allows users to easily interact with our chatbot.

We did research on website chatbot implementations and found a template to follow. We emailed the owner and got permissions to use and modify the template for our project. Modifications we made include:

- Added CSS styling and restructured the Python code to allow us to print the data frames

- Modified the HTML to add icons and pictures

Week 20 (28th Feb - 7th March)

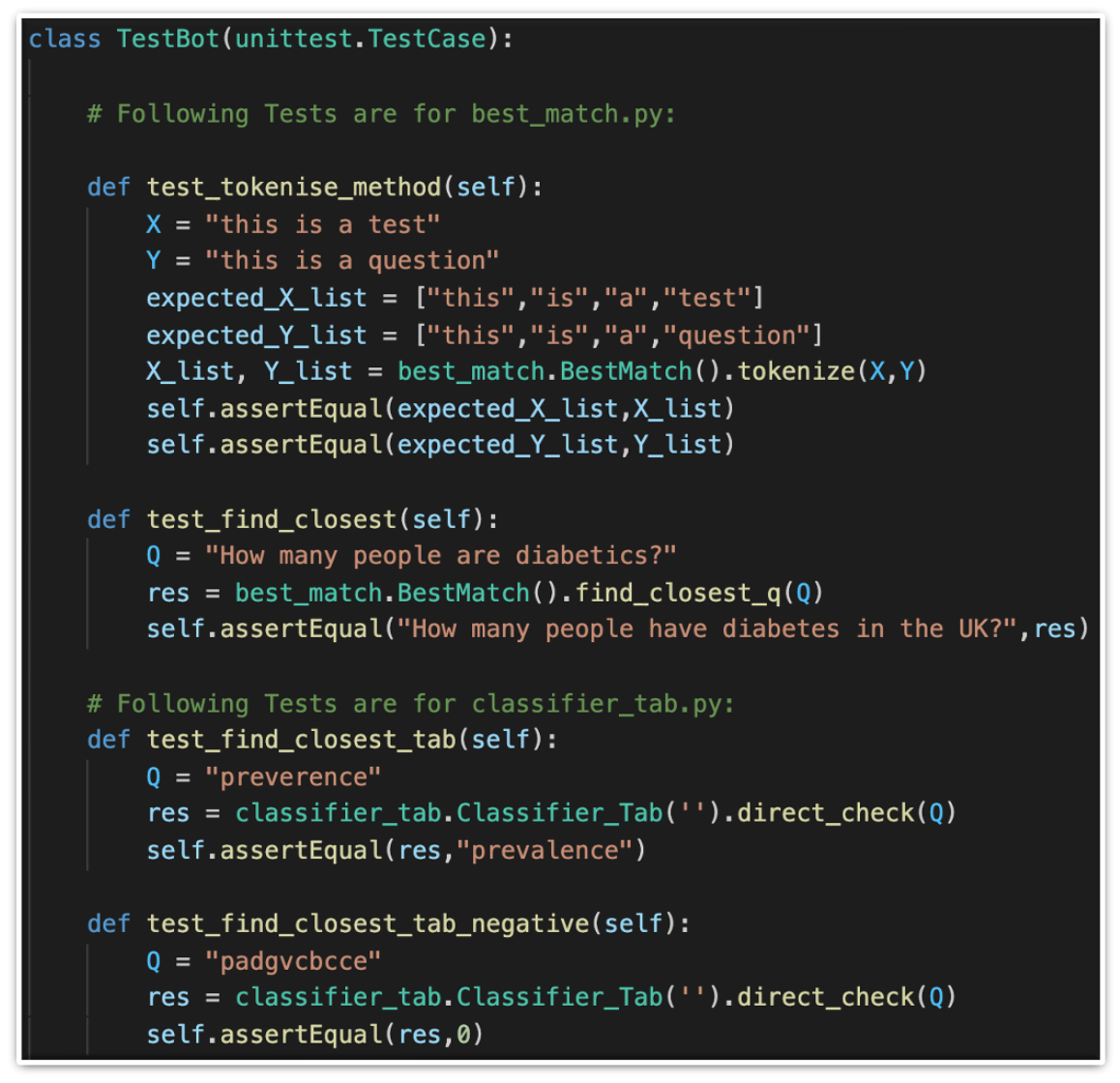

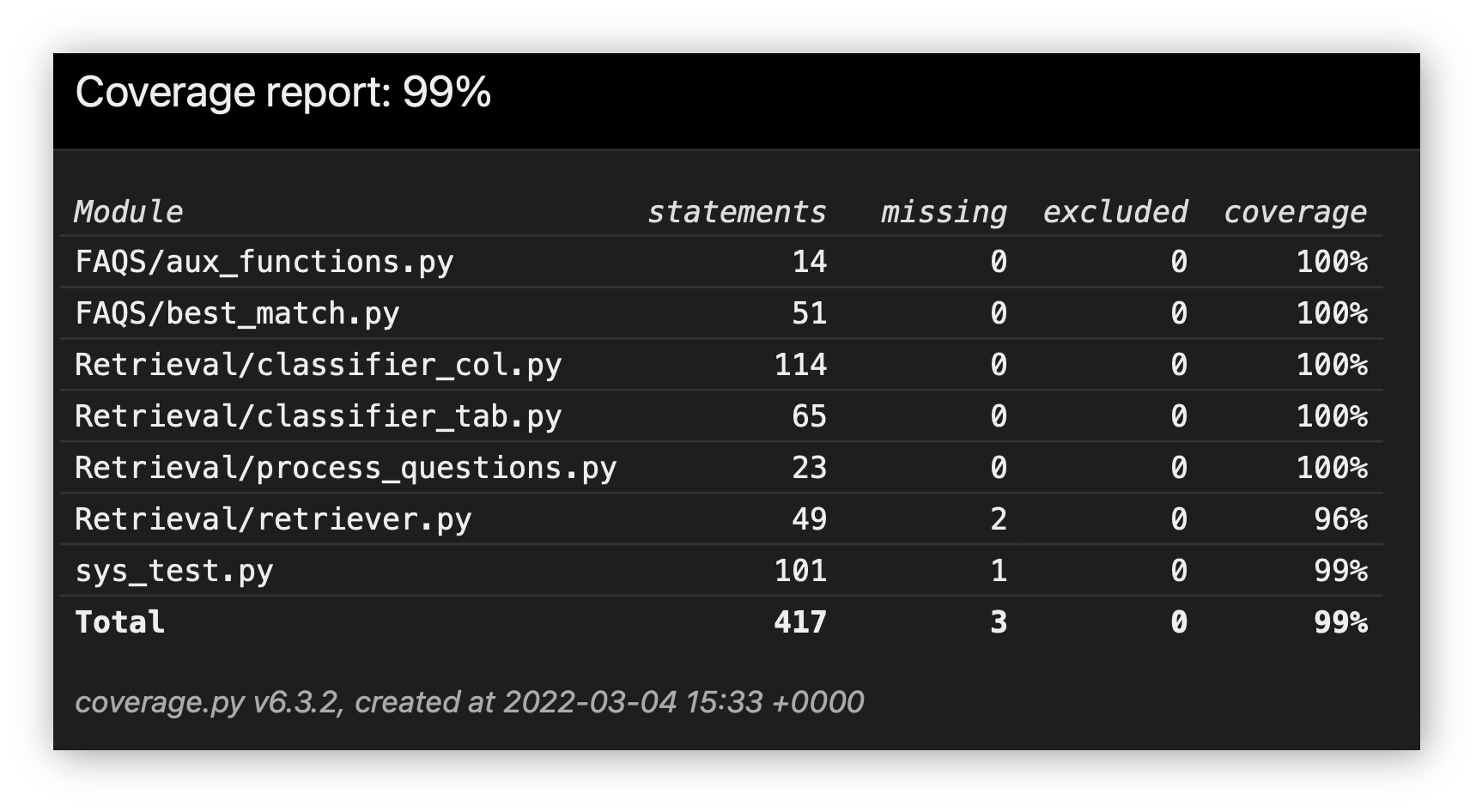

After finishing all of the code, we tested it. We created unit and system tests. We unit tested all of our main functionality and tested the overall system for all possible scenarios when it comes to user input. We got a test coverage of 99%.

We also did user acceptance testing since our project was based on user requirements. We asked our client and some pseudo users to try some test cases and give us feedback, which we used to improve our chatbot. For example, we added more FAQs, synonyms and conversation pairs, and edited the error messages.

We also added a help button which would guide users in the scenario where they are unsure about what to ask. We started creating our project portfolio website.

Week 21 (7th March - 14th March)

This week, we continued creating our project portfolio website. We added different pages and filled in content. In addition to this, we started making the documentation and manuals required for the project handover.

Week 22 (14th March - 21st March)

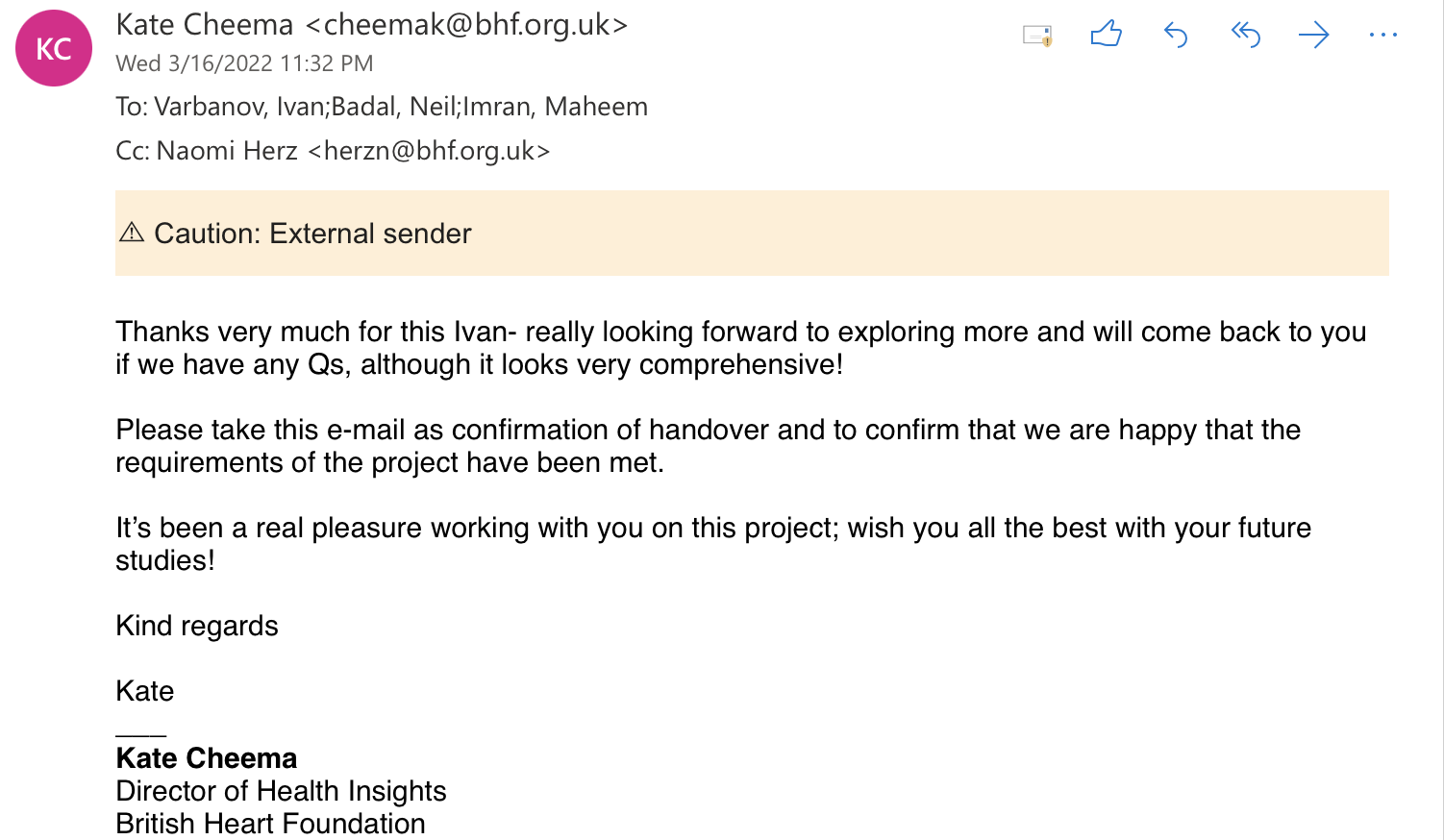

We had our code review and handover with our client and handed in the code for the chatbot. The client was happy and satisfied with HeartBOT. We continued working on our project portfolio website. We started working on our final presentation and prepared some videos that summarised our project and progress.

Week 23 (21st March - 28th March)

We had our final presentation. We continued working on the project portfolio and videos.

Week 24 (28th March - 4th April)

We finished our project portfolio (website, videos and code) and handed it in. We had our demos with our TA and professor. We started working on our individual reports.

Final Diagrams

Throughout our project we had to modify our data retrieval model and our project Gantt chart as the project required. Here is what the final diagrams looked like:

Final Gantt Chart

Final Data Retrieval Model

Final System Architecture

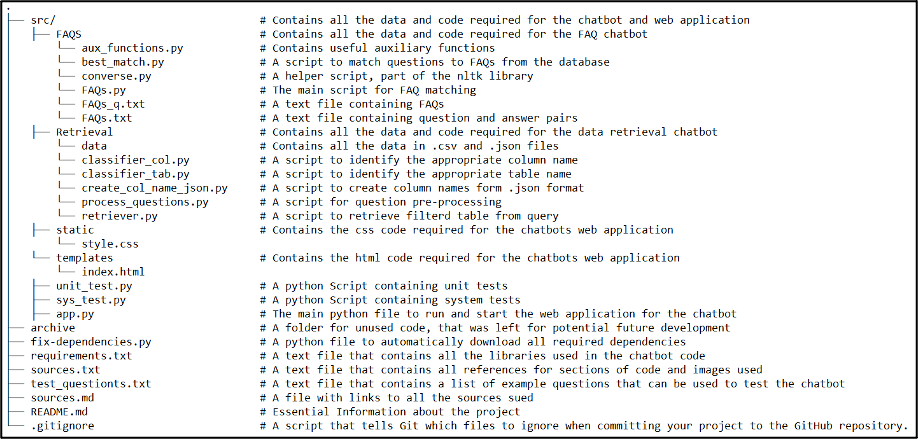

Final Project Structure

Final Flow Chart

References

- Preece, J., Sharp, H., & Rogers, Y. 2015. Interaction design: beyond human-computer interaction. 4th ed. West Sussex: Wiley.

- Pixilart.com. 2021. Make pixel art online - Pixilart. [online] Available at: https://www.pixilart.com/draw?ref=home-page# [Accessed 6 November 2021].

- BHF logo. [image] Available at: https://www.dpag.ox.ac.uk/images/logos/bhf-logo/view [Accessed 6 November 2021].

- Down House, Kent, UK/Bridgeman Art Library, 2021. Charles Darwin (1840) by George Richmond… [image] Available at: https://www.neh.gov/humanities/2009/mayjune/feature/darwin-the-young-adventurer [Accessed 6 November 2021].

- traveler1116 / Getty Images, 2021. Engraving of Michael Faraday, 1873. [image] Available at: https://www.thoughtco.com/michael-faraday-inventor-4059933 [Accessed 6 November 2021].

- Balsamiq.cloud. 2021. Balsamiq Cloud. [online] Available at: https://balsamiq.cloud [Accessed 6 November 2021].

- bhf.org. 2021. BHF Statistical Compendium. [online] Available at: https://www.bhf.org.uk/what-we-do/our-research/heart-statistics/heart-statistics-publications/cardiovascular-disease-statistics-2021 [Accessed 6 November 2021].

- Alibaba Cloud Community. 2021. How to Create an Effective Technical Architectural Diagram?. [online] Available at: https://www.alibabacloud.com/blog/how-to-create-an-effective-technical-architectural-diagram_596100 [Accessed 7 December 2021].

- 2021. Programming Flow Charts: Types, Advantages & Examples. [online] Available at: https://study.com/academy/lesson/programming-flowcharts-types-advantages-examples.html [Accessed 7 December 2021].

- Mindtools.com. 2021. Gantt Charts: Planning and Scheduling Team Projects. [online] Available at: https://www.mindtools.com/pages/article/newPPM_03.htm [Accessed 7 December 2021].

- En.wikipedia.org. 2021. Pseudocode - Wikipedia. [online] Available at: https://en.wikipedia.org/wiki/Pseudocode [Accessed 7 December 2021].

- StackEdit. 2021. [online] Available at: https://stackedit.io/ [Accessed 7 December 2021].

Additional references are mentioned in sources.txt in the code.