Final Solution Component Descriptions

Below are high-level descriptions of the functionality of the components included in our final solution. These components are: ChatBot API (Messaging), ChatBot API (Summary), React Component(s), Documentation, and Website.

ChatBot API- Messaging Endpoint

The messaging functionality of the ChatBot API includes sending/receiving messages, delivering error messages, formatting question and answer responses, saving the status of the conversation between the user and the bot, and finding the next appropriate question to continue the conversation with the patient.

When a request is made to this endpoint, a JSON-formatted response is returned to the user:

- If you are a current user with an active session, you will be delivered the next appropriate question, or the end-of-conversation notification.

- If you are a new user, a user will be created in the database, and the conversation will be initialised.

- If you are a current user with no active session, a session will be created and linked to your user_id. The conversation will then begin.

The messaging API updates and maintains the database which stores users along with their corresponding sessions, and the results of the conversation.
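The three cases above can be sketched as a single dispatch function. This is a minimal, framework-free illustration, assuming an in-memory dictionary in place of the database; the names `handle_message`, `USERS` and `FIRST_QUESTIONS` and the JSON keys are hypothetical, and answers are ignored so that each request simply delivers the next queued question.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Session:
    # Hypothetical in-memory stand-in for the Session model.
    session_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    pending: list = field(default_factory=list)  # questions still to be asked


@dataclass
class User:
    # Hypothetical stand-in for ChatBotUser: at most one session at a time.
    user_id: str
    session: Optional[Session] = None


USERS: dict = {}  # stands in for the user table
FIRST_QUESTIONS = ["How are you feeling today?"]  # placeholder core question


def handle_message(user_id: str) -> dict:
    """Return the JSON-style reply for one request to the messaging endpoint."""
    user = USERS.get(user_id)
    if user is None:
        # New user: create them in the "database".
        user = USERS[user_id] = User(user_id)
    if user.session is None:
        # No active session: create one, link it to the user, start the conversation.
        user.session = Session(pending=list(FIRST_QUESTIONS))
    if not user.session.pending:
        # Nothing left to ask: end the session and send the end notification.
        user.session = None
        return {"user_id": user_id, "end_of_conversation": True}
    # Active session: deliver the next appropriate question.
    return {
        "user_id": user_id,
        "session_id": user.session.session_id,
        "question": user.session.pending.pop(0),
        "end_of_conversation": False,
    }
```

For a new user, the first call returns the core question, the second returns the end-of-conversation notification, and a third call starts a fresh session.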

ChatBot API- Summary Endpoint

After a conversation is complete, there needs to be a way of summarising the results, so that conclusions can be drawn from the information gathered from the patient. We therefore created the summary functionality of the API, which returns a structured JSON response containing the questions asked and the corresponding answers.

In future, the summary API could serve multiple purposes: it could act as an API for a doctor interface showing the results of chatbot conversations, or it could be part of a larger knowledge-inference/diagnosis engine.

Administrators or medical professionals can also access the Django Admin panel to see specific results, and alter aspects of the chatbot.

React Components

We developed a GUI for the chatbot using React+Redux.

From our research, we discovered that novel interfaces were crucial to a good chatbot experience.

The components below were developed to showcase the visual components of the conversation and its input, and were originally planned only as an experiment with user interface elements.

However, because the PEACH messaging team failed to set up the Rocket.chat platform, with which we had planned to integrate our UI elements, we continued development on the chat interface in order to have at least one functioning chatbot GUI.

The visual components we wanted to account for were chosen when developing and modelling the dialogue structure. We found that questions would ideally need to be answered in three ways:

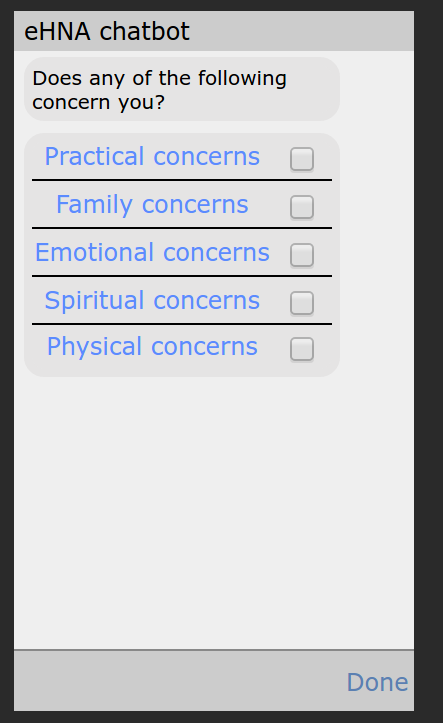

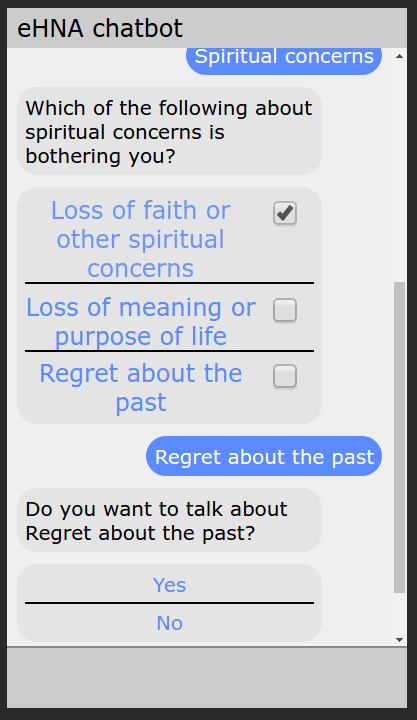

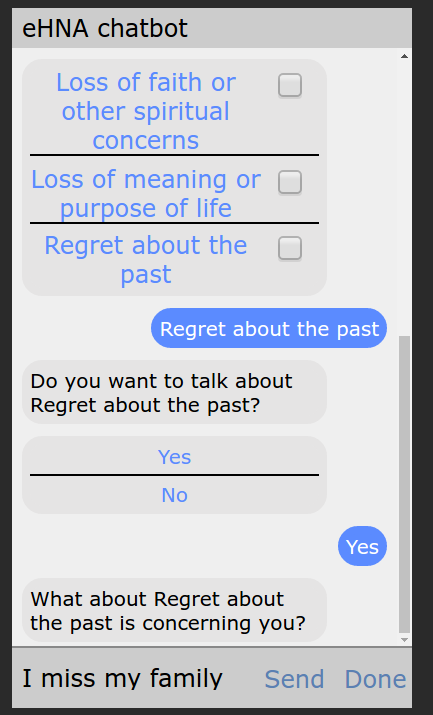

Multiple Choice

The structure of the original Holistic Health Assessment Form (see 'Management' page) was based heavily around the ability to select multiple applicable options. Therefore, we needed to implement a simple way for the user to select one or more choices from a list. The images above illustrate how we implemented this.

Single Choice

We also needed to allow the user to select a single option from a list. This could be as simple as choosing between "Yes" and "No". This question type is common on Facebook's Messenger Bot Platform. Therefore, we made a clickable single-choice input list to deliver this.

Text Input

In order for the user to expand upon the answers provided within the conversation, we needed to allow for text input. This is a standard input for a chatbot interface, and was therefore an essential implementation.
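The three input types imply three different answer shapes. The sketch below checks that an answer matches its question type before it is sent on. Only `Text_Input` is named in this document, so the other two type labels and the function name `validate_answer` are assumptions.

```python
from typing import List, Union

# Hypothetical type labels; only "Text_Input" is named in the text.
MULTIPLE_CHOICE = "Multiple_Choice"
SINGLE_CHOICE = "Single_Choice"
TEXT_INPUT = "Text_Input"

Answer = Union[List[str], str]


def validate_answer(question_type: str, options: List[str], answer: Answer) -> bool:
    """Check that an answer has the right shape for its question type."""
    if question_type == MULTIPLE_CHOICE:
        # One or more distinct options, all drawn from the offered list.
        return (isinstance(answer, list) and len(answer) >= 1
                and len(set(answer)) == len(answer)
                and all(a in options for a in answer))
    if question_type == SINGLE_CHOICE:
        # Exactly one option from the list, e.g. "Yes" or "No".
        return isinstance(answer, str) and answer in options
    if question_type == TEXT_INPUT:
        # Free text; reject empty or whitespace-only input.
        return isinstance(answer, str) and answer.strip() != ""
    raise ValueError(f"unknown question type: {question_type}")
```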

Website

As specified by UCL, the website is our primary platform for communicating the progress and success of the project. We have used the website throughout development to show updates, resources and features of our deliverables.

As you can see, we have followed the specification for the site closely. Following feedback from both Yun Fu and Marios (our TA), we have altered the structure of the site, the distribution of content, and the design.

The feedback given to us at the end of term 1 was very positive, and only asked us to redistribute content slightly and to add more pages in order to minimise the amount of scrolling required to view content.

The most recent feedback we received mainly concerned design: it requested that the text be more left-aligned, and that the structure be altered to fit the specification.

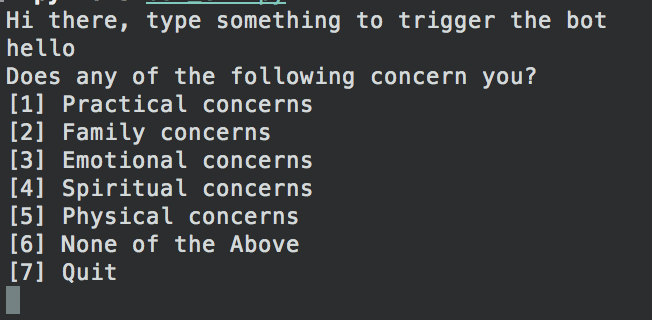

Command Line Interface

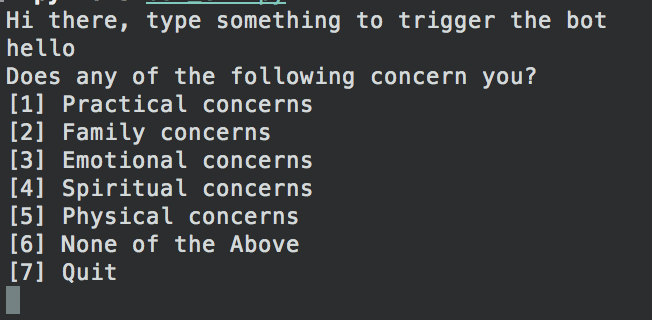

The command line interface (CLI) we developed was created in order to act as a platform for testing the API. We used this throughout development as a format for interacting with the API and database, to test functionality and response.

This interface acted as our testing environment for the development of back-end functionality such as dealing with multiple-choice responses, universal keywords, and ending the conversation appropriately when needed.

The image above shows a screenshot of the CLI.

During development, we incorporated the following design patterns into our architecture:

| Name | Description |
|---|---|
| Model-View-Controller | Model-View-Controller is a widely used pattern for structuring user interfaces. We incorporated it in the React frontend design. In React-Redux, the model is implemented via so-called 'reducers', which compute the next application state from the current one (in Redux terms, the state held in the 'store'). Controllers are implemented in Redux as 'actions', which explicitly codify what should happen; this is also an instance of the Command pattern (see below). Finally, the React components themselves are the views of the MVC application. |
| Command | React-Redux best practice is to use 'actions' to model events that modify state. For the typical user actions (selecting/unselecting an option, entering a message, submitting an answer), we created the appropriate actions. Following best practice, these are passed to a central 'reducer', which updates the application state. |
| Module | In Python, it is not typical to wrap every function in a class; instead, much of an application is organised into packages and modules. We adopted this approach and developed modules for retrieving and modifying user state, summarising user dialogues, and populating the dialogue tree. |
| Front controller | We implemented the Front Controller pattern in Django. The framework itself takes care of URL routing and, where needed, authorisation, but each of our endpoints still has to check its parameters and delegate further to the appropriate method of the model. |
| Null Object | Null objects are used in multiple stages of the database population process. Models are initialised with None so that they can be connected to their associated models before we have the data to fill them in. None is also used to detect the end of the conversation: the priority queue that determines which question will be asked next returns None when no questions remain, which in turn ends the conversation. |
| Adapter | The Adapter pattern allows the interface of an existing class to be used as another interface. Since this project implements an API, our core classes are wrapped in JSON so that the client side can interact with them. |
| Memento | The Memento pattern allows an object to be restored to a previous state. Our keyword feature employs this pattern, using the specified question as the caretaker for rolling back to the previous state. |
| Iterator | An Iterator provides a way to access the elements of an object sequentially without exposing its internal representation. The session of each ChatBotUser contains a priority queue that allows no operation other than retrieving the top of the queue. |
| Decorator | The Decorator pattern allows behaviour to be added to functions and classes externally. In this project, all of the entry points to the API are decorated with api_view in order to filter out and reject requests using disallowed request types (HTTP methods). This takes the complexity of filtering out of the actual implementation of the API. |
| Facade | The Facade pattern provides a simplified interface to a complex set of functions or code. The libraries we use in this project are themselves examples of the Facade pattern. Within our own code, state handling and question/answer generation are each encapsulated behind a single core function, so their complex implementations are hidden from the entry points of the API. |
| Observer | In the Observer pattern, an object maintains a list of its dependents and notifies them automatically of any state changes. In our API implementation, the Session keeps track of the questions still to be answered and the questions that have already been started. Whenever a keyword requires these internal structures to change, the session object is used to carry out the change. |
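As an illustration of the Decorator row, the following framework-free sketch wraps a view function so that disallowed HTTP methods are rejected before the view body runs, in the spirit of Django REST framework's api_view. The decorator name `allow_methods`, the dictionary-based request and the response shape are hypothetical; in the real API, api_view itself performs this filtering.

```python
import functools


def allow_methods(*methods):
    """Hypothetical decorator: reject requests whose method is not listed."""
    allowed = {m.upper() for m in methods}

    def decorator(view):
        @functools.wraps(view)  # keep the view's name and docstring
        def wrapper(request: dict) -> dict:
            # Filtering happens here, before the view body runs.
            if request.get("method", "").upper() not in allowed:
                return {"status": 405,
                        "detail": f"Method {request.get('method')!r} not allowed."}
            return view(request)
        return wrapper
    return decorator


@allow_methods("POST")
def message_endpoint(request: dict) -> dict:
    # The view contains only endpoint logic; no method checks are needed here.
    return {"status": 200, "echo": request.get("body")}
```

Keeping the check in the decorator means every endpoint gets the same filtering without repeating it in each view.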

Implementation Details for Key Functionalities

Below is a description of the implementation for every key feature within our deliverables. This will include the messaging functionality, summary functionality, conversational flow, multiple-choice selection, single-choice selection and the universal keywords.

ChatBot API- Messaging Logic:

The Messaging functionality of the ChatBot API includes sending/receiving messages, delivering error messages, formatting question and answer responses, saving the status of the conversation, and finding the next appropriate question to continue the conversation with the patient.

ChatBot API- Summary Functionality:

We provide a first implementation of an API for retrieving logs of patient dialogues with the bot. Currently, one can retrieve question-answer pairs for all sessions of a single user, for one specific session, or for one question.
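A sketch of the three retrieval scopes, assuming the answered questions have been flattened into one log row per answer. The row layout, the sample data and the `summarise` function are illustrative only, not the actual summary endpoint.

```python
from typing import List, Optional

# Hypothetical flattened log: one row per answered question.
LOG = [
    {"user_id": "u1", "session_id": "s1", "question_id": "q1",
     "question": "How did you sleep?", "answer": "Badly"},
    {"user_id": "u1", "session_id": "s1", "question_id": "q2",
     "question": "Any pain?", "answer": "No"},
    {"user_id": "u1", "session_id": "s2", "question_id": "q1",
     "question": "How did you sleep?", "answer": "Well"},
]


def summarise(user_id: Optional[str] = None, session_id: Optional[str] = None,
              question_id: Optional[str] = None) -> List[dict]:
    """Return question-answer pairs for rows matching every supplied filter."""
    filters = {"user_id": user_id, "session_id": session_id, "question_id": question_id}
    active = {key: value for key, value in filters.items() if value is not None}
    return [
        {"question": row["question"], "answer": row["answer"]}
        for row in LOG
        if all(row[key] == value for key, value in active.items())
    ]
```

Passing only user_id returns all of that user's sessions, session_id narrows to one session, and question_id collects every answer to one question.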

Models for Conversational Flow:

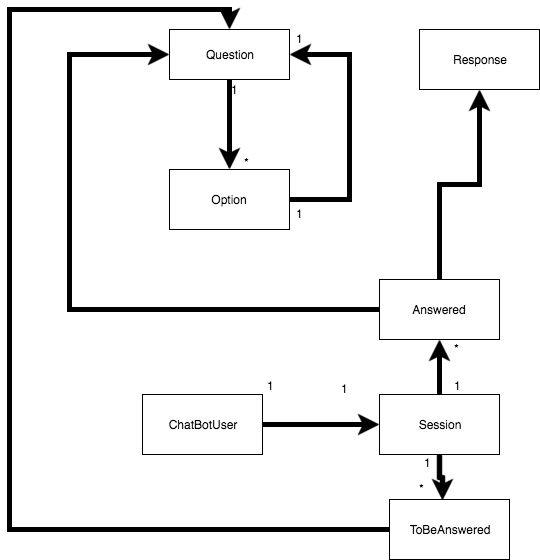

The models described in this section define how we represent the elements of a conversation with the user, and how these are stored in our database. These models are essential to the functionality of our ChatBot.

The logic of the conversation is dictated by these models. The essential overview is:

- Every Question that could be asked to the user is stored in the form of a Question object. As Questions can have multiple options, each Question 'owns' its Option objects, and each Option can in turn own the Question that follows it.

- The Option object holds the information about the parent_question and next_question. This is used to dynamically create a structure for the conversation depending on selected Options.

- Each user of the chatbot is modelled as a ChatBotUser. This object creates a user_id for the user, as well as a Session. The Session is a key concept as it determines our ability to summarise and alter the data at a later stage.

- The Session model is created when a new conversation is initiated with the ChatBot. The Session has a unique ID, and a timestamp. The importance of this model is to hold all the data about a current conversation in an easily retrievable form.

- Each Session will be linked to ToBeAnswered and Answered objects. The ToBeAnswered objects represent questions that are 'coming up' in the conversation, and are therefore yet to be answered. Each ToBeAnswered object holds a foreign key linking it to its session.

- The Answered objects represent questions that have been asked to the user and have been answered. Therefore, they need to be documented as answered, and also hold a valid session_id. They also hold a Response object, which contains the user's answer to the Answered question.

The list below details the implementation of each of our models, their purpose and any other relevant information.

Question

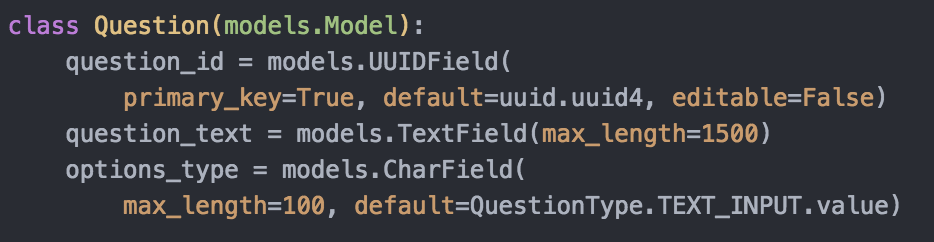

The Question models a question, or chatbot prompt, within our database. It contains the ID of the question it represents, the text of the question and the type of the Option objects it relates to.

The question_id is stored as a UUID field. This is a field used for storing unique id's. When this is used with PostGreSQL, the stored id will be of uuid type, otherwise as char(32).
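The char(32) fallback corresponds to the UUID's 32-character hexadecimal form without hyphens, which is what Django's UUIDField writes when the database has no native UUID type. The short example below uses only Python's standard uuid module to contrast that form with the usual 36-character string.

```python
import uuid

question_id = uuid.uuid4()

canonical = str(question_id)  # 8-4-4-4-12 hex groups separated by hyphens
stored = question_id.hex      # 32 hex digits, no hyphens: fits char(32)

print(len(canonical), len(stored))  # 36 32
# The hex form converts back to the same UUID when read from the database.
assert uuid.UUID(stored) == question_id
```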

The question text is stored as a text field of up to 1500 characters, and the question type is stored in a CharField, which defaults to the value Text_Input. Text_Input is a type of question that is answered by the user entering text, rather than, for example, by selecting multiple-choice options.

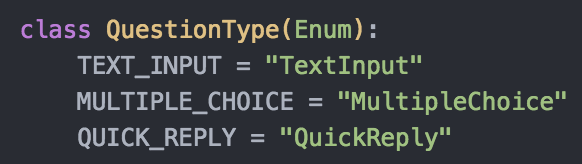

The possible types of question are shown in the image above.

Each Question object can 'own' Option objects, depending on the type of Question. If, for example, it is a multiple choice question, it will own multiple options. Each of these options then, in turn, own Questions, and thus we can create a conversational structure.

The image at the start of this section shows the body of the Question model.

Option

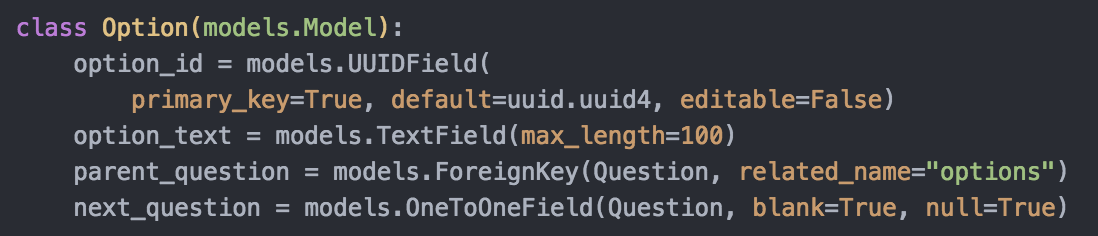

The Option object, as mentioned in the description of Question, is a representation of an option in a multiple-choice or single-choice questionnaire question. Each Question has Options, and each Option can, in turn, lead to a Question.

The Option is key for representing the dialogue as we wanted to. We wanted to allow for multiple-choice questions to be asked, and then for the user to be asked relevant questions about their selected options. The Option model allows us to represent this structure in a cleaner and more understandable way.

This model contains the option_id, option_text, parent_question and next_question. The option_id is a UUID that uniquely identifies the option. The option_text contains the text of the option to be displayed to the user, and is stored as a TextField.

The parent_question holds a Question object which acts as the 'parent' of this Option within the conversational structure. This means that this Option is one of the 'children' of the parent_question.

The next_question holds a Question object which is the Question which should 'follow' this Option. This means that the next_question will be added to the conversation, so that it is asked to the user later.

The image at the start of this section shows the body of the Option model.
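The Question/Option structure described above can be sketched with plain dataclasses instead of Django models. Field names follow the text; the `options` list, the `add_option` helper and `follow_up_questions` are hypothetical, and show how the next_question of each selected Option yields the questions to ask next.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Question:
    question_id: str
    question_text: str
    question_type: str = "Text_Input"  # default type, as described above
    # Hypothetical convenience list of the Options this Question owns.
    options: List["Option"] = field(default_factory=list)


@dataclass
class Option:
    option_id: str
    option_text: str
    # Excluded from repr/eq so comparisons do not recurse back into the parent.
    parent_question: Question = field(repr=False, compare=False)
    next_question: Optional[Question] = None  # asked later if this Option is chosen


def add_option(parent: Question, option_id: str, text: str,
               next_question: Optional[Question] = None) -> Option:
    """Create an Option owned by `parent` and register it on the parent."""
    option = Option(option_id, text, parent, next_question)
    parent.options.append(option)
    return option


def follow_up_questions(question: Question, selected_ids: List[str]) -> List[Question]:
    """Return the next_question of every selected Option, in option order."""
    return [
        opt.next_question
        for opt in question.options
        if opt.option_id in selected_ids and opt.next_question is not None
    ]
```

Selecting "Sleep" and "Diet" on a multiple-choice core question would therefore add both follow-up questions to the conversation, while an option with no next_question adds nothing.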

ToBeAnswered

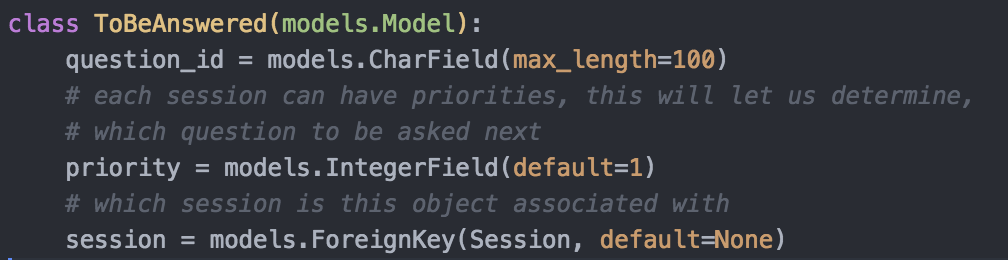

The ToBeAnswered model is used to represent Questions that still need to be answered in the current conversation. Together, the ToBeAnswered objects for a session form a type of priority queue, enabling the Questions to be asked in the correct order according to the user's responses.

ToBeAnswered has three fields: question_id, priority and session. question_id holds the ID of the Question that needs to be answered. Priority is assigned to the ToBeAnswered Question according to its appropriate position in the conversation. The session holds a ForeignKey to a Session object for the current Session for the ChatBotUser.
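A minimal sketch of such a queue for one session, built on Python's heapq module. It assumes that a lower priority number means the question is asked earlier, and uses an insertion counter to keep equal priorities in order. In line with the Iterator and Null Object patterns described earlier, it only exposes the top of the queue and returns None when it is empty. The class name is hypothetical.

```python
import heapq
import itertools
from typing import List, Optional, Tuple


class ToBeAnsweredQueue:
    """Hypothetical in-memory view of one session's ToBeAnswered rows."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._counter = itertools.count()  # tie-breaker for equal priorities

    def push(self, question_id: str, priority: int) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), question_id))

    def pop(self) -> Optional[str]:
        """Remove and return the top question_id, or None when nothing is left."""
        if not self._heap:
            return None  # None signals the end of the conversation
        return heapq.heappop(self._heap)[2]
```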

-

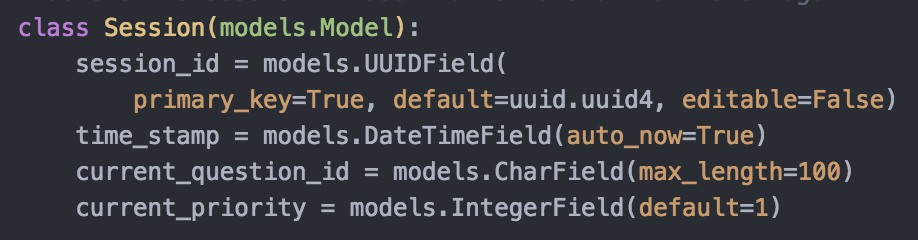

filter_drama Session

The Session model is key to determining the current status of the ChatBotUser within a conversation, or before/after. It represents a single Session where the user was interacting with the ChatBot.

A Session object is assigned to a ChatBotUser each time a conversation is initialised with the ChatBot. The Session model has four fields: session_id, time_stamp, current_question_id and current_priority.

The session_id is a UUID for the Session, so that it can be distinguished from others. The time_stamp stores the date and time at which the Session was initialised.

The current_question_id stores the ID of the active Question in a CharField, and the current_priority stores the position we are in within our priority queue of ToBeAnswered and Answered objects.

-

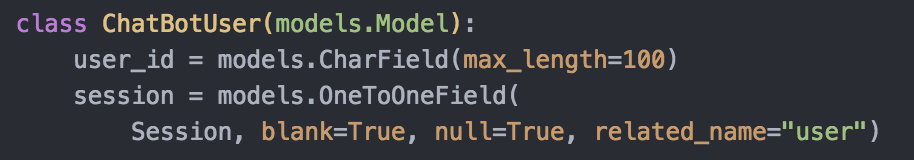

filter_drama ChatBotUser

The ChatBotUser model represents a user of the ChatBot. This is important for distinguishing who is interacting with the ChatBot, and for accessing the data at a later date.

The model has only 2 fields: user_id and session. The user_id is a CharField containing the ID of the user this object is representing, and the session holds a OneToOneField to a Session object.

If somebody tries to interact with the ChatBot without being represented by a ChatBotUser, we create one and begin a Session. If somebody interacts while already represented by a ChatBotUser, we either create or continue a Session as appropriate.

-

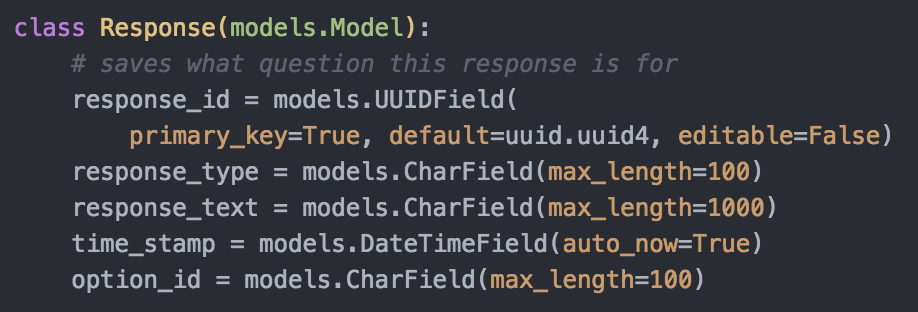

filter_drama Response

The Response model is used to represent a user's response to a Question. It is 'owned' by an Answered object which represents the fact a Question has been Answered.

The Response model holds 5 fields: response_id, response_type, response_text, time_stamp and option_id.

The response_id is a UUID for the Response, so that it can be identified easily. The response_type is a CharField which will hold the QuestionType of the Answered question. The response_text is another CharField which holds the textual representation of the user's Response if applicable.

The time_stamp indicates when this Question was answered, and the option_id identifies the Option that this response refers to.

-

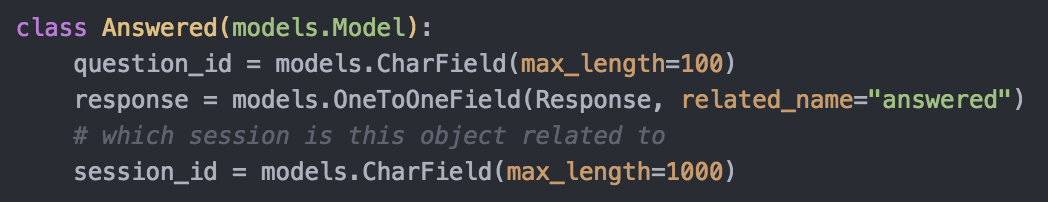

filter_drama Answered

The Answered object is very simple, as it just represents the fact that the Question with the question_id it holds has been answered in the conversation with the user.

It has three fields: question_id, response and session_id. The question_id holds the ID of the Question that has been Answered. The response is a OneToOneField to a Response object representing the user's answer.

The session_id holds the ID of the session that this question was answered within.

How all the components fit together

The diagram above shows how all these models fit together in the database. This structure allows Questions to be easily generated and appended to a user's session, which in turn makes the data easy to process, since all of the responses are tied to the session once it is determined to be finished.

When a user starts a session, we append the very first Core question to it. This initiates the tree with a single node, and the user's answers to this question populate the tree with further nodes. Once a question is answered, we pop its ToBeAnswered instance from the queue and record it as an Answered instance, which holds a reference to the actual question answered and the response to it. This relation avoids duplicating data when we want to connect the responses to the questions that were being answered.

Once there are no more questions to pop from the session's ToBeAnswered queue, we determine the session to be finished and process the data associated with it (the list of Answered objects). We then terminate the session so that the user can start new sessions in the future. A user can have at most one session at any given time.
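The lifecycle described above can be sketched in memory as follows. This is an illustration under stated assumptions, not the project's code: the hypothetical FOLLOW_UPS graph stands in for Option.next_question, follow-up questions are given the parent's priority plus one, and responses are stored as plain lists rather than Response objects.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical question graph: question_id -> {option text: next question_id or None}.
FOLLOW_UPS: Dict[str, Dict[str, Optional[str]]] = {
    "core": {"Sleep": "sleep", "Diet": "diet", "Neither": None},
    "sleep": {},
    "diet": {},
}


@dataclass
class Session:
    to_be_answered: List[Tuple[int, int, str]] = field(default_factory=list)
    answered: List[Tuple[str, List[str]]] = field(default_factory=list)  # (question_id, response)
    _order: itertools.count = field(default_factory=itertools.count)  # FIFO tie-breaker

    def enqueue(self, question_id: str, priority: int) -> None:
        heapq.heappush(self.to_be_answered, (priority, next(self._order), question_id))

    def current(self) -> Optional[str]:
        """Question at the top of the queue, or None once the session is finished."""
        return self.to_be_answered[0][2] if self.to_be_answered else None

    def answer(self, response: List[str]) -> None:
        """Move the current question from ToBeAnswered to Answered and grow the tree."""
        if not self.to_be_answered:
            raise RuntimeError("session already finished")
        priority, _, question_id = heapq.heappop(self.to_be_answered)
        self.answered.append((question_id, response))
        for choice in response:
            next_id = FOLLOW_UPS[question_id].get(choice)
            if next_id is not None:
                self.enqueue(next_id, priority + 1)
```

Starting a session means enqueueing the core question; once current() returns None, the answered list holds everything needed to summarise the session.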

Documentation

The documentation is embedded in this site for ease of access. It is written directly into an HTML file. We have styled it for ease of use, and it contains details about the use of our API, and includes code snippets.

Website

The website you are viewing was built using HTML/CSS and JavaScript. We use the Materialize Framework which helped us with our design elements. We have used a system of 'cards' which contain and separate the information we wish to present to any viewer.

The website has 9 pages, each containing the information we were required to supply in the specification provided. In addition to the pages required by the specification, we also have a page entitled 'Documentation', which includes our API and setup documentation for our client, future teams and any other users of our work.

The pages we include are:

- Homepage- containing our abstract, overview, client description, team introductions and contact details.

- Requirements- contains a description of our updated requirements agreed with our client, as well as information about the background of our project, the problem statement, our MoSCoW analysis, and our use cases/personas.

- Research- includes any research we conducted throughout the course of our project, such as research into Continuous Integration, databases, frameworks and languages.

- HCI- This page, Human Computer Interaction, is for displaying our progress and thoughts throughout the time we have worked on the project. Here we display our final delivery, and any prototypes we developed.

- Design- includes details about our architectural design, and breaks down our project into key deliverable components which we describe in detail, including the implementation.

- Testing- holds all the information about the testing we conducted on our deliverables, such as Unit or User Acceptance Testing.

- Evaluation- This page is for the evaluation of our deliverables and progress. Here we include a summary of our achievements, information about completed tasks, work packages and challenges faced. The key component on this page is the critical evaluation.

- Management- This contains all of the resources that we have produced and used, and details of all of our client meetings. It also includes feedback and a Gantt chart.

- Documentation- This page is for our API documentation for both Summary and Messaging endpoints. This will be used by any future teams who develop the ChatBot further, users, and our client.

Command Line Interface

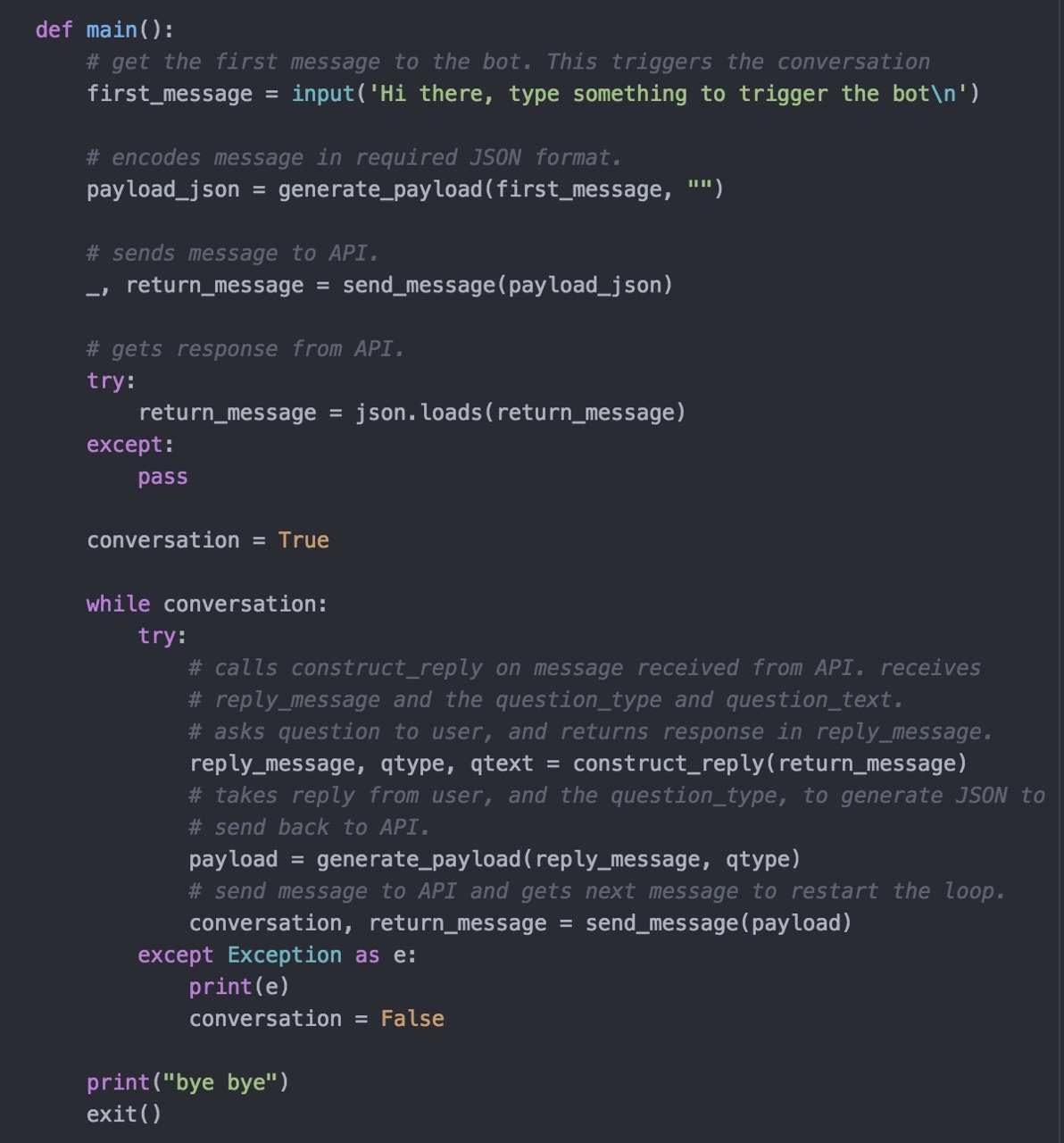

The command line interface (CLI) was written using Python, and integrates directly with the API. The logic for the CLI is broken up into key functions which complete different key tasks.

An image depicting the style of the CLI is shown above. We have used a very simple yet effective interface that allows future teams to test and interact with the API.

As has been explained on the Evaluation page, the logic flows through the main function as shown below in this code snippet:

The CLI as a whole is a simple Python script which makes a request to the API every time the conversation with the user progresses. Descriptions of all the key functions are below:


construct_reply(question)

This function takes the response from the API which will contain the next Question to ask to the user. construct_reply then calls ask_question which 'asks' the question to the user, and returns the user's response.

construct_reply returns the user's answer, formatted in a way that can be understood by the API, and the question_type and question_text which will both be used by generate_payload().


generate_payload()

This function takes the user's reply given by construct_reply and generates the appropriate JSON to send to the API.


send_message()

send_message sends the JSON response to the API, and receives the next question returned by the API to continue the conversation.

send_message is also responsible for notifying the CLI if the user has reached the end of their conversation, and also facilitates the use of the 'restart' keyword.
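The three functions can be sketched as follows. The function names come from the descriptions above, but every parameter other than `question`, the JSON field names and the option-numbering scheme are hypothetical. Input and the HTTP call are passed in as functions (`read`, `post`) so that the flow can be exercised without a terminal or a running server.

```python
import json
from typing import Callable, List, Optional, Tuple, Union

Answer = Union[str, List[str]]


def ask_question(question: dict, read: Callable[[str], str] = input) -> str:
    """Show the question and any numbered options, then return the raw input."""
    prompt = question["question_text"]
    for number, option in enumerate(question.get("options", []), start=1):
        prompt += f"\n  {number}. {option}"
    return read(prompt + "\n> ")


def construct_reply(question: dict, read: Callable[[str], str] = input) -> Tuple[Answer, str, str]:
    """Ask the question; return (answer, question_type, question_text)."""
    raw = ask_question(question, read).strip()
    question_type = question["question_type"]
    if question_type == "Text_Input" or raw.lower() == "restart":
        # Free text and keywords such as 'restart' are passed through unparsed.
        answer: Answer = raw
    else:
        # Choice questions: "1, 3" selects the first and third options.
        options = question["options"]
        answer = [options[int(part) - 1] for part in raw.split(",") if part.strip()]
    return answer, question_type, question["question_text"]


def generate_payload(user_id: str, answer: Answer, question_type: str, question_text: str) -> str:
    """Wrap the reply as the JSON body for the API (field names are assumptions)."""
    return json.dumps({
        "user_id": user_id,
        "answer": answer,
        "question_type": question_type,
        "question_text": question_text,
    })


def send_message(payload: str, post: Callable[[str], dict]) -> Optional[dict]:
    """Send the payload; return the next question, or None when the conversation ends."""
    reply = post(payload)
    if reply.get("end_of_conversation"):
        return None
    return reply["question"]
```

In use, these would be called in turn for each question until send_message returns None.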