Group 40 - PEACH Chatbot

Systems Engineering Project

Achievements

A non-exhaustive collection of things we have done for the project:

- Evaluated the team members’ skills and created a balanced split of roles.

- Completed the tasks sent to us by the client, i.e. the MRC certificates.

- Established a basic project brief, developed an action plan, set up a Trello team and board, and created documents for deliverables, stakeholders and resources.

- Created initial external architectural model.

- Researched and compared Continuous Integration tools.

- Carried out a comprehensive literature analysis on chatbot systems.

- Analysed and developed use cases and scenarios resulting in a high-level overview of interactions with the system.

- Analysed the master's dissertation written by one of the people who had previously worked on the project.

- Defined the chatbot dialogues.

- Chose a Python web framework and used it for an initial WebSocket setup. Created a chat server with long-polling WebSockets that echoes messages back.

- Defined a JSON messaging format so that we could work on the server-side parsing and creation of messages.

- Created the architectural model of our system.

- Created the chatbot database schema, which is designed to accommodate further adaptation and work on the system, in particular the addition of NLP elements.

- Set up continuous integration for the chatbot, and ported it to Python 3.

- Integrated all API components together.

- Wrote unit tests for the API to ensure proper functionality.

- Built a chatbot CLI for testing purposes. It makes issues with input easy to identify, and can be distributed to anybody else who needs to test the messaging endpoint.

- Met all of our 'must have' requirements.

- Implemented universal keywords such as 'help', 'restart' and 'quit'.

- Deployed the chatbot app to Azure.

- Created the project website and edited it to fit the requirements, following feedback from Yun Fu or our TA.

- Defined fully functional conversation structure models; the messaging API meets all requirements.

- Completed the Summary API, which returns a summary of a patient's conversation.
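The JSON messaging format mentioned in the list above can be illustrated with a minimal sketch. The field names (`conversation_id`, `sender`, `type`, `text`, `options`, `id`) and the set of allowed message types are assumptions for illustration, loosely based on the message types from our experiments; this is not the exact format we defined.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field, fields
from typing import List

# Hypothetical message types, based on the checkbox / quick reply / normal
# message experiments; not the project's actual list.
ALLOWED_TYPES = {"text", "quick_reply", "checkbox"}
REQUIRED_FIELDS = {"conversation_id", "sender", "type", "text"}


@dataclass
class Message:
    conversation_id: str
    sender: str  # assumed to be "bot" or "user"
    type: str
    text: str
    options: List[str] = field(default_factory=list)
    # Each message gets a UUID so messages can be referenced by id.
    id: str = field(default_factory=lambda: str(uuid.uuid4()))


def create_message(message: Message) -> str:
    """Serialise a Message into the JSON string sent to the client."""
    return json.dumps(asdict(message))


def parse_message(raw: str) -> Message:
    """Validate an incoming JSON string and turn it into a Message."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("message must be a JSON object")
    missing = REQUIRED_FIELDS - data.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    if data["type"] not in ALLOWED_TYPES:
        raise ValueError(f"unknown message type: {data['type']!r}")
    # Ignore any keys the format does not define.
    known = {f.name for f in fields(Message)}
    return Message(**{k: v for k, v in data.items() if k in known})
```

Because `parse_message(create_message(m))` returns a `Message` equal to `m`, a format like this is straightforward to unit test on the server side.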

Experiment Log

After defining an initial database schema for dialogue templates, we carried out a series of experiments to test the robustness of the schema. These experiments are listed below.

Experiment log from term 1:

| Experiment | Outcome | Participants |
| --- | --- | --- |
| Running the Django DB shell | Received a SyntaxError and fixed the issue (a circular object reference in the Django ORM). | Christoph |
| Creating a "Question" instance in the Django ORM | Added a UUID to the type. From our prototype, we inferred that individual messages are best decoupled by referencing them by id. | Christoph |
| Creating an 'Option' | Options are ways to answer a question. Potentially, we have to move the field 'next question' to Questions since, in the case of checkboxes, the next Question will differ depending on the individual option rather than the question. | Christoph |
| Creating a WebSocket | We created a WebSocket during a hackathon. | Faiz |
| Creating the general message interface as a React component | For a more complex application, React-Redux definitely needs to be added for a cleaner design. The components were already starting to become bloated. | Christoph |
| Creating the frontend part of the WebSocket as part of the React prototype | Figured out the fields needed for logging messages in our database, and a potential representation of these messages in the frontend, by defining the accepted JSON. | Christoph |
| Chat server from scratch | A chat server that echoes the messages we send to it. This demonstrated how a user would interact with the server API when multiple channels are present. | Emily |
| Stream API integration experiment | The Rocket.Chat API behaves much like Twitter's streaming API as used through Tweepy, so I created streaming API middleware that mimics our project, using the production-ready API that Twitter provides. | Faiz |
| Middleware integration of a chat server | Integrated the Slack streaming API with Django to study the behaviour of streaming APIs with Django and third-party libraries such as Channels and Socket.IO. | Faiz |
| Database schema behaviour | A mock dataflow between server and client to check the validity of the schema and how it fits in our environment. | Emily |
| Creating different types of messages | The types of messages created were checkboxes, quick replies and normal messages. From this, we concluded that it is sufficient to send only the ticked checkboxes to the backend instead of the entire set of checkboxes. In addition, future work should assess whether a slider would really work, as dragging is a different interaction from clicking. | Christoph |
| Running the previous solution | In the first session, we ran the previous year's solution. We deemed it overengineered and not fit for use. | Faiz |
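Two conclusions from these experiments, referencing items by id (UUID) and sending only the ticked checkboxes to the backend, can be illustrated with the sketch below. It uses plain dataclasses rather than the Django ORM models we actually worked with, and all class, function and field names are hypothetical.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Option:
    text: str
    id: uuid.UUID = field(default_factory=uuid.uuid4)


@dataclass
class Question:
    text: str
    options: List[Option]
    id: uuid.UUID = field(default_factory=uuid.uuid4)


def checkbox_answer(question: Question, ticked: List[str]) -> Dict[str, Any]:
    """Client side: send only the ticked options, referenced by id."""
    ids_by_text = {option.text: option.id for option in question.options}
    unknown = [text for text in ticked if text not in ids_by_text]
    if unknown:
        raise ValueError(f"not an option of this question: {unknown}")
    return {
        "question_id": str(question.id),
        "option_ids": [str(ids_by_text[text]) for text in ticked],
    }


def resolve_answer(question: Question, payload: Dict[str, Any]) -> List[Option]:
    """Server side: map the received ids back to the options they reference."""
    if payload["question_id"] != str(question.id):
        raise ValueError("answer belongs to a different question")
    options_by_id = {str(option.id): option for option in question.options}
    return [options_by_id[option_id] for option_id in payload["option_ids"]]
```

Unticked options never leave the client, and the backend needs nothing beyond the ids to reconstruct the answer.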

Work Packages: Term 2

List of work packages completed in term 2, along with the contributors to each.


| Work Package | Contributors |
| --- | --- |
| Client Liaison | Christoph |
| Website | Emily, All |
| Research | All |
| CLI | Emily, Faiz |
| Final Report | All |
| React Interface | Chris |
| Tests | All |
| Video | Chris |
| Summary API | All |
| Chatbot Database Model | Faiz |
| Chatbot Controller | Faiz |
| Biweekly Report 5 | All |
| Biweekly Report 6 | All |
| Biweekly Report 7 | All |
| Biweekly Report 8 | All |

Command Line Interface

CLI for testing and improving our ChatBot


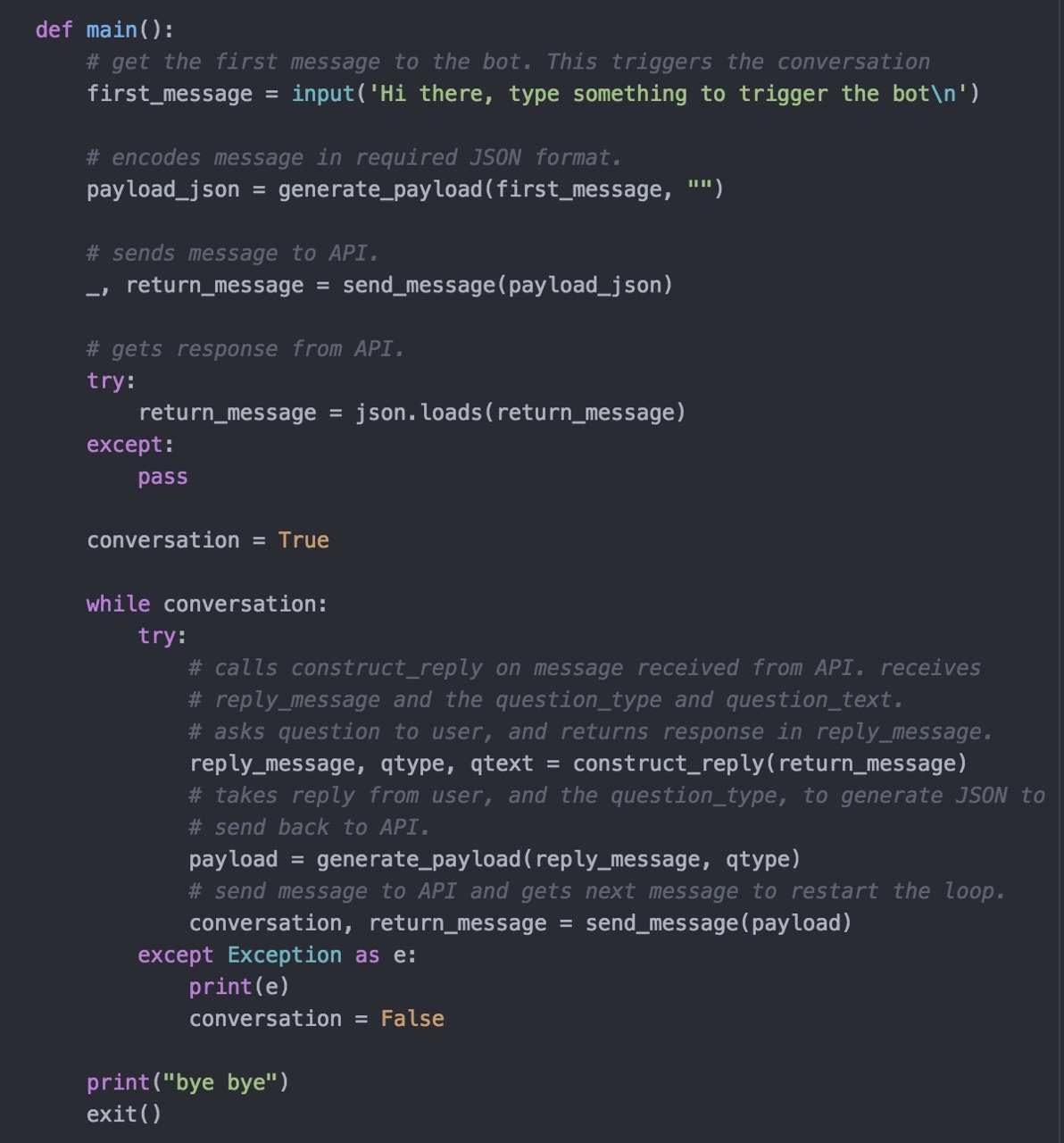

We developed a command line interface to allow interaction with the ChatBot during development. This was built in Python, and interacted with the messaging API endpoint to generate users and conversations.

This proved extremely useful throughout development, as it allowed us to test the API and to quickly implement new features that we had not previously thought of.

The user can interact with the ChatBot CLI as follows:

- Run the ChatBot helper Python file in a terminal.

- Message the ChatBot with anything to start a conversation.

- Answer multiple-choice questions in the format answer1,answer2,...,answerN.

- Answer text input questions with any valid string.
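The interaction steps above can be sketched as a small loop. This is not our actual CLI code: the question fields (`id`, `type`, `text`), the reply payload and the `send` callable, which stands in for the HTTP call to the messaging API endpoint, are all assumptions made for illustration. Passing `send` and `read` in as parameters keeps the loop testable without a running server.

```python
from typing import Callable, Dict, List, Optional


def parse_multiple_choice(raw: str) -> List[str]:
    """Split 'answer1,answer2,...,answerN' into trimmed answers, dropping empty entries."""
    return [part.strip() for part in raw.split(",") if part.strip()]


def build_reply(question: Dict, raw: str) -> Dict:
    """Turn what the user typed into a reply payload (hypothetical field names)."""
    if question.get("type") == "multiple_choice":
        return {"question_id": question["id"], "answers": parse_multiple_choice(raw)}
    # Text input questions accept any string as-is.
    return {"question_id": question["id"], "text": raw}


def run_cli(
    send: Callable[[Dict], Optional[Dict]],
    read: Callable[[str], str] = input,
) -> List[Dict]:
    """Drive one conversation. `send` posts a payload to the messaging endpoint
    and returns the next question, or None once the conversation is over."""
    transcript = []
    # Any opening message triggers a conversation.
    question = send({"text": read("You: ")})
    while question is not None:
        raw = read(question["text"] + "\n> ")
        reply = build_reply(question, raw)
        transcript.append(reply)
        question = send(reply)
    return transcript
```

In a real client, `send` would wrap the HTTP request to the messaging endpoint and decode the bot's next question from the response.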

The screenshot below is the main code of the CLI. The comments describe the logic and flow of how it works, and its interaction with the API.

Summary of Achievements

A table of the requested functional requirements and use cases, with their priority, completion status and contributors.


| Requirement | Priority | Status | Contributors / notes |
| --- | --- | --- | --- |
| The user can respond to chatbot messages. | Must have | ✔️ | All |
| The user can see the previous messages the chatbot sent within the current conversation, and their own replies. | Must have | ✔️ | All |
| The user can send and receive messages to/from the chatbot. | Must have | ✔️ | All |
| The user shall be asked about relevant areas of concern (e.g. physical concerns). | Must have | ✔️ | All |
| The user can indicate the problem areas within each 'concern bracket', i.e. each category of concerns (physical, emotional etc.). | Must have | ✔️ | All |
| Templates for bot response and user response (single option from list, multiple choice, text input and scale slider). | Must have | ✔️ | All |
| Universal keywords such as 'quit' and 'restart' that the user can type to change the outcome of the conversation. | Must have | ✔️ | Emily |
| Integration with the Rocket.Chat messaging platform chosen by the messaging team. | Should have | ❌ | The team working on the messaging platform failed to set it up. |
| The solution is built using CI. | Should have | ✔️ | Christoph |
| Have a method of summarising a conversation. | Should have | ✔️ | Christoph |
| Documentation outlining how to set up the system. | Should have | | |
| Documentation outlining use and features of the system. | Should have | ✔️ | Emily |
| API documentation describing parameters and usage. | Should have | | Faiz, Emily |
| Use sentiment analysis to gauge the severity of user concerns. | Could have | ❌ | Not a priority for the client. |

Known Incomplete Features and Bugs

Features that are not yet complete, and bugs we are aware of:

- The 'restart' keyword is currently only partially functional. It works on the API side, but fixes are still needed when interacting through the CLI.

- The 'help' keyword is not functional.
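One way to handle universal keywords is to check every incoming message for them before treating it as an answer. The sketch below is a hypothetical, in-memory illustration of that idea; the `Conversation` class, the function names and the reply texts are assumptions, it is not the code of our API, and it shows how 'help' could be handled rather than how it currently behaves.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Keywords recognised at any point in the conversation.
UNIVERSAL_KEYWORDS = ("quit", "restart", "help")


@dataclass
class Conversation:
    """Hypothetical in-memory conversation state."""
    questions: List[str]
    position: int = 0
    answers: List[str] = field(default_factory=list)
    active: bool = True


def universal_keyword(message: str) -> Optional[str]:
    """Return the keyword if the whole message is one, ignoring case and surrounding spaces."""
    word = message.strip().lower()
    return word if word in UNIVERSAL_KEYWORDS else None


def handle_message(conv: Conversation, message: str) -> str:
    """Check for a universal keyword first; otherwise treat the message as an answer."""
    keyword = universal_keyword(message)
    if keyword == "quit":
        conv.active = False
        return "Conversation ended."
    if keyword == "restart":
        # Discard previous answers and go back to the first question.
        conv.position = 0
        conv.answers.clear()
        conv.active = True
        return conv.questions[0]
    if keyword == "help":
        return "Answer the question above, or type 'restart' or 'quit'."
    # Ordinary answer: record it and move on to the next question.
    conv.answers.append(message)
    conv.position += 1
    if conv.position >= len(conv.questions):
        conv.active = False
        return "Thank you, that was the last question."
    return conv.questions[conv.position]
```

Intercepting keywords before answer handling means they work the same way regardless of which question is currently being asked.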

Critical Evaluation


Summary API

The Summary API is designed to return all the question-answer pairs of a conversation as JSON. This is useful for an external database: since it is raw data, it can be transformed in many ways. Because the responses are not edited in the database itself, this data can also easily be used for sentiment analysis in the future. This is one example of a potential extension of the bot's capabilities that we have built our system to make possible.
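A minimal sketch of the flat output format described above, assuming each exchange is available as a (question, answer) pair. The function name and the JSON keys are hypothetical and do not reproduce the actual Summary API response.

```python
import json
from typing import List, Tuple


def summarise(conversation_id: str, exchanges: List[Tuple[str, str]]) -> str:
    """Return every question-answer pair of one conversation as raw JSON."""
    summary = {
        "conversation_id": conversation_id,
        # Answers are passed through unedited, so they stay usable for
        # later analysis such as sentiment analysis.
        "pairs": [{"question": q, "answer": a} for q, a in exchanges],
    }
    return json.dumps(summary)
```

Keeping the output as a flat list of pairs leaves any interpretation to the consumer of the API.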

The Summary API responses could be improved so that an external developer who wants to use the API for summarisation and meaning analysis can interpret meaning directly from the JSON structure itself. For example, the tree structure of the Questions could be embedded within the JSON.

Use of templates

Templates were also a great addition to the bot, since they take away the complexity of the context-awareness problem. Their only drawback is that they greatly reduce the number of ways in which users can interact with the bot. Introducing more ways to interact with the bot would make conversations more flexible, and would likely also improve the user experience.

Simplicity of architecture

The architecture of our application is technically very complex. This can make it difficult to comprehend; however, it is optimal for generating a dynamic conversation from user responses. The architecture also makes it easy to add new questions and give them a place within the defined conversational flow.

Fitness for expandability

Since question generation is based only on the input, we can easily mix machine-learning-based conversations with this rule-based model.

Use of feedback

We tested the bot and gathered user feedback using two different client-side implementations: a ReactJS app and a CLI client. Both yielded critical feedback from the conversations testers had with the bot. We addressed several concerns in different ways, including editing the phrasing of questions that had been generated using a script. These iterations of direct testing with user feedback let us improve the structure of the API, both internally and on the client side.

Fitness of API

The ChatBot API and both endpoints provided are 100% functional. We have solved all known bugs up to the day of writing.
Fitness of architecture

Since the API is built with a Client-Adapter-Server setup, any third-party client can be integrated with the platform using a single adapter. This adapter can live in a microservice environment, where its only job is to translate client-server messages into the format specified in the documentation. Because of this architecture, the app can be integrated with any client.

Documentation

The entire code base is accompanied by technical documentation, with diagrams that explain the design choices and pay special attention to areas where improvements can be introduced. There is also API documentation, and every function in the codebase has a docstring to help with further maintenance.

Testing

We believe that our application is sufficiently unit tested. Moreover, we have tested whether our dialogue model is acceptable to patients, and rephrased some questions as a result. However, due to time constraints, this testing was performed only once and with a limited number of people. Future work should definitely focus on further iterations to improve the dialogue models. One way to achieve this would be to create a focus group at UCLH for this purpose.
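The Client-Adapter-Server setup described under "Fitness of architecture" can be sketched as follows. The base class, the imaginary platform and its field names (`channel`, `user`, `body`), and the internal message keys are all hypothetical; a real adapter, for example one for Rocket.Chat, would map that platform's actual payloads onto the format given in our API documentation.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict

# Hypothetical internal message format expected by the chatbot API.
ApiMessage = Dict[str, str]


class PlatformAdapter(ABC):
    """Translates between one third-party client and the chatbot API."""

    def __init__(self, send_to_api: Callable[[ApiMessage], ApiMessage]) -> None:
        # send_to_api stands in for the HTTP call to the chatbot server.
        self.send_to_api = send_to_api

    @abstractmethod
    def to_api(self, event: Dict[str, str]) -> ApiMessage:
        """Convert a platform event into the API's message format."""

    @abstractmethod
    def from_api(self, reply: ApiMessage) -> Dict[str, str]:
        """Convert the API's reply back into the platform's format."""

    def handle(self, event: Dict[str, str]) -> Dict[str, str]:
        """Translate in, call the chatbot API, translate back out."""
        return self.from_api(self.send_to_api(self.to_api(event)))


class ExamplePlatformAdapter(PlatformAdapter):
    """Adapter for an imaginary platform whose events use 'channel', 'user' and 'body'."""

    def to_api(self, event: Dict[str, str]) -> ApiMessage:
        return {"conversation_id": event["channel"], "sender": event["user"], "text": event["body"]}

    def from_api(self, reply: ApiMessage) -> Dict[str, str]:
        return {"channel": reply["conversation_id"], "body": reply["text"]}
```

Supporting another platform then means writing one more `to_api`/`from_api` pair, while the chatbot server itself stays unchanged.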

Future work

"If we had six more months", we would be able to tackle some of the projects below. Generally, we believe that our main achievement was to concretely envision how to tackle a chatbot project for the medical field. Apart from the actual implementation of our proof-of-concept, our main deliverable is thus the set of ideas presented on how to develop this chatbot further. The ideas below are therefore of special importance to us.

Expose the chatbot to a more diverse audience

Firstly, we would perform more representative acceptance testing. So far, our testing group has consisted only of highly educated people, mostly from Computer Science or Medicine. While we did ask other people to test our application (e.g. our parents), we believe that this sample is not representative of the entire population. Within the time available, we were not able to establish ties with 'real' patients. A longer project, or continued work on this one, should definitely tackle this issue and establish a more representative group of users for acceptance testing. This should be treated as a continuous feedback process and a chance to improve the dialogue model.

Development of interface for editing dialogue models

Secondly, we would develop a doctor interface for editing the dialogue model and viewing the results of chatbot sessions. We believe that inventing a system such as the one we built was an appropriate workload for the time we were given; an interface of this kind is what is needed to move the project from proof-of-concept to a full solution. In particular, having domain experts edit the dialogue model to enable rapid development is a novel idea. To 'sell' this vision, we have created mockups for part of the doctor interface.

Integration with a third-party messaging application

Thirdly, we would do our best to integrate with Rocket.Chat. Given an additional six months of development time, we are confident that the other team, which was responsible for hosting the PEACH Messaging platform, would have been able to deploy the fork from the repository rather than only the container. Based on this assumption, we would then be free to finish writing our Rocket.Chat adapter.

Development of a chatbot management interface

Fourthly, we would invest time in developing an interface for doctors to 'schedule' chatbot sessions. While this feature was never a requirement for our work, our client was clear that, in the long-term vision for the bot, he eventually wants to simply 'prescribe' chatbot sessions, just as one would prescribe a physical checkup. Working on the project for another six months would mean implementing such a system partly or entirely.

Interface for analysing dialogues

Lastly, we would do more research into knowledge extraction from the data provided by the user. With our Summary API, one can already start work on this right now, in line with our belief that good work means future developers can start working right away. However, we are unclear about the extent to which knowledge can currently be extracted, and about how to build a system such that, ideally, the chatbot would simply 'fill in' the patient record automatically. Concretely, we are unsure whether diagnosis and knowledge extraction will in future be handled by the same subsystems or by separate ones. Additionally, we believe that natural language processing is not yet accurate enough to cover the large body of medical knowledge with sufficient precision, and that covering the entire spectrum is not possible unless significant resources are devoted to the project.

One way to reduce the resources required and build the system within a shorter timeframe would be a human-in-the-loop system, such as the one described with our doctor interface, in which the chatbot provides chat data that humans in turn use to fill in the patient health record. Another alternative would be a hybrid system, in which a human confirms or denies categorisations made by the chatbot. This is ultimately why we did not invest heavily in NLP at this stage. With more time, however, a hybrid solution could come into scope for our project.