Project Background

For more than a century, IBM has been dedicated to every client's success and to creating innovations that matter for the world. During the COVID-19 pandemic, social distancing and the rise of remote work have led people towards a more isolated lifestyle, with many not leaving their homes unless absolutely necessary. This has prompted many companies, including IBM, to explore how XR technologies could be used to create engaging products for users at home.

The pandemic has also increased our reliance on technology, whether through smartphones or computers. This has further isolated people who are unfamiliar with how modern technology works. Our project, WAPETS 2, aims to help these users adapt to the remote world through helpful, AI-controlled Augmented Reality (AR) pets.

Using AR and chatbot services, our project, developed in collaboration with IBM, aims to engage these users and ease their transition into this fast-evolving remote environment. By the end of development, we hope to deliver a cross-platform application for mobile and desktop in which users can interact with their own pet, which will act as their guide and respond to user commands and speech input.

Interviews

Interview with elderly person

Do you live alone?

Yes

How often do you see friends or family?

I have a regular lunch with some friends once a week, and family members stop in occasionally, so one to three times per week.

How confident are you with technology?

I think I generally understand what’s going on, but I struggle to tap the right bit of my phone screen. I use Siri a lot; she makes everything a lot easier!

Would you be interested in an AI pet? (The concept was explained to him.)

I don’t think it’s going to be anything like social interaction, but I do think it could be a useful tool to make using technology easier.

What sort of things would you find most helpful for it to be able to do?

I think a lot of the things Siri can do, like telling me the time and playing music, would be nice. But to make specific use of the camera, maybe it could tell me what I’m looking at when I haven’t got my glasses on, or read out text.

Interview with nursing home worker

Do you think socialising is important for elderly people?

Yes, humans are social creatures and, particularly for people living alone, some kind of regular interaction is important so that they don’t forget how!

Do you think any value could be had from interaction with an AI?

An AI is obviously not going to be as good as real people, but it does still practice the skill of social interaction. Also, if they can interact with the internet through an AI interface (such as voice commands), it could allow them to connect with real people too.

What sort of user interface requirements do you think our users might have?

Different people will have a range of different needs, so it is probably best to design a couple of interaction styles. The two main considerations I would think of would be sight, and hearing. So you probably want any UI elements to be large, and sounds to be clear. And you should also be able to interact with as much as possible through BOTH visual and auditory methods.

What functionality would be most useful?

At its most basic, the time, the weather and maybe the news. Those are the three things we are asked about most frequently. Beyond that, I would say finding some way to interact with family members, and then maybe the full internet.

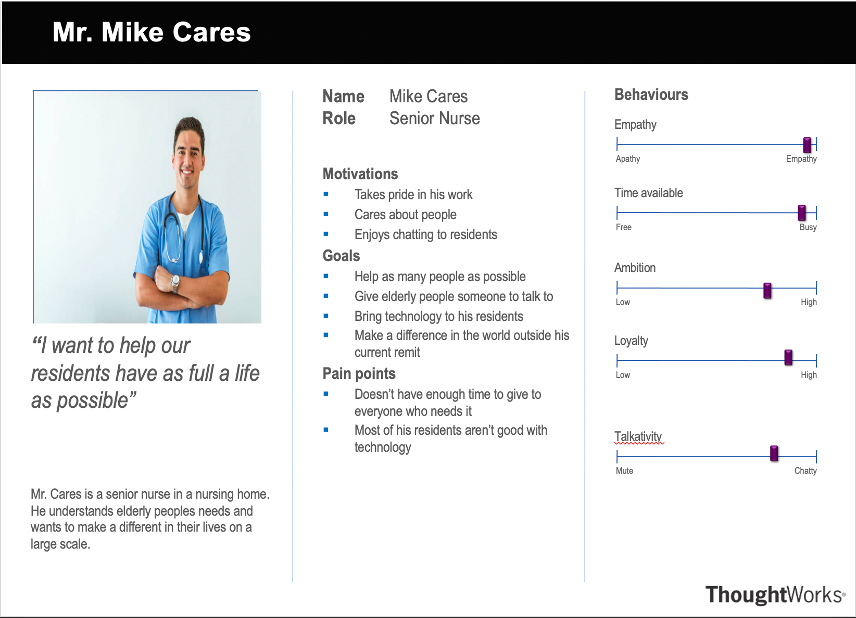

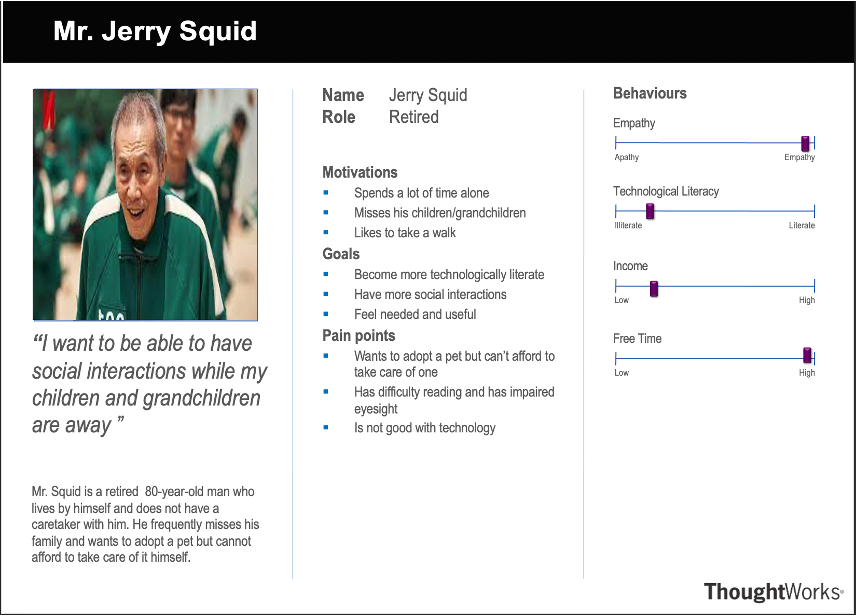

Personas

After conducting our interviews and gaining a better understanding of our potential user base, we produced two personas and accompanying scenarios to help us visualise our users' needs and define the purpose of our product.

The two personas helped us better understand which features and properties our application would require. They also let us review our requirements more holistically, since we focused not only on elderly people but also on anyone unfamiliar with smartphones and related technologies.

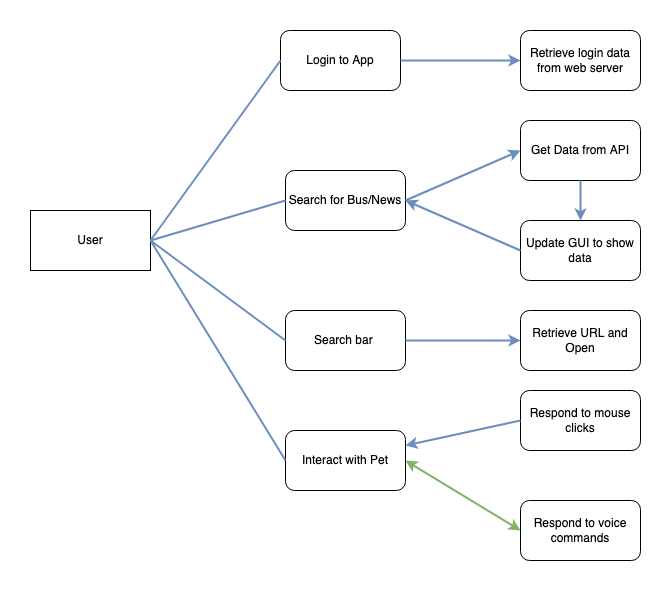

Use Case Diagram

Building on our interviews and personas, we produced a use case diagram showing the basic interactions a user could have with our system. A green arrow on the diagram indicates that the feature will be available through speech-to-text input.

MoSCoW List

Must Haves

- AR character that can be overlaid on the real world

- Automatic Speech Recognition (speech-to-text input) and text-to-speech output

- Search for bus and Tube times

- Search for daily news via keyword

- Backend Web Server to store user information and provide cross-application support

- Pet animations when the user interacts with the pet

- Website / Development Blog

- APIs

Should Haves

- Widgets for the app (such as a music scrollbar and an alarm clock)

- Social media integration (e.g. posting a tweet via the pet)

- Cross-platform integration to transfer the pet between devices

Could Haves

- Personalized media and news display based on user preferences

- Notifications and reminders when the user hasn't logged in for a while

- Feeding schedule for the pet, as with a Tamagotchi

- Pet asks users about their day and learns their interests from their answers

Won't Haves

- Sentient AI pet connected to robots

- WebGL version

- Pet integration with Virtual Reality

- Multi-language support for the app