During development, we followed a test-driven approach based on unit testing. It helped us catch bugs before adding new functionality, making the project more maintainable and scalable. We built the software in small steps, adding corresponding tests for each new part of the code.

Unit testing

The main type of testing we used was unit testing. We aimed for decent back-end coverage by the end of the project (i.e. >50%). When implementing a feature, we created unit tests to check that it was functioning correctly. We tried to create tests for as many branches as we could to avoid unexpected behavior. To be as complete as possible, we tested both the intended behavior of our code and its exception-throwing behavior. All failed tests were investigated, and we tried to fix the corresponding bugs immediately. However, some bugs only appeared at deployment, and we could not cover them with unit tests.
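As an illustration of testing both the intended behavior and the exception-throwing behavior, here is a minimal sketch using Python's standard `unittest` module. The helper `parse_page_number` is hypothetical and is not taken from our code base; it only stands in for a small back-end function with one normal branch and two error branches.

```python
import unittest


def parse_page_number(raw: str) -> int:
    """Hypothetical helper: convert a query-string value to a positive page number."""
    page = int(raw)  # raises ValueError on non-numeric input
    if page < 1:
        raise ValueError("page must be >= 1")
    return page


class ParsePageNumberTest(unittest.TestCase):
    def test_valid_page_is_returned_as_int(self):
        # Intended behavior: a numeric string becomes an int.
        self.assertEqual(parse_page_number("3"), 3)

    def test_non_numeric_page_raises(self):
        # Exception branch: input that cannot be parsed.
        with self.assertRaises(ValueError):
            parse_page_number("abc")

    def test_zero_page_raises(self):
        # Exception branch: parsed, but out of range.
        with self.assertRaises(ValueError):
            parse_page_number("0")
```

Saved in a test module, these cases can be run with `python -m unittest`. Each branch of the function has one dedicated test, which is the pattern we tried to follow when aiming for branch coverage.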

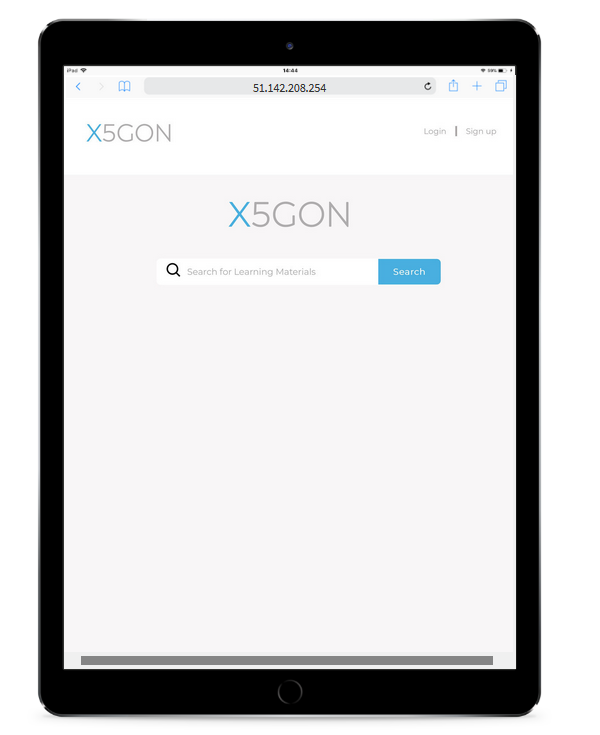

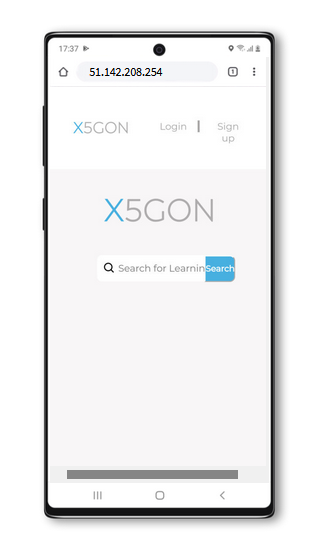

Responsive design testing

Using responsive web design testing tools like Matt Kersley's and BrowserStack, we were able to test the design of our website on different devices such as phones and tablets. While developing the front-end and the interface, we tried to make the application accessible to mobile users. As you can see in the images, the application is usable on iPad and Samsung devices. However, it is not perfect and some content sometimes does not display properly. Furthermore, the application is not optimised for very small screens (e.g. 320 x 480 px).

Performance testing

During the project, we performed two types of performance testing:

- Time efficiency testing: User feedback indicated that search was too slow. To tackle that, we decided to implement time efficiency testing.

We timed each method in the search route of the back-end API to find the parts that took the most time. We found that the bottleneck was adding metadata to the results, which required sending one query per document to the X5GON API. Each request took around 0.5 seconds, and the requests were sent one after another, so the delay grew with the number of results. To address this, we implemented parallel requests.

- Search accuracy testing: Using another dataset, we tested our different search algorithms on a manually labelled judgement list. More details are included in the Research tab.
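The time efficiency work described above (timing each method, then replacing one-by-one metadata requests with parallel ones) can be sketched as follows. This is an illustration under assumptions, not our actual back-end code: `fetch_metadata` is a hypothetical stand-in that simulates network latency with `time.sleep` instead of calling the X5GON API, and the names `timed`, `add_metadata_sequential` and `add_metadata_parallel` are invented for the example.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from functools import wraps


def timed(func):
    """Decorator that prints how long each call to func takes."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            elapsed = time.perf_counter() - start
            print(f"{func.__name__}: {elapsed:.3f}s")
    return wrapper


def fetch_metadata(doc_id):
    """Hypothetical stand-in for one metadata request per document."""
    time.sleep(0.05)  # simulated latency, not a real API call
    return {"id": doc_id, "title": f"Document {doc_id}"}


@timed
def add_metadata_sequential(doc_ids):
    # One request after another: total time grows linearly with the results.
    return [fetch_metadata(doc_id) for doc_id in doc_ids]


@timed
def add_metadata_parallel(doc_ids, max_workers=10):
    # Threads suit this case because the work is waiting on I/O.
    # executor.map returns results in the same order as the input.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch_metadata, doc_ids))
```

Calling both functions on the same list of document identifiers prints the two timings side by side and returns identical results, which makes it easy to confirm that the parallel version only changes the speed, not the output.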

We asked our clients and Teaching Assistant for feedback in order to make user experience more pleasant. As developers, it is hard to have an objective opinion of our design as we are accustomed to it and know how it works. We simply showed them the deployment version of our project and asked them to use it the way they would if they wanted to use the tool.

The feedback was the following:

- There was no way to tell the users that the engine was searching, and when the search took long they could not know if it was a bug or not. To solve that we added a "Loading" animation to the search front-end.

- The loading time for search was too long: testers felt that the search engine was slower than other search engines they were used to. To solve that, we implemented parallel requests to the X5GON API.

- Users found it annoying that clicking on a search result opened it in the same tab, making them lose the results page. To solve that, we decided to open content from the search results in a new tab.

- Users were disoriented by having to search again after adding filters in order to see the filtered results. To solve that, we could in the future launch a new search request each time filters are added.

Other feedback was also received and acted upon, such as the lack of a pop-up message on log-in.