Testing

Testing Strategy

Objective

Superhero Sportsday: Winter Edition is a game that requires evaluation in two main areas: Engagement and User Compatibility.

Because the primary target users are young individuals with various autistic symptoms, we first needed to ensure that the level designs fell within the users’ interests, so that they could stay interested and engaged over a sustained period of play.

The second aspect is in-game difficulty. Setting an appropriate difficulty for each level is crucial to giving users a positive experience, which is especially important given the uniqueness of this game’s target user pool (Buncher, 2013).

Scope

Target

- Primary Target Users: Children with autistic symptoms who will be mainly interacting with the in-game levels.

- Secondary Target Users: Occupational therapists who will be guiding the primary users and interacting mainly with game settings and the UI.

Core Gameplay Features

- Compatibility of the primary users with the implemented motion input technology.

- Determine areas and features primary users find intriguing.

- Determine areas and features primary users find uncomfortable or disengaging.

- Emphasize features based on individual preferences and tendencies.

Technical Accessibility

- Compatibility with PCs used by the occupational therapists.

- Customization options to support individual differences in primary users' needs.

Methodology

User-Centered Design Approach

- Collaborate with occupational therapists to define key mechanics and ensure therapeutic value.

- Gather feedback from both children and therapists to refine game mechanics and improve overall design.

Iterative Development and Testing

- Prototype & Playtesting: Develop small iterations and test with users in controlled environments.

- Feedback Integration: Maintain regular feedback loops with therapists and educators.

- Usability Testing: Ensure the game is intuitive for children and easy for therapists to facilitate.

Adaptive and Inclusive Gameplay Mechanics

- Provide adjustable motion input settings for users with different motor and sensory needs.

- Include adjustable motion input trigger icons, keyboard controls, and configurable setting values.

Unit & Integration Testing

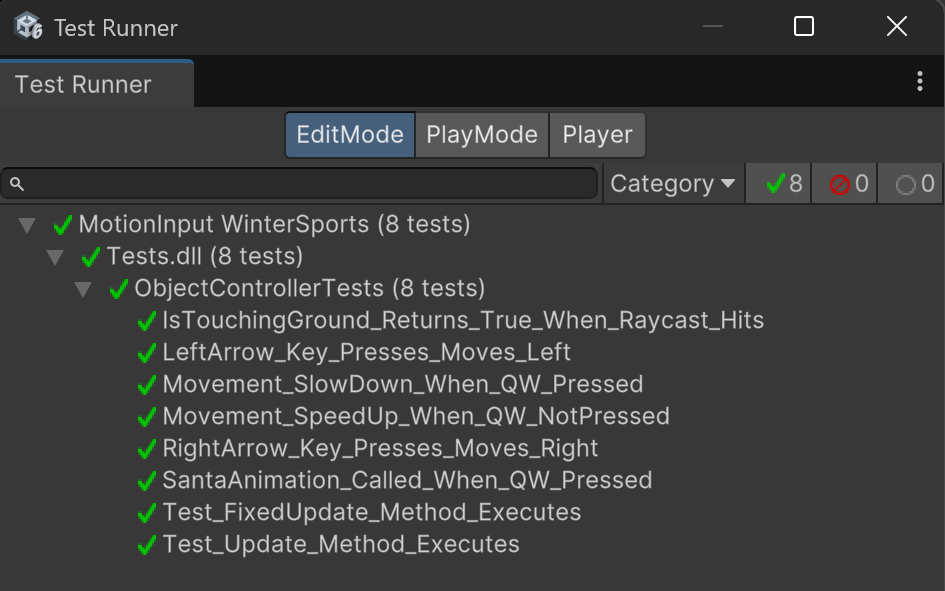

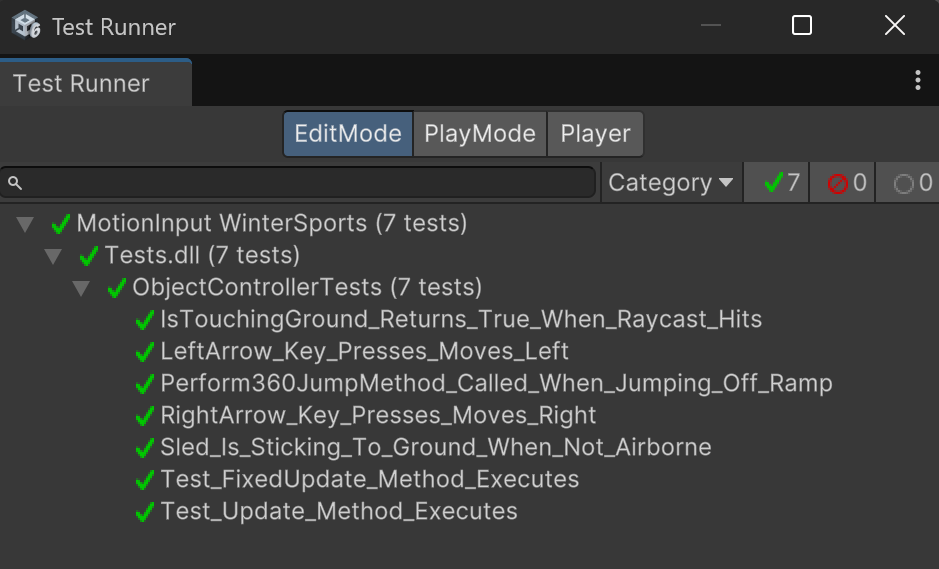

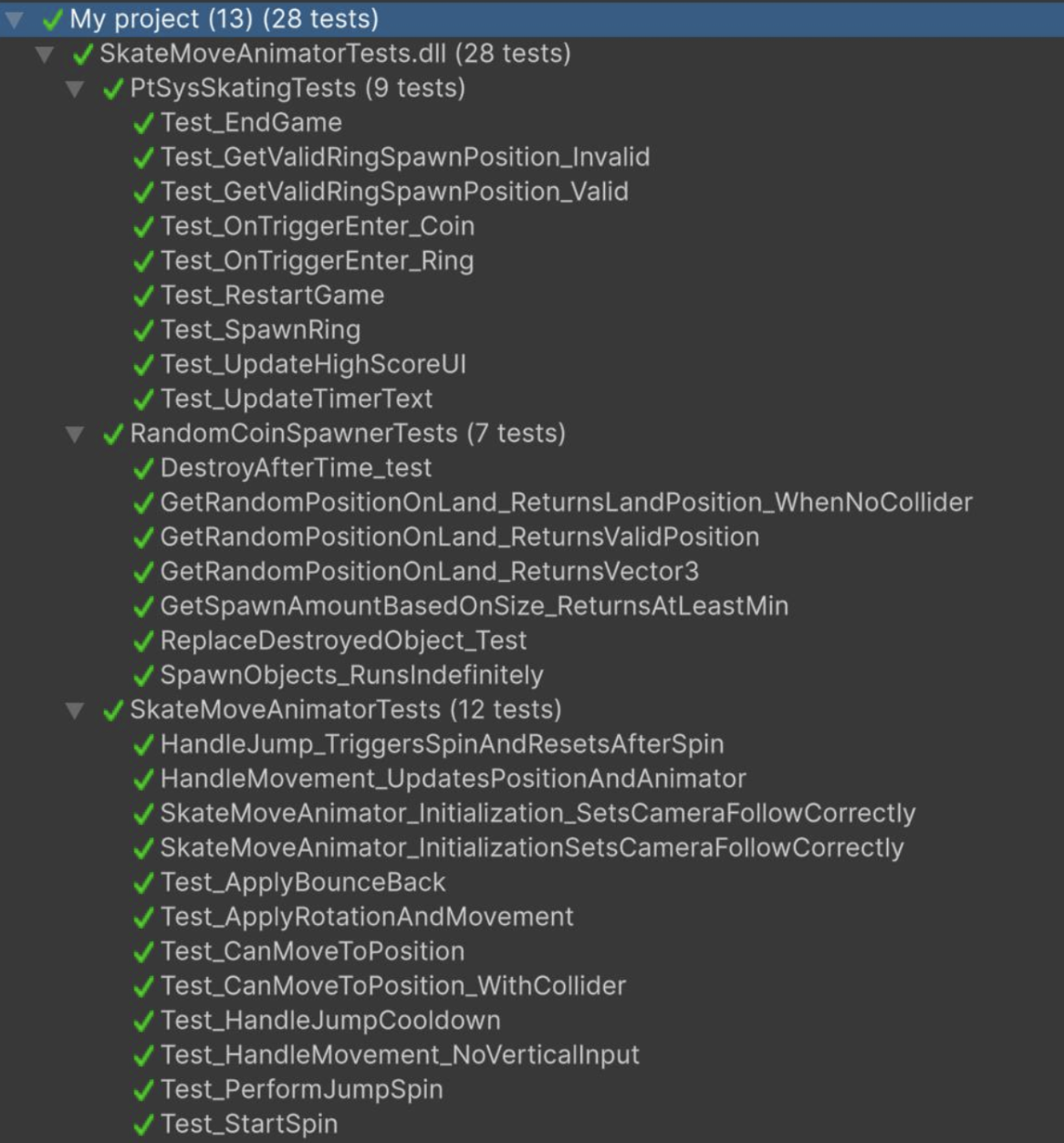

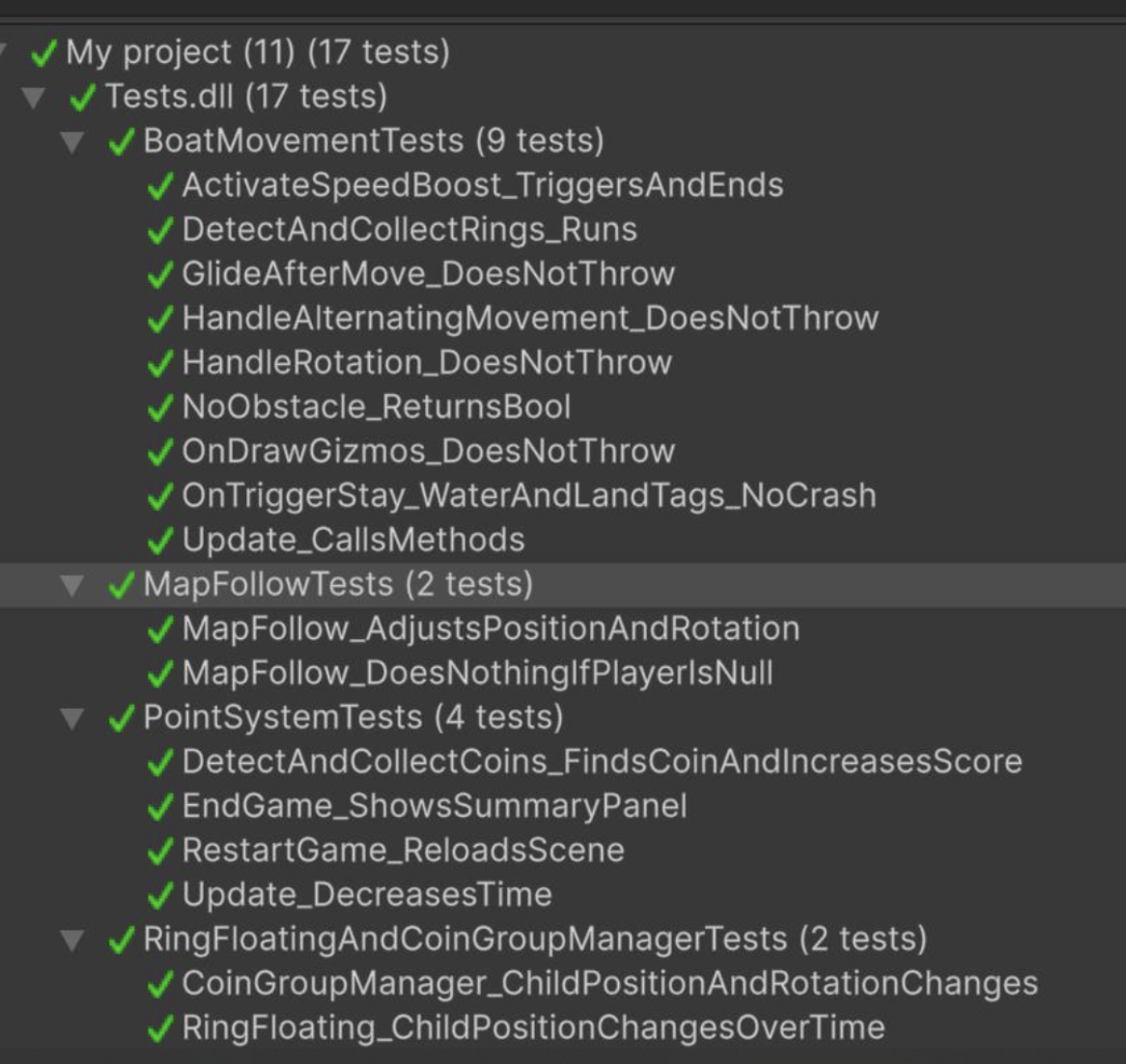

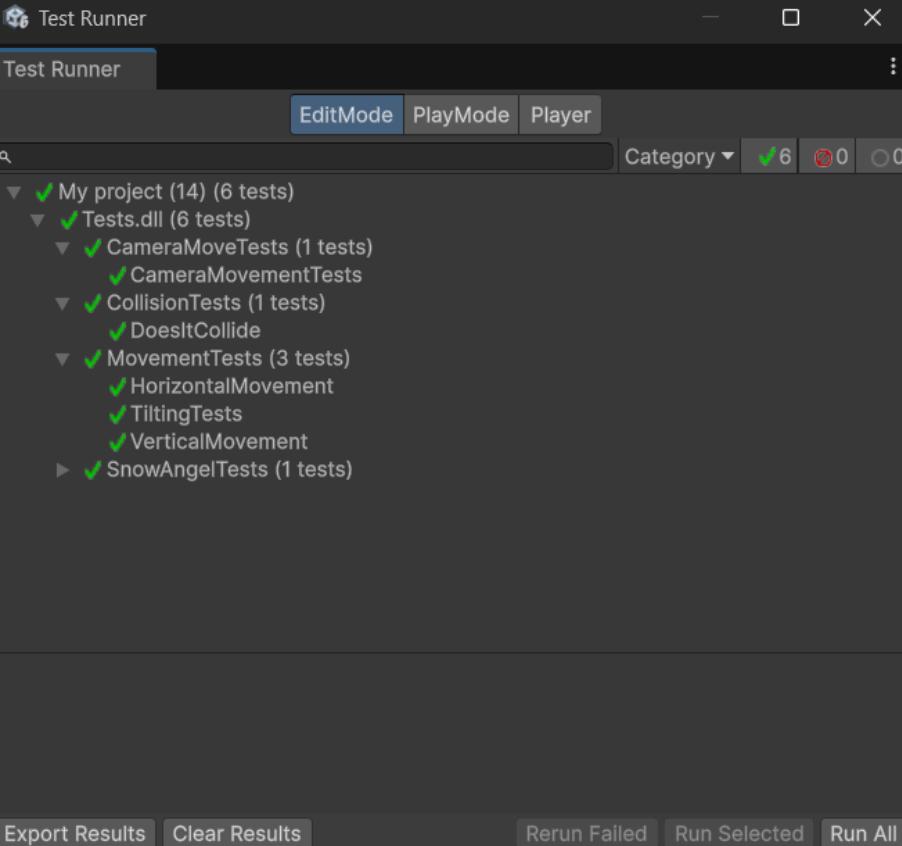

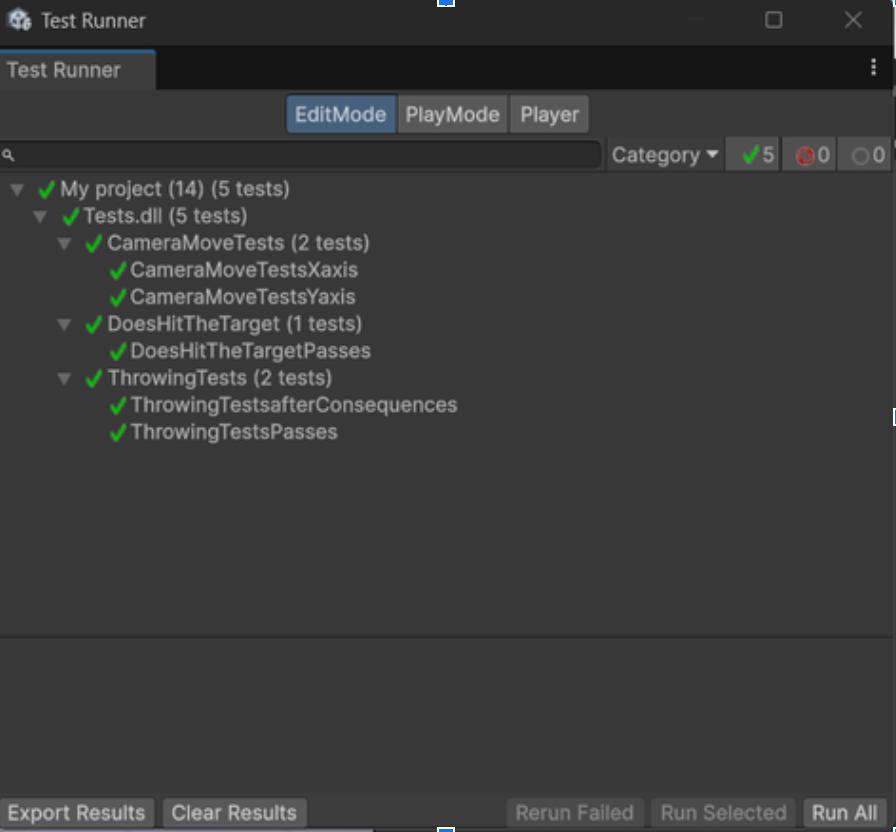

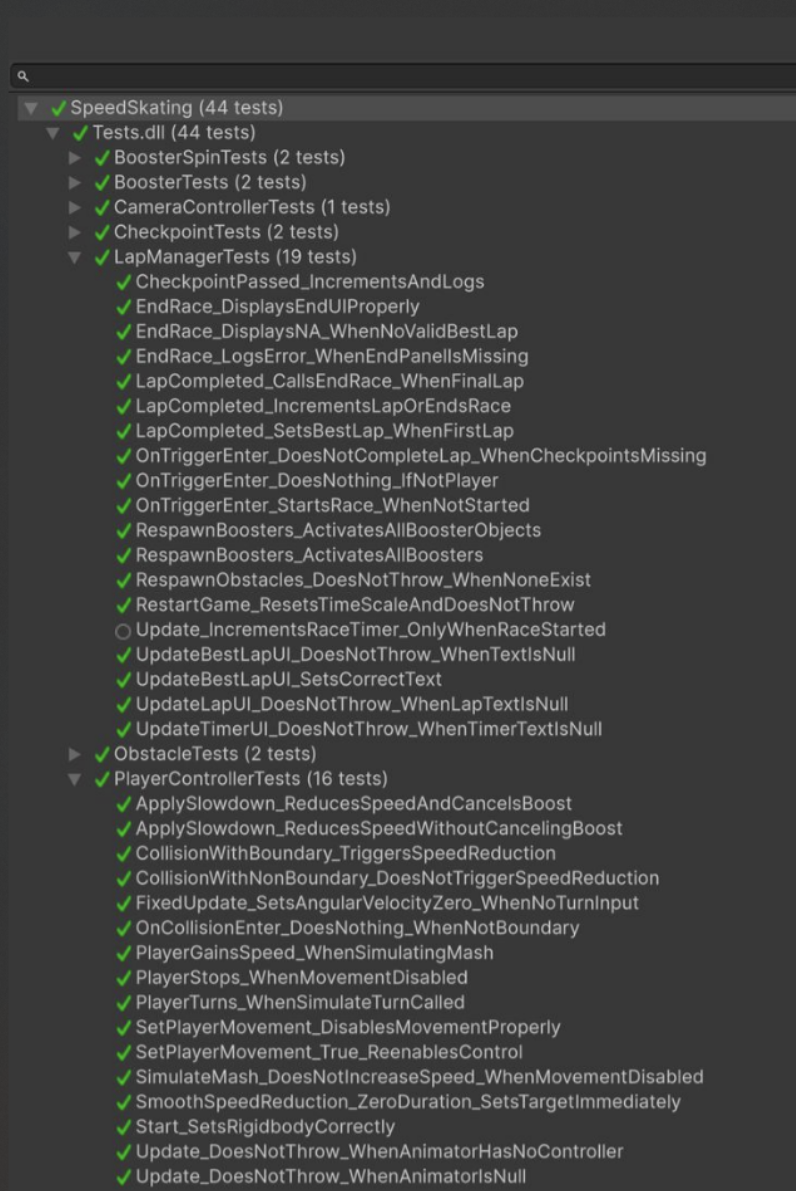

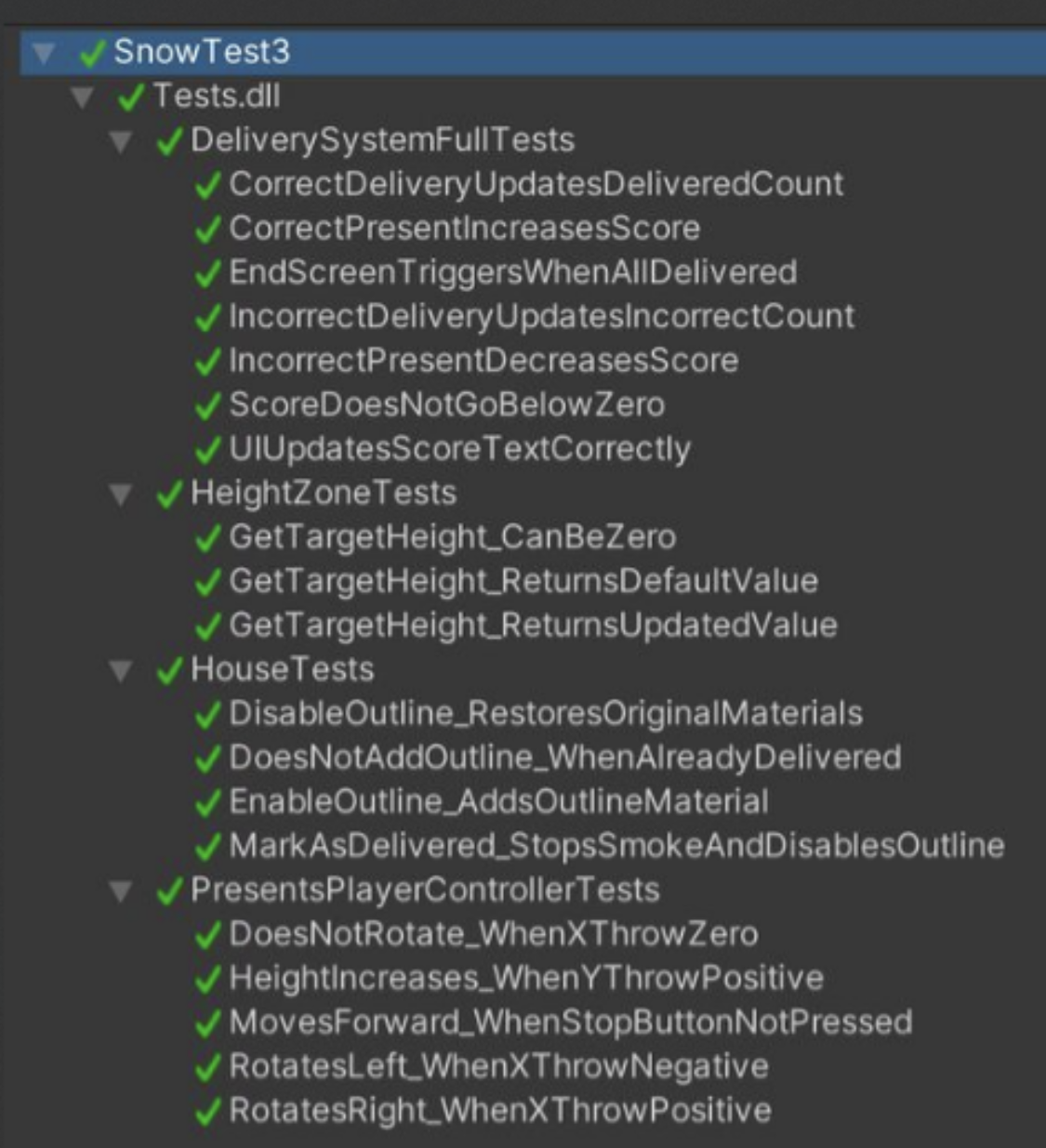

Unit Testing

Our project contains 8 winter sports levels, each built on its own logic and mechanics in accordance with the client’s requirements. As with any Unity project, each level has to be tested to ensure that it is fully functional and free of major bugs.

This testing scope includes core behaviours such as:

- Executing correct responses upon receiving user inputs

- Ensuring Rigidbody physics behave as expected

- Validating cross-references between methods and classes

- Verifying internal logic and control flow correctness

Testing is performed separately for each level. At least one key controller script is tested per level, along with its integration with other components where applicable. If another script plays a significant role in the game logic, it is also tested independently.

We use Unity’s built-in Test Runner to conduct our tests. This tool suits the project because it works directly with Unity components and GameObjects. We use:

- Edit Mode: for fast unit tests and logic validation

- Play Mode: to isolate a scene and simulate real in-game behaviour

Achieving a high pass rate across these tests helps ensure minimal runtime errors and more consistent gameplay behaviour.

Integration Testing

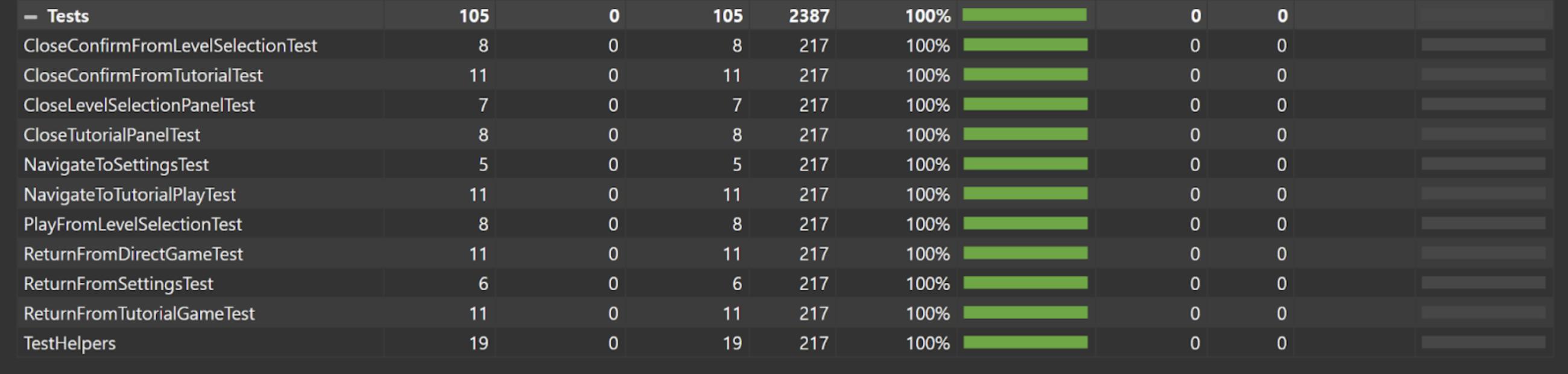

After the pass rates of the 8 levels were evaluated separately through unit testing, integration testing was carried out on the whole compiled project, including UI features such as the settings page, level selection, and settings customization.

This is where we must ensure 100% coverage, as an error in the homepage UI could lead to fatal functional issues. The key criterion was that the user could freely enter and exit every scene without exceptions; failing this would leave the user stuck on a specific screen, unable to take any further action.

```csharp
using System.Collections;
using NUnit.Framework;
using UnityEngine;
using UnityEngine.SceneManagement;
using UnityEngine.TestTools;
using UnityEngine.UI;
using UnityEngine.EventSystems;

public class UIFlowIntegrationTest
{
    [UnitySetUp]
    public IEnumerator SetUp()
    {
        // Restore normal time in case a previous test left the game paused.
        Time.timeScale = 1f;
        // Every test starts from the home page.
        SceneManager.LoadScene("HomePage");
        yield return new WaitForSeconds(1f);
    }

    [UnityTest]
    public IEnumerator NavigateToSettings()
    {
        yield return ClickButton("setting");
        Assert.IsNotNull(GameObject.Find("close (2)"), "Settings panel not found.");
    }

    [UnityTest]
    public IEnumerator ReturnFromSettingsToHome()
    {
        yield return ClickButton("setting");
        yield return ClickButton("close (2)");
        Assert.AreEqual("HomePage", SceneManager.GetActiveScene().name);
    }

    [UnityTest]
    public IEnumerator NavigateToTutorialPlay()
    {
        yield return ClickButton("play");
        yield return ClickButton("tutorial");
        // Page through the six tutorial screens.
        for (int i = 0; i < 6; i++)
            yield return ClickButton("next");
        yield return ClickButton("play (1)");
        yield return ClickButton("confirm");
        yield return new WaitForSeconds(2f);
        Assert.AreEqual("SkatingScene", SceneManager.GetActiveScene().name);
    }

    [UnityTest]
    public IEnumerator ReturnFromGameToHome_AfterTutorial()
    {
        yield return PauseAndReturnHome();
    }

    [UnityTest]
    public IEnumerator PlayDirectlyFromLevelSelectionPage()
    {
        yield return ClickButton("play");
        yield return ClickButton("play (1)");
        yield return ClickButton("confirm");
        yield return new WaitForSeconds(2f);
        Assert.AreEqual("SkatingScene", SceneManager.GetActiveScene().name);
    }

    [UnityTest]
    public IEnumerator ReturnFromGameToHome_AfterDirectPlay()
    {
        yield return PauseAndReturnHome();
    }

    [UnityTest]
    public IEnumerator CloseTutorialPanel_ReturnsToLevelSelection()
    {
        yield return ClickButton("play");
        yield return ClickButton("tutorial");
        yield return ClickButton("close");
        yield return new WaitForSeconds(1f);
        Assert.IsNotNull(GameObject.Find("close (1)"), "Did not return to level selection from tutorial.");
    }

    [UnityTest]
    public IEnumerator CloseConfirmPanelFromTutorial_ReturnsToTutorial()
    {
        yield return ClickButton("play");
        yield return ClickButton("tutorial");
        for (int i = 0; i < 6; i++)
            yield return ClickButton("next");
        yield return ClickButton("play (1)");
        yield return ClickButton("close (3)");
        yield return new WaitForSeconds(1f);
        Assert.IsNotNull(GameObject.Find("play (1)"), "Did not return to tutorial after closing confirm from tutorial.");
    }

    [UnityTest]
    public IEnumerator CloseConfirmPanelFromLevelSelection_ReturnsToLevelSelection()
    {
        yield return ClickButton("play");
        yield return ClickButton("play (1)");
        yield return ClickButton("close (3)");
        yield return new WaitForSeconds(1f);
        Assert.IsNotNull(GameObject.Find("close (1)"), "Did not return to level selection after closing confirm from direct play.");
    }

    [UnityTest]
    public IEnumerator CloseLevelSelectionPanelFromHome()
    {
        yield return ClickButton("play");
        yield return ClickButton("close (1)");
        yield return new WaitForSeconds(1f);
        Assert.AreEqual("HomePage", SceneManager.GetActiveScene().name);
    }

    // Shared by both "return from game" tests: load a level, pause it, and use
    // the pause panel's back button to get back to the home page.
    private IEnumerator PauseAndReturnHome()
    {
        SceneManager.LoadScene("SkatingScene");
        yield return new WaitForSeconds(1f);

        var manager = GameObject.Find("GlobalEndGameManager");
        Assert.IsNotNull(manager, "GlobalEndGameManager not found.");
        manager.SendMessage("ActivatePausePanel");

        // Pausing may set Time.timeScale to 0, which would stall WaitForSeconds,
        // so wait in real time before restoring the time scale.
        yield return new WaitForSecondsRealtime(1f);
        Time.timeScale = 1f;

        var backButton = GameObject.Find("BackToSelectionButton");
        Assert.IsNotNull(backButton, "BackToSelectionButton not found.");
        yield return SimulateClick(backButton);
        yield return new WaitForSeconds(2f);

        Assert.AreEqual("HomePage", SceneManager.GetActiveScene().name);
    }

    // Finds a UI button by GameObject name and clicks it.
    private IEnumerator ClickButton(string objectName)
    {
        yield return new WaitForSeconds(0.5f);
        var go = GameObject.Find(objectName);
        Assert.IsNotNull(go, $"Button '{objectName}' not found.");
        var btn = go.GetComponent<Button>();
        Assert.IsNotNull(btn, $"'{objectName}' has no Button component.");
        yield return SimulateClick(go);
    }

    // Assumed helper implementation: sends a pointer-click event through the
    // EventSystem, the same path a real click takes, so a non-interactable
    // Button will not fire its onClick listeners.
    private IEnumerator SimulateClick(GameObject target)
    {
        var pointer = new PointerEventData(EventSystem.current);
        bool handled = ExecuteEvents.Execute(target, pointer, ExecuteEvents.pointerClickHandler);
        Assert.IsTrue(handled, $"'{target.name}' did not handle the click.");
        yield return null; // let the UI update for one frame
    }
}
```

Integration Coverage Summary

We wrote test methods and ran them with the Unity PlayMode Test Runner to verify that users could consistently reach every layer of the game and transition between scenes without any exceptions.

One core focus was ensuring that the user could always return to the previous screen by clicking the X (close) button from any main UI panel. This reverse-routing capability ensured that users were never stuck and could always return to the homepage.

We also tested transitions between UI menus and in-game scenes. Specifically, we checked that:

- The correct scenes loaded consistently when the user began a game.

- The pause panel allowed users to exit levels mid-play and return to the level selection screen.

- All UI flow routes allowed returning to the homepage without breaking the game state.
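The “never stuck” property these tests check can also be expressed as a graph property: from every screen, some sequence of buttons must lead back to the home page. The sketch below assumes a hand-written model of the routes exercised by the PlayMode tests; the screen labels and the `NavigationCheck` class are hypothetical and not part of the project. Context-dependent routes, such as the confirm panel’s close button returning to whichever screen opened it, are simplified to an edge to each possible destination. A breadth-first search from each screen reports any screen that cannot reach home.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical model of the UI flow: each screen lists the screens its buttons lead to.
public static class NavigationCheck
{
    public static readonly Dictionary<string, string[]> Routes = new Dictionary<string, string[]>
    {
        ["Home"] = new[] { "Settings", "LevelSelection" },
        ["Settings"] = new[] { "Home" },                          // X (close) button
        ["LevelSelection"] = new[] { "Home", "Tutorial", "Confirm" },
        ["Tutorial"] = new[] { "LevelSelection", "Confirm" },
        // Close returns to whichever screen opened the confirm panel.
        ["Confirm"] = new[] { "LevelSelection", "Tutorial", "Game" },
        ["Game"] = new[] { "Home" },                              // pause panel back button
    };

    // Breadth-first search: returns true if `target` is reachable from `start`.
    public static bool CanReach(Dictionary<string, string[]> routes, string start, string target)
    {
        var visited = new HashSet<string> { start };
        var queue = new Queue<string>();
        queue.Enqueue(start);
        while (queue.Count > 0)
        {
            var screen = queue.Dequeue();
            if (screen == target) return true;
            if (!routes.TryGetValue(screen, out var next)) continue; // no outgoing routes
            foreach (var n in next)
                if (visited.Add(n)) queue.Enqueue(n);
        }
        return false;
    }

    // Every screen (source or destination) from which `home` cannot be reached.
    public static List<string> StuckScreens(Dictionary<string, string[]> routes, string home) =>
        routes.Keys
            .Concat(routes.Values.SelectMany(v => v))
            .Distinct()
            .Where(s => !CanReach(routes, s, home))
            .ToList();
}
```

Adding a screen to the model without any route back, for example, would make `StuckScreens` return that screen, flagging the gap before a PlayMode test is written for it.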

With 100% coverage of the critical scene transitions and navigation paths, the integration results confirm that the UI logic and flow are structurally sound.

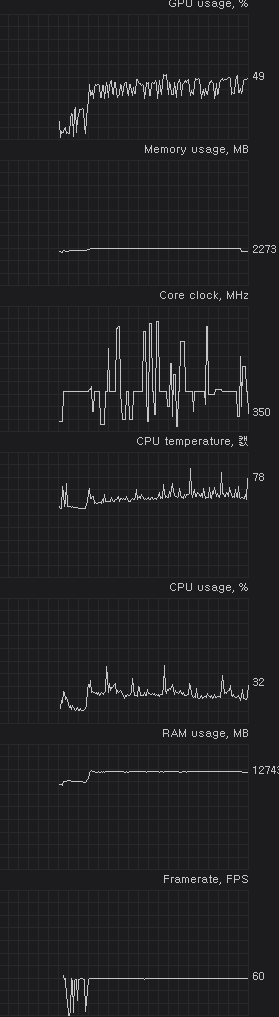

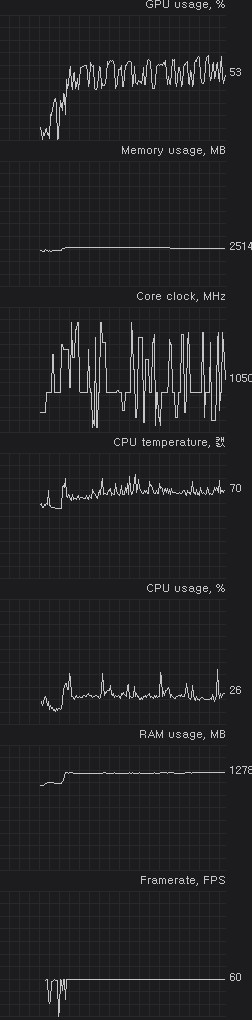

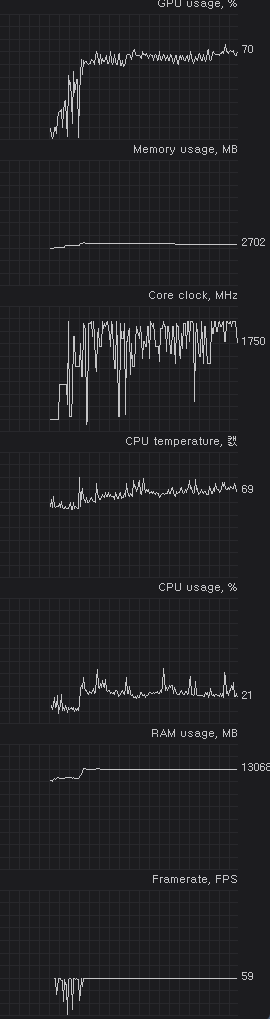

Performance Testing

It is essential that the executable we provide is compatible with our clients’ computer specifications, especially as most of our potential users are likely to be in government-funded organizations. Our game must run smoothly on low-end hardware, so we conducted a series of performance tests.

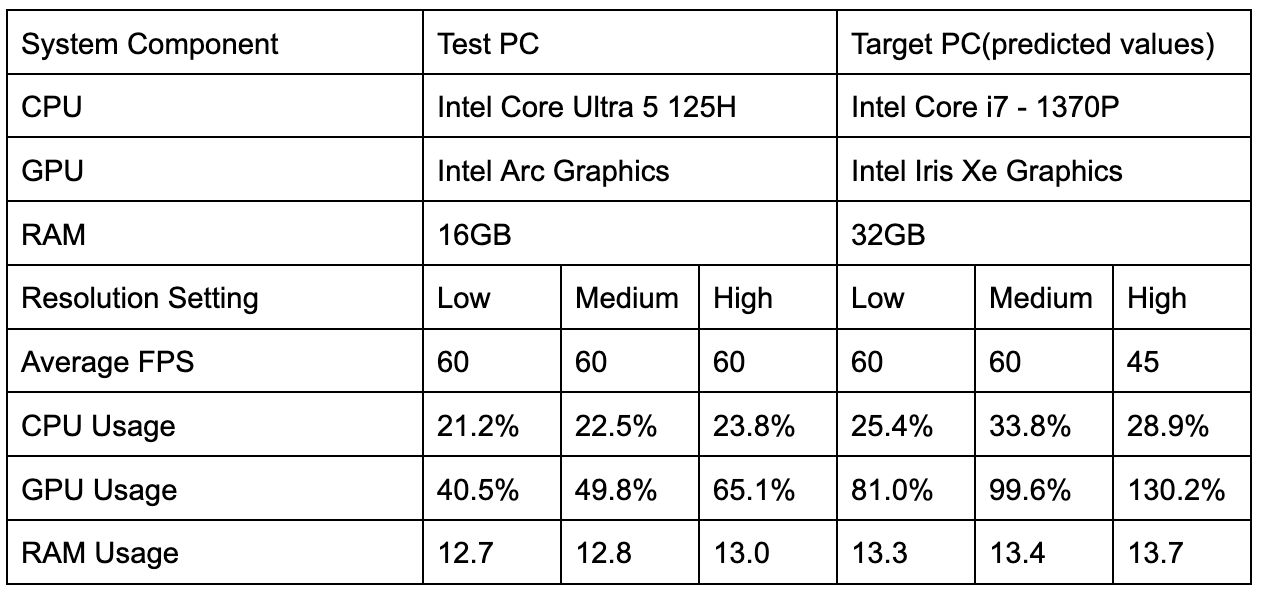

We ran a comparative performance test. Because we could not run tests directly on the clients’ devices, we measured performance on our own test PC and obtained the specification of the weakest laptop currently in use at NAS. By comparing the CPU, GPU, and RAM of the two devices, we derived usage multipliers that approximate the percentage usage of each component on the target (minimum-spec) PC.

Predicted Performance Comparison Table

The comparison table lists detailed metrics for the test PC alongside the predicted values for the target PC.

System Multiplier Values

- CPU Multiplier: 1.2

- GPU Multiplier: 2.0

- RAM Multiplier: 1.05

The baseline test on the test device shows that the game’s CPU and RAM usage remain stable across all resolution settings. The system maintains a consistent 60 FPS as long as GPU usage stays below 100%.

However, GPU usage on the test machine increases significantly as resolution increases. Applying the GPU multiplier of 2.0 predicts that medium resolution will use close to 100% of the GPU on the target PC, while high resolution will exceed it.
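The prediction amounts to scaling each test-PC reading by its component multiplier. A minimal sketch, assuming usage scales linearly with the multiplier (a simplification, since real GPU load does not scale perfectly linearly); the `UsagePrediction` class and its method names are hypothetical, while the multiplier values are the ones listed above. With a GPU multiplier of 2.0, any test-PC GPU reading of 50% or more predicts that the target PC’s GPU would reach or exceed 100%.

```csharp
using System;

// Hypothetical helper: estimates component usage on the minimum-spec target PC
// by linearly scaling a percentage measured on the test PC.
public static class UsagePrediction
{
    // Multipliers quoted in the performance test section.
    public const double CpuMultiplier = 1.2;
    public const double GpuMultiplier = 2.0;
    public const double RamMultiplier = 1.05;

    // Raw prediction; values of 100 or more mean the component would be saturated.
    public static double PredictUsage(double measuredPercent, double multiplier)
    {
        if (measuredPercent < 0 || measuredPercent > 100)
            throw new ArgumentOutOfRangeException(nameof(measuredPercent));
        return measuredPercent * multiplier;
    }

    // True when predicted GPU usage stays below 100%, the condition under which
    // the test PC held a steady 60 FPS.
    public static bool LikelyHolds60Fps(double measuredGpuPercent) =>
        PredictUsage(measuredGpuPercent, GpuMultiplier) < 100.0;

    // Highest test-PC reading that still predicts exactly 100% on the target;
    // readings must stay strictly below this value.
    public static double MaxSafeMeasuredPercent(double multiplier) => 100.0 / multiplier;
}
```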

This result was expected: our partner organization works with low-end hardware, which is why we implemented a scalable resolution setting in the game. Because client devices vary, this scaling feature keeps the game accessible across different setups. The device used for prediction was the lowest-end system currently available at NAS, so if the game runs smoothly at 60 FPS on that machine at a suitable resolution, it should also perform well on the other client machines.

Furthermore, NAS informed us by email of their intention to purchase a new device to run our game. Based on our performance analysis, we recommended a specification that keeps both CPU and GPU usage at around 50%, offering reliable performance for therapeutic use.

User Acceptance Testing

After completing a prototype of each level, we had the chance to visit Helen Allison School, a special needs school affiliated with NAS. Under the guidance of occupational therapists and on-site staff, we conducted a proxy-based observational field case study of our game.

Due to various ethical considerations, we were not able to directly communicate with the children for live feedback; however, the staff and therapists served as proxies to collect responses and interact with the participants regarding various features of the game.

The test sessions were arranged so that each student participant could play the games live at least once, with additional trials on request. Approximately 40 individuals took part in total. We recorded 26 official responses from the children and the staff/therapists, along with numerous unofficial verbal responses given during play testing.

Proxy-Based Beta Testing

The testing took place in a real-world environment with actual users, under specific, limited conditions. Through this experience, we received feedback on the game from both our primary users (children participants) and our secondary users (occupational therapists & staff members).

Business Acceptance Testing

Our project is designed to support occupational therapists and related experts in delivering occupational therapy in special environments, which makes business alignment critical. By observing how the children interacted with the game, the therapists provided feedback and insight to verify that the game meets its intended educational and therapeutic goals, and identified areas for improvement to enhance the overall experience.

Feedback from User Testing

Common Beta Testing Feedback

- Controlling difficulty: 3

- Camera angle & adjustment: 2

- Motion trigger adjustment: 3

Common Business Acceptance Testing Feedback

- Motion trigger adjustment: 2

- Camera angle & adjustment: 2

Problems Needed to Be Solved

Controlling Difficulty

We added an "Adjust Speed" feature to the settings page, allowing velocity adjustment in the relevant games. Instead of a slider, we implemented preset options (slow, normal, fast) to ensure stable gameplay performance at every speed.
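A fixed set of presets can be modelled as an enum mapped to velocity multipliers. This is a hypothetical sketch: the `SpeedPreset` and `SpeedSettings` names and the 0.5 / 1.0 / 1.5 multipliers are illustrative assumptions, not the game’s actual values.

```csharp
using System;

// Hypothetical: the three speed presets offered in the settings page.
public enum SpeedPreset { Slow, Normal, Fast }

public static class SpeedSettings
{
    // Illustrative multipliers only; the game's real values are not documented here.
    public static double Multiplier(SpeedPreset preset) => preset switch
    {
        SpeedPreset.Slow => 0.5,
        SpeedPreset.Normal => 1.0,
        SpeedPreset.Fast => 1.5,
        _ => throw new ArgumentOutOfRangeException(nameof(preset)),
    };

    // Scales a level's base velocity by the selected preset.
    public static double ApplyTo(double baseVelocity, SpeedPreset preset) =>
        baseVelocity * Multiplier(preset);
}
```

Because only three velocities are possible, each one can be play-tested in every relevant level, which is what makes presets easier to keep stable than a continuous slider.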

Camera Angle & Adjustment

Silhouettes were added to the motion input window to help users align themselves properly within the ideal camera frame. This improves input accuracy by ensuring optimal player positioning.

Motion Trigger Visibility & Location

- Trigger colour changed from grey to green to improve visibility

- Motion input window now allows real-time adjustment of trigger location using click-and-drag functionality

Conclusion

Although the uniqueness of the user pool and ethical considerations prevented us from running a continuous series of feedback loops, the comments and feedback we received from the tests were extremely helpful in determining the scope desired by the clients and users.

We gained a better understanding of which features work for the children, and which features the children struggle with and would be better changed or removed.

We would like to conclude this testing section by thanking everyone involved in the testing process, including the NAS therapists & staff, the children at Helen Allison School, the MotionInputGames team, Intel officials, Professor Dean, and the many other individuals who helped our team.