Testing and Evaluation

To ensure that our system meets quality standards, we will be placing a heavy emphasis on software testing throughout development. This page details the testing strategies for this project. Click the buttons below to jump to the corresponding section.

1. Unit Testing 2. Functional Testing 3. Compatibility Testing 4. Requirements Evaluation 5. Performance Testing 6. Acceptance Testing

Unit Testing

To ensure the integrity, completeness and correctness of our software, we developed a individual test suites which consisted of unit tests for both our front and back-ends. After developing a feature we would carry out regression testing by automatically running the test suites to ensure no new bugs are introduced.

Back-end - Python Modules

Since our backend consisted of Python modules, we used a full-featured testing tool called Pytest to create our unit tests. While developing the test suite for the backend, we made sure to individually test each python function with extreme, normal and abnormal data to ensure that our software was robust enough. In total we had 100 unit tests in our test suite.

Front-end - Angular.js

To construct unit tests for the front-end, we used Jasmine which is a behaviour driven development framework as well as Karma which is a test runner that is framework agnostic. we used Karma to run the tests written in Jasmine to run on different browsers. While constructing the unit tests we tried to test most of the important features of our UI. Overall we have 75 tests in our suite.

The locations of these unit tests can be found in the Project File Structure page of our technical documentation.

Functional Testing

Functional testing is used as a quality assurance process for a software system.It refers to activities that verify a specific action or function of the code. Functional test tends to answer the questions like “can the user do this” or “does this particular feature work”. In order to carry out our functional testing, we used our requirements specification to construct a series of test cases which were used for manual black-box testing.

Manual Testing

The test cases we used were built around the specification, system requirements and design parameters. For each test case we selected both valid and invalid inputs in order to determine whether the system produces the correct desired output. Each of the test cases were carried out manually, the type of test carried out and the result of each test is recorded in the table below:

| Test Scenario | Test Data | Test Data Type | Expected Results | Actual Results | Pass/Fail |

| Upload Screen | |||||

| Upload a CSV file | Valid CSV file | Normal | Parse file successfully and update spreadsheet with data | As expected | Pass |

| Upload a JSON file | Valid JSON file | Normal | Parse file successfully and update spreadsheet with data | As expected | Pass |

| Upload a XLS(X) file | Valid XLS(X) file | Normal | Parse file successfully and update spreadsheet with data | As expected | Pass |

| Upload a file with inconsistencies | Invalid CSV file | Abnormal | unable to parse file error appears | As expected | Pass |

| Select a sample size when uploading a file | Sample size = 50, (Normal Data) | Normal | Upload a percentage of the data-set based on the sample size, (sampling should be done randomly) | As expected | Pass |

| Select a sample size when uploading a file | Sample size = -10 | Abnormal | Input rejected | As expected | Pass |

| Specify a seed for sampling the data | Seed = 4 | Normal | Use the seed for sampling the data | As expected | Pass |

| Specify a seed for sampling the data | Seed = -50 | Abnormal | Input Rejected | Input accepted, File Parsing Failed | Fail |

| Specify a number of initial rows to skip when uploading a file | Skip=10 | Normal | Skip the first 10 rows of the file before uploading | As expected | Pass |

| Specify a number of initial rows to skip when uploading a file | Skip = -10 | Abnormal | Input rejected | As expected | Pass |

| Unmark the option for 'file includes column headers' | Uncheck the checkbox | Normal | Upload the dataset without column headers | As Expected | Pass |

| Clean Tab | |||||

| Create a duplicate of a column | Select a column | Normal | Create a duplicate of the column specified and add it to the dataset | As expected | Pass |

| Split a Column using a delimiter | Delimiter ='.' | Normal | Splits the data contained within the column into multiple columns if the delimiter is present | As expected | Pass |

| Split a Column that contains invalid values | Delimiter = '.' | Extreme | Split the data in the column if the delimiter is present | Operation Fails | Fail |

| Combine multiple columns into a new column | Separator = '@,' New column name = email | Normal | Combine the selected columns using '@' as a separator into a new column named 'email' | As expected | Pass |

| combine multiple columns into a new column | seperator = ' ' (space) | Extreme | Combine the selected columns using ' ' as a separator | The seperator is not used | Fail |

| Use combine feature on a single column | Select a single column to combine only | Abnormal | The user cannot run the operation | As expected | Pass |

| Impute missing data with the columns mean | Select a column with missing values | Normal | Missing values are filled with the column mean | As expected | Pass |

| Impute missing data with the columns mean | Select a column with no missing values | Extreme | Column stays the same | As expected | Pass |

| Impute missing data with the columns mode | Select a column with missing values | Normal | Missing values are filled with the column mode | As expected | Pass |

| Impute missing data with the columns mode | Select a column with missing values but with no mode | Extreme | Missing values are filled with any value contained within the column | As expected | Pass |

| Impute missing data with the columns median | Select a column with missing values | Normal | Missing values are filled with the column median | As expected | Pass |

| Impute missing data with the last valid value | Select a column with missing values | Normal | Missing values are filled with the last valid value | As expected | Pass |

| Impute missing data with the next valid value | Select a column with missing values | Normal | Missing values are filled with the next valid value | As expected | Pass |

| Impute missing data using linear interpolation | Select a column with missing values | Normal | Missing values are filled using linear interpolation | As expected | Pass |

| Impute missing data using spline interpolation | Spline order = 10 | Normal | Missing values are filled using spline interpolation | As expected | Pass |

| Impute missing data using spline interpolation | Spline order = -5 | Abnormal | Input rejected | As expected | Pass |

| Impute missing data using polynomial interpolation | Polynomial order = 3 | Normal | Missing values are filed using polynomial interpolation | As expected | Pass |

| Impute missing data using PHCIP interpolation | Select a column with missing values | Normal | Missing values are filed using PHCIP interpolation | As expected | Pass |

| Impute missing data in a specific column using a custom value | Custom value = "%£$^&^%* | Extreme | Missing values are filed using the custom value | As expected | Pass |

| Delete rows containing missing values in a single column | Select a column with missing values | Normal | Rows containing missing values should be deleted | As expected | Pass |

| Delete rows containing missing values in a single column | Select a column with no missing values | Extreme | Dataset should remain unchanged | As expected | Pass |

| Discretize the data in a column using bins | Number of Bins = 5 | Normal | Column data changed with discretized data | As expected | Pass |

| Discretize the data in a column using bins | Select a column that contains invalid values | Extreme | Column data changed with discretized data | Operation Fails | Fail |

| Specify custom ranges for discretizing a column | ranges = 1,20,30,40 | Normal | descretize the data using the range provided | As expected | Pass |

| Quantile the data in a column using bins | Number of Bins = 5 | Normal | Column data changed with Quantized data | As expected | Pass |

| Quantile the data in a column using bins | Number of Bins = -20 | Abnormal | Input rejected | As expected | Pass |

| Apply categorical feature encoding to a column | Select a column | Normal | Convert each record of the column to a combination of high and low bits | As expected | Pass |

| Apply categorical feature encoding to a column | Select a column with missing/invalid values | Extreme | Convert each record of the column to a combination of high and low bits | As expected | Pass |

| Apply min-max feature scaling to a numeric column | Range = 10 to 20 | Normal | Scale the data in the column according to the range specified | As expected | Pass |

| Apply min-max feature scaling to a numeric column | Range = -3543 to 294342 | Extreme | Scale the data in the column according to the range specified | As expected | Pass |

| Standardise the data in a column | Select a column | Normal | Standardise the data within the column | As expected | Pass |

| Find and replace all values within a column that match the input string | Match = 'hello', Replace = 'world' | Normal | Replace all occurences of the string 'hello' with the string 'world' | As expected | Pass |

| Apply find and replace feature to a column of type date | Select a colum of type date | Normal | Replace all values of the specified string with the replacement string | Operation fails | Fail |

| Use find and replace feature using a regular expression | Regex = [0-9]*, Replace = 10 | Normal | Replace all values that match the regex with the string '10' | As expected | Pass |

| Use find and replace feature using a regular expression | Regex = [0-9]*, Replace = 48"£$%^&*f | Extreme | Replace all values that match the regex with the string provided | As expected | Pass |

| filter the dataset by duplicates in a selected column | Select a column with duplicates | Normal | filter the data to display only duplicates in the selected column | As expected | Pass |

| filter the dataset by duplicates in a selected column | Select a column with no duplicates | Extreme | Filtered dataset should contain nothing | As expected | Pass |

| filter the dataset by Invalid values in a selected column | Select a column with invalid values | Normal | filter the data to display only rows containing invalid values in the selected column | As expected | Pass |

| filter the dataset by Invalid values in a selected column | Select a column with no invalid values | Extreme | Filtered dataset should contain nothing | As expected | Pass |

| filter the dataset using outliers in a selected column | Standard deviation = 2, Trim Percentage = 10 | Normal | filter the data to display only rows containing outliers in the selected column | As expected | Pass |

| filter the dataset using outliers in a selected column | Standard deviation = -24, Trim Percentage = -11 | Abnormal | Input rejected | As expected | Pass |

| Change the name of a column | New name = 'col1' | Normal | Change the column name to 'col1' | As expected | Pass |

| Change the name of a column | New name = '"£$%^&*(' | Extreme | Change the column name | As expected | Pass |

| Change the name of a column | Change the name to a name that is already present | Extreme | Reject the input | Operation fails | Fail |

| Change the type of a column from float to int | Select a column with no invalid values | Normal | Data type changes to int | As expected | Pass |

| Change the type of a column from float to int | Select a column with invalid values | Extreme | Data type changes to int | Operation fails | Fail |

| Change the type of a column from int to float | Select a column | Normal | Data type changes to float | As expected | Pass |

| Change the type of a column from int/float to string | Select a column | Normal | Data type changes to String | As expected | Pass |

| Change the type of a column from int/float to string | Select a column with missing values | Extreme | Data type changes to String | As expected | Pass |

| Change the type of a column from string to datetime | Select a column of type string with date values | Normal | Data type changes to datetime | As expected | Pass |

| Change the type of a column from string to datetime | Select a column of type date | Normal | Data type changes to string | Operation fails | Fail |

| Delet a selected column | Select a column | Normal | The selected column should be deleted | As expected | Pass |

| Sort the dataset by a single column | Select a column | Normal | Sort the dataset with respect to the specified column | As expected | Pass |

| Sort the dataset by a single column | Select a column where every value is the same | Extreme | Data set remains unchanged | As expected | Pass |

| Sort the dataset by multiple columns | Select multiple columns for sorting | Normal | Sort the dataset with respect to the specified columns | As expected | Pass |

| Search the rows in a column using a search term | Search term = 'hello' | Normal | Display only those rows within the column that contatin the word hello (complete match) | The filtered results arenot a complete match | Fail |

| Search the rows in a column usng a reular expression | Select a column that is not a numeric type, regex = [0-9] | Normal | Display only those rows that contatin the matched regex | As expected | Pass |

| Search the rows in a column using a search term | Select a search term that is not contained within the column | Extreme | Filtered dataset should contain no rows | As expected | Pass |

| Search for a term in all the columns | Search term = 'hello' | Normal | Display only those rows which contain the search term in any column | As expected | Pass |

| Search for a term in all the columns using a regular expression | Regex = [0-9]* | Normal | Display only those rows which contain the regex term in any column | As expected | Pass |

| Filter the column than search through the column | Filter = Duplicates, Search = 'hello' | Noramal | Display only those rows which contain the search term in the filtered column | Operation fails | Fail |

| Edit the vallue in a particular cell | New cell value = 'hello world' | Normal | Change the value of the cell to 'hello world' | As expected | Pass |

| Edit the vallue in a particular cell | New cell value = '£$^&&@(-3' | Extreme | Change the value of the cell to ''£$^&&@(-3' | As expected | Pass |

| Analysis tab | |||||

| Check if all generic analyses are displayed for every column | Full dataset | Normal | Display all generic analyses for every column | As expected | Pass |

| Check if all numeric analyses are displayed for numeric columns | Numeric columns | Normal | Display numeric analyses for all the numeric columns | As expected | Pass |

| Check if all String analyses are displayed for string and date columns | String and date columns | Normal | Display string analyses for all the string and date columns | As expected | Pass |

| Check if a word frequency table is available for string types in the analysis tab | String and date columns | Normal | Display the word frequency table for all the string and date columns | As expected | Pass |

| Visualise Tab | |||||

| Check If a line chart works correctly | Select two columns | Normal | Line charat is diaplayed | As expected | Pass |

| Check If a scatter chart work correctly | Select two columns | Normal | Scatter charat is diaplayed | As expected | Pass |

| Check If a histogram works correctly | Select two columns | Normal | Histogram is diaplayed | As expected | Pass |

| Change the bin size of the histogram | bin size = 10 | Normal | Display histogram with a bin size of 10 | As expected | Pass |

| Change the bin size of the histogram | bin size = '$%^&* | Extreme | Input rejected | Input accepted | Fail |

| Check If a frequency chart work correctly | Select a single colum | Normal | Frequency charat is diaplayed | As expected | Pass |

| Check If a time series chart work correctly | Select a column | Normal | Time series chart is displayed | Dates are not ordered before the chart is generated | Fail |

| Export/Save | |||||

| Save the dataframe as a CSV file | Dataset | Normal | Dataset is downloaded as a CSV file | As expected | Pass |

| Save the dataframe as a JSON file | Dataset | Normal | Dataset is downloaded as a JSON file | As expected | Pass |

N.B. Most of the failures has since been fixed.

Compatibility Testing

With all the different operating systems and web browsers available, it is very important to these the compatibility of an application. Below are some of the tests we carried out:

Browser compatibility

Ensuring that a web application works on the most widely used browsers is extremely important, this is because applications can behave differently dependent on browsers they are run on. It is also the case that different browsers have different configurations and settings that a web page should be compatible with. We therefore tested our web application on Internet explorer, Firefox, Chrome, Safari, and Edge with different versions.

OS compatibility

In some cases a functionality of a web application may not be compatible with all operating systems. Technologies such as graphic designs and interface calls may not be available in all Operating Systems. We therefore tested our application on Windows, Mac and Linux systems.

Requirements Evaluation

Throughout the development of the system, we evaluate the progress and success of the system by referring to our requirements specification for the system and made sure that all must have and should have requirements were implemented. The status of each requirement at the end of the project is listed below:

| ID | Requirement | Type | Category | Priority | Status |

| UI/UX | |||||

| Um1 | The DCS shall display the user imported dataset as a spreadsheet/table. | Functional | UI/UX | Must | Implemented |

| Uc1 | The DCS shall display all unresolved cleanliness issues | Functional | UI/UX | Could | Not Implemented |

| Uc2 | The DCS shall offer the user a choice between a pure GUI interface and a notebook style interface. | Functional | UI/UX | Could | Withdrawn |

| Uc3 | The DCS shall support persistence of user sessions. | Functional | UI/UX | Could | Implemented |

| Uc4 | The DCS shall support partial loading of rows in datasets. | Functional | UI/UX | Could | Implemented |

| Uw1 | The DCS shall allow multiple users to collaborate showing changes in real time | Functional | UI/UX | Would | Partially Implemented |

| Uw2 | The DCS shall compute a "messiness" score. | Functional | UI/UX | Would | Not Implemented |

| Data Loading | |||||

| Lm1 | The DCS shall support loading of user-uploaded CSV files. | Functional | Data Loading | Must | Implemented |

| Lm2 | The DCS shall allow users to specify variable names and types. | Functional | Data Loading | Must | Implemented |

| Ls1 | The DCS shall support a Date variable type. | Functional | Data Loading | Should | Implemented |

| Ls2 | The DCS shall parse dates with a user-provided format string. | Functional | Data Loading | Should | Implemented |

| Lc1 | The DCS shall support loading of user-uploaded structured file formats (JSON, Excel). | Functional | Data Loading | Could | Implemented |

| Lc2 | The DCS shall support partial loading of columns in datasets. | Functional | Data Loading | Could | Not Implemented |

| Lw1 | The DCS shall support loading user-uploaded unstructured data text files. | Functional | Data Loading | Would | Not Implemented |

| Lw2 | The DCS shall support parsing of unstructured file format. | Functional | Data Loading | Would | Not Implemented |

| Lw3 | The DCS shall support loading of data files over network. | Functional | Data Loading | Would | Not Implemented |

| Lw4 | The DCS shall load asynchronously. | Functional | Data Loading | Would | Not Implemented |

| Lw5 | The DCS shall support loading of data streams. | Functional | Data Loading | Would | Not Implemented |

| Lw6 | The DCS shall support an Email variable type. | Functional | Data Loading | Would | Not Implemented |

| Lw7 | The DCS shall intelligently guess variable types. | Functional | Data Loading | Would | Partially Implemented |

| Data Viewing | |||||

| Xs1 | The DCS shall support sorting of rows by user-specified column | Functional | Data Viewing | Should | Implemented |

| Xs2 | The DCS shall support searching datasets with a keyword | Functional | Data Viewing | Should | Implemented |

| Xc1 | The DCS shall support querying datasets with SQL | Functional | Data Viewing | Could | Not Implemented |

| Data Cleaning | |||||

| Cm1 | The DCS shall support removing rows as a universal cleaning operation | Functional | Data Cleaning | Must | Implemented |

| Cm2 | The DCS shall support inserting user-specified values as a universal cleaning operation | Functional | Data Cleaning | Must | Implemented |

| Cm3 | The DCS shall show rows with invalid numbers in specified column | Functional | Data Cleaning | Must | Implemented |

| Cm4 | The DCS shall show rows with missing values in specified column | Functional | Data Cleaning | Must | Implemented |

| Cm5 | The DCS shall support cleaning of missing values by inserting an average | Functional | Data Cleaning | Must | Implemented |

| Cm6 | The DCS shall support cleaning of missing values by filling with the most recent value | Functional | Data Cleaning | Must | Implemented |

| Cm7 | The DCS shall support cleaning of missing values by interpolation | Functional | Data Cleaning | Must | Implemented |

| Cs1 | The DCS shall show rows where Date parsing failed | Functional | Data Cleaning | Should | Withdrawn |

| Cs2 | The DCS shall show duplicate rows | Functional | Data Cleaning | Should | Implemented |

| Cs3 | The DCS shall provide the option to ignore outliers | Functional | Data Cleaning | Should | Withdrawn |

| Cs4 | The DCS shall provide the option to filter rows using regular expression | Functional | Data Cleaning | Should | Implemented |

| Cs5 | The DCS shall support data normalisation and standarisation | Functional | Data Cleaning | Should | Implemented |

| Cc1 | The DCS shall provide the option to group multiple text representation of the same entity and replace them with a single value | Functional | Data Cleaning | Could | Not Implemented |

| Cc2 | The DCS shall fix escaped HTML strings | Functional | Data Cleaning | Could | Not Implemented |

| Cc3 | The DCS shall show values that are not found in English dictionary | Functional | Data Cleaning | Could | Not Implemented |

| Cw1 | The DCS shall show rows where Email parsing failed | Functional | Data Cleaning | Would | Not Implemented |

| Data Analysis | |||||

| Am1 | The DCS shall show the unique values and their count of every column of the dataset | Functional | Data Analysis | Must | Implemented |

| Am2 | The DCS shall show the mean, median, mode of columns with numerical data | Functional | Data Analysis | Must | Implemented |

| Am3 | The DCS shall show the max and min values of columns with numerical data | Functional | Data Analysis | Must | Implemented |

| Am4 | The DCS shall show the range and standard deviation of columns with numerical data | Functional | Data Analysis | Must | Implemented |

| As1 | The DCS shall show text analysis such as most frequent word for string type data | Functional | Data Analysis | Should | Implemented |

| Data Visualisation | |||||

| Vm1 | The DCS shall be able to visualise data using histograms | Functional | Data Visualisation | Must | Implemented |

| Vm2 | The DCS shall be able to visualise data using line charts | Functional | Data Visualisation | Must | Implemented |

| Vs1 | The DCS shall be able to visualise data using scatter plots | Functional | Data Visualisation | Should | Implemented |

| Vs2 | The DCS shall be able to visualise data using time-series plots | Functional | Data Visualisation | Should | Implemented |

| Vc1 | The DCS shall provide the option to export graphs to image | Functional | Data Visualisation | Could | Implemented |

| Vc2 | The DCS shall be able to visualise data using pie charts | Functional | Data Visualisation | Could | Not Implemented |

| Vw1 | The DCS shall be able to visualise data using regression matricies | Functional | Data Visualisation | Would | Not Implemented |

| Vw2 | The DCS shall be able to visualise data using bar charts | Functional | Data Visualisation | Would | Partially Implemented |

| Others | |||||

| Nm1 | The DCS shall use a browser as its user interface | Non-Functional | Compliance to Standards | Must | Implemented |

| Nm2 | The DCS shall support the latest versions of Safari, Internet Explorer, Chrome, Firefox | Non-Functional | Performance | Must | Implemented |

| Nm3 | The DCS shall be easily installable by an untrained user with the help of documentation | Non-Functional | Deployment | Must | Implemented |

| Nc1 | The DCS shall ensure that error messages give the users specific instructions for recovery | Non-Functional | Ease of Use | Could | Implemented |

| Nc2 | The DCS shall ensure that a users persistence data has an availability of 100% | Non-Functional | Availability | Could | Not Tested |

| Nw1 | The DCS shall support 100 concurrent sessions | Non-Functional | Capacity | Would | Not Tested |

| Nw2 | The DCS shall be easily scalable to accommodate more concurrent users | Non-Functional | Capacity | Would | Not Implemented |

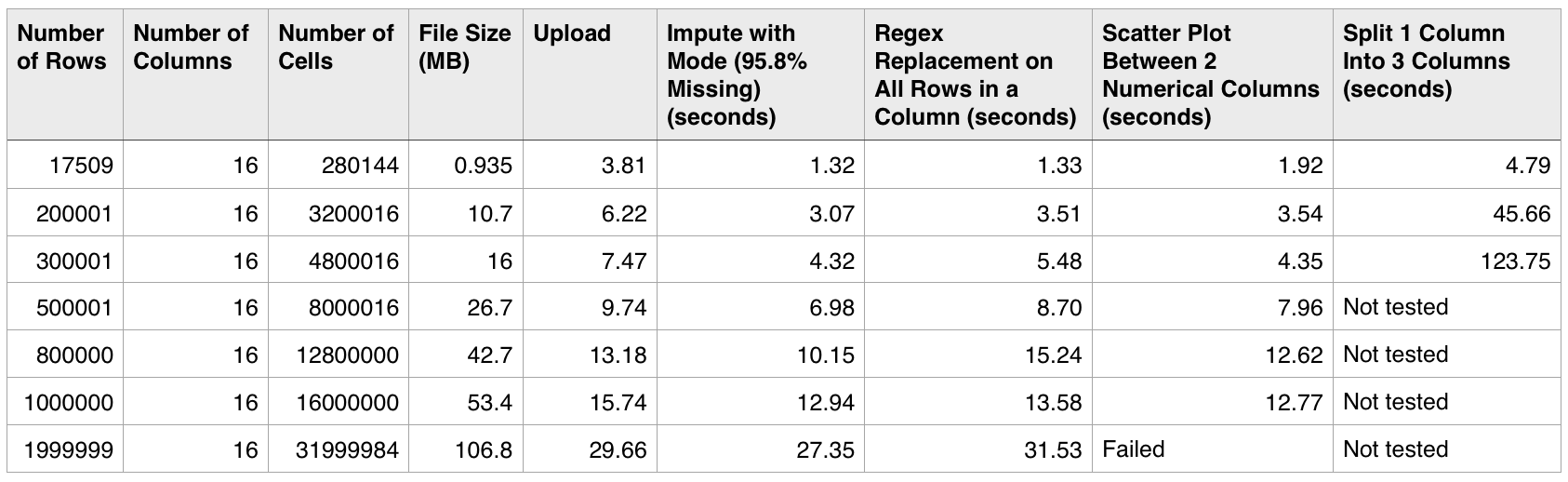

Performance Testing

To make sure the web application performs well with reasonably sized datasets, we tested the final system with a series of artificially generated datasets, ranging from less than 1MB in size to over 100MB. We tested 5 main functionalities of the system, including:

- Upload the dataset

- Impute missing values in a column (with 95.8% values missing) with the column mode

- Perform a find and replace operation using regex on a column

- Generate a scatter plot between 2 numerical columns

- Split a column into 3 columns using a delimiter

We measured the time the system takes to perform each of these operations using a stopwatch.

Specification of the machine used for testing:

- MacBook Pro 15-inch (Late 2013)

- 2.0 GHz (i7-4750HQ) quad-core Intel Core i7 Haswell with 6 MB on-chip L3 and 128 MB L4 cache (Crystalwell)

- 8 GB built-in onboard RAM

- Intel Iris Pro 5200 Graphics with DDR3L SDRAM shared with main memory

- Running on Google Chrome 50

Results

Results of performance testing.

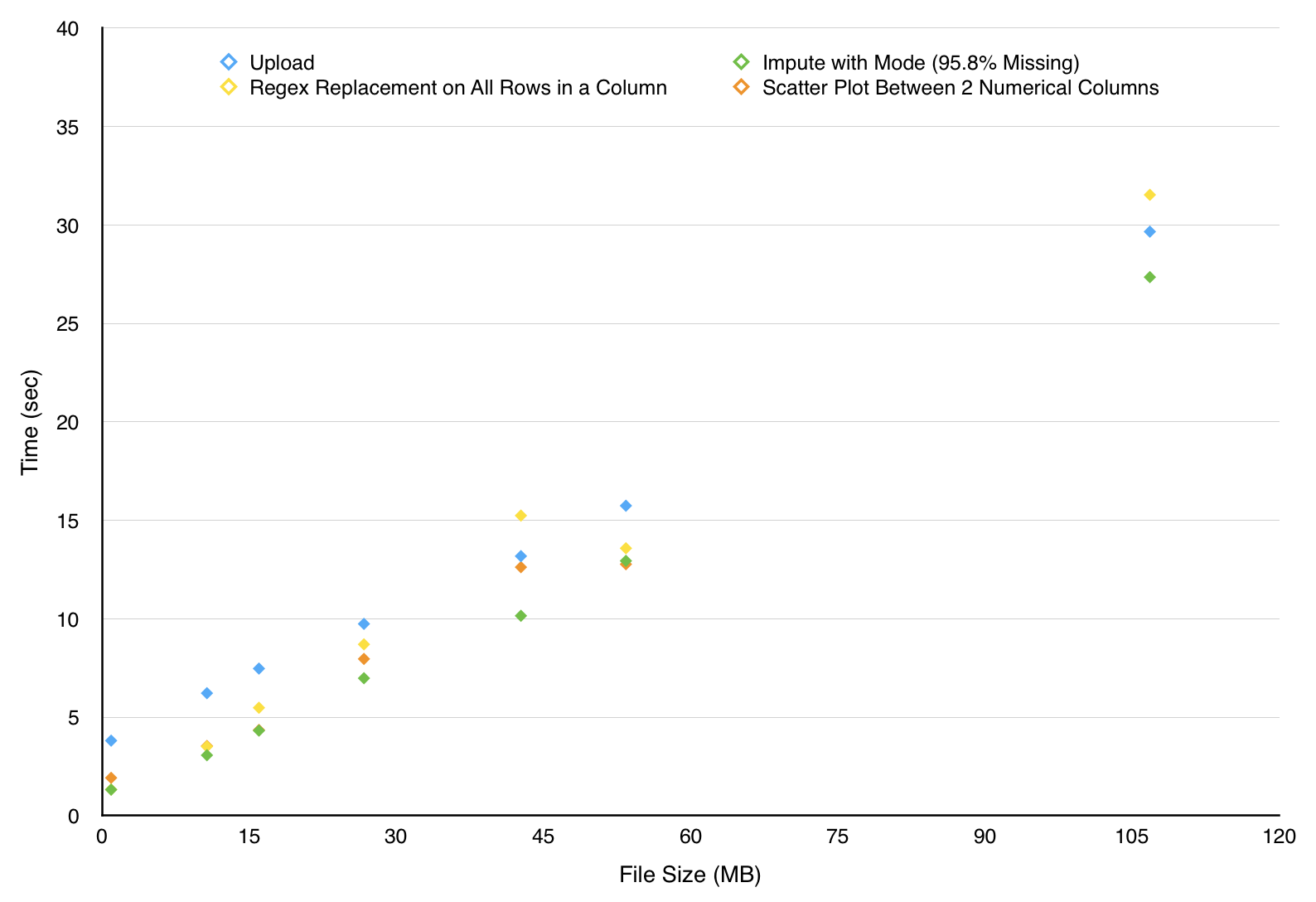

The effect of file size on the time taken by each operation.

N.B. Most operations performed by the system works on columns rather than rows of a dataset so the performance of the system depends more on the number of rows in the dataset.

The results were not surprising to us as most basic operations performs well enough even with a large file size. Some operations are resource intensive in its nature and there’s little we can do to improve their performance, but they still work well with smaller datasets. When compared to other software such as OpenRefine and Excel, our system performs as well if not better on some of the features. For example, both OpenRefine and Excel failed to load the 106.8MB file while our system loaded it under 30 seconds. Overall we are satisfied by the performance of our system.

User Acceptance Testing (UAT)

This is the last phase of the software engineering process. During UAT the completed software is tested by potential users of the system to ensure it can handle required tasks in real-world scenarios. In order to carry out UAT we designed several test cases which covered most of our key requirements. We then presented these test cases to our client who in turn decided to pass them on to potential users of the system to carry out the UAT. Our client informed us that they will send us the feedback they may receive regarding bugs and usability. However it is worth mentioning that our clients were extremely pleased with each individual feature offered by the system as well as the overall User Experience.