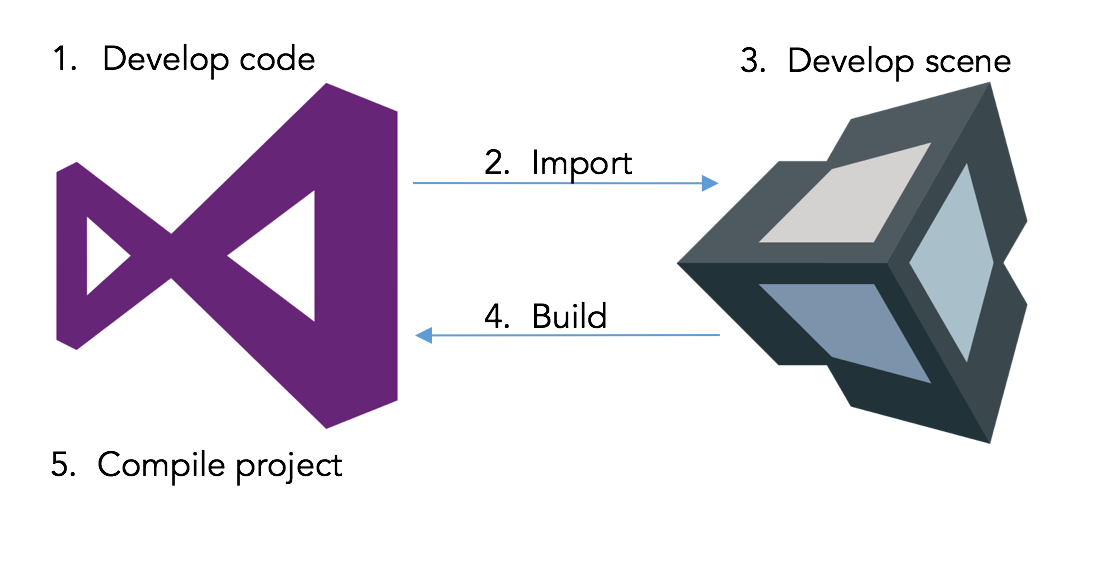

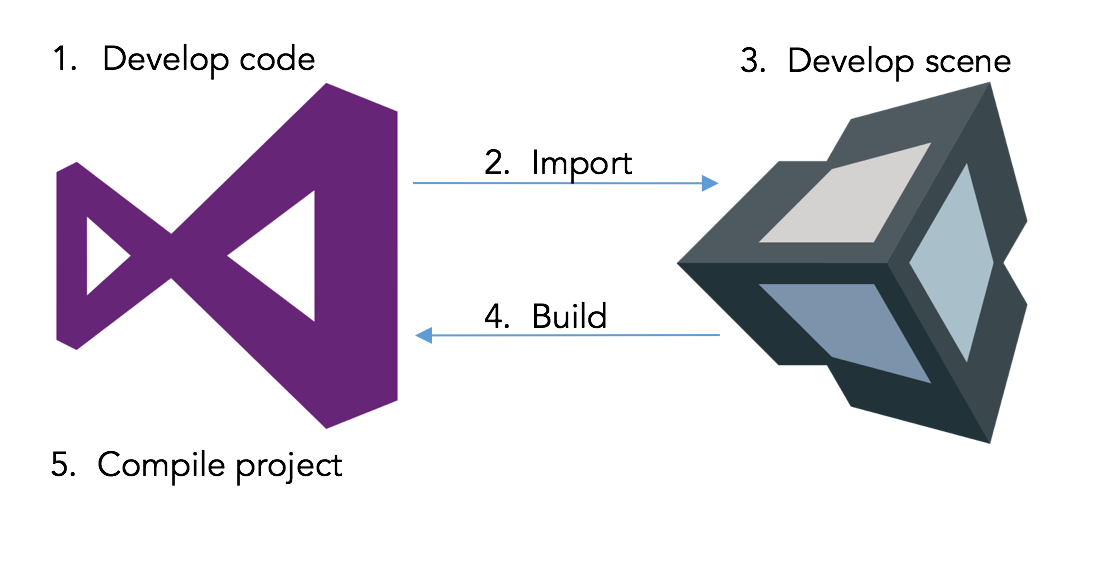

Our application was developed in Visual Studio (Community Edition), with Unity (5.5) as the build IDE. Code was written in Visual Studio, then loaded into Unity and attached to the scene. Unity then built the project into a C# Visual Studio project, which was opened in a separate instance of Visual Studio to be compiled and deployed to the device or emulator.

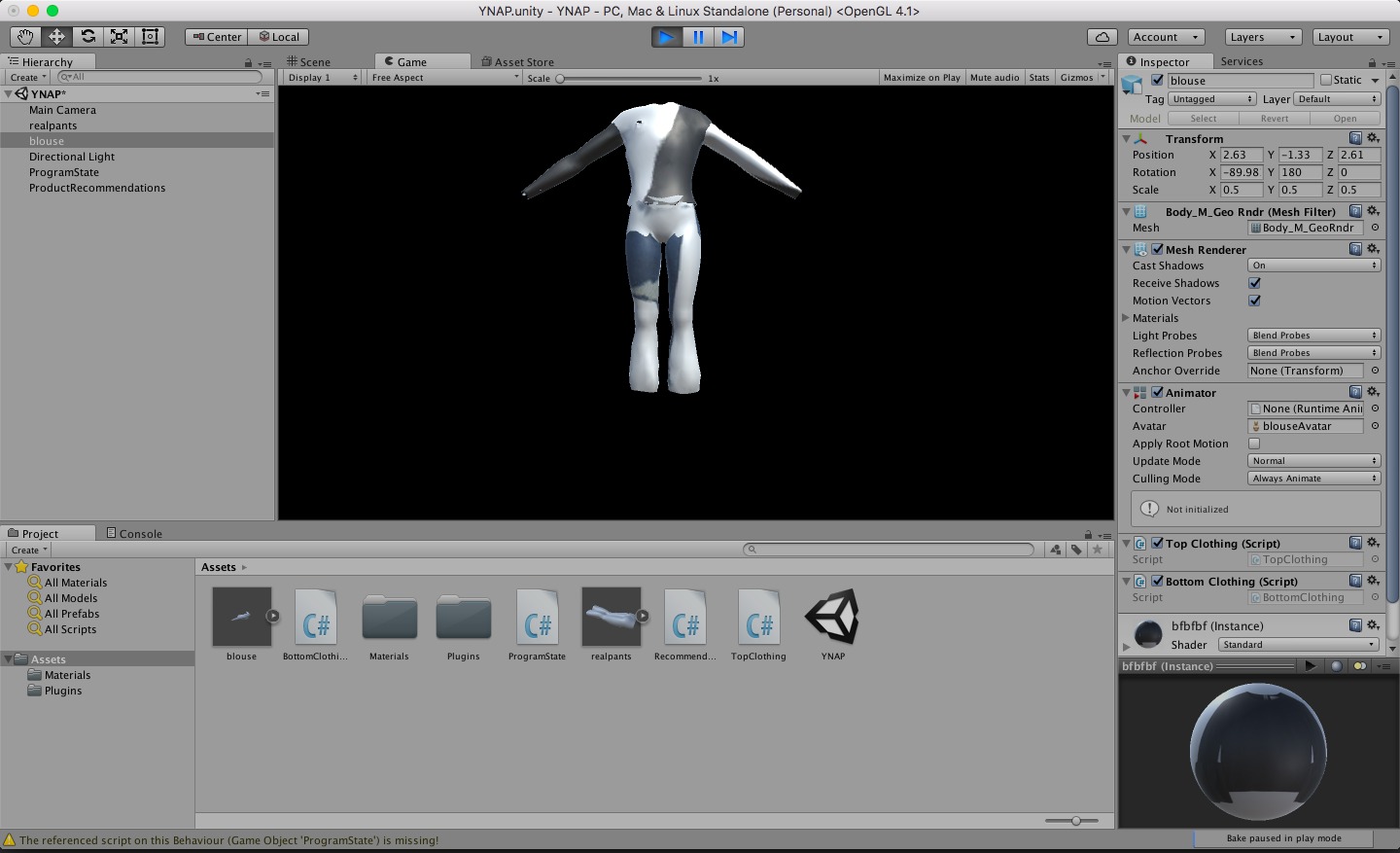

In the first few iterations of our application, we mostly used default Unity geometries as placeholders for future UI elements. Later we used Blender to build 3D models for import into Unity, but these did not make it into the final product. In the later iterations we used Adobe Illustrator CC to create the vector graphics that replaced the placeholders as UI elements.

[Example final graphics we used]

Throughout the project, we used a shared GitHub repository containing our most recent working build. We followed a simple guideline before pushing to the repository, outlined below:

- Code would not be pushed to the repository before substantial testing had been done.

- All code would first have to be thoroughly unit tested and integration tested for inconsistencies.

- Any new iterations of the application following changes would need to be compiled onto the device or the emulator for functional testing.

- Once the rest of the team had agreed, the code was considered safe to push to the repo and merge.

We deliberately kept this consistent with the policies typically used for moving code from a development (DEV) environment into a user acceptance testing (UAT) environment, as it allowed us to be sure our repo stayed 'clean'.
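
The pre-push guideline above can be pictured as a simple gate: a change is pushed only once every check has been ticked off. The sketch below is purely illustrative and is not part of our project code. It is written in Python for brevity, and the `PushChecklist` class and its field names are hypothetical names chosen to mirror the bullet points.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PushChecklist:
    """Hypothetical record of the pre-push checks listed in the guideline."""
    unit_tests_passed: bool = False
    integration_tests_passed: bool = False
    functional_test_on_device_or_emulator: bool = False
    team_agreed: bool = False

    def outstanding(self) -> List[str]:
        """Return the names of the checks that have not been completed yet."""
        return [name for name, done in vars(self).items() if not done]

    def safe_to_push(self) -> bool:
        """A change is safe to push only when no checks are outstanding."""
        return not self.outstanding()


# Example: tests have passed, but the build has not yet been run on the
# device or emulator and the team has not yet signed off.
checklist = PushChecklist(unit_tests_passed=True, integration_tests_passed=True)
print(checklist.safe_to_push())
print(checklist.outstanding())
```

In practice we applied these checks by hand rather than through a script; the sketch only makes explicit that every step had to be completed before a push.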