Unit and Integration Testing

Unit Testing

Alongside the usual manual testing methods, such as user acceptance testing, we adopted a set of tools provided by Unity (Unity Test Tools) to automate our testing. We wrote our tests as Editor C# scripts, which let us test individual Unity objects in isolation. Unity Test Tools uses NUnit, a well-known and easy-to-use framework, as the basis for its unit testing. We enabled automatic execution of the unit tests on every recompile, so that we received immediate feedback whilst writing code. Some example unit tests we wrote:

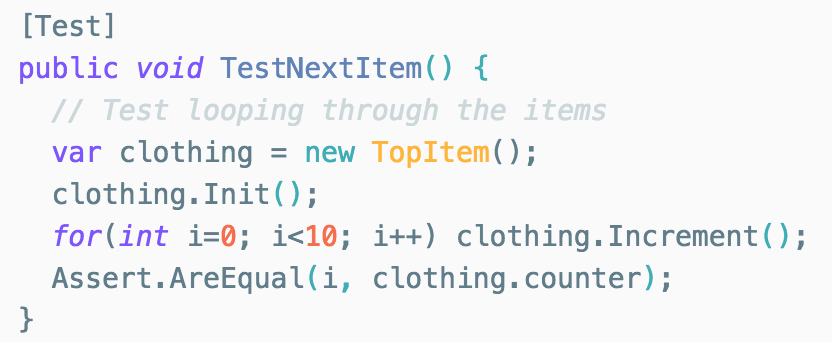

- TestNextItem()/TestPrevItem() - tests that cycling forwards and backwards through the list of individual clothing items works as expected.

- TestAddToFavourites() - makes sure that invoking "addToFavourites" adds the correct clothing items to the lists of favourites.

- TestJSONParse() - checks that the API response is cleaned up correctly before it is used.

- TestNextOutfit()/TestPrevOutfit() - asserts that iterating over outfits increments/decrements all the outfit elements, instead of just one.

- TestNavigation() - ensures that the correct UI element properties are being set when invoking navigation methods.
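As an illustration of the kind of tests listed above, the sketch below tests a hypothetical `ClothingCarousel` class with NUnit. The class, its members and the item names are assumptions made for this example, not the project's actual code. The model is kept free of `UnityEngine` so that NUnit can run it directly; in a Unity project the test class would live in an Editor folder or edit mode test assembly.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

// Hypothetical plain C# model of a carousel of clothing items.
public class ClothingCarousel
{
    private readonly List<string> items;

    public int Index { get; private set; }
    public List<string> Favourites { get; } = new List<string>();

    public ClothingCarousel(IEnumerable<string> items)
    {
        this.items = new List<string>(items);
    }

    // The item currently shown to the user.
    public string Current => items[Index];

    // Advance one item, wrapping from the last item back to the first.
    public void NextItem() => Index = (Index + 1) % items.Count;

    // Step back one item, wrapping from the first item to the last.
    public void PrevItem() => Index = (Index - 1 + items.Count) % items.Count;

    // Add the currently shown item to the favourites list.
    public void AddToFavourites() => Favourites.Add(Current);
}

public class ClothingCarouselTests
{
    private ClothingCarousel carousel;

    // Runs before each test, so every test starts from the first item.
    [SetUp]
    public void SetUp()
    {
        carousel = new ClothingCarousel(new[] { "shirt", "jumper", "jacket" });
    }

    [Test]
    public void TestNextItem()
    {
        carousel.NextItem();
        Assert.AreEqual("jumper", carousel.Current);
        carousel.NextItem();
        carousel.NextItem(); // past the end: should wrap to the first item
        Assert.AreEqual("shirt", carousel.Current);
    }

    [Test]
    public void TestPrevItem()
    {
        carousel.PrevItem(); // before the start: should wrap to the last item
        Assert.AreEqual("jacket", carousel.Current);
    }

    [Test]
    public void TestAddToFavourites()
    {
        carousel.NextItem();
        carousel.AddToFavourites();
        CollectionAssert.AreEqual(new[] { "jumper" }, carousel.Favourites);
    }
}
```

Keeping the wrap-around logic in a plain C# class, rather than inside a MonoBehaviour, is one way to make it testable without entering play mode.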

Integration Testing

To test how the individual Unity objects and assets worked together, we needed a higher level of testing, which is what the Unity Integration Test Framework is designed for. Integration tests are also considered one of the easiest ways to start writing automated tests, since they place no requirements on the architecture of the code, which made them a good starting point for us. We used Unity's 'Assert' functionality, which can monitor the state of whole objects at runtime (in what Unity calls play mode) and pause execution when a given condition on an object is met. This uncovered more bugs than our unit tests, which only ran individual test functions on single components of objects. It also proved very useful for debugging: we could pause the entire simulation when a condition was met and check that our application was behaving as expected and handling exceptions as required.
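The runtime checks described above can be sketched, independently of Unity, as a small watcher that evaluates a condition on an object once per frame and fires a "pause" action the first time the condition holds. `ConditionWatch`, `Outfit` and its fields are hypothetical names for this example. In Unity, `Tick()` would be called from a MonoBehaviour's `Update()`, and the action could log the description and call `UnityEngine.Debug.Break()`, which pauses the editor.

```csharp
using System;

// Hypothetical model of an outfit: each part has its own carousel index,
// and all three are expected to move together.
public class Outfit
{
    public int TopIndex;
    public int BottomIndex;
    public int ShoesIndex;
}

// Hypothetical runtime watch: checks a condition on a target object each frame
// and invokes a pause action the first time the condition becomes true.
public class ConditionWatch<T>
{
    private readonly T target;
    private readonly Func<T, bool> condition;
    private readonly string description;
    private readonly Action<string> onTriggered;

    public bool Triggered { get; private set; }

    public ConditionWatch(T target, Func<T, bool> condition,
                          string description, Action<string> onTriggered)
    {
        this.target = target;
        this.condition = condition;
        this.description = description;
        this.onTriggered = onTriggered;
    }

    // Call once per frame; fires at most once per watch.
    public void Tick()
    {
        if (!Triggered && condition(target))
        {
            Triggered = true;
            // In Unity this action might be: Debug.LogError(msg); Debug.Break();
            onTriggered(description);
        }
    }
}
```

For example, a watch on an `Outfit` with the condition `o => o.TopIndex != o.BottomIndex || o.BottomIndex != o.ShoesIndex` would halt the simulation on the kind of bug that `TestNextOutfit()` guards against, where only one outfit element is advanced.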