Research

Eye Tracking as an Input Mechanism

Designing a hands-free painting application requires careful consideration of human-computer interaction. The mouse’s success as an interaction mechanism can be attributed to its effective imitation of instinctively understood human behaviours, such as pointing and dragging [2]. However, the eye is naturally an input organ used to absorb visual information, not to communicate. For this reason, using deliberate eye movements to control a system may not come naturally to most people, and will require both practice and a well-designed interaction mechanism.

The limited nature of the eye’s movement also raises significant challenges. The essence of drawing lies in the ability to make smooth, consistent strokes with the hand. Unfortunately, the eye cannot make such movements deliberately. When processing an image, the eye jumps around, abruptly shifting focus in movements called “saccades”, and then rests on an area in what is called a “fixation” [1] (see Image [5]). These movements are difficult to control consciously. In addition, if a paint stroke were to follow the user’s gaze directly, the user could become stuck in a loop of drawing and then subconsciously looking at what they have drawn, spoiling the intended stroke. For details of the drawing mechanism that takes these considerations into account, please see the HCI section.
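
To illustrate how fixations can be separated from saccades in a stream of gaze samples, the following is a minimal sketch of dispersion-threshold identification (commonly called I-DT), a standard technique from the eye-tracking literature; it is not necessarily what Avant-Garde or its eye-tracking hardware uses. It assumes gaze samples arrive as (x, y) screen coordinates at a fixed sampling rate, and the function names and thresholds (`max_dispersion`, `min_samples`) are illustrative assumptions rather than tuned values.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Fixation = Tuple[int, int, Point]  # (first sample index, last sample index, centroid)


def dispersion(window: List[Point]) -> float:
    """I-DT dispersion: (max x - min x) + (max y - min y)."""
    xs = [p[0] for p in window]
    ys = [p[1] for p in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: List[Point], max_dispersion: float = 40.0,
                     min_samples: int = 5) -> List[Fixation]:
    """Group gaze samples into fixations; everything else is treated as saccade."""
    fixations: List[Fixation] = []
    start = 0
    while start + min_samples <= len(samples):
        end = start + min_samples
        if dispersion(samples[start:end]) <= max_dispersion:
            # Grow the window while the gaze stays tightly clustered.
            while end < len(samples) and dispersion(samples[start:end + 1]) <= max_dispersion:
                end += 1
            window = samples[start:end]
            centroid = (sum(p[0] for p in window) / len(window),
                        sum(p[1] for p in window) / len(window))
            fixations.append((start, end - 1, centroid))
            start = end
        else:
            # Too spread out to be a fixation: skip one sample (part of a saccade).
            start += 1
    return fixations


if __name__ == "__main__":
    gaze = [(100, 100), (102, 101), (99, 98), (101, 103), (100, 99), (103, 100),
            (250, 200),  # mid-saccade sample
            (400, 300), (401, 302), (399, 299), (402, 301), (400, 300), (398, 301)]
    for first, last, (cx, cy) in detect_fixations(gaze):
        print(f"fixation over samples {first}-{last} at ({cx:.1f}, {cy:.1f})")
```

With these sample values the run reports two fixations (samples 0-5 and 7-12); sample 6 falls in neither and is treated as part of the saccade between them.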

When eye tracking is the sole input mechanism, the ability to click to make selections is lost. Hands-free applications therefore use dwelling instead: the user fixates their gaze on a specific point for a preset amount of time, after which the object being dwelled upon is selected.
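
The dwell mechanism can be sketched as a small state machine that is fed one gaze sample at a time. This is a hypothetical illustration in Python rather than Avant-Garde’s C# implementation: the `Button` and `DwellSelector` names, the rectangular hit-testing, and the 0.8-second default dwell time are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Button:
    """Hypothetical on-screen button with a rectangular hit area (pixels)."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, gx: float, gy: float) -> bool:
        return (self.x <= gx < self.x + self.width
                and self.y <= gy < self.y + self.height)


class DwellSelector:
    """Selects a button once the gaze has rested on it for `dwell_time` seconds."""

    def __init__(self, buttons: List[Button], dwell_time: float = 0.8):
        self.buttons = buttons
        self.dwell_time = dwell_time
        self.current: Optional[Button] = None  # button currently under the gaze
        self.dwell_start = 0.0                 # when the gaze arrived on it
        self.fired = False                     # already selected during this dwell?

    def update(self, gx: float, gy: float, t: float) -> Optional[str]:
        """Feed one gaze sample (pixels, seconds); return a name when a selection fires."""
        hit = next((b for b in self.buttons if b.contains(gx, gy)), None)
        if hit is not self.current:
            # Gaze moved to another button or off all buttons: restart the timer.
            self.current = hit
            self.dwell_start = t
            self.fired = False
            return None
        if hit is not None and not self.fired and t - self.dwell_start >= self.dwell_time:
            self.fired = True  # fire once; the gaze must leave to re-arm the button
            return hit.name
        return None


if __name__ == "__main__":
    selector = DwellSelector([Button("brush", 0, 0, 100, 100)], dwell_time=0.8)
    for t in [0.0, 0.3, 0.6, 0.9, 1.2]:
        result = selector.update(50, 50, t)
        if result:
            print(f"selected {result} at t={t}")
```

Requiring the gaze to leave a button before it can fire again prevents a single long stare from triggering the same action repeatedly.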

Different buttons invoke procedures with varying degrees of impact. Some actions, such as clearing every stroke from the canvas, can frustrate users if they are selected unintentionally. The dwell time for each button must therefore be chosen carefully, with longer dwell times reserved for more disruptive actions.
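
One simple way to give each button its own dwell time is a lookup table keyed by action, with the longest durations reserved for destructive actions. The sketch below is hypothetical: the action names and durations are assumed for illustration, are not values from Avant-Garde, and would need to be tuned through user testing.

```python
from typing import Dict

# Hypothetical dwell durations in seconds (assumed values, not tuned).
DEFAULT_DWELL_SECONDS = 0.8
DWELL_SECONDS: Dict[str, float] = {
    "select_colour": 0.6,  # frequent and harmless: short dwell
    "change_brush": 0.8,
    "undo": 1.2,           # reversible, but disruptive if triggered by accident
    "clear_canvas": 2.5,   # destructive: long dwell so it is always deliberate
}


def dwell_time_for(action: str) -> float:
    """Return the dwell duration required before `action` fires."""
    return DWELL_SECONDS.get(action, DEFAULT_DWELL_SECONDS)


def should_activate(action: str, gaze_seconds: float) -> bool:
    """True once the gaze has rested on the action's button for long enough."""
    return gaze_seconds >= dwell_time_for(action)


if __name__ == "__main__":
    # One second of gaze is enough to pick a colour but not to clear the canvas.
    print(should_activate("select_colour", 1.0))  # True
    print(should_activate("clear_canvas", 1.0))   # False
```

Keeping the durations in one table makes it easy to adjust them after observing how often each action is triggered by accident.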

Similar Projects

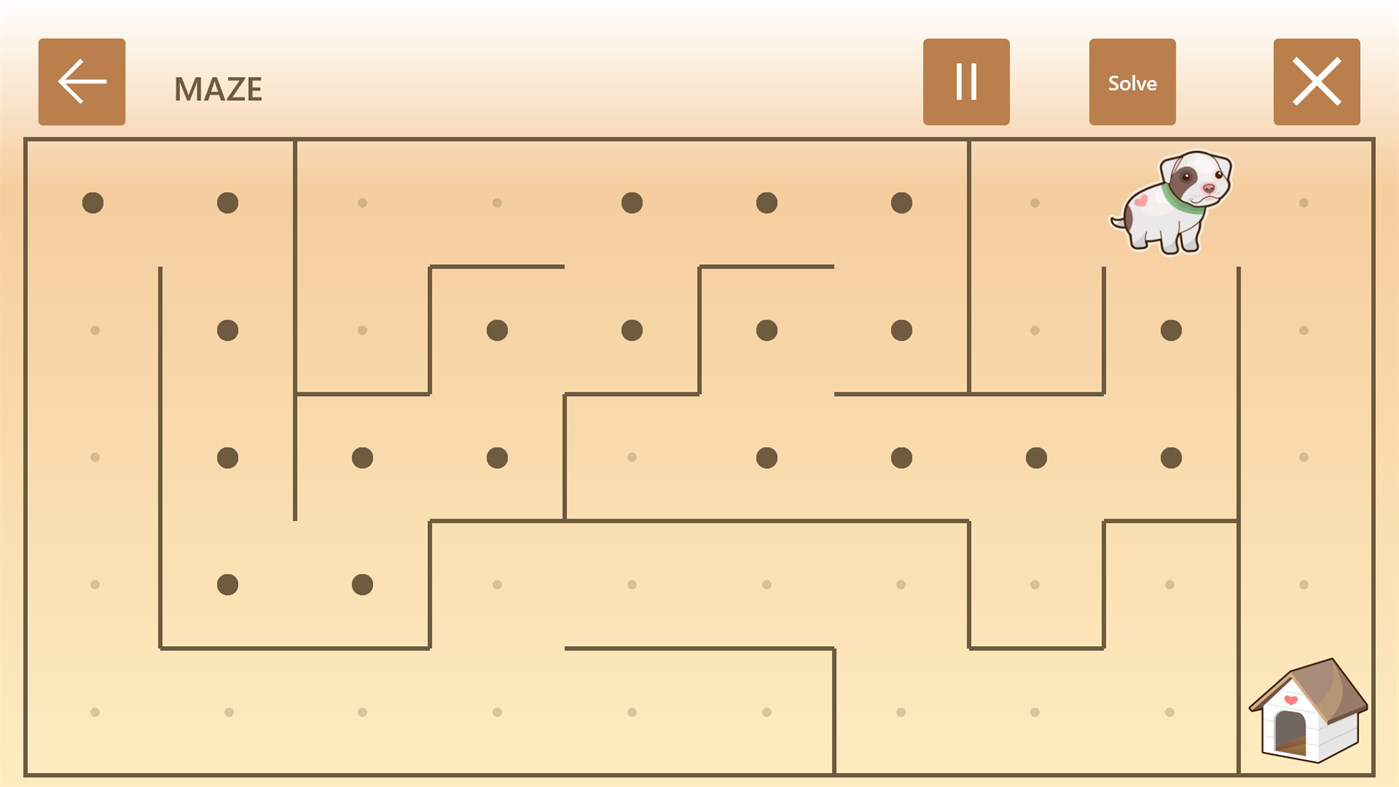

Early in 2019, Microsoft released four Eyes First games to the Windows Store: Tile Slide, Match Two, Double Up, and Maze. They are reinventions of classic computer games that focus on delivering a smooth user experience when eye-tracking devices are the primary source of input [3]. Several design principles are common across the games, and these were closely considered in the design of Avant-Garde. The use of large, easily distinguishable buttons and grid-based displays is of particular interest.

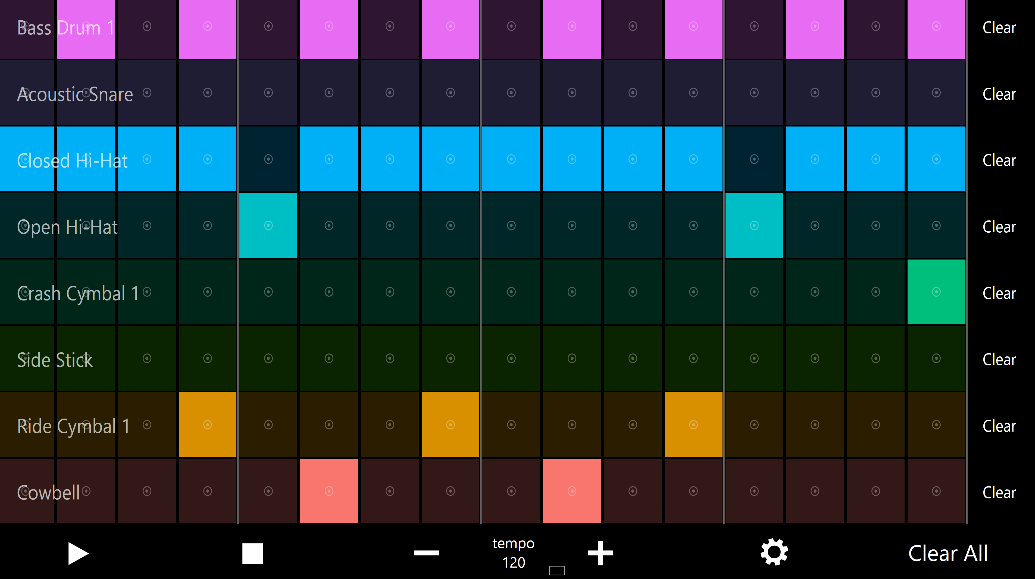

In addition, in late 2017, Microsoft released the Hands-Free Music Project, a collection of musical applications also designed for eye-tracking input. The project includes Sound Machine, Sound Jam, and Expressive Pixels [4]. This project demonstrates a particularly important aspect of Eyes First design: buttons require different lengths of dwell time depending on their function, to ensure every selection is deliberate.

Technical Considerations

The primary hope for Avant-Garde is to release it on the Windows Store upon completion, allowing the product to reach as many real users globally as possible. For this reason, developing a Universal Windows Platform (UWP) application was the natural choice, as UWP is the standard route for distributing apps through the Windows Store. C# is the most common language for the backend of a UWP app, and since it offers the widest range of resources and documentation, it was the clear choice. For the front end, C# UWP applications typically use XAML (Extensible Application Markup Language).

Choosing to create a UWP application came with considerable advantages and disadvantages. The main complication was UWP’s particularly steep learning curve: at the start of this project, the team had no experience developing for the Windows ecosystem, so initial progress was slow and challenging. However, developing in UWP provides access to the .NET framework, which put a vast array of APIs at the team’s disposal, saving significant time and greatly expanding the possibilities for Avant-Garde.

References

[1] Young, L.R. & Sheena, D. “Survey of eye movement recording methods,” Behavior Research Methods & Instrumentation, Psychonomic Society, vol. 7, no. 5, 1975, pp. 397-429.

[2] Jacob, R.J.K. “Eye Tracking in Advanced Interface Design,” Human-Computer Interaction Lab, Naval Research Laboratory, Washington, D.C., 1995.

[3] Microsoft Corporation, “Eyes First games & apps,” Research, 2019. [Online]. Available: https://www.microsoft.com/en-us/research/product/eyes-first/games-and-apps/ [Accessed: Jan 2, 2020].

[4] Microsoft Corporation, “Microsoft Hands-Free Music,” Research, 2017. [Online]. Available: https://www.microsoft.com/en-us/research/project/microsoft-hands-free-music/ [Accessed: Jan 2, 2020].

[5] Farnsworth, B. “10 Most Used Eye Tracking Metrics and Terms,” imotions.com, Aug 14, 2018. [Online]. Available: https://imotions.com/blog/7-terms-metrics-eye-tracking/ [Accessed: Jan 4, 2020].