Evaluation

Achievements:

We split the project into three main parts: Apple Watch, Kinect and Server development.

Here's what we've managed to achieve so far:

Apple Watch

- Managed to get gyroscope, accelerometer and orientation data

- Track the number of spins the user has made

- Record and save the activity to Apple HealthKit (duration, distance travelled, average heart rate and calories burned)

- Store and send this data to a server in a standardized JSON format.
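One way to count spins is to integrate the gyroscope's rotation rate about the vertical axis and count each full turn. The Python sketch below assumes evenly spaced yaw-rate samples in radians per second; the names `count_spins`, `yaw_rates` and `dt` are hypothetical, and this is an illustration of the idea rather than the watch app's actual Swift implementation. A real implementation would also need some filtering, since sensor noise feeds straight into the integrated angle.

```python
import math

def count_spins(yaw_rates, dt):
    """Count full turns from yaw-rate samples (rad/s) taken every dt seconds.

    Hypothetical sketch: rotation in opposite directions cancels out.
    """
    angle = 0.0  # accumulated rotation since the last counted spin (radians)
    spins = 0
    for rate in yaw_rates:
        angle += rate * dt  # rectangular integration of the rotation rate
        # Each time the accumulated angle passes a full circle, count one spin
        while abs(angle) >= 2 * math.pi:
            spins += 1
            angle -= math.copysign(2 * math.pi, angle)
    return spins
```

For example, 2.5 turns in either direction count as 2 spins, and a half turn followed by a half turn back counts as none.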

Kinect

- Managed to get and recognize coordinate data from the camera

- Camera can adapt to user location and user proportions

- Tracks and stores the activity level of users as well as limb position metrics

- Can display skeletons overlaid on the live video feed

- Stores a video file of the session with the skeletons overlaid on the video feed

- Sends data to the server in a standardized JSON format
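A simple way to express an activity level from joint coordinates is the average distance the joints move between consecutive frames. This sketch assumes each frame is a dict mapping joint names to (x, y, z) camera-space positions in metres; the function name and data layout are hypothetical, not the project's actual Kinect code.

```python
import math

def activity_level(frames):
    """Mean per-joint displacement (metres) between consecutive frames."""
    total = 0.0
    count = 0
    for prev, curr in zip(frames, frames[1:]):
        for joint, pos in curr.items():
            if joint in prev:  # only compare joints present in both frames
                total += math.dist(prev[joint], pos)
                count += 1
    return total / count if count else 0.0
```

A higher value means the dancer moved more per frame on average; dividing by the frame interval would turn it into a mean speed.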

Server

- Runs on a Virtual Machine in Microsoft Azure

- Receives JSON files from Watch and Kinect

- Displays data to user

- Algorithmically merges the data from the two devices
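The merge can be illustrated as a join on a shared session ID. This Python sketch assumes each device posts a JSON document with a `session_id` field; that field name, the other keys and the sample values are hypothetical, not the project's actual schema.

```python
import json

def merge_sessions(watch_docs, kinect_docs):
    """Pair watch and Kinect JSON documents that share the same session_id."""
    # Index the Kinect documents by session ID for constant-time lookups
    kinect_by_id = {doc["session_id"]: doc for doc in kinect_docs}
    merged = []
    for watch in watch_docs:
        kinect = kinect_by_id.get(watch["session_id"])
        if kinect is not None:  # sessions without a partner are left unmerged
            merged.append({"session_id": watch["session_id"],
                           "watch": watch, "kinect": kinect})
    return merged

# Hypothetical sample payloads, parsed as the server would receive them
watch_docs = [json.loads('{"session_id": "2020-01-15-alice", "calories": 210}')]
kinect_docs = [json.loads('{"session_id": "2020-01-15-alice", "activity_level": 0.12}')]
merged = merge_sessions(watch_docs, kinect_docs)
```

Note that an exact-match join like this is sensitive to the session ID being spelled identically on both devices.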

| ID | Requirements | Priority | State | Contributors |
|---|---|---|---|---|
| Must have | | | | |
| 1 | Relatively accurate body and skeletal tracking of a single person dancing | Must have | ✔ | Yide |
| 2 | Apple Watch integration with health metrics available after the session has ended (the health metrics from the Apple Watch include calories burned, average heart rate, duration of dance and the no. of steps taken) | Must have | ✔ | Jan |
| 3 | Researched, analyzed and considered alternative technologies to track and monitor the health benefits of dance | Must have | ✔ | Jan, Yide, Alex |
| Should have | | | | |
| 4 | Ability to display video with skeletal tracking overlaid | Should have | ✔ | Alex |
| 5 | Ability to save skeletal tracking video | Should have | ✔ | Alex |
| 6 | Ability to save the data from one dance session in a file | Should have | ✔ | Yide, Alex |
| 7 | Ability to calibrate to the user's proportions and the position of the floor relative to the camera | Should have | ✔ | Yide, Alex |
| 8 | The system should be easy to use and set up | Should have | ✔ | Jan, Yide, Alex |
| 9 | Simple health- or dance-related analysis of the skeletal tracking, for example measuring the activity level of the user or the height of their limbs | Should have | ✔ | Yide |
| 10 | The Apple Watch data and skeletal tracking data should be viewable on a single integrated dashboard | Should have | ✔ | Jan, Yide, Alex |
| 11 | All the health metrics that are gathered and displayed should be relatively accurate and repeatable | Should have | ✔ | Jan, Yide |
| 12 | A user application where users can view all their dance sessions and health data (usable without the help of a technical person) | Should have | ✔ | Jan |
| Could have | | | | |
| 13 | A simple user interface | Could have | ✔ | Alex, Jan |
| 14 | Relatively accurate body and skeletal tracking and display of two people | Could have | ✔ | Yide, Alex |
| 15 | Store the data in a database semi-permanently | Could have | ✔ | Jan, Yide, Alex |
| Would (won't yet) have | | | | |
| 16 | Automatic posture correction via vibrations of the Apple Watch | Would have | ✖ | N/A |
| 17 | User survey after each session to track their mental health | Would have | ✖ | N/A |
| 18 | Machine learning implementation that recognises different styles of dance | Would have | ✖ | N/A |
| 19 | Possibly turn into an iPhone-centric app | Would have | ✖ | N/A |
| 20 | Integration with other wearables (smart shoes…) | Would have | ✖ | N/A |
| Key Functionalities (must have and should have) | 100% completed | | | |
| Optional Functionalities (could have) | 100% completed | | | |

Known Bug Table

| ID | Bug Description | Priority |
|---|---|---|
| 1 | If the user denies the app access to Apple Health data, it occasionally crashes | High |
| 2 | If a spin is performed quickly, the Apple Watch sometimes overestimates the number of spins | Medium |
| 3 | The skeletal tracking performs poorly with occlusions, especially when couples are dancing; this is visible in the skeletal display | Medium |
| 4 | When joints are outside the camera's view or occluded, their coordinate data appears to be random | Medium |
| 5 | Faulty or corrupt video output cannot be deleted programmatically due to a lack of permissions | Low |
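The Kinect v2 SDK reports a tracking state for every joint (`NotTracked`, `Inferred` or `Tracked`), which could be used to discard the unreliable coordinates described in bug 4. The Python sketch below mirrors those enum values and assumes joints arrive as (name, state, position) tuples; that layout and the function name are hypothetical.

```python
from enum import IntEnum

class TrackingState(IntEnum):
    # Mirrors the values of the Kinect v2 SDK's TrackingState enumeration
    NOT_TRACKED = 0
    INFERRED = 1
    TRACKED = 2

def reliable_joints(joints, allow_inferred=False):
    """Keep only joints whose coordinates the sensor actually tracked.

    Inferred joints (estimated while occluded) are dropped unless allowed.
    """
    minimum = TrackingState.INFERRED if allow_inferred else TrackingState.TRACKED
    return {name: pos for name, state, pos in joints if state >= minimum}
```

Whether to keep inferred joints is a trade-off: they fill gaps during brief occlusions but are less accurate than tracked ones.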

Contribution Table

| Work Package | Jan | Yide | Alex |
|---|---|---|---|
| Project Partner Liaison | 70% - Organized and led meetings with the clients | 15% | 15% |
| Requirement Analysis | 33.3% | 33.3% | 33.3% |
| Research and Experiments | 30% | 40% - Found research papers on similar projects | 30% |
| Bi-Weekly Reports | 40% | 20% | 40% |
| Testing | 33% - Testing of the Apple Watch and server | 33% - Testing of the server | 34% - Testing of the Kinect |
| Apple Watch Development | 100% | 0% | 0% |
| Kinect Development | 0% | 50% - Performed health-related analysis using the joint coordinates | 50% - Focused on displaying and saving video with skeletons |
| Server Development | 70% - Created the virtual machine and the MongoDB server | 30% - Pair programmed the "merge function" with Jan | 0% |
| Demo and Test Video Production | 35% | 30% | 35% |
| Video Editing | 70% - Edited the client and technical videos | 0% | 30% - Edited the user testing video |
| Report Website | 50% - Created main template with Bootstrap | 25% | 25% |
| Poster Design | 10% - Added some content | 0% | 90% - Created main template |
| UI Design | 50% - Apple Watch UI design | 0% | 50% - Kinect UI design |
| Record Keeping and Minutes | 0% | 0% | 100% |
| Blogpost Writing | 60% | 20% | 20% |
| Overall Contribution | 33.3% | 33.3% | 33.3% |
| Main Roles | Team Leader, Apple Watch Developer, Server Developer | Kinect Developer, Researcher, Server Developer | Kinect Developer, User Interface Designer, Transcriber |

Elevator Pitch

We also gave a 2-minute pitch for this project at the end of Term 1.

Critical evaluation of the project

User Interface and Experience (UI and UX)

We did a final test of our interfaces and user experience with a group of 5 people, in line with the Nielsen Norman Group's guidance [1]. Most of the feedback we received was positive, but testers raised a few points for each interface.

For the Apple Watch interface, the main gripes were that the button placement could be confusing to some users, that some found it counter-intuitive that the components are not linked or synchronised, and that they would have appreciated some colour.

For the Kinect UI, testers mostly wanted more feedback on what is happening (e.g. a message when the user is out of frame or their feet or the floor are not detected), and for the plain text to be split into more digestible portions.

For the server interface, the consensus was that the headings should be locked in place so that they are always visible no matter how many entries are in the database (so users don't need to scroll up to identify their data), that units should be added, and that the page should refresh automatically when an entry is added or deleted.

The feedback was collected through a Google Form, and the results can be viewed on the Testing page. Overall, the testers' response was extremely positive, as they enjoyed our simple user interfaces, and we greatly appreciated their constructive criticism. Given more time, we would definitely implement their suggestions.

Functionality

The Apple Watch functionality showcased some of the health statistics that the watch's sensors can provide. Overall, it satisfied all of its requirements; however, more health statistics could be derived from the data in the future.

To evaluate the Kinect functionality, we conducted extensive testing throughout the development process, in which we and our test users assessed the Kinect's tracking, display, saving and analysis of people dancing. We found its capabilities sufficient to fulfil the requirements set for us and by us.

Stability

Apple Watch: The Apple Watch app can occasionally crash if the user has not granted it the required Apple Health permissions. This could definitely be improved. Other than that, it is very stable.

Kinect: Because the data-uploading and video-saving functions are only called at the end of a session, a crash in the middle of a session means that no data is stored or sent to the server. In practice, however, the Kinect code rarely crashes.

Server: The session ID can cause problems if the naming convention is not followed or it contains a spelling mistake, in which case some data may never be merged. Furthermore, the server may throw an error on erroneous user input. Next time, we should validate user input against a more complete specification before accepting it.
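Validating the session ID when it arrives would catch both problems before they reach the merge step. The report does not state the naming convention, so the pattern below (a date followed by a lowercase user name, e.g. `2020-01-15-alice`) and the function name are purely hypothetical.

```python
import re
from datetime import datetime

# Hypothetical convention: YYYY-MM-DD-username (lowercase letters and digits)
SESSION_ID_PATTERN = re.compile(r"(\d{4}-\d{2}-\d{2})-([a-z0-9]+)")

def validate_session_id(session_id):
    """Return a normalised session ID, or raise ValueError if it is malformed."""
    candidate = session_id.strip().lower()  # tolerate stray spaces and capitals
    match = SESSION_ID_PATTERN.fullmatch(candidate)
    if match is None:
        raise ValueError(f"malformed session ID: {session_id!r}")
    datetime.strptime(match.group(1), "%Y-%m-%d")  # rejects impossible dates
    return candidate
```

Normalising case and whitespace means that near-misses still merge, while truly malformed IDs are rejected with a clear error instead of silently never merging.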

If either device has internet connection problems, the session data is lost and the results are never sent to the server.

Efficiency

Video saving: the video is only recorded once the user starts the session, which keeps the file as small as possible. Videos are stored locally rather than sent to the server, since storing many video files on the server could cost a lot of money and may not be financially viable.

Data extraction: most of the raw data is collected frame by frame, and only the values needed for later computations are kept in memory. All joint data is calibrated once at the end of the session rather than each time it is obtained, which reduces the amount of calculation needed and keeps data extraction relatively efficient.
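Calibrating once at the end of a session suits a batch computation. As an illustration, the Kinect v2 SDK exposes the floor as a plane (the body frame's `FloorClipPlane`, a normal vector plus an offset), so heights above the floor can be computed for every stored joint in one pass. This sketch assumes the plane is given as (a, b, c, d) and frames are lists of (x, y, z) positions in metres; the function name and layout are hypothetical.

```python
import math

def heights_above_floor(frames, plane):
    """Signed height (metres) of every stored point above the floor plane
    a*x + b*y + c*z + d = 0, computed in one batch at the end of a session."""
    a, b, c, d = plane
    norm = math.sqrt(a * a + b * b + c * c)  # guard against a non-unit normal
    return [[(a * x + b * y + c * z + d) / norm for (x, y, z) in frame]
            for frame in frames]
```

For instance, with the floor 1 m below the camera and the y axis pointing up, a joint at y = 0.5 m is 1.5 m above the floor.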

Server: the database is kept simple so that it can run on the cheapest virtual machine on Microsoft Azure, which is enough for this project. However, the merge function, which searches through all of the records, is not very efficient and could be improved. One idea is to use the fact that the user data is stored in the order it was recorded (and is therefore sorted by timestamp), so the merge function would not need to search records older than a specified timestamp relative to the incoming data, increasing its efficiency.
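The timestamp idea could be sketched with a binary search: if records are kept in ascending timestamp order, `bisect_left` finds the first record inside a time window around the incoming data in O(log n) steps, and the scan stops at the first record past the window. The parallel-list layout and the `window` tolerance are assumptions for illustration.

```python
from bisect import bisect_left

def find_candidates(timestamps, records, incoming_ts, window):
    """Return records whose timestamp lies within `window` seconds of incoming_ts.

    `timestamps` must be sorted ascending and aligned index-for-index with `records`.
    """
    i = bisect_left(timestamps, incoming_ts - window)  # skip all older records
    candidates = []
    # Sorted order means we can stop at the first record past the window
    while i < len(timestamps) and timestamps[i] <= incoming_ts + window:
        candidates.append(records[i])
        i += 1
    return candidates
```

In MongoDB itself, an index on the timestamp field combined with a range query would achieve the same effect without loading the records into application memory.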

Compatibility

Since our project requirements specified particular devices, we discuss here the compatibility of our software with similar devices and with older versions of them.

The Apple Watch software is compatible with all currently available Apple Watches, provided they run the latest operating system. Because the app relies on recent API and HealthKit updates from Apple, it cannot run on older watchOS versions; this is outside our control.

The Kinect software had to target the Kinect 2, but it should be partially compatible with the new Azure Kinect after some slight modifications. Unfortunately, we could not test this compatibility, as the latest Kinect is currently only available in the US.

The server's web interface was tested on multiple browsers and functioned normally on every one.

Maintainability

The server runs on a virtual machine on Microsoft Azure and costs approximately 7.26 euros per month. If the amount of data sent to the server dramatically increases, the client might need to upgrade the virtual machine, leading to a higher cost. This is something that has to be considered and maintained.
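For budgeting purposes, and assuming the same virtual machine tier and price, the annual running cost works out as:

$$7.26\ \text{EUR/month} \times 12\ \text{months} = 87.12\ \text{EUR/year}$$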

The Apple Watch code may need to be updated to remain compatible with the latest watchOS whenever Apple releases a new version; whether changes are needed depends on what the update brings. The Kinect code will not need to be maintained for the Kinect 2, but might require some minor changes to be compatible with the new Azure Kinect.

Project Management

Jan was mainly in charge of project management. At the start of the project, we devised a detailed plan that set out who would be the main point of contact with the clients, how we would communicate internally, the project timeline and internal deadlines, including a Gantt chart, which can be found on the Appendix page. We used both structured and unstructured communication, including frequent meetings, both online and face to face.

Initially, we spent too little time in the planning phase, as we were eager to start the first iteration of programming. This slowed us down at the beginning, since we had to return to gathering requirements after discovering that our idea of the project was slightly different from what the client had in mind. This is something we would definitely improve in the future by spending more time on requirements gathering. However, we managed to make up the delay over the term break.

We also followed an agile methodology, with short development cycles (scrums slightly adapted to fit our project's needs).

Overall, the project management was done really well and the results speak for themselves. We finished the main part of the project (all the must-have and should-have requirements) 2 weeks ahead of schedule, which gave us time to implement all the could-have features as well.

Future Work

If we had 6 more months to work on this project, here is what we could potentially achieve:

More Health Metrics

We plan to extract more measurements from the Kinect camera and Apple Watch, such as movement speed, the angles between bones and potentially posture recognition, which could yield further health-related metrics. However, this may prove complicated given the way the Kinect tracks spinal movements; we hope this will improve with the Azure Kinect (coming out in March).
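As an example of such a measurement, the angle between two bones can be computed from three joint positions using the dot product. This is a minimal sketch assuming joints are (x, y, z) tuples in camera space, e.g. shoulder, elbow and wrist for the elbow angle; the function name is hypothetical.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at joint b between the bones b->a and b->c."""
    u = [ai - bi for ai, bi in zip(a, b)]
    v = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(ui * vi for ui, vi in zip(u, v))
    norms = math.hypot(*u) * math.hypot(*v)
    if norms == 0:
        raise ValueError("bones must have non-zero length")
    # Clamp to [-1, 1] so acos never fails on floating-point round-off
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))
```

A fully straight arm gives 180 degrees and a right-angled elbow gives 90, so tracking this value over a session could show how fully a dancer extends their limbs.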

Hardware integration

In the future, we would like to integrate the launch of the Kinect and Apple Watch components: ideally, both would start automatically and in sync. This would help with time-stamping the data for the students after each session.

Kinect: Azure

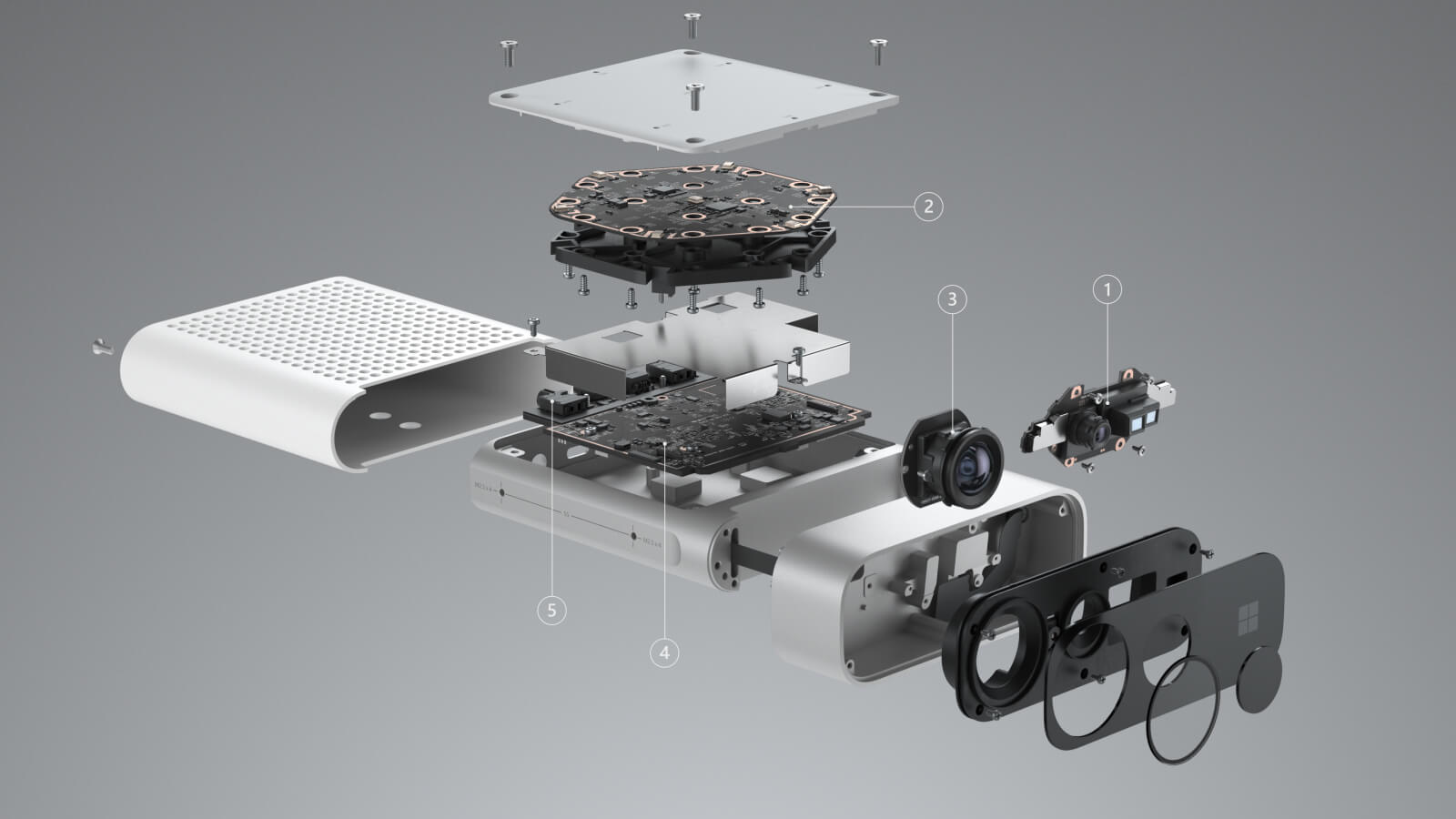

We would also switch to the latest Kinect technology for more accurate data: the Azure Kinect, coming out in the United Kingdom in March 2020, allows for multiple-camera integration, along with many other interesting features for calculating health metrics.

We would have liked to start this project with the Azure Kinect, but it was not available in the United Kingdom.

Once we decided to use the Kinect 2, we did our best to keep our code compatible with this future upgrade.

Potential Idea: ARKit 3

We briefly discussed also using Apple's ARKit 3 once more research and documentation become available for it, as we had entertained the idea of using an iPhone camera instead of the Kinect.

This would be helpful, as most Apple Watch users are also iPhone users, but for now the Kinect is still the better solution.

Our partners at Arthur Murray expressed potential interest in eventually developing a fully iOS version of this project.