Hand-drawn sketches

This is the original hand-drawn sketch of our UI. Although the design is simple, it is still not convenient for visually impaired people: the buttons are very small, and there are too many of them for visually impaired users to operate.

Voice User Interface (With Flutter)

This is the final voice UI. It is deliberately simple, with only one large button. Visually impaired users just need to press the main (green) button and speak an instruction to the app, so the whole voice-guidance system can be controlled by voice commands.

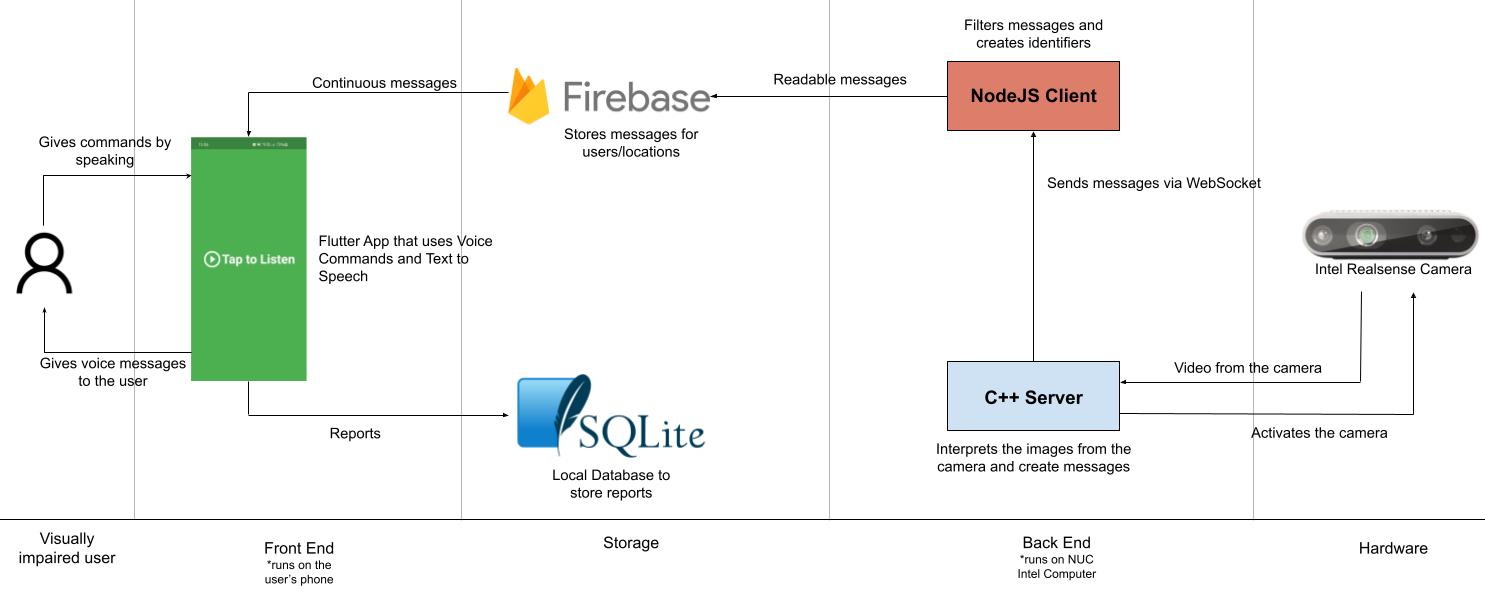

System Architecture Diagram

Our system is divided into multiple pieces.

The Back-End of our system runs on the NUC computer from Intel. The C++ Server receives video from the camera (hardware part),

analyzes and interprets them and create messages which are sent via WebSocket to the NodeJS Client-side,

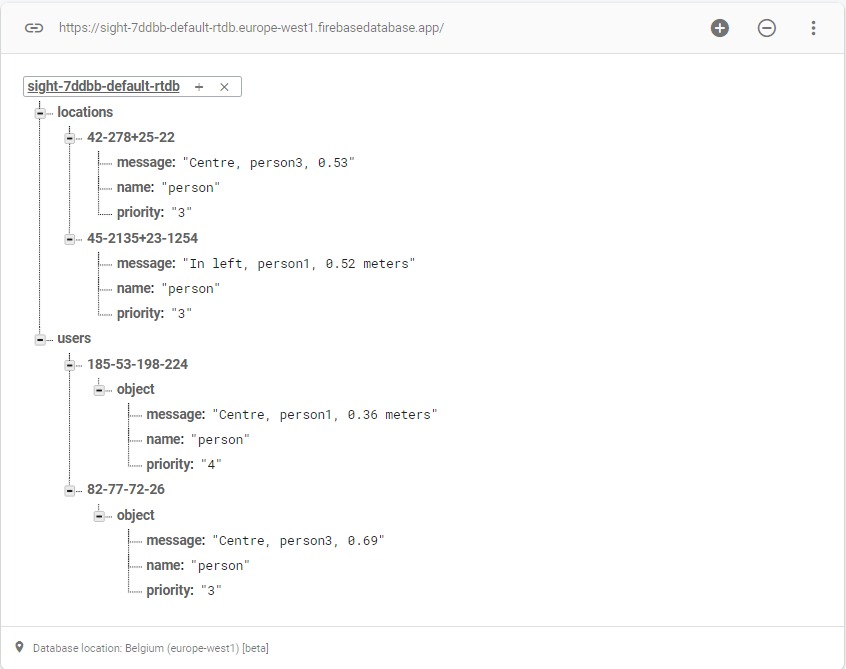

from where they will be added into the Firebase Realtime Database at certain IDs (depends on the setting [static/remote]).
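The routing step can be illustrated with a short sketch. It is written in Python for brevity rather than in the project's own Node.js code, and it is not the actual implementation. The JSON message shape, the `DEVICE_IDS` values, the `messages/...` path layout, and every function name are assumptions made for illustration; a plain dictionary stands in for the Firebase Realtime Database.

```python
import json
from dataclasses import dataclass

# Hypothetical IDs: the real IDs used in Firebase are not given in the text.
DEVICE_IDS = {"static": "static-camera-01", "remote": "remote-camera-01"}


@dataclass
class Detection:
    """A message as the C++ server might send it over WebSocket (assumed shape)."""
    mode: str  # "static" or "remote"
    text: str  # sentence the app will later read aloud


def parse_message(raw: str) -> Detection:
    """Decode one JSON WebSocket frame into a Detection."""
    data = json.loads(raw)
    mode = data["mode"]
    if mode not in DEVICE_IDS:
        raise ValueError(f"unknown mode: {mode!r}")
    return Detection(mode=mode, text=data["text"])


def database_path(detection: Detection) -> str:
    """Choose the database location from the static/remote setting."""
    return f"messages/{DEVICE_IDS[detection.mode]}"


def route(raw: str, database: dict) -> str:
    """Store the message under its path; the dict stands in for Firebase."""
    detection = parse_message(raw)
    path = database_path(detection)
    database[path] = detection.text
    return path


if __name__ == "__main__":
    fake_db = {}
    route('{"mode": "remote", "text": "Chair ahead"}', fake_db)
    print(fake_db)
```

Keeping the path selection in one small function means the static and remote systems can write to separate locations without the rest of the client needing to know which one is active.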

From there, the app reads the messages to the user. The user is a visually impaired person who wants a new assistive device to navigate more easily and improve their quality of life. The UI we designed (the front end) is the app on the user’s phone, which is controlled by voice commands and provides haptic and acoustic feedback. The user taps the screen and gives a command to the app (e.g. “START”). The system interprets the command and performs the required action: it can change settings, provide feedback, or start the remote or the static system.
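One way to picture the command handling is a small dispatcher that maps a recognized phrase to an action and returns the feedback to be spoken. The sketch below is in Python rather than the app's Flutter code and only illustrates the idea. Of the commands shown, only "START" appears in the text; "STOP", "REMOTE", "STATIC", and the `AppState` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AppState:
    """Minimal app state; the field names are assumptions."""
    running: bool = False
    mode: str = "static"  # "static" or "remote"


def normalize(utterance: str) -> str:
    """Trim, upper-case, and collapse whitespace in the recognized text."""
    return " ".join(utterance.upper().split())


def handle_command(state: AppState, utterance: str) -> str:
    """Apply a recognized command and return the feedback to speak."""
    command = normalize(utterance)
    if command == "START":
        state.running = True
        return f"Starting the {state.mode} system"
    if command == "STOP":  # hypothetical command
        state.running = False
        return "System stopped"
    if command in ("REMOTE", "STATIC"):  # hypothetical settings commands
        state.mode = command.lower()
        return f"Switched to {state.mode} mode"
    # Unknown input still produces feedback so the user is never left guessing.
    return "Sorry, I did not understand"


if __name__ == "__main__":
    state = AppState()
    print(handle_command(state, "remote"))
    print(handle_command(state, " start "))
```

Returning the feedback as a string keeps the handler independent of the speech engine, so the same logic could drive both acoustic and haptic responses.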

Whenever the user starts the system, the app will retrieve data from the Firebase Realtime Database and will use Text to Speech technologies to speak the message

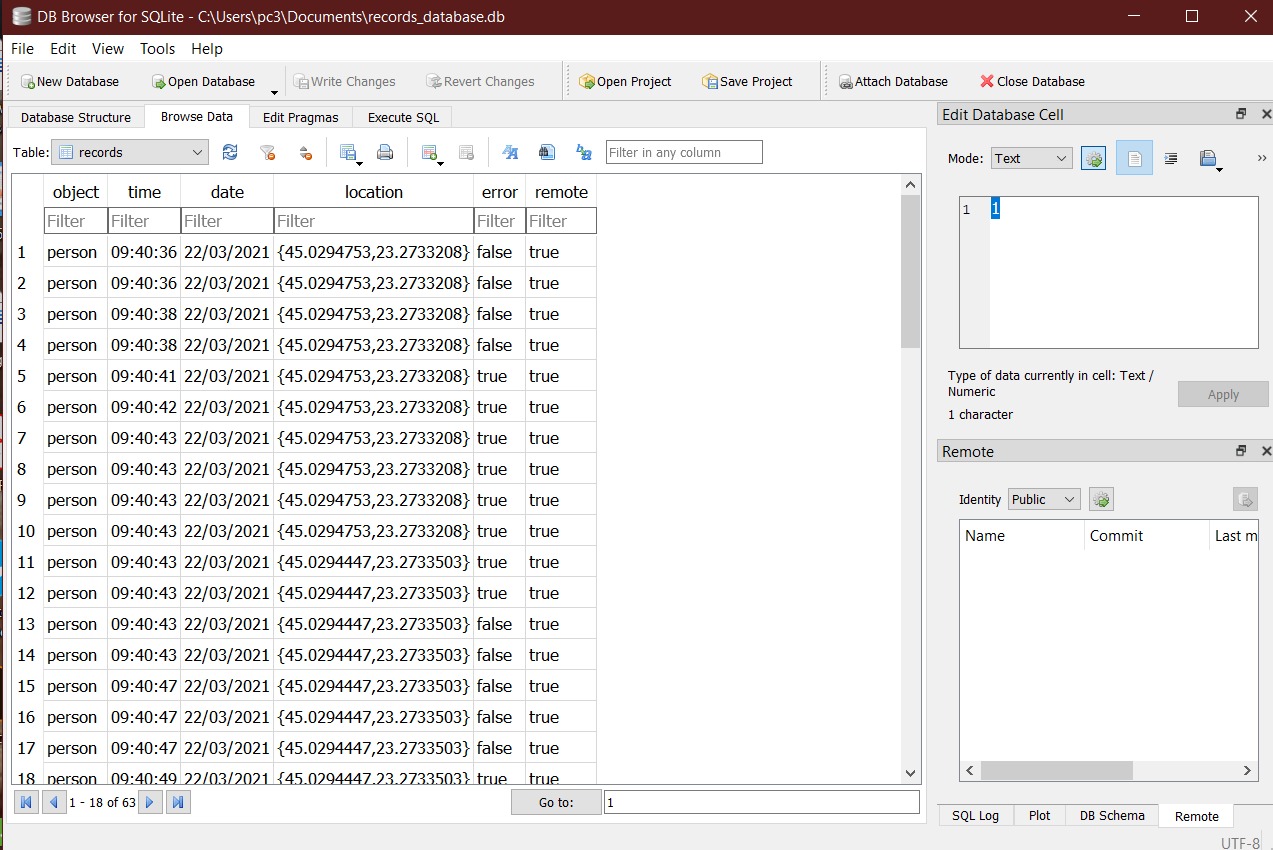

(from the Firebase realtime database) for the visually impaired person. It also adds into a local SQLite database all the objects recognized, alongside the date

and time, the location, the remote/static attribute and either true/false for an error parameter (changed if the user sees an error in the object

recognizing part – activated by double-tapping on the screen).
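The local log can be sketched with Python's built-in `sqlite3` module. This is an illustration under stated assumptions, not the app's actual schema: the table name `recognized_objects`, the column names, and the helper functions are all hypothetical, and the location is stored as free text because its real format is not specified.

```python
import sqlite3
from datetime import datetime, timezone


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open the log and create the (assumed) table if it does not exist."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS recognized_objects (
            id       INTEGER PRIMARY KEY AUTOINCREMENT,
            label    TEXT NOT NULL,
            seen_at  TEXT NOT NULL,
            location TEXT NOT NULL,
            mode     TEXT NOT NULL CHECK (mode IN ('remote', 'static')),
            is_error INTEGER NOT NULL DEFAULT 0
        )
        """
    )
    return conn


def log_object(conn: sqlite3.Connection, label: str, location: str, mode: str) -> int:
    """Insert one recognized object with the current UTC time; return its row id."""
    seen_at = datetime.now(timezone.utc).isoformat()
    cur = conn.execute(
        "INSERT INTO recognized_objects (label, seen_at, location, mode) "
        "VALUES (?, ?, ?, ?)",
        (label, seen_at, location, mode),
    )
    conn.commit()
    return cur.lastrowid


def flag_error(conn: sqlite3.Connection, row_id: int) -> None:
    """Mark a row as a recognition error, e.g. after a double tap."""
    conn.execute(
        "UPDATE recognized_objects SET is_error = 1 WHERE id = ?", (row_id,)
    )
    conn.commit()


if __name__ == "__main__":
    conn = open_log()
    row_id = log_object(conn, "chair", "living room", "remote")
    flag_error(conn, row_id)
    print(conn.execute("SELECT * FROM recognized_objects").fetchall())
```

Storing the error flag next to each entry means misrecognized objects can later be filtered out or reviewed without deleting the original record.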

Data storage

When the user starts the system, the app retrieves data from the Firebase Realtime Database and uses text-to-speech to speak each message to the visually impaired person.

We also use a local SQLite database that stores the retrieved Sight++ data for the mobile camera, including the time, the location, the remote/static attribute, and a true/false error parameter.