Testing

Testing Strategy

HeartBot is a client-side application, so it is important to run a wide variety of tests on it to ensure that it is usable, functional, and stable, and that it meets the expectations of both the client and its users.

Testing Scope

- Since the users of our chatbot are people (including policy makers, researchers, and lawmakers), we need to account for unpredictable human behaviour, such as typos. We therefore tested HeartBot thoroughly to ensure it handles unexpected input correctly and provides clear guidance and responses where possible.

- HeartBot provides a wide range of functionality, such as answering FAQs and retrieving data from the BHF compendium. Each of these functions needs to be thoroughly tested to ensure the program is robust and users can easily interact with the application.

- HeartBot is intended to help researchers and BHF staff. Therefore, we conducted tests to ensure the application meets their requirements and needs.

Testing Method

- We designed unit tests for the different components of HeartBot to check the correctness of each basic component. These unit tests were also crucial because they exposed hidden bugs and allowed us to refactor the code safely.

- To evaluate the compliance of the system with the drafted specification, we conducted integration testing.

- To ensure reliability and a correct overall solution, we ran documentation testing, cleaning up our comments and file structure. We also went through the code once more to handle any remaining exceptions and to check that our web-based GUI functions as expected.

- To ensure a great user experience with both the bot's design and the user interface, we conducted user acceptance testing.

Testing Principle

We tested HeartBot throughout its development. As we wrote the system, we continuously ran it on different inputs to ensure it behaved as expected. Any test that failed was investigated, and any problem found was patched immediately. After implementing the complete functionality, we wrote multiple unit, integration, and system tests to cover as much of the code as possible.

Unit Testing

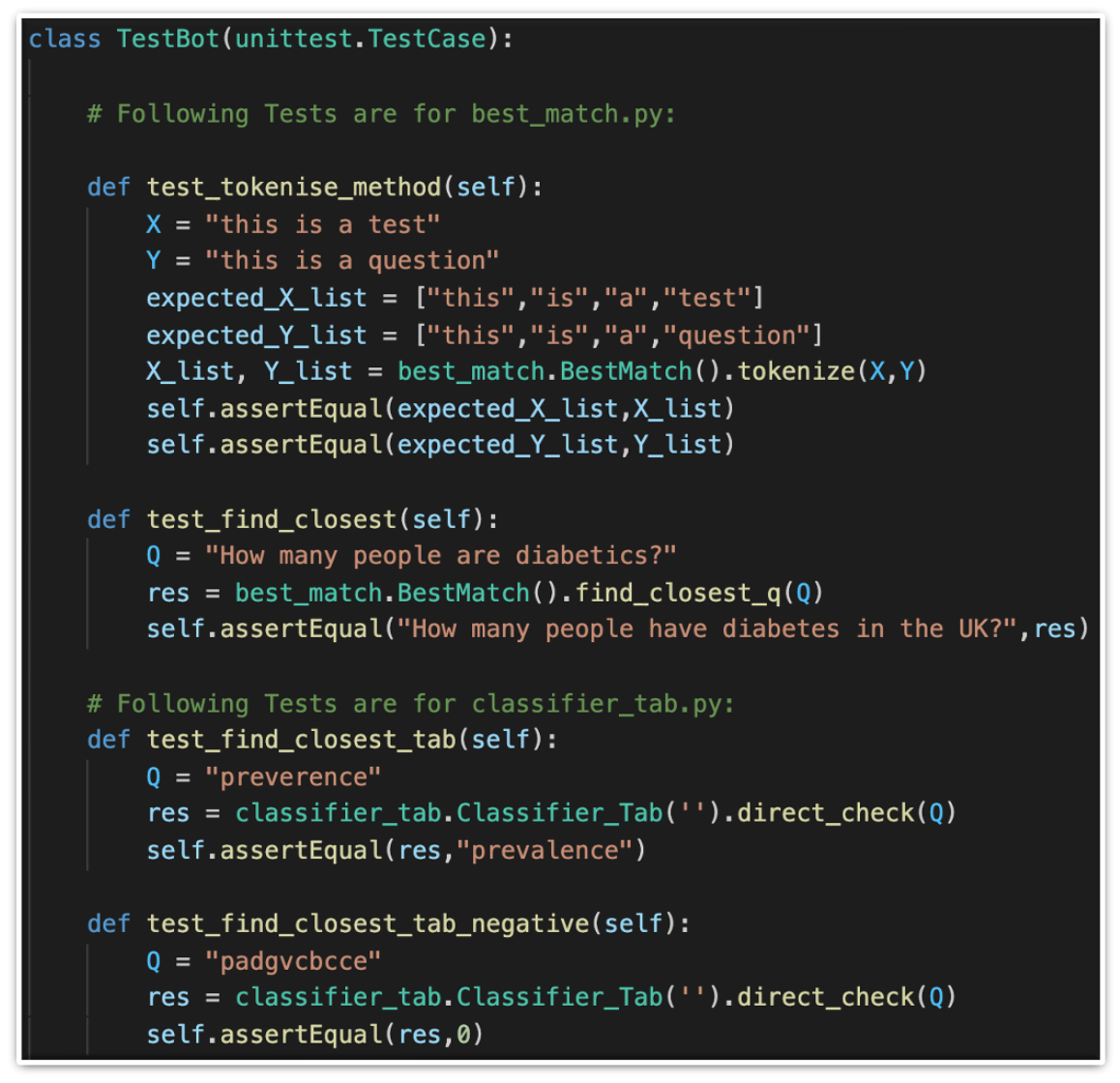

We wrote unit tests for our functions using Python's unittest module. The unit tests covered our tokenise method, our closest-match methods, our check methods, and our table name class. By testing a range of possible inputs, we covered most of the code.
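As an illustration of the style of test described above, here is a minimal sketch using unittest. The functions `tokenise` and `closest_match` are hypothetical stand-ins written for this example (the real HeartBot methods may have different names, signatures, and behaviour), and the fuzzy matching uses the standard library's `difflib.get_close_matches`, which is an assumption rather than the project's confirmed approach.

```python
import difflib
import unittest


def tokenise(text):
    """Hypothetical stand-in: lower-case the input and split on whitespace."""
    return text.lower().split()


def closest_match(word, candidates, cutoff=0.6):
    """Hypothetical stand-in: return the best fuzzy match for word, or None."""
    matches = difflib.get_close_matches(word, candidates, n=1, cutoff=cutoff)
    return matches[0] if matches else None


class TokeniseTests(unittest.TestCase):
    def test_lowercases_and_splits(self):
        self.assertEqual(tokenise("Heart Disease"), ["heart", "disease"])

    def test_empty_input_gives_no_tokens(self):
        self.assertEqual(tokenise(""), [])


class ClosestMatchTests(unittest.TestCase):
    # Illustrative table names only; not taken from the BHF compendium.
    TABLES = ["obesity", "smoking", "mortality"]

    def test_typo_is_mapped_to_known_name(self):
        self.assertEqual(closest_match("obesty", self.TABLES), "obesity")

    def test_unrelated_word_has_no_match(self):
        self.assertIsNone(closest_match("xyz", self.TABLES))
```

Saved as a file such as test_heartbot.py (a hypothetical name), tests like these can be run with `python -m unittest test_heartbot`.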

System & Integration Testing

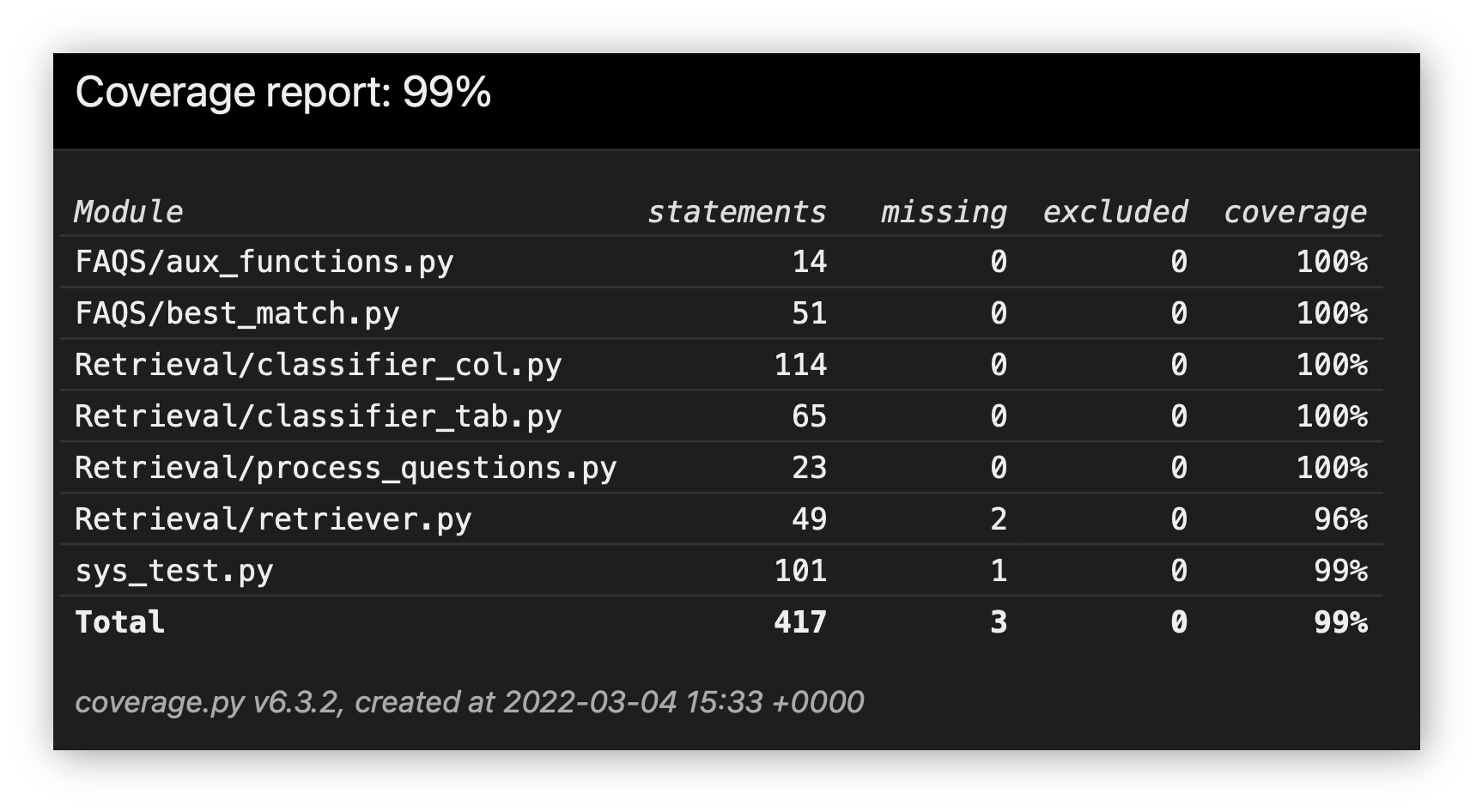

We wrote system tests for the whole system, covering every category of input we could identify: for example greetings, FAQs, retrieval questions from different tables, and questions with no answers. Together, the system and unit tests provide 99% coverage of our functionality.
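A system test of this kind can be sketched as one table of inputs, one per category, checked against the bot's single entry point. Everything below is an assumption made for illustration: `respond`, the FAQ text, and the reply strings are placeholders, not HeartBot's actual interface or wording.

```python
import unittest

# Placeholder data for the sketch; not real HeartBot content.
GREETINGS = {"hello", "hi", "hey"}
FAQS = {"what is the bhf compendium": "Placeholder FAQ answer."}
GREETING_REPLY = "Hello! How can I help?"
FALLBACK = "Sorry, I could not find an answer to that."


def respond(message):
    """Hypothetical stand-in for the chatbot's top-level reply function."""
    text = message.strip().lower().rstrip("?!.")
    if text in GREETINGS:
        return GREETING_REPLY
    if text in FAQS:
        return FAQS[text]
    return FALLBACK


class SystemTests(unittest.TestCase):
    # One representative input per category named in the text above.
    CASES = [
        ("Hello", GREETING_REPLY),                            # greeting
        ("What is the BHF compendium?", "Placeholder FAQ answer."),  # FAQ
        ("asdfgh", FALLBACK),                                 # no answer
        ("", FALLBACK),                                       # empty input
    ]

    def test_each_input_category(self):
        for message, expected in self.CASES:
            # subTest reports each failing input separately.
            with self.subTest(message=message):
                self.assertEqual(respond(message), expected)
```

Keeping the cases in a table makes it cheap to add a new category, such as retrieval questions against a specific table, without writing a new test method.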

User Acceptance Testing

Because it is very important that HeartBot meets user needs and requirements, and because we wanted constructive feedback, we asked four people to test HeartBot for us. They included our client and pseudo users who had helped us gather requirements.

Testers

Testers include:

- Charles - 33-year-old researcher interested in key statistics regarding cardiovascular diseases

- Michael - 60-year-old policy maker who makes health policies regarding the prevalence of obesity

- Amelia - BHF team member whose job includes answering people's questions about the data in the BHF compendium

- Katie - PhD student at UCL researching data about hospital admissions for cardiac arrests

These users were chosen because they are potential users of HeartBot: for example, the BHF team, who need to query the compendium regularly; researchers, who need statistics from the compendium; and policy makers, who use this data. None of them are technology or software experts, and they all come from different fields of work.

Test Cases

We devised four test cases for the users to try and give feedback on. We gave the users a list of requirements and a scale on which to rate each one, and asked them for comments.

- Test Case 1 - We let the users ask HeartBot FAQs to see whether they were satisfied with the responses

- Test Case 2 - We asked the users to ask HeartBot data retrieval questions about the BHF compendium to see whether they got appropriate data

- Test Case 3 - We asked the users to ask questions with no data, or wrong questions, to see whether they got friendly error messages

- Test Case 4 - We asked the users to make general conversation with HeartBot, such as greetings, to see whether they got appropriate responses back

Feedback from Users

| Requirement | Totally Disagree | Disagree | Neutral | Agree | Totally Agree | Comments (+ positive, - negative) |
|---|---|---|---|---|---|---|
| HeartBot runs to end of the job | 0 | 0 | 0 | 0 | 4 | |
| Responses are clear and not confusing | 0 | 1 | 0 | 0 | 3 | + the tables displayed as results provide all the information so are very clear; - error messages are very generic |
| Correct data answers | 0 | 0 | 0 | 0 | 4 | + correct table displayed for questions; + correct data retrieved |
| Answers received | 0 | 0 | 0 | 0 | 4 | + chatbot answers all questions; + question always answered |
| Correct FAQ answers | 0 | 0 | 0 | 0 | 4 | + all FAQs answered correctly; + correct answers |
| Chatbot can make conversation | 3 | 1 | 0 | 0 | 0 | - chatbot cannot answer questions like how are you; - chatbot does not make conversation |
| Friendly error messages | 2 | 0 | 2 | 0 | 0 | - generic error messages; + error messages are friendly but do not state the error; - error messages do not define what to do |
| Fast response | 0 | 0 | 0 | 0 | 4 | |
| Easy to use/navigate | 0 | 0 | 0 | 0 | 4 | + useful help button; + clear icons and buttons; + easy to use interface like a chat |
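One way to summarise the ratings in the table is a mean score per requirement. The sketch below assumes a weighting of 1 (Totally Disagree) to 5 (Totally Agree) and a cut-off of 3.0 for "needs work"; both are choices made for this example, not part of the original survey. The counts are copied from the table.

```python
# Assumed weights for the five-point scale, in column order.
WEIGHTS = (1, 2, 3, 4, 5)

# Response counts copied from the feedback table, in column order.
RESPONSES = {
    "HeartBot runs to end of the job": (0, 0, 0, 0, 4),
    "Responses are clear and not confusing": (0, 1, 0, 0, 3),
    "Correct data answers": (0, 0, 0, 0, 4),
    "Answers received": (0, 0, 0, 0, 4),
    "Correct FAQ answers": (0, 0, 0, 0, 4),
    "Chatbot can make conversation": (3, 1, 0, 0, 0),
    "Friendly error messages": (2, 0, 2, 0, 0),
    "Fast response": (0, 0, 0, 0, 4),
    "Easy to use/navigate": (0, 0, 0, 0, 4),
}


def mean_score(counts):
    """Weighted mean rating for one requirement."""
    total = sum(counts)
    if total == 0:
        raise ValueError("no responses recorded")
    return sum(w * c for w, c in zip(WEIGHTS, counts)) / total


def needs_work(responses, threshold=3.0):
    """Requirements whose mean is at or below threshold, lowest first."""
    scored = [(name, mean_score(counts)) for name, counts in responses.items()]
    return sorted((item for item in scored if item[1] <= threshold),
                  key=lambda item: item[1])


for name, score in needs_work(RESPONSES):
    print(f"{name}: {score:.2f}")
```

Under these assumptions, only two requirements fall at or below the cut-off: "Chatbot can make conversation" (1.25) and "Friendly error messages" (2.00).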

Conclusion

We were happy with the users' feedback on HeartBot. Their constructive comments helped us improve HeartBot and make it better suited to users' needs. In response, we made the error messages more detailed and useful, and updated the chatbot so that it can make general conversation with users.