Testing

Testing Strategy

Our Community Impact Report Portal consists of a server side (Python Django backend) and a client side (React frontend), and our testing strategy covers both thoroughly to ensure reliability, usability and performance. Most backend testing is automated, covering Application Programming Interface (API) endpoints and key algorithms, while most frontend testing was user-oriented, including live-demo feedback from test users, clients and faculty members. We also tested the compatibility of our codebase on various machines. Further, we adopted a rigorous continuous integration (CI) and code review process to minimise errors in our application.

Testing Scope

Our testing scope includes the following areas:

| Testing Area | Description |
|---|---|
| Backend Testing | Verifying API endpoints, database interactions, and algorithms (e.g., semantic search, PDF content extraction). |
| Frontend Testing | Ensuring correct rendering of React components, proper user interactions, and UI responsiveness across devices. |
| Compatibility Testing | Testing on different OSes (Windows, Linux, macOS) and browsers (Chrome, Firefox, Safari, Edge). |
| Performance Testing | Evaluating application performance under stress, particularly for resource-intensive features like the browser-based large language model (LLM). |
| User Acceptance Testing (UAT) | Collecting real-world feedback to identify usability issues and improvements. |
| Security Testing | Ensuring secure handling of user data, especially during login and password management. |

Testing Approach

Automated Testing

- Unit Testing: Verifying individual components and functions.

- Linting: Maintaining code consistency and style.

- Continuous Integration (CI): Running tests on every pull request to catch errors early.

Manual Testing

- User Acceptance Testing (UAT): Testing with real users to assess usability and functionality.

- Compatibility Testing: Verifying cross-device, OS, and browser compatibility.

- Performance Testing: Testing responsiveness and stability under varying conditions.

Unit and Integration Testing

Continuous Integration (GitHub Actions)

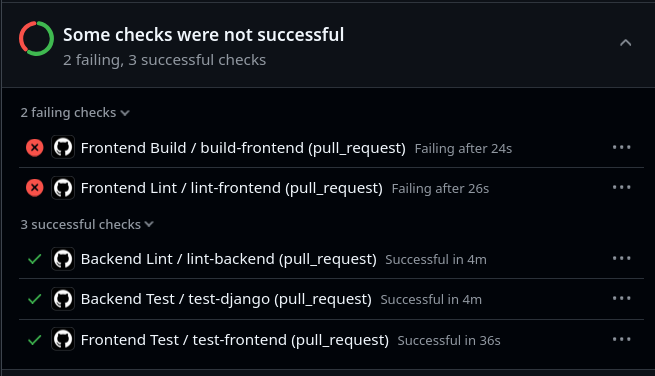

The following tests have been implemented as part of our Continuous Integration (CI).

- Linting Frontend

- Linting Backend

- Building Frontend

- Unit Testing Frontend

- Unit Testing Backend

GitHub Actions lets us automatically verify that a pull request has not introduced any breaking changes, maintaining the integrity of our codebase by catching errors early in the development process [2]. Our workflow files live in .github/workflows/, and the tests are executed whenever a new pull request is made.

The CI tests either fail or pass, and are viewable in detail on GitHub.

Each team member can use these CI tests to troubleshoot the faults that have been introduced in their new pull request. Along with this, a potential reviewer can very quickly see whether a pull request is ready for further review, or still needs to be refined.
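In GitHub Actions, a step fails when its command exits with a non-zero status, which is what makes each check pass or fail. As a minimal local sketch (not our actual workflow files), the helper below runs similar commands with Python's subprocess module and reports each as PASS or FAIL. The Check class, run_checks function and PROJECT_CHECKS list are hypothetical, and the folder names assume the repository's frontend/ and backend/ layout.

```python
import subprocess
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Check:
    """One CI-style check: a display name, a command and the folder to run it in."""
    name: str
    command: List[str]
    cwd: Optional[str] = None


# Hypothetical mirror of some CI jobs; the real commands live in the workflow files.
PROJECT_CHECKS = [
    Check("Linting Frontend", ["npx", "eslint", "src", "--max-warnings=0"], cwd="frontend"),
    Check("Building Frontend", ["npm", "run", "build"], cwd="frontend"),
    Check("Unit Testing Backend", ["python", "manage.py", "test"], cwd="backend"),
]


def run_checks(checks: List[Check]) -> Dict[str, bool]:
    """Run each check; exit code 0 counts as a pass, anything else as a failure."""
    results: Dict[str, bool] = {}
    for check in checks:
        try:
            completed = subprocess.run(
                check.command, cwd=check.cwd, capture_output=True, text=True
            )
            passed = completed.returncode == 0
            output = completed.stdout + completed.stderr
        except OSError as exc:  # e.g. the tool or folder does not exist
            passed = False
            output = str(exc)
        results[check.name] = passed
        print(f"[{'PASS' if passed else 'FAIL'}] {check.name}")
        if not passed:
            print(output)  # show the tool's own messages to help troubleshooting
    return results
```

Calling run_checks(PROJECT_CHECKS) from the repository root would print one PASS/FAIL line per check. Unlike GitHub Actions, this sketch runs the checks sequentially on the local machine.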

Implementing CI tests early in our project (January) led to iterative improvements and a manageable workload. In contrast, on one of our previous projects, adding CI tests later on caused many checks to fail at once, forcing large-scale changes to the code.

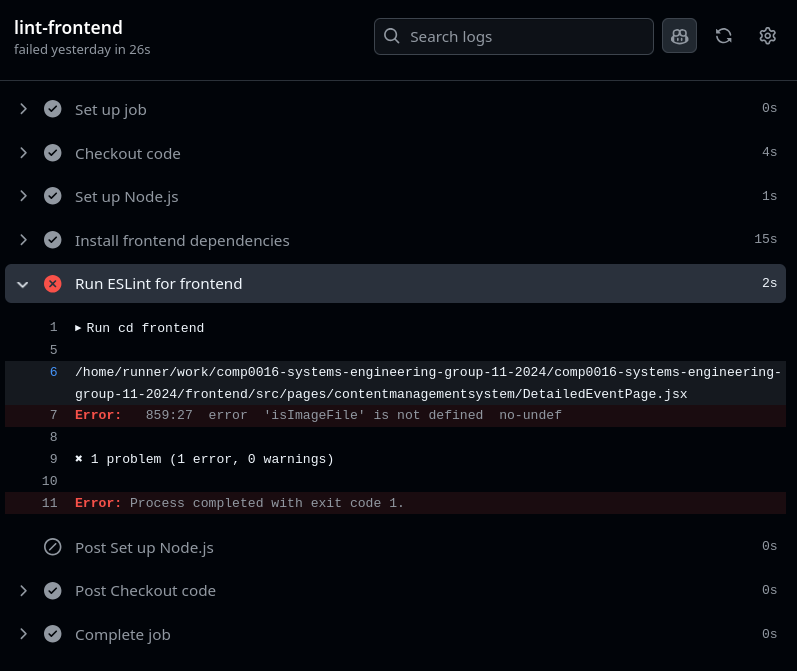

Linting

Linters automatically analyse our code and report warnings and errors for stylistic issues. We run linting checks to enforce consistent code regardless of which team member wrote it [1].

This includes flagging unused variables or methods, incorrect indentation and trailing whitespace.

Frontend

The linting tool for our React frontend is ESLint, with a predefined ruleset based on create-react-app.

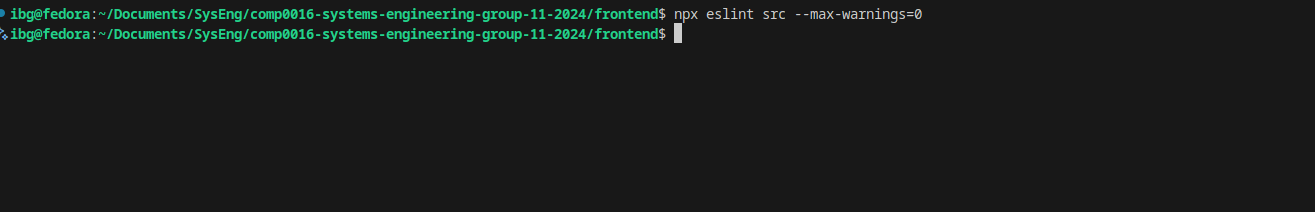

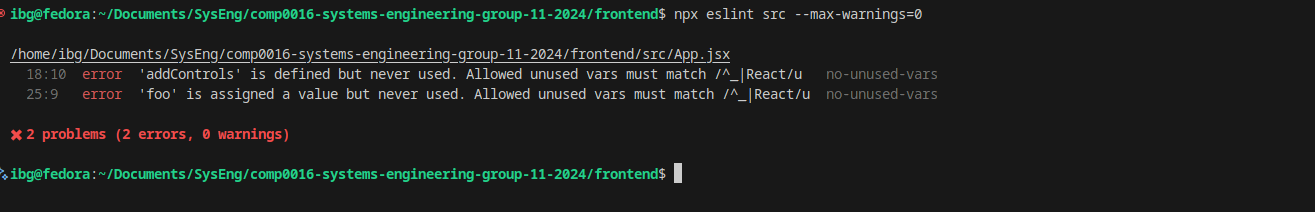

To perform linting tests, run npx eslint src --max-warnings=0.

This lints the src directory and treats any warning as a failure, so the check only passes if the code has no warnings or errors.

If the code has no linting issues, nothing is printed to the terminal.


However, if ESLint finds issues, they are reported as warnings or errors, with file references and line numbers attached.

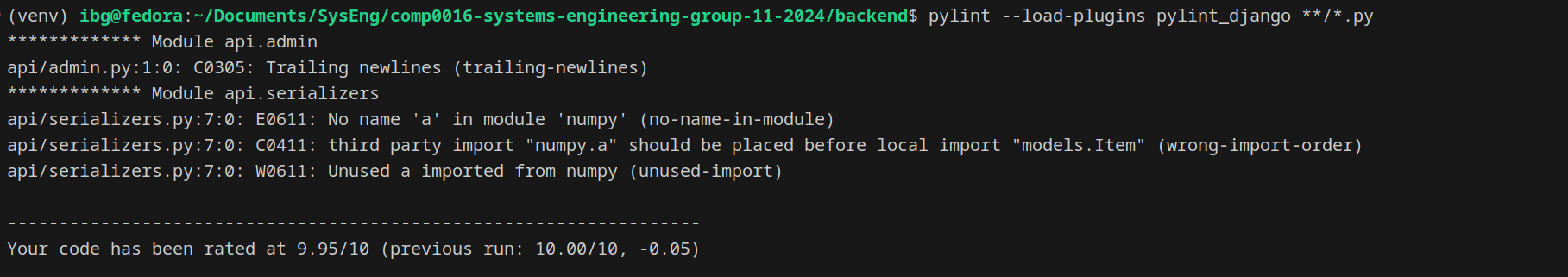

Backend

The linting tool for our Python backend is pylint. We use the pylint_django plugin to improve pylint's analysis of our Django code.

Here is the command:

pylint --load-plugins pylint_django **/*.py

The result of the command will be a score out of 10. The CI test fails if the score is below 10.
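The score threshold can be checked mechanically. pylint prints its rating in a summary line of the form "Your code has been rated at X/10", and it also offers a --fail-under option for setting a minimum score directly. The sketch below uses hypothetical helpers (parse_pylint_score and lint_passes are illustrative names, not part of pylint) to extract the rating from captured output and apply the same below-10 rule as our CI check.

```python
import re
from typing import Optional

# Matches pylint's summary line, e.g. "Your code has been rated at 9.50/10".
RATING_PATTERN = re.compile(r"rated at (-?\d+(?:\.\d+)?)/10")


def parse_pylint_score(output: str) -> Optional[float]:
    """Return the score from pylint's output, or None if no rating line is present."""
    match = RATING_PATTERN.search(output)
    return float(match.group(1)) if match else None


def lint_passes(output: str, threshold: float = 10.0) -> bool:
    """Apply the CI rule: fail if the score is missing or below the threshold."""
    score = parse_pylint_score(output)
    return score is not None and score >= threshold


sample = "Your code has been rated at 9.50/10 (previous run: 10.00/10, -0.50)"
print(parse_pylint_score(sample), lint_passes(sample))  # 9.5 False
```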

One of the most valuable outcomes of our backend linting was the enforcement of docstring comments for every method and file, which enabled very thorough auto-generated documentation.
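The enforcement described above comes from pylint's docstring checks (messages such as missing-module-docstring, missing-class-docstring and missing-function-docstring). To show the idea, here is a simplified, stdlib-only approximation using Python's ast module. find_missing_docstrings and the example source are hypothetical, and unlike pylint the helper ignores exemptions such as the no-docstring-rgx setting for private names.

```python
import ast
from typing import List


def find_missing_docstrings(source: str) -> List[str]:
    """List the module, classes and functions in `source` that lack a docstring."""
    tree = ast.parse(source)
    missing = []
    if ast.get_docstring(tree) is None:
        missing.append("module")
    # ast.walk visits every node, so nested methods are checked too.
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            if ast.get_docstring(node) is None:
                missing.append(f"{node.name} (line {node.lineno})")
    return missing


example = '''"""Views for the reports app."""

def list_reports():
    """Return all published reports."""

class ReportSerializer:
    def validate(self):
        return True
'''
print(find_missing_docstrings(example))
# ['ReportSerializer (line 6)', 'validate (line 7)']
```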

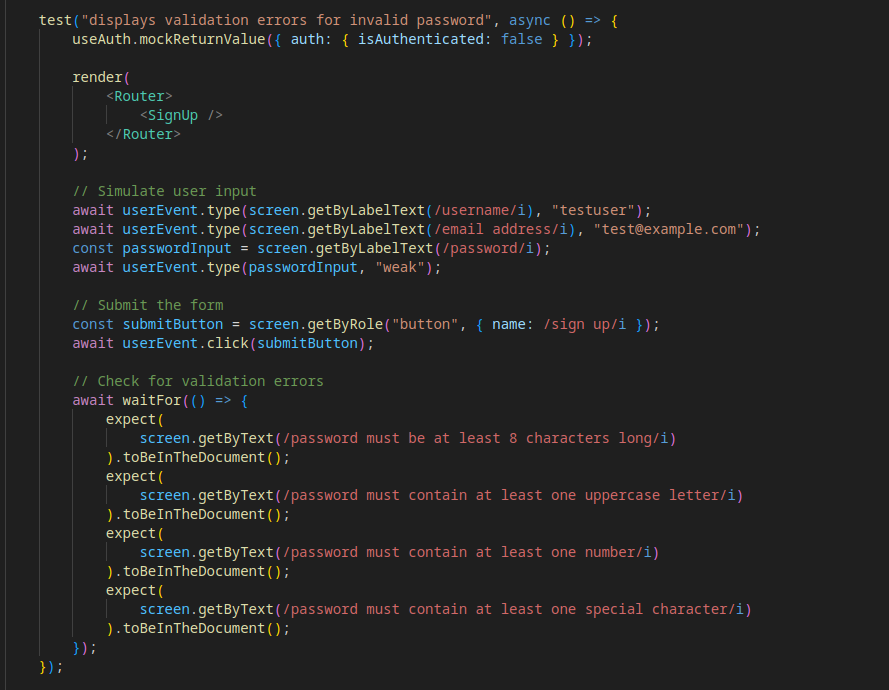

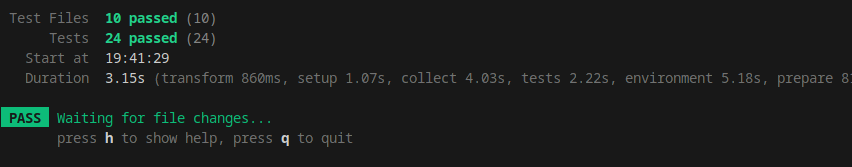

Unit Testing Frontend

We run unit tests on the frontend to check that components render and to simulate user actions. This ensures that components such as buttons and fields behave as expected in response to specific user actions.

We use Vitest to write and execute tests. We first tried Jest, but could not make it work with our implementation of ProtectedRoutes in our React app. We therefore changed our build process from create-react-app to Vite so that our frontend tests would work. This was not without its challenges: the change in build process broke our Tailwind CSS styling, which required troubleshooting to remedy [3] [4].

Test files are written in frontend/src/__tests__.

For example, here is a mock test for the sign-up page. We simulate a weak password being entered into the form, and expect the user to receive a warning explaining what to change in their password.

To execute all tests, run npm run test, which runs the vitest command.

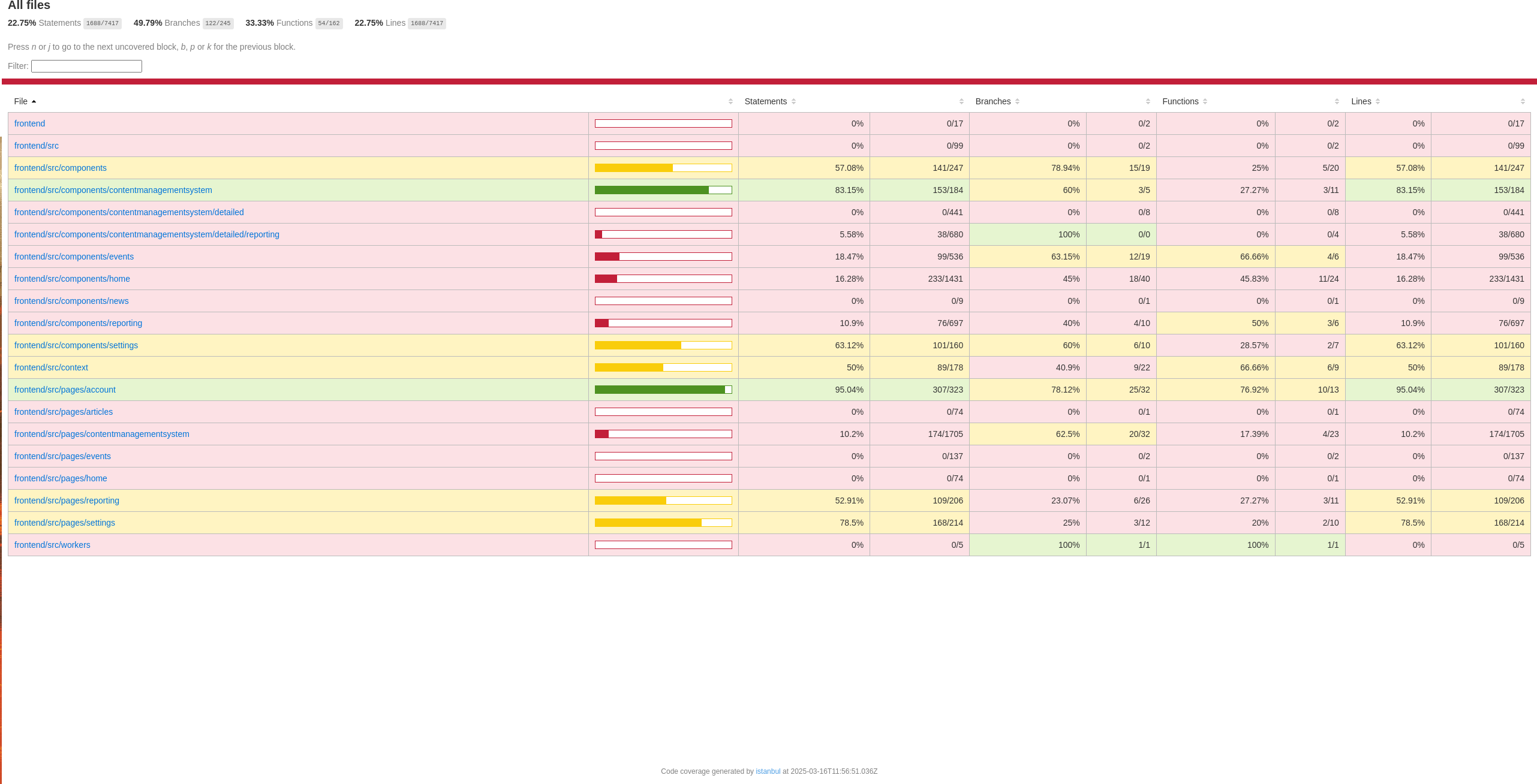

Our frontend has 23% test coverage (npm run coverage), as a larger share of our frontend testing effort went into user acceptance testing.

Our frontend unit tests are extensible: future developers can add tests for new components and pages, and the current 24 tests ensure that future changes do not break existing components.

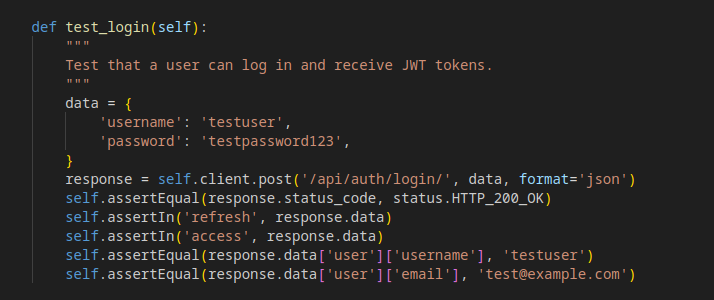

Unit Testing Backend

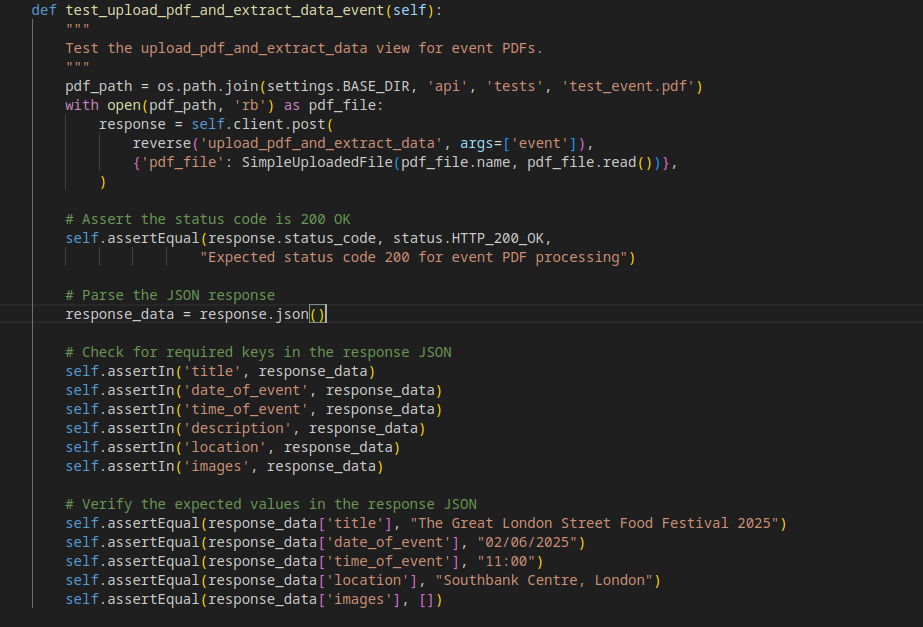

Unit testing on the backend covers API endpoints and model behaviour in the Django application. This ensures that the endpoints behave as intended, and the tests also serve as a guide for future developers. Key algorithms, such as semantic search and content extraction from Portable Document Format (PDF) documents for content management, are tested for the same reasons.

We used Django's testing framework, which is built on Python's unittest library [5].

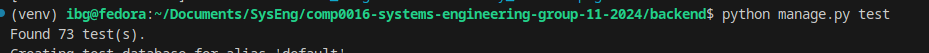

Running python manage.py test executes every test file whose name begins with test (Django's default discovery pattern is test*.py).
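Django's default test runner finds these files through unittest's discovery machinery with the pattern test*.py. The stdlib-only sketch below reproduces that behaviour in a throwaway folder so the naming rule is easy to see; count_discovered_tests and the example file names are hypothetical and not taken from our repository.

```python
import sys
import tempfile
import textwrap
import unittest
from pathlib import Path
from typing import Dict

# A tiny test module body reused for every example file.
TEST_BODY = textwrap.dedent(
    """
    import unittest

    class ExampleTest(unittest.TestCase):
        def test_addition(self):
            self.assertEqual(1 + 1, 2)
    """
)


def count_discovered_tests(files: Dict[str, str], pattern: str = "test*.py") -> int:
    """Write `files` to a temporary folder and count the tests that discovery finds."""
    with tempfile.TemporaryDirectory() as folder:
        for name, body in files.items():
            Path(folder, name).write_text(body)
        suite = unittest.TestLoader().discover(folder, pattern=pattern)
        count = suite.countTestCases()
        # Forget the imported example modules so the same names can be reused.
        for name in files:
            sys.modules.pop(Path(name).stem, None)
        return count


# Only the file whose name starts with "test" is collected.
print(count_discovered_tests({"test_reports.py": TEST_BODY, "reports_checks.py": TEST_BODY}))
# 1
```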

Here is one of our API tests for reference:

We also test key algorithms with example files:

All unit tests can be run at once, and any failures are reported in the console.
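To illustrate the general shape of an algorithm test, here is a minimal sketch with hypothetical names (cosine_similarity, rank_documents, RankDocumentsTest). It assumes that semantic search ranks documents by the cosine similarity of embedding vectors, and it uses tiny hand-written vectors in place of a real embedding model; it is not our actual search code.

```python
import math
import unittest
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_documents(query: List[float], documents: Dict[str, List[float]]) -> List[str]:
    """Return document ids ordered from most to least similar to the query vector."""
    return sorted(
        documents,
        key=lambda doc_id: cosine_similarity(query, documents[doc_id]),
        reverse=True,
    )


class RankDocumentsTest(unittest.TestCase):
    """Example algorithm tests using small, hand-made example data."""

    def test_closest_document_ranks_first(self):
        documents = {"flooding": [0.9, 0.1], "parking": [0.1, 0.9]}
        self.assertEqual(rank_documents([1.0, 0.0], documents)[0], "flooding")

    def test_zero_vector_does_not_raise(self):
        self.assertEqual(cosine_similarity([0.0, 0.0], [1.0, 0.0]), 0.0)
```

In the Django project, a class like this would live in a file matching test*.py and run alongside the other tests with python manage.py test.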

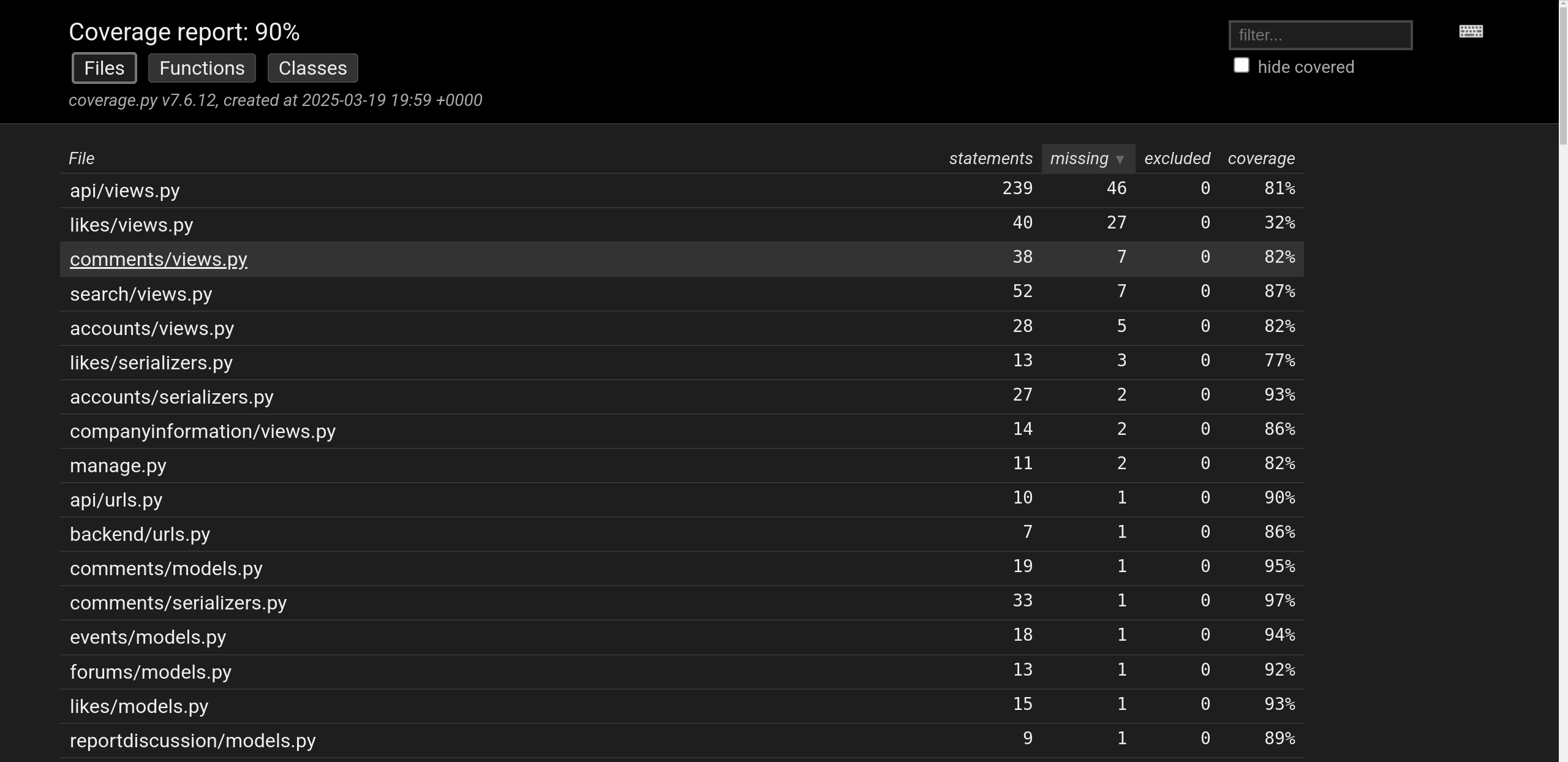

We are pleased to report a backend test coverage of 90%.

To run coverage:


- coverage run manage.py test

- coverage html --omit="*/test*" -i

- Open backend/htmlcov/index.html to view coverage

High coverage and robust tests are especially important because the backend handles user logins and passwords, so handling these API requests correctly is crucial for a production-ready application. We are happy to report that our tests show this is the case for our project.

Build Testing Frontend

We also have a build test for our frontend (npm run build), which should produce a frontend/dist folder containing the HTML, CSS and JS build files. In CI, the build test surfaces any breaking changes in the code. It was also helpful for our continuously deployed frontend, as it let us double-check that the application built correctly before it was deployed to Azure.
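One way to make this check explicit is to assert that the expected files exist after the build. The sketch below assumes Vite's default output layout (an index.html in dist, with hashed JavaScript and CSS bundles under dist/assets); missing_build_outputs is a hypothetical helper, not part of our CI.

```python
from pathlib import Path
from typing import List


def missing_build_outputs(dist_dir: str) -> List[str]:
    """Return the expected build artefacts that are absent from `dist_dir`."""
    dist = Path(dist_dir)
    expected = ["index.html", "*.js", "*.css"]
    if not dist.is_dir():
        return expected  # the build did not produce an output folder at all
    missing = []
    if not (dist / "index.html").is_file():
        missing.append("index.html")
    for pattern in ("*.js", "*.css"):
        # rglob also searches subfolders, e.g. dist/assets/index-<hash>.js
        if not any(dist.rglob(pattern)):
            missing.append(pattern)
    return missing
```

After npm run build, missing_build_outputs("frontend/dist") should return an empty list; a non-empty result points to a broken build.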

Continuous Deployment

We have a working configuration for Azure deployments: our workflow files automatically rebuild and redeploy the frontend and backend on Microsoft Azure whenever the main branch changes (e.g. when a PR is merged).

To deploy the application, follow the relevant documentation for your cloud service.

The aspects that will be the same across all cloud providers are as follows:

- Django production checklist [6]

  - This checklist details what to follow for production.

- Separate frontend and backend folders

  - The deployment processes for the backend and frontend both require a cd into their respective folders before deployment is possible. This is evident in our deployment workflow files (62-azure-live-host_sysenggroup11.yml, azure-static-web-apps-gentle-meadow-09cc5f11e.yml).
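Django can check several items from this checklist automatically with python manage.py check --deploy. As a plain-Python illustration of what a few of those items mean, the sketch below inspects a dictionary of settings values. deployment_warnings is a hypothetical helper and covers only DEBUG, ALLOWED_HOSTS and SECRET_KEY, not the full checklist.

```python
from typing import Any, Dict, List


def deployment_warnings(settings: Dict[str, Any]) -> List[str]:
    """Flag a few common production misconfigurations from Django's deployment checklist."""
    warnings = []
    if settings.get("DEBUG", False):
        warnings.append("DEBUG must be False in production")
    if not settings.get("ALLOWED_HOSTS"):
        warnings.append("ALLOWED_HOSTS must list the production host names")
    secret_key = settings.get("SECRET_KEY", "")
    # startproject generates development keys prefixed with "django-insecure-".
    if not secret_key or secret_key.startswith("django-insecure-"):
        warnings.append("SECRET_KEY must be a strong secret loaded from the environment")
    return warnings
```

In a real project the values would come from django.conf.settings; the plain dictionary keeps the sketch independent of Django.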

Hosting a live version of the application was a great learning experience, and it allowed us to send our public version, populated with example data, to potential testers and clients. This put our project in the hands of others without us needing to be physically present with the code, which brought in more user-testing feedback, more quickly.

Unfortunately, the public host version of our application has since been disabled because its hosting costs were too high.

Documentation

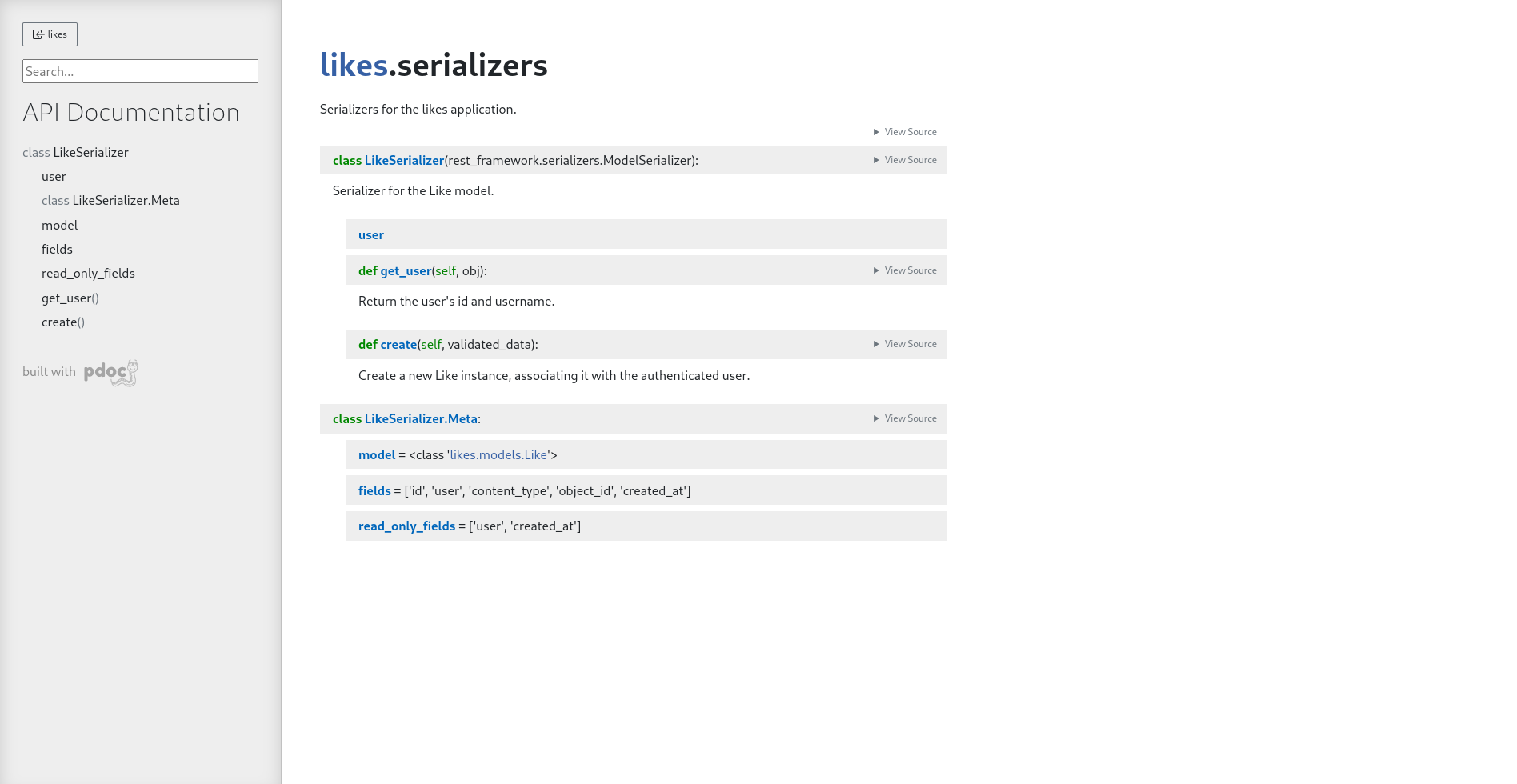

We used pdoc to auto-generate documentation from the docstrings in our Django code. This has produced extensive API documentation, available at pdocs/index.html.

To rebuild (from the backend folder), run: pdoc backend/ accounts/ articles/ events/ api/ comments/ companyinformation/ exampledata/ forums/ likes/ reportdiscussion/ reports/ search/ --output-dir pdocs

The output folder is backend/pdocs/

Any apps created in future should also be added to the pdoc command (e.g. after search/).
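To avoid forgetting a new app, the argument list could be built from the folder structure instead of by hand. The sketch below assumes that every Django app folder contains an apps.py file (as created by manage.py startapp) and that backend/ is the project package; build_pdoc_command is a hypothetical helper that produces an equivalent command in alphabetical order, not a script in our repository.

```python
from pathlib import Path
from typing import List


def build_pdoc_command(backend_root: str = ".", project_package: str = "backend",
                       output_dir: str = "pdocs") -> List[str]:
    """Build a pdoc command listing the project package plus every app folder found."""
    root = Path(backend_root)
    # Treat any folder with an apps.py as a Django app (assumption, see above).
    apps = sorted(
        folder.name
        for folder in root.iterdir()
        if folder.is_dir() and (folder / "apps.py").is_file() and folder.name != project_package
    )
    targets = [f"{project_package}/"] + [f"{name}/" for name in apps]
    return ["pdoc", *targets, "--output-dir", output_dir]
```

Running subprocess.run(build_pdoc_command(), check=True) from the backend folder would then regenerate the documentation, including any newly added app.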

Here is a snapshot:

Further, as a result of rigorous linting checks described earlier, every method and class in our Django application has been documented.

We generate this documentation to give future developers an easy-to-browse API reference for our code, which can be extended seamlessly simply by writing Python docstrings.

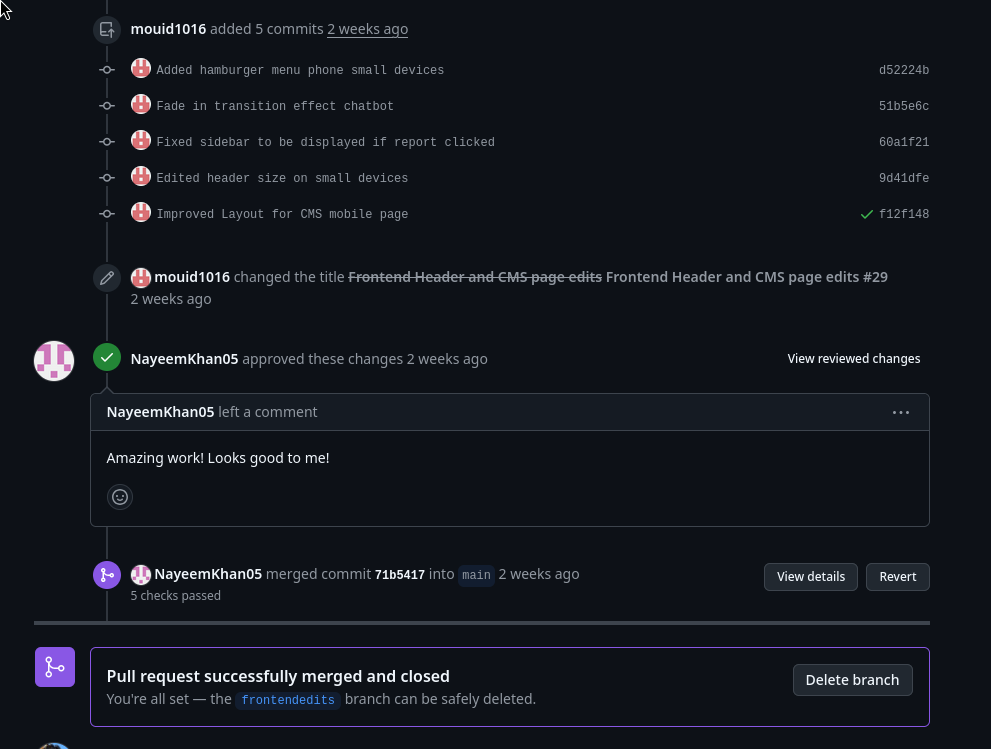

Pull Requests

Any change to our code must first be proposed as a pull request (PR). A team member creates a branch, implements their feature, and then proposes merging it into main using the GitHub web app.

The reason for this requirement is so that we can:

- Keep track of changes to our code and easily revert in case of breaking changes (which are unlikely considering our CI tests)

- Give the team member making the PR a chance to document their changes with context and key information.

Branch Protection

We have locked the main branch using GitHub branch protection rules [7], so no commits to main are possible without a reviewed PR. This removes the possibility of accidentally changing the main branch or merging untested code, either of which could compromise the stability of our project.

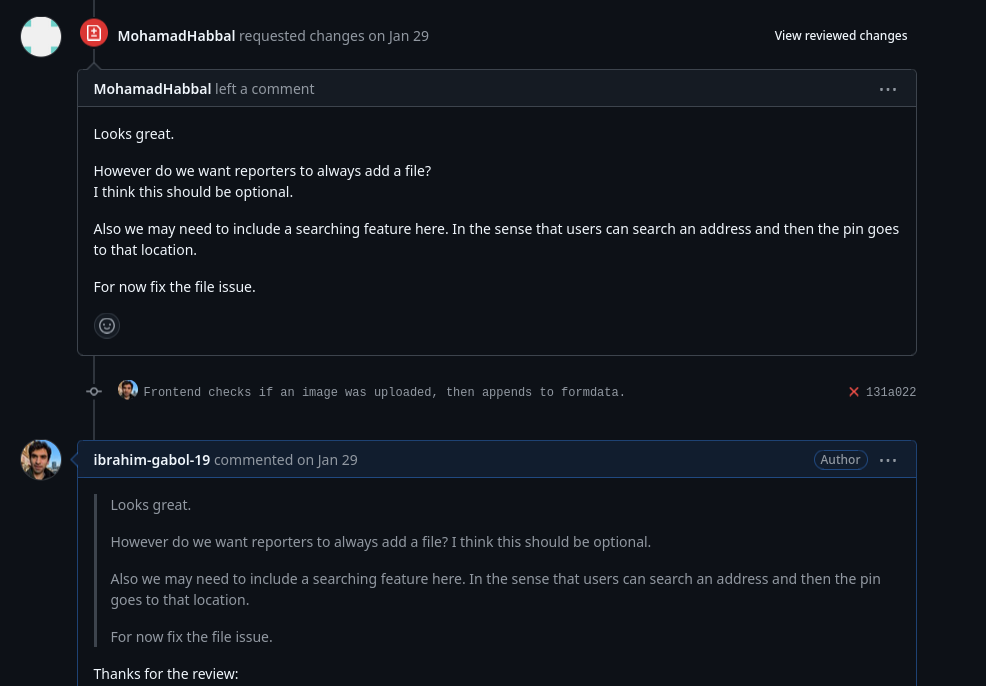

Code Reviews

Furthermore, every pull request is required to have been reviewed by at least one other team member before allowing it to be merged. This has again been implemented using GitHub branch protection rules. The reason for this is to improve code quality and reduce the likelihood of introducing bugs or regressions.
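Branch protection rules like these can also be set through GitHub's REST API endpoint for updating branch protection (PUT /repos/{owner}/{repo}/branches/{branch}/protection). The sketch below only builds the request and does not send it. branch_protection_request is hypothetical, the owner, repository and token values are placeholders, and applying the rules to administrators (enforce_admins) is an assumption rather than a documented setting of ours.

```python
import json
import urllib.request
from typing import List, Optional


def branch_protection_request(owner: str, repo: str, token: str, branch: str = "main",
                              required_reviews: int = 1,
                              status_checks: Optional[List[str]] = None) -> urllib.request.Request:
    """Build (but do not send) a request that protects `branch` with required PR reviews."""
    payload = {
        # Optionally require named CI checks to pass before merging.
        "required_status_checks": (
            {"strict": True, "contexts": status_checks} if status_checks else None
        ),
        "enforce_admins": True,  # assumption: rules apply to admins too
        "required_pull_request_reviews": {
            "required_approving_review_count": required_reviews,
        },
        "restrictions": None,  # no extra restrictions on who can push
    }
    url = f"https://api.github.com/repos/{owner}/{repo}/branches/{branch}/protection"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )
```

Sending it with urllib.request.urlopen would require a token with admin rights on the repository; required_reviews=1 corresponds to the "at least one other team member" rule.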

Code reviews have proven invaluable:

- They have encouraged team communication, expanding on ideas and iterating on implementations

- They force the code to be tested on at least one other machine, which surfaces hard-to-notice bugs.

- They spread knowledge of the codebase: even a team member who did not work on a PR's feature gains a better understanding of the current state of the codebase by reviewing it.

Overall, code reviews have been a cornerstone of the quality of our software and our teamwork.

Compatibility testing

We have tested the backend and frontend applications on Windows, Linux and macOS machines, as well as Android and iOS devices. On each, we navigated the frontend, interacted with the site and submitted forms across the different pages. Because Python and React are widely used, they are well supported across platforms.

The results were excellent: every operating system and web browser we tested could run our site. As described below, however, there were minor issues with WebGPU compatibility.

Windows

Linux

MacOS

iOS

Android

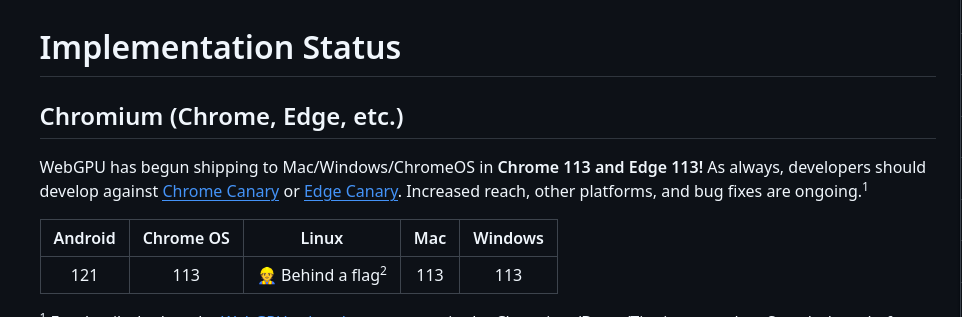

WebGPU compatibility

There was one key consideration when testing compatibility: the WebLLM JavaScript package we used to run the large language model (LLM) artificial intelligence (AI) in the browser relies on WebGPU [8].

WebGPU is a relatively new web technology, so we had to research browser compatibility. Fortunately, the WebGPU implementation status table [9] shows that support is broad across OSes and browsers, and this matched our experience: every platform we tested was able to run the LLM.

Out of the box, Chromium-based browsers, including Microsoft Edge, support WebGPU. Safari and Firefox, on the other hand, offered only experimental support, which was not enabled by default at the time of our testing. However, over 75% of desktop users will still be able to use the LLM without any configuration [10], so this is not a significant issue. Browser support for WebGPU is expected to grow as more browsers adopt it [9].

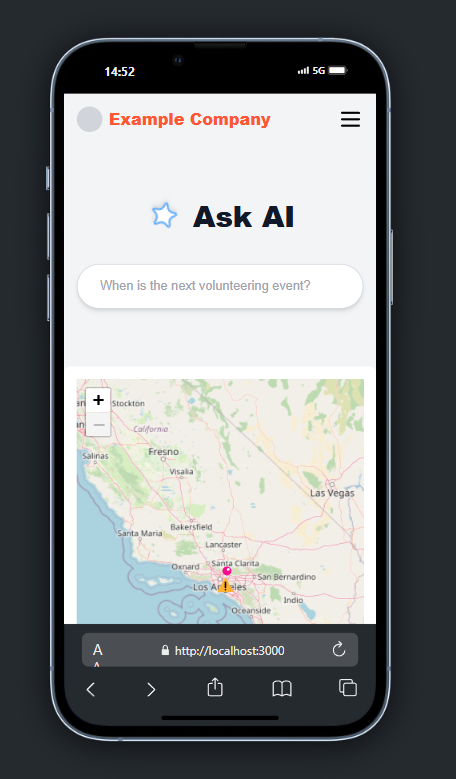

Responsive Design Testing

Every page of our frontend application is responsive. We used browser responsive-design tools to test different phone resolutions and ensure our website worked well. The results were as follows:

We can confidently say that the application is functional at all main device resolutions, including phones, tablets, laptops and desktops, which means we can support the majority of devices.

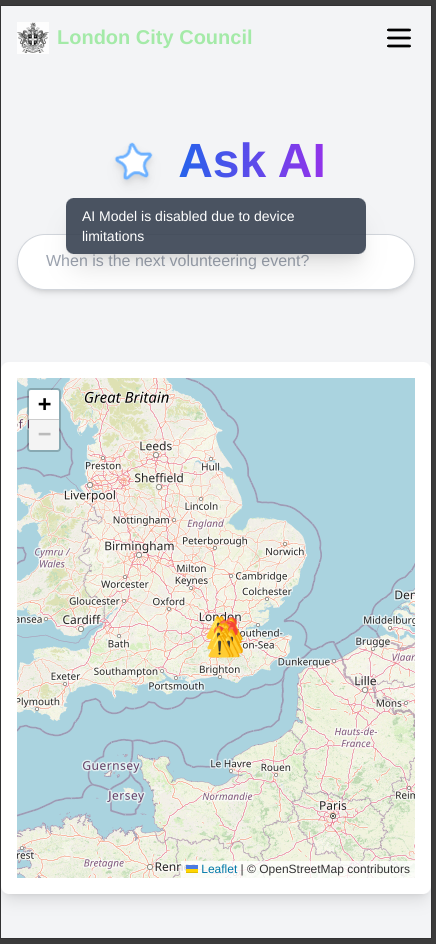

Performance/stress testing

Our backend ran on Azure's Premium v3 tier and our frontend was deployed as an Azure Static Web App. From the user's perspective, the main source of 'stress' is the local browser LLM on our frontend. It was able to run on:

- An Acer Aspire with 4GB RAM and an Intel i3 CPU.

- A ThinkPad E14 Gen 5 with an i5 CPU and 16GB RAM.

- A Dell XPS with 8GB RAM.

Unfortunately, mobile devices experienced significant slowdown when running the LLM, so the LLM is disabled when the application is viewed on a mobile device.

User Acceptance Testing

Testers

We chose testers with a variety of roles. They are real-world testers, but have been anonymised.

We had 17 testers in total. Testers with similar backgrounds have been merged, and their feedback collated under a single fictional persona.

- Alice: 20-year-old student from a Computer Science background (Represents 5 testers)

- Candice: 22-year-old student from a non-Computer Science background (Represents 5 testers)

- Dave: 40-year-old professor from a Computer Science background (Represents 3 testers)

- Sam: 50-year-old business owner & community member from a non-Computer Science background (Represents 4 testers)

Test Cases

Each test involved a full user showcase: we demonstrated our application, then let the testers navigate the site and interact with it.

- 1: March Demo Day

  - Dave

- 2: February Demo Day

  - Eve

- 3: External demo

  - Candice

  - Alice

Feedback

| Person | Category | Feedback | + / - |
|---|---|---|---|
| Alice | Accounts Page | Add an eye button to see the password being entered. | + |
| | Home Page | The local AI is really cool, as is the semantic search; make it stand out more! | + |
| | | The ability to edit on the manage tab is really nice. | + |
| | | The site is very user-friendly and well built. | + |
| | AI Implementation | How can you push the LLM idea? (At this point, only the AI Search had been implemented, but this feedback inspired us to implement AI generation on content management pages, report summarisation, creation of reports with AI...) | + |
| | Reporting | When selecting a report, it should be highlighted in some way on the map to let you know. | - |
| | | The date reported needs to be clarified (currently it just says the date). | - |
| | | It is not clear how to filter reports (the filter was at the top; it has now moved to pills at the bottom). | - |
| | Events | Make featured events more centred and clearer. | - |
| | | Add a back arrow when pressing an event. | - |
| | Manage | Search icon is cut off. | - |
| | | The new article/event button shouldn't be on the top left; it should be on the bottom right (or top right). | - |
| | | The padding is not correct on the left side of the cards. | - |
| | | The tick on the top left of a card is curly; make it look like the x and star (straight). | - |
| | | Text in the cards should have padding. | - |
| | | Titles in the cards should be limited to 2 lines and then truncated (with ellipsis). | - |
| | Miscellaneous | The Miscellaneous section shouldn't be part of the CMS page; it should be a settings button. | - |
| | | The logo preview does not work for Miscellaneous. | - |
| Candice | Home Page | It is not clear what the map on the home page does. | - |
| | | It looks a bit too plain; maybe let the business change the colours? | - |
| | Miscellaneous | The map boundaries should not be a square with hard-to-use sliders, but a circle radius with one slider. | - |
| | | The circle should be draggable. | - |
| | Reporting | The discussion sidebar overflows after too many messages. | - |
| Dave | Home Page | Remove the animation on the home page star; it does not look professional. | - |
| | Events | Keep the top bar when viewing the detail page for events (and articles). | - |
| | Manage | Move the back and save buttons closer to the centre when editing articles and events. | - |
| Sam | Home Page | You could create a short summary each morning of what happened the previous day. | - |
| | Miscellaneous | You could tailor the application to building management instead of communities, e.g. show a floor plan of the building instead of the map. | - |
| | Services section | You could have a section where users can see whom to contact for plumbing issues, repairs, etc. | - |
| | Profile | Allow users to enter their location so that when a report is made nearby, they receive a notification that an incident has been reported close to where they live, e.g. "There has been a plumbing incident reported near you. Would you like to call a plumber?" | - |

Conclusion

The user feedback has been crucial. After prolonged development, certain issues become invisible to us but are immediately obvious to new users. This fresh perspective has allowed us to address key problems and create a more user-friendly, HCI-centred product. Both technical and non-technical users highlighted specific pain points, such as the AI feature being unclear and concerns about button placement and colour choices.

References

[1] Perforce, "What is Linting," Perforce. [Online]. Available: https://www.perforce.com/blog/qac/what-is-linting. [Accessed March 2025].

[2] GitHub, "About continuous integration with GitHub Actions," GitHub Docs. [Online]. Available: https://docs.github.com/en/actions/about-github-actions/about-continuous-integration-with-github-actions. [Accessed March 2025].

[3] Stack Overflow, "Tailwind CSS is not working in Vite React," Stack Overflow. [Online]. Available: https://stackoverflow.com/questions/75329285/tailwind-css-is-not-working-in-vite-react. [Accessed March 2025].

[4] Tailwind CSS, "Vite Guide," Tailwind CSS Docs. [Online]. Available: https://v3.tailwindcss.com/docs/guides/vite. [Accessed March 2025].

[5] Django Software Foundation, "Testing overview," Django 5.1 Documentation. [Online]. Available: https://docs.djangoproject.com/en/5.1/topics/testing/overview/. [Accessed March 2025].

[6] Django Software Foundation, "Deployment checklist," Django 5.1 Documentation. [Online]. Available: https://docs.djangoproject.com/en/5.1/howto/deployment/checklist/. [Accessed March 2025].

[7] GitHub, "About protected branches," GitHub Docs. [Online]. Available: https://docs.github.com/en/repositories/configuring-branches-and-merges-in-your-repository/managing-protected-branches/about-protected-branches. [Accessed March 2025].

[8] MLC AI, "Deploying WebLLM," MLC AI Documentation. [Online]. Available: https://llm.mlc.ai/docs/deploy/webllm.html. [Accessed March 2025].

[9] W3C, "Implementation status of the GPU for the Web," GPUWeb Wiki. [Online]. Available: https://github.com/gpuweb/gpuweb/wiki/Implementation-Status. [Accessed March 2025].

[10] StatCounter, "Desktop Browser Market Share Worldwide - February 2025," StatCounter Global Stats. [Online]. Available: https://gs.statcounter.com/browser-market-share/desktop/worldwide. [Accessed March 2025].