TESTING


Testing Strategy

IFRC's Sampling Tool is a web-based application designed to support data collection and analysis.

Given its critical role in the sampling process, it is essential to test the tool thoroughly to ensure that it meets the standards set out in the project requirements and IFRC's guidelines.

Testing Scope

The tool is designed to collect data from real-world situations. Testing should therefore simulate real-world scenarios and account for the unpredictable nature of human behaviour, so that the tool can handle both expected and unexpected responses and give users sensible actions and guidance. Each feature of the tool should be tested to ensure that it works properly and that users can access and use every function without constraints.

The aim of the tool is to simplify the calculation and analysis process in IFRC's sampling studies. Tests should therefore also confirm that the tool meets IFRC's specific needs and requirements.

Test Methodology

Unit testing will be used to test the individual components of the tool, ensuring that each class and function performs as expected. Integration testing will be used to ensure that the sampling tool functions properly as a cohesive whole, with all its components and modules working together seamlessly to provide the intended functionality. User acceptance testing will be conducted to evaluate the tool's design and user experience.

Test Principle

The development of the IFRC Sampling Tool followed the Continuous Integration (CI) principle.

Automated Testing

Continuous Integration

Continuous Integration is the process of frequently merging code changes from multiple developers into a central repository, followed by an automated build and testing process that checks for integration issues. The goal of CI is to catch integration problems early, so that they can be fixed before they become more serious and difficult to resolve. With CI, we can quickly identify issues in the code before it is merged into the main codebase.

The tool was tested continuously during development, using unit testing and integration testing to ensure that its functions worked correctly. Any failed test was analysed and fixed immediately. With this approach, we aimed to keep the tool usable, functional and stable throughout development.

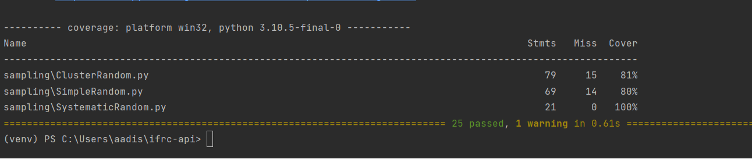

We use pytest to run the test suite and generate our test and coverage reports.
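As a minimal sketch of the kind of module pytest collects, the example below tests a hypothetical `adjust_for_non_response()` helper; the name and formula are assumptions for illustration, not the tool's actual code. pytest discovers functions whose names start with `test_` and reports any failing `assert`; with the pytest-cov plugin installed, running `pytest --cov` adds a coverage report.

```python
import math


def adjust_for_non_response(sample_size: float, non_response_rate: float) -> int:
    """Inflate a sample size to allow for expected non-response.

    Hypothetical helper: divides by the expected response rate and rounds up.
    """
    if not 0 <= non_response_rate < 1:
        raise ValueError("non_response_rate must be in the range [0, 1)")
    return math.ceil(sample_size / (1 - non_response_rate))


def test_no_non_response_only_rounds_up():
    assert adjust_for_non_response(96.04, 0.0) == 97


def test_ten_percent_non_response():
    # 96.04 / 0.9 = 106.71..., rounded up to 107
    assert adjust_for_non_response(96.04, 0.1) == 107


def test_negative_rate_is_rejected():
    # Covers the error branch as well as the normal path.
    try:
        adjust_for_non_response(96.04, -0.1)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a negative rate")
```

In a suite that already depends on pytest, `with pytest.raises(ValueError):` expresses the last test more concisely.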


All three calculators underwent automated unit testing, which checked their functionality and accuracy. The unit test coverage for each calculator is summarized below:

Integration testing with Postman

For the IFRC sampling tool, integration testing involves testing the interactions between the tool's components and modules to ensure that they work together as expected.

Our goal is to verify that the user interface communicates correctly with the backend modules and that user inputs are processed correctly.

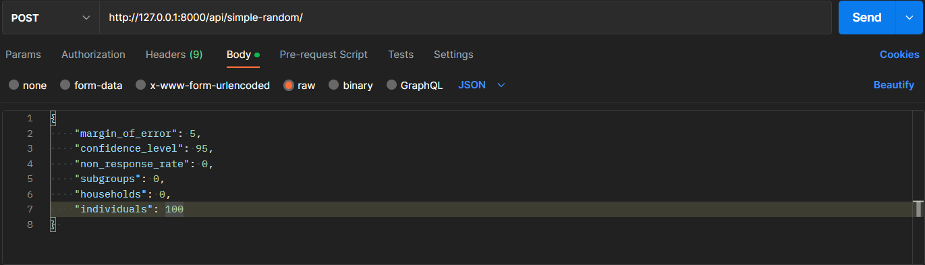

Integration testing was carried out with Postman, which sends HTTP requests to the Django REST Framework (DRF) API endpoints and displays the responses they return. This makes it easy to test different scenarios and verify that the API behaves as expected.

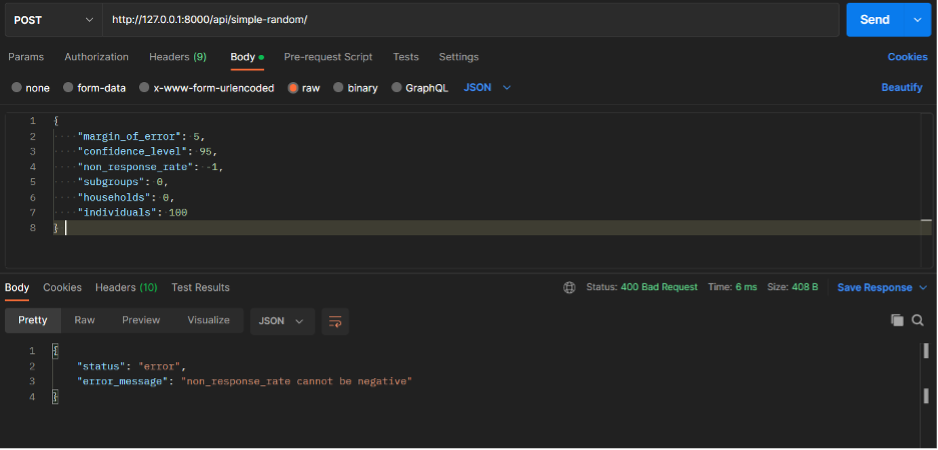

Here, a POST request is sent to the development server running on localhost:8000. The request body contains all the parameters as raw JSON.
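The same request can be scripted with Python's standard library, which makes a Postman check easy to re-run automatically. This is a sketch only: the endpoint path `/api/simple-random/` and every field name except `non_response_rate` are hypothetical placeholders that would need to match the tool's actual API.

```python
import json
import urllib.error
import urllib.request

# Hypothetical endpoint path; only the host and port come from the Postman test.
URL = "http://localhost:8000/api/simple-random/"


def build_request(payload: dict, url: str = URL) -> urllib.request.Request:
    """Build a POST request whose body is the payload encoded as raw JSON."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> tuple[int, str]:
    """Send the request and return (status code, response body).

    urllib raises HTTPError for 4xx/5xx responses, so the error is caught
    and its status code and body are returned instead.
    """
    try:
        with urllib.request.urlopen(request) as response:
            return response.status, response.read().decode("utf-8")
    except urllib.error.HTTPError as error:
        return error.code, error.read().decode("utf-8")


if __name__ == "__main__":
    # Hypothetical parameter names apart from non_response_rate.
    payload = {
        "confidence_level": 0.95,
        "margin_of_error": 0.05,
        "non_response_rate": 0.1,
    }
    status, body = send(build_request(payload))
    print(status, body)
```

Catching `HTTPError` matters here because the error cases, such as a 400 response, are exactly what the negative tests need to inspect.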

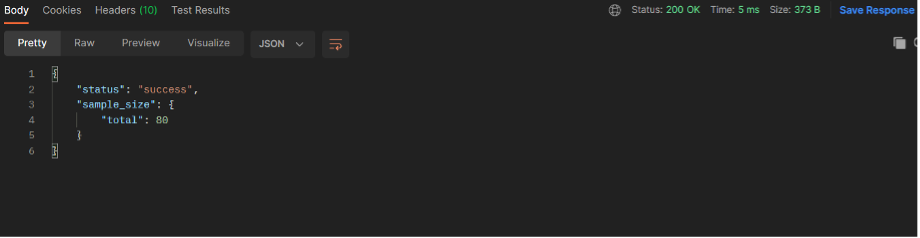

This test generates the following output in Postman:

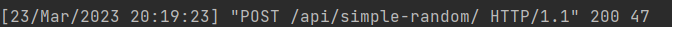

The Django-based backend displays the following on the terminal:

The status code 200 means that the request was successful and the server processed it without any errors. The content length of 47 is the size of the response body in bytes. This confirms that the test passed and that the Simple Random sample size calculator's API is up and running.

The API was also tested to check that it returns errors for invalid input:

An error is returned when non_response_rate is negative, which is not a valid value, and the error message is displayed correctly in the output.

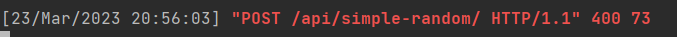

The Django backend displays the following on the terminal:

This indicates that the status code is 400 (Bad Request) and that the request did not generate a sample size.
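To repeat both checks without clicking through Postman, the expected outcomes can be written as a table of cases. The sketch below is self-contained: `fake_simple_random_api()` is a hypothetical stub that imitates the behaviour described above (200 for valid input, 400 for a negative `non_response_rate`); against the real server, a function that sends the HTTP request would take its place.

```python
import json
from typing import Callable

# A handler takes a request payload and returns (status code, response body).
Handler = Callable[[dict], tuple[int, str]]


def fake_simple_random_api(payload: dict) -> tuple[int, str]:
    """Hypothetical stub imitating the validation behaviour seen in Postman."""
    rate = payload.get("non_response_rate")
    if not isinstance(rate, (int, float)) or rate < 0:
        return 400, json.dumps({"non_response_rate": ["Must be non-negative."]})
    return 200, json.dumps({"message": "ok"})  # placeholder success body


# (description, payload, expected status code)
CASES = [
    ("valid non_response_rate", {"non_response_rate": 0.1}, 200),
    ("negative non_response_rate", {"non_response_rate": -0.1}, 400),
]


def run_cases(handler: Handler, cases=CASES) -> list[str]:
    """Return a message for every case whose status code was unexpected."""
    failures = []
    for description, payload, expected in cases:
        status, body = handler(payload)
        if status != expected:
            failures.append(f"{description}: expected {expected}, got {status} ({body})")
    return failures


if __name__ == "__main__":
    failures = run_cases(fake_simple_random_api)
    print("all cases passed" if not failures else "\n".join(failures))
```

Keeping the cases as data means a new scenario for another API is one extra tuple rather than another manual Postman run.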

Similar tests were carried out for every API to confirm that each one is well tested and working.

Unit testing

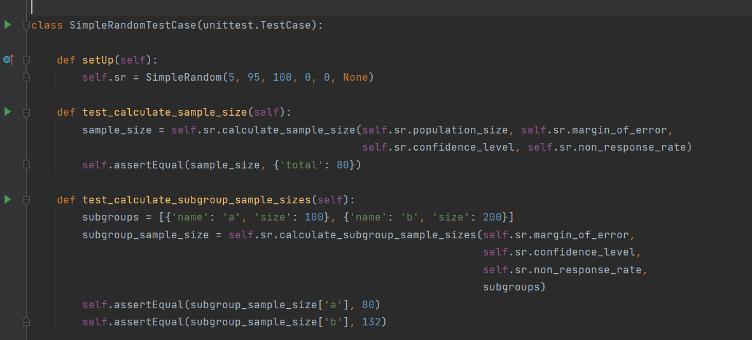

In this code, two unit tests check the calculate_sample_size() and calculate_subgroup_sample_sizes() methods of the SimpleRandom calculator class.

For the first test, a sample size is calculated with the calculate_sample_size() method using specific inputs. The assertEqual() method then checks that the calculated sample size equals the expected value of 80 for the parameters defined in the setup.

For the second test, subgroup sample sizes are calculated using the calculate_subgroup_sample_sizes() function with specific inputs. Then, the assertEqual() method is used twice to check if the calculated sample sizes for each subgroup are equal to the expected sample sizes of 80 and 132, respectively.

Both tests are isolated from external dependencies and only test the behaviour of their respective functions. This is the essence of unit testing: testing individual units of code in isolation to ensure that they behave as expected.
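The tests described above follow the standard `unittest` pattern of building the object under test in `setUp()` and checking results with `assertEqual()`. The sketch below reproduces that pattern with a hypothetical `SimpleRandom` class based on Cochran's formula, n = z²p(1 − p)/e², rounded up. The constructor arguments, the formula and the expected values 97 and 62 are assumptions for illustration; they are not the tool's actual parameters and do not reproduce the 80 and 132 from the real tests.

```python
import math
import unittest


class SimpleRandom:
    """Hypothetical simple random sampling calculator (Cochran's formula)."""

    def __init__(self, z: float, margin_of_error: float):
        self.z = z
        self.margin_of_error = margin_of_error

    def calculate_sample_size(self, proportion: float) -> int:
        # n = z^2 * p * (1 - p) / e^2, rounded up to a whole respondent
        n = self.z ** 2 * proportion * (1 - proportion) / self.margin_of_error ** 2
        return math.ceil(n)

    def calculate_subgroup_sample_sizes(self, proportions: dict) -> dict:
        # One independent sample size per subgroup
        return {name: self.calculate_sample_size(p) for name, p in proportions.items()}


class SimpleRandomTests(unittest.TestCase):
    def setUp(self):
        # 95% confidence (z = 1.96) and a 10% margin of error
        self.calculator = SimpleRandom(z=1.96, margin_of_error=0.1)

    def test_calculate_sample_size(self):
        # 1.96^2 * 0.25 / 0.01 = 96.04, rounded up to 97
        self.assertEqual(self.calculator.calculate_sample_size(0.5), 97)

    def test_calculate_subgroup_sample_sizes(self):
        sizes = self.calculator.calculate_subgroup_sample_sizes({"urban": 0.5, "rural": 0.2})
        self.assertEqual(sizes["urban"], 97)
        # 1.96^2 * 0.16 / 0.01 = 61.47, rounded up to 62
        self.assertEqual(sizes["rural"], 62)


if __name__ == "__main__":
    unittest.main()
```

Because `setUp()` runs before every test method, each test gets a fresh calculator and cannot be affected by state left over from another test. pytest also collects `unittest.TestCase` classes, so tests written this way appear in the same pytest report.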

Each class was unit tested in the same way as the example above, covering as many branches as possible. This helped uncover hidden problems and supported further refactoring, ensuring the proper functionality and stability of the codebase.

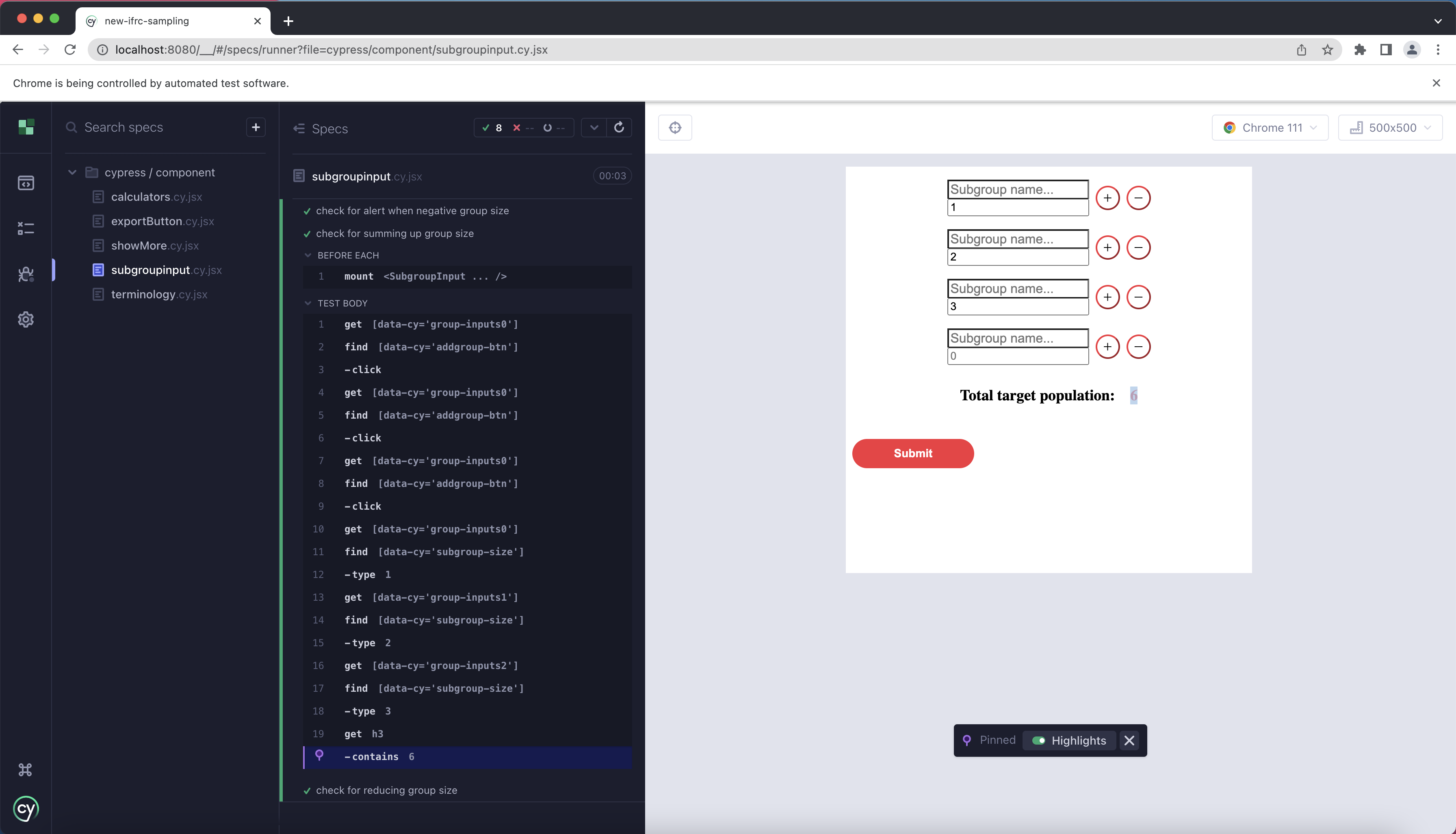

For frontend testing, we used Cypress to test the React components. Cypress is a popular JavaScript testing framework that can be used to carry out component tests and end-to-end (E2E) tests for the IFRC Community Sampling tool.


Component testing involves testing individual parts of the application, while E2E testing involves testing the entire application from start to finish. For component tests, we use the Cypress API to simulate user interactions with the various components of the application and check that they function correctly. The following is a screenshot showing the Cypress test for a component.

For E2E testing, we use Cypress to automate the entire user journey, from opening the application to submitting data, and verify that the application functions as expected. We also chose Cypress because it provides features such as time travel, which lets us see exactly what happens at each step of a test, making it an effective tool for testing the IFRC Community Sampling tool's functionality and user experience. The following is a screenshot showing the Cypress test for the entire application.

User Acceptance Testing

We asked four individuals for feedback to evaluate how well the IFRC Community Sampling tool meets user requirements and to identify possible enhancements.

Tester

The testers were chosen deliberately so that they share some characteristics and differ in others. They are our potential users, i.e., IFRC employees involved in sampling work.

Note: our testers are real people who chose to take the tests anonymously, so we identify them with pseudonyms and AI-generated portraits to protect their anonymity and confidentiality.

Test Case

Four instances (circumstances) were used in the test, and the testers worked through each one before giving feedback. The testers were given a set of acceptance requirements, rated each requirement on a five-point Likert scale and added their own comments.

Test case 1

We let the testers go through the flowchart, enter any data they wanted and check whether they obtained convincing results.

Test case 2

We gave the testers a simulated real-world scenario (a carefully designed research situation) and asked them to work through it and give us their sampling solution using the tool.

Test case 3

We gave the testers another sampling scenario, but this time without any hint about which sampling method to use. We asked them to export the results as a PDF file.

Feedback

| Acceptance Requirement | Totally Disagree | Disagree | Neutral | Agree | Totally Agree | Comments |
|---|---|---|---|---|---|---|
| The sampling tool runs to the end of the job | 0 | 0 | 0 | 0 | 4 | +Everyone agreed that the sampling tool runs to the end of the job. There were no noticeably long waits. |
| The whole sampling process is intuitive, not confusing | 0 | 0 | 0 | 0 | 4 | +Users commented that the interface is very clear and easy to understand, which results from following HCI principles. +The button options are very handy. |
| Quick and clear response to every user action | 0 | 0 | 0 | 0 | 4 | +Everyone agreed that responses are clearly given. |
| Accurate answers for sampling | 0 | 0 | 0 | 0 | 4 | +Good answers to questions. |
| A high-quality PDF can be exported successfully | 0 | 0 | 0 | 0 | 4 | +Everyone agreed that the exported PDF is of high quality. |
| Reasonable summary and results | 0 | 0 | 0 | 1 | 3 | +Satisfactory summary. |
| Clear and precise guidelines | 0 | 0 | 0 | 1 | 3 | +The terminology is explained clearly. +The instructions are clear and easy to follow. |
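The feedback table can also be condensed into one mean score per requirement. The sketch below codes the five response options as 1 (Totally Disagree) to 5 (Totally Agree); this numeric coding is our assumption and was not part of the original survey. The counts are copied directly from the table.

```python
# Likert counts per requirement, in the order
# (Totally Disagree, Disagree, Neutral, Agree, Totally Agree), from the table.
RESPONSES = {
    "The sampling tool runs to the end of the job": (0, 0, 0, 0, 4),
    "The whole sampling process is intuitive, not confusing": (0, 0, 0, 0, 4),
    "Quick and clear response to every user action": (0, 0, 0, 0, 4),
    "Accurate answers for sampling": (0, 0, 0, 0, 4),
    "A high-quality PDF can be exported successfully": (0, 0, 0, 0, 4),
    "Reasonable summary and results": (0, 0, 0, 1, 3),
    "Clear and precise guidelines": (0, 0, 0, 1, 3),
}


def mean_score(counts: tuple[int, ...]) -> float:
    """Average rating, coding the five response options as 1 to 5."""
    total = sum(counts)
    return sum(score * n for score, n in enumerate(counts, start=1)) / total


if __name__ == "__main__":
    for requirement, counts in RESPONSES.items():
        print(f"{requirement}: {mean_score(counts):.2f}")
```

Under this coding, the last two requirements score (4 × 1 + 5 × 3) / 4 = 4.75 and the others score 5.00.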

Conclusion

We are pleased to see that the test participants showed a favourable attitude towards, and enthusiasm for, the sampling tool as they used it and provided comments. Their opinions, both positive and negative, meant a lot to us. These comments have influenced us and will continue to guide us as we rework the sampling tool to make it more sophisticated and thorough, so that it can assist an increasing number of people.

Melody

21 years old, an IFRC volunteer studying Computer Science at UCL.

She has some basic knowledge of data science and statistics, but lacks experience in field research.

Bob

24 years old, an IFRC staff member in a developing country

He is an IFRC staff member, but this is his first time conducting a survey involving community sampling.

He has nearly no background in statistics and sampling.

Alice

22 years old, an IFRC staff member experienced in sampling

She has been working in IFRC for 3 years, and she has conducted many surveys involving community sampling.

Her research usually involves complex data analysis and places high demands on the sampling method and its efficiency.

Helios

35 years old, an editor at IFRC

His English is limited, and this is his first time using a tool like this.

He has nearly no background in statistics and sampling.