Testing

Testing Strategy

As our product focussed mainly on usability for the teachers and children at the schools, we placed a stronger emphasis on user acceptance testing than on unit or integration testing. We tested our product with live users every week, whether fellow students or visitors at events, fixing any bugs they found and implementing the features they asked for. We also held a weekly Friday meeting with our NAS clients to discuss the current state of the project and obtain direct feedback from them.

Unit and Integration Testing

Because our end users are non-technical occupational therapists and primary school children, we deliberately restricted what users can do in the application. This made unit testing straightforward, as there was only a handful of functions, all of which could be tested manually from the frontend.

The AI outputs varied widely, and the only tests we created were manual checks of whether the outputs were satisfactory for a handful of demo songs. For example, in one instance the AI did not produce any background prompts; after manual debugging, we realised that the AI had replied that the lyrics were not interesting enough for it to extract any meaningful backgrounds.

Compatibility testing

Compatibility testing was done manually on Mac and Windows computers. Our client asked us to deploy on a Windows computer with Intel hardware.

The application runs smoothly on Intel-based machines running Windows, and performs the animations reasonably well on Windows machines using other chips (AMD was tested).

However, electron-builder (the library used to package our product) builds the package for a specific OS, including the way paths are referenced. As UNIX-based systems (macOS and Linux being the major ones) use a different file system, the relative references to the image and audio files are not configured properly and the files fail to load. Therefore, our system currently works only on Windows-based systems.
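One way such path problems are commonly avoided is to keep asset references in a single, separator-neutral form. The sketch below assumes that the failures come from Windows-style backslashes in relative asset paths, which is an assumption and not something confirmed by our testing. The helper names `toPortablePath` and `joinPortable` are hypothetical and are not part of the project's code.

```typescript
// Hypothetical helpers, shown only to illustrate the idea.

// Replaces Windows-style backslashes with forward slashes, which are
// accepted as separators on Windows, macOS and Linux alike.
function toPortablePath(assetPath: string): string {
  return assetPath.replace(/\\/g, "/");
}

// Joins relative path segments with "/" and collapses repeated separators.
// Intended for relative asset paths only, not for URLs such as "file:///".
function joinPortable(...segments: string[]): string {
  return segments
    .map(toPortablePath)
    .filter((segment) => segment.length > 0)
    .join("/")
    .replace(/\/{2,}/g, "/");
}

// Example: a reference written with Windows separators.
console.log(joinPortable("assets\\images", "background.png"));
```

Whether this is enough to make the packaged application load its assets on macOS or Linux would still need to be checked on those systems.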

The performance on each type of machine is detailed in the Performance/stress testing section below.

Performance/stress testing

The machines that we tested on had the following specs:

- Windows with high-end Intel hardware: Intel i9 CPU

- Windows with mid-range Intel hardware: Intel i5-11400 CPU, 8 GB RAM and Intel UHD 740 GPU

- Windows with Non-Intel hardware: AMD CPU

Although we could have used higher-end hardware, we agreed that our clients will not have access to such hardware, so testing on it would not be realistic. Most of our clients at the NAS schools had access to CPUs but only rarely to GPUs, so to extend our affordability criteria to those without Intel GPUs we chose to test CPU functionality only.

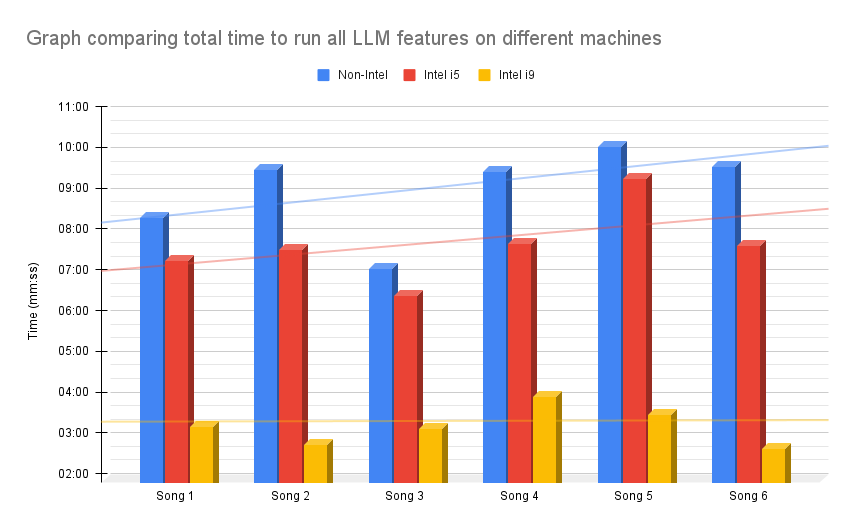

From the frontend, we timed how long it took to generate all LLM outputs on each of the 3 devices that we had access to. The accompanying graph shows the distribution of these times. Although the generation time depends slightly on the length of the song (and hence its lyrics), there is a clear trend across all songs: the better Intel CPUs perform drastically better than the other options. Moreover, the non-Intel laptop had an AMD Ryzen 7000 series CPU, whose benchmarks are better than those of an 11th gen Intel i5. Despite this, the Intel CPUs consistently outperformed the AMD CPU, supporting our theory that OpenVINO is well suited to execution on Intel hardware. Although the average time difference between these two is only around 1 minute, this adds up to a significant amount of time when running the program as a batch.

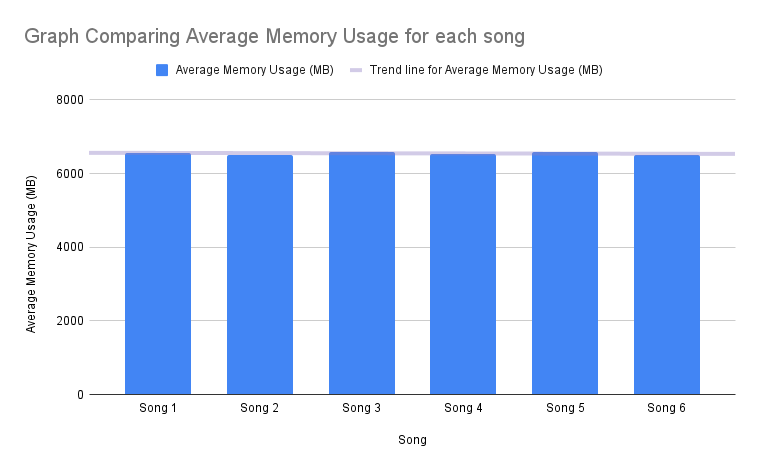

We also measured the average memory usage when analysing a song with the LLM, which turned out to be a very consistent 6547 MB. This is relatively high RAM usage, which may impede the user's normal use of the laptop. However, as our product contains an option to run the LLM commands as a batch overnight, we believe this will have less of an impact than it otherwise would. Moreover, when testing on a machine with only 4 GB of memory, the LLM did successfully lower its memory usage to fit the machine, at the cost of a slightly longer generation time, so the product is not completely inaccessible to those with devices with less than 6 GB of RAM.

For the Whisper and Stable Diffusion testing, we have not created a graph, as the differences between systems were small (at most 30 seconds per song).

For Whisper:

- the non-Intel machine took an average of 01:06 per song

- the Intel i5 machine took an average of 00:53 per song

- the Intel i9 machine took an average of 00:45 per song

For Stable Diffusion, we tested generating the full set of 3 backgrounds and 3 objects (6 images in total):

- the non-Intel machine took 01:16 per song (of which 1 minute was spent loading the code)

- the Intel i5 machine took 01:12 per song

- the Intel i9 machine took 00:46 per song

The non-Intel and Intel i5 machines had similar specs and so produced similar times, but the i9 machine had a much better CPU and loaded the code much faster than the others.
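To compare these timings as ratios rather than raw durations, the mm:ss values listed above can be converted to seconds. The following is a minimal sketch of that arithmetic; the function names `parseMinSec` and `speedup` are illustrative and are not taken from our code base.

```typescript
// Parses an "mm:ss" string, the format used for the timings above, into seconds.
function parseMinSec(value: string): number {
  const parts = value.split(":");
  if (parts.length !== 2) {
    throw new Error(`Expected mm:ss, got "${value}"`);
  }
  return Number(parts[0]) * 60 + Number(parts[1]);
}

// Returns how many times faster the candidate is than the baseline.
function speedup(baselineSeconds: number, candidateSeconds: number): number {
  return baselineSeconds / candidateSeconds;
}

// Per-song timings copied from the lists above.
const whisper = { nonIntel: "01:06", intelI5: "00:53", intelI9: "00:45" };
const stableDiffusion = { nonIntel: "01:16", intelI5: "01:12", intelI9: "00:46" };

const whisperRatio = speedup(parseMinSec(whisper.nonIntel), parseMinSec(whisper.intelI9));
const diffusionRatio = speedup(
  parseMinSec(stableDiffusion.nonIntel),
  parseMinSec(stableDiffusion.intelI9),
);

console.log(`Whisper: i9 is ${whisperRatio.toFixed(2)}x faster than the non-Intel machine`);
console.log(`Stable Diffusion: i9 is ${diffusionRatio.toFixed(2)}x faster than the non-Intel machine`);
```

With the figures above, this gives ratios of 66/45 (about 1.47) for Whisper and 76/46 (about 1.65) for Stable Diffusion.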

User acceptance testing

As our product mainly focussed on user satisfaction and ease of use for the schools, we ran multiple rounds of user testing with various age groups:

- Round 1 - Haggerston School and Enfield Grammar School

- Round 2 - Helen Allison School Visit

- Round 3 - AI for good showcase

- Simulated testers from Colleagues

Round 1 - Haggerston School and Enfield Grammar School

In our initial user tests, we collected data from 18 secondary school children using Microsoft Forms. We have attached graphs of the most important feedback below. Our product was still in its prototype phase and did not have many functionalities. However, the students seemed to be more attracted to the shader mode than the particle mode, which was more visually appealing but less stimulating.

Specifically, the prototype had a very basic particle system, and the shader worked for only one song.

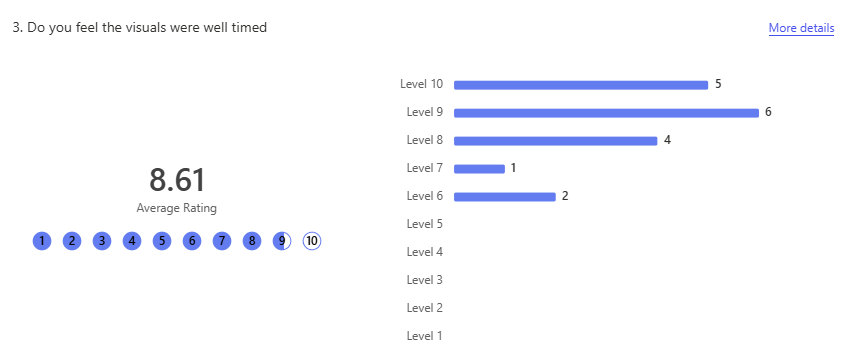

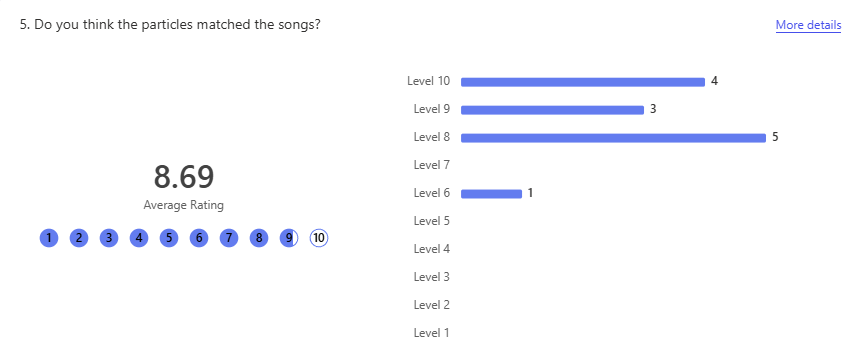

From the graphs, we can see that the students widely found our product interesting, with the majority feeling that the visuals were well timed to the song and that the particles chosen by the AI matched the theme of the song. One notable response was that the whiteboard looked plain, so we increased the maximum particle limit to 50 particles and the particle spawn rate to 2 per second.
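The interaction between a particle cap and a spawn rate can be sketched as follows. Only the two constants (50 particles, 2 per second) come from the text above; the `createSpawner` function and its frame-based design are assumptions made for illustration and do not describe our actual particle system.

```typescript
// Values taken from the text above.
const MAX_PARTICLES = 50;
const SPAWN_RATE_PER_SECOND = 2;

// Hypothetical spawner: returns a function that is called once per frame
// with the elapsed time and the number of particles currently alive, and
// answers how many new particles to create in that frame.
function createSpawner(
  maxParticles: number = MAX_PARTICLES,
  ratePerSecond: number = SPAWN_RATE_PER_SECOND,
): (deltaSeconds: number, liveCount: number) => number {
  // Fractional particles accumulated over frames shorter than one spawn interval.
  let carry = 0;

  return (deltaSeconds: number, liveCount: number): number => {
    carry += deltaSeconds * ratePerSecond;
    const due = Math.floor(carry);
    carry -= due;
    // Never exceed the cap; particles that do not fit are simply dropped.
    const room = Math.max(0, maxParticles - liveCount);
    return Math.min(due, room);
  };
}

// Example: two quarter-second frames with an empty screen.
const spawn = createSpawner();
console.log(spawn(0.25, 0)); // half a particle accumulated, nothing spawned yet
console.log(spawn(0.25, 0)); // one whole particle is now due
```

Accumulating the fractional remainder keeps the long-run rate at 2 particles per second regardless of the frame rate.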

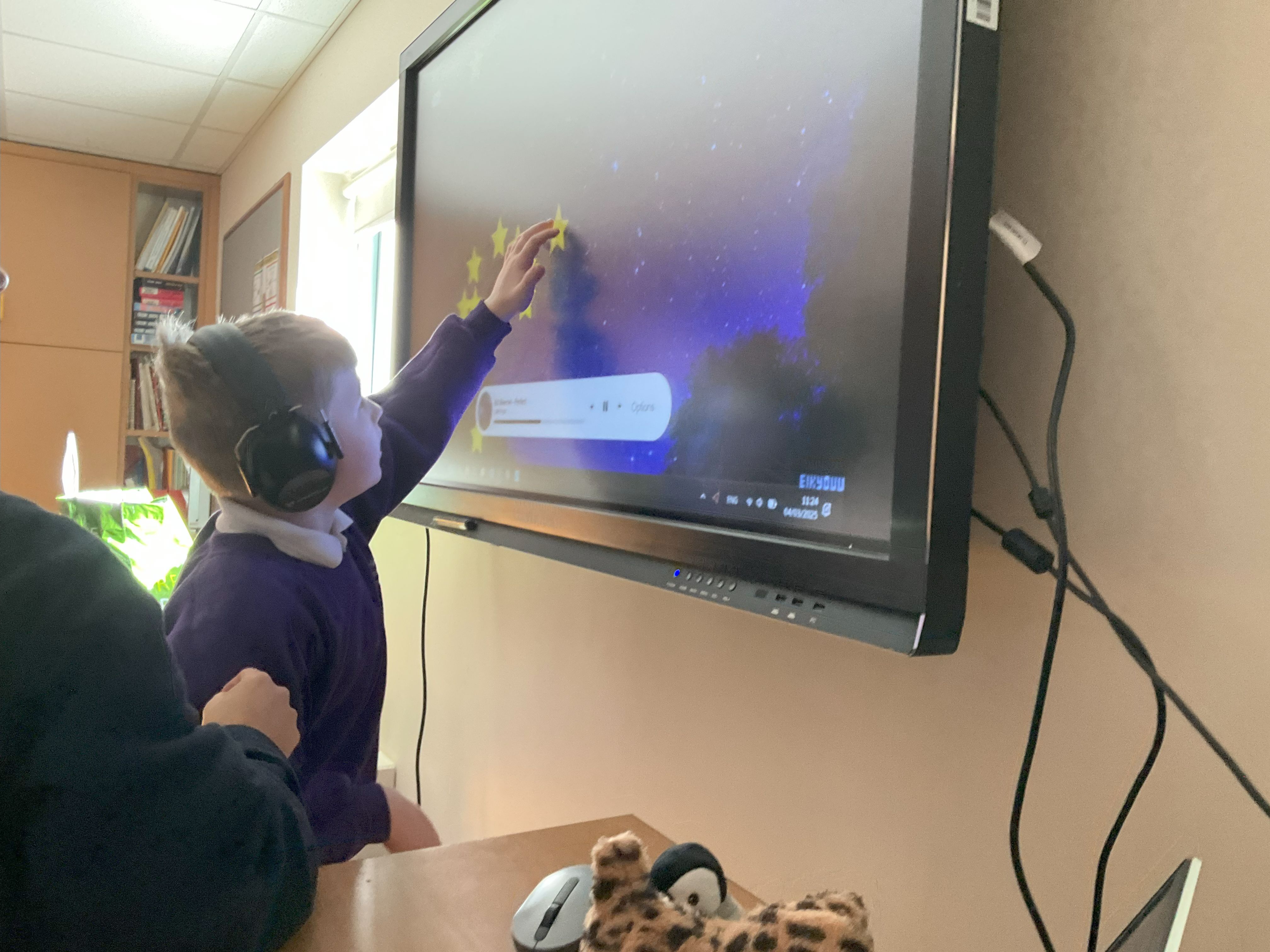

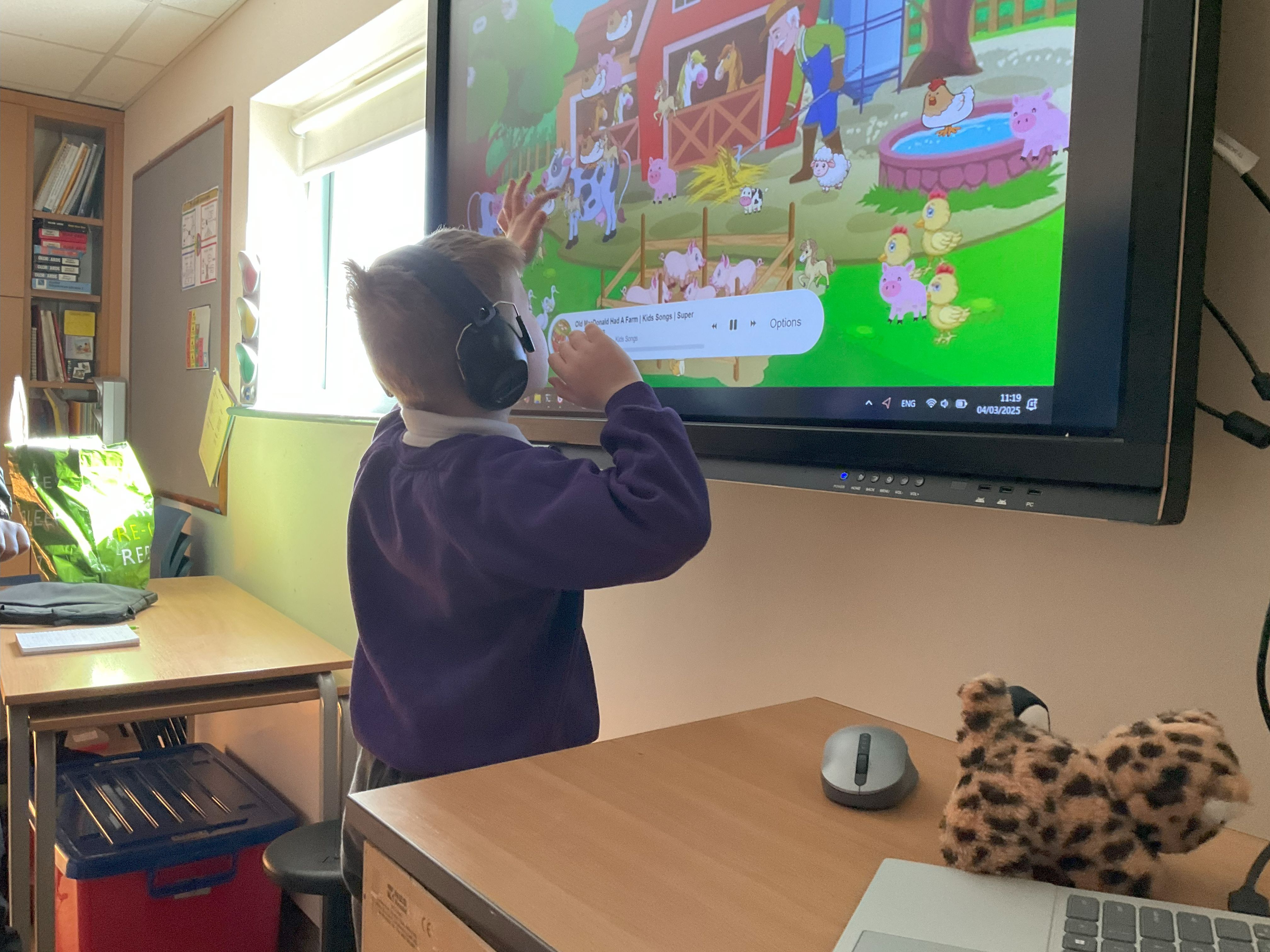

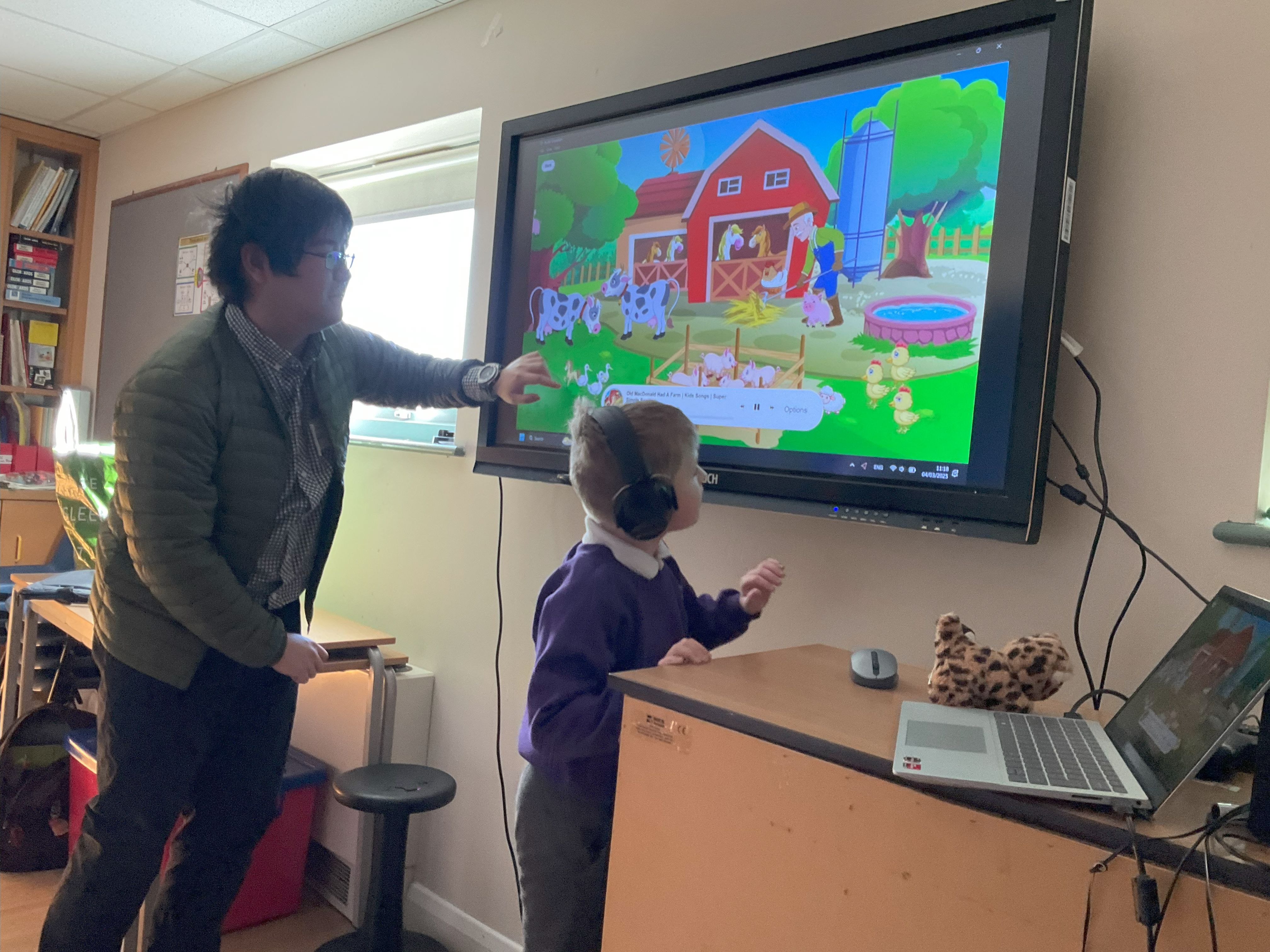

Round 2 - Helen Allison School Visit

We visited Helen Allison School on 4th March 2025 and tested our product with the school children, who have varying levels of autism. Helen Allison School is part of the National Autistic Society and run by one of our clients, whom we had met online at regular intervals. This test was by far the most important, as we tested with the actual users who will be using our product after the end of our project. We managed to get feedback from 6 students and 11 teachers at the school.

Overall, the students really enjoyed our product, especially the particle physics mode. As expected, they tended to prefer nursery rhymes such as Old MacDonald, but to our surprise Ed Sheeran's Perfect and Dance Monkey were also very popular with the children. The last-minute adjustment of allowing GIFs for the background seemed to amuse them especially, compared with a simple background image. Although the majority of them were non-verbal, their actions gave us a lot of insight into areas for improvement, such as:

- Some of them accidentally clicked outside the application on the Windows taskbar, opening other applications

- The majority of the testers tried interactions which we did not expect, such as dragging around the screen or holding down

- Initially, a single touch produced multiple images, each chosen separately and at random from the images linked to the selected particles. However, a teacher suggested that making one image appear per click would entice the children to play with our product multiple times

- We noticed that the testers had a hard time interacting with the particles, as the interaction area of the mouse was too small

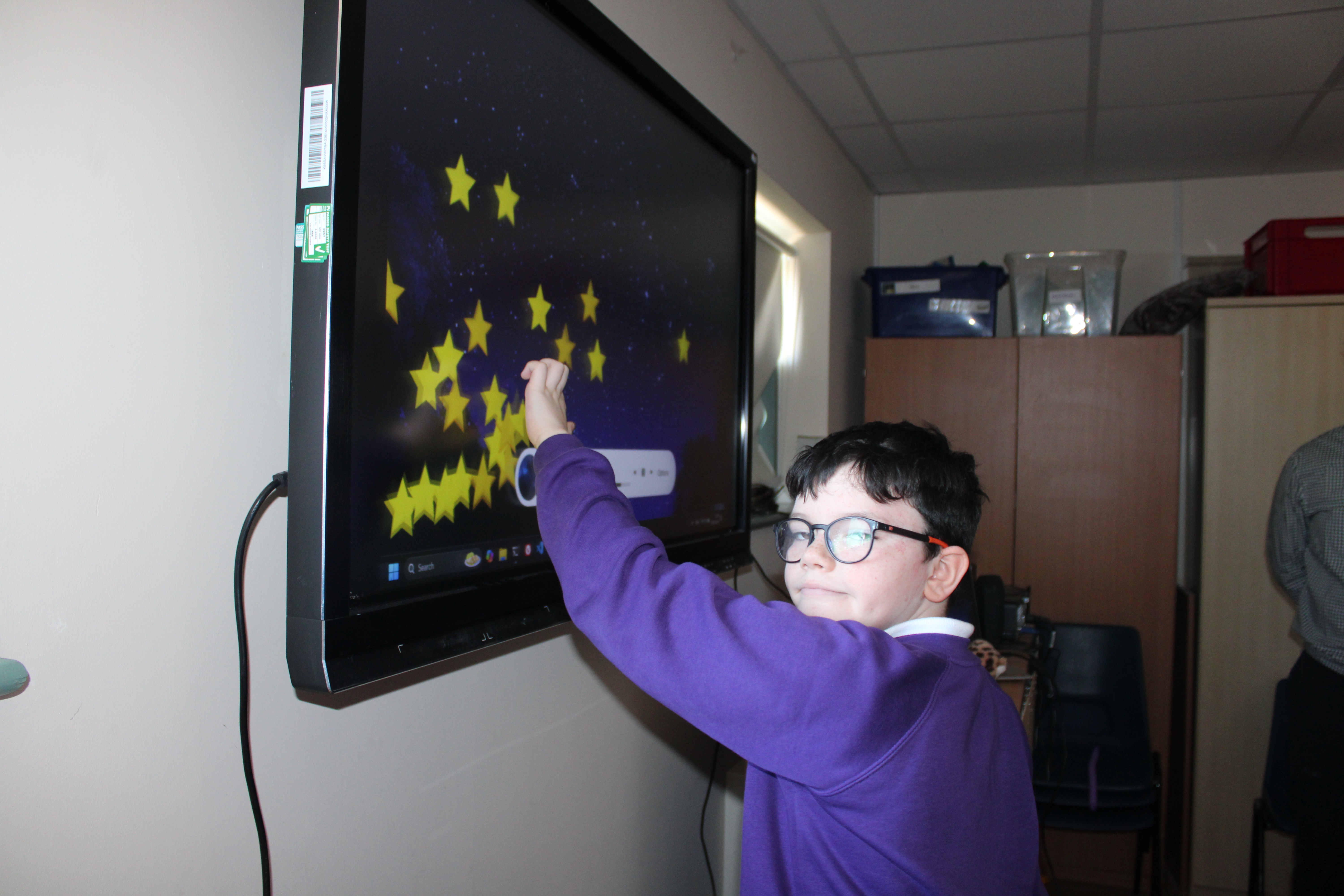

From this feedback, we made many adjustments, such as continuous particle streams when holding down, a full screen mode, and a circular overlap area for the particles to interact with the mouse. The particle image selection was revamped so that a single image is selected per click, and the background image was made a GIF. The images below show the students and teachers interacting with our product.

Because there were multiple groups, we were set up in a particular room that the students were not very familiar with. Some of them did not wish to leave their comfortable areas to test our product. This incident led us to realise that portability was a much bigger requirement than we had initially believed.

Unfortunately, we could not test the Philips Hue lights, as we found out that the Ethernet and Wi-Fi that the machine is connected to must have the same SSID. Below is the documentation supplied by the head of NAS' IT department explaining how to improve when creating apps for public environments such as schools.
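The circular overlap area mentioned above amounts to a distance check between the pointer and each particle. The following is a minimal sketch under stated assumptions: the `Point` type, the function names and the radius of 60 pixels are all hypothetical, since the actual values are not recorded here.

```typescript
interface Point {
  x: number;
  y: number;
}

// Hypothetical radius in pixels; the value used in the product is not stated.
const POINTER_RADIUS_PX = 60;

// True when the particle lies inside the circle centred on the pointer.
function isWithinPointerArea(
  particle: Point,
  pointer: Point,
  radius: number = POINTER_RADIUS_PX,
): boolean {
  const dx = particle.x - pointer.x;
  const dy = particle.y - pointer.y;
  // Comparing squared distances avoids a square root for every particle.
  return dx * dx + dy * dy <= radius * radius;
}

// Returns every particle the pointer currently overlaps.
function particlesUnderPointer<T extends Point>(
  particles: T[],
  pointer: Point,
  radius: number = POINTER_RADIUS_PX,
): T[] {
  return particles.filter((particle) => isWithinPointerArea(particle, pointer, radius));
}

// Example: one particle close to the pointer and one far away.
const hits = particlesUnderPointer(
  [{ x: 10, y: 10 }, { x: 500, y: 500 }],
  { x: 0, y: 0 },
);
console.log(hits.length);
```

A larger radius makes the particles easier to hit for users with less precise touch input, at the cost of less exact interaction.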

Round 3 - AI for good showcase

On 11th March 2025, UCL hosted an AI for good showcase, where company executives and others interested in applications of AI came and tested our products. We were able to test with many teachers and technology experts, getting very useful feedback for our final few adjustments and for potential future expansions of our product.

Here are some of our photos on the day:

We received a lot of positive feedback from the experts, including the following suggestions:

- Support for projectors and projecting onto the ground, with foot detection as motion input

- Support for multiple simultaneous touches, so that several students can interact seamlessly at once

Here, we also received feedback that our product would be great for creating a familiar environment for autistic children. A few teachers we talked with commented that they often find it hard to help children transition between primary and secondary school, as it is such a big jump in difficulty and the children are forced to move out of their comfort zones. They commented that our product would be great in helping the children connect their new locations to their old, comfortable ones. This was valuable feedback, further reinforcing the importance of extending the portability of our product.

Simulated testers from Colleagues

Throughout the project, we continued to test with our colleagues and obtain their feedback, to gain insight from a technical point of view. Below are some of their comments and the improvements made in response. All of these comments have

Tester 1

Comment - “button colours are unintuitive”

In our initial prototype stage, the majority of the buttons in the program were a greyish colour. The tester commented that he could not tell which buttons did what, and that colour coding the buttons might make the product more intuitive. We colour coded them so that unavailable buttons are a lighter shade of grey, buttons which run a long process are blue and delete buttons are red.

Tester 2

Comment - “Don’t know what shaders work”

In a previous iteration, the shader images were supplied after the song creation, so shader mode was not active by default. We created a placeholder message asking the user to upload the shader visuals if they were not present. However, this message was only shown after the user had tried to click on a song. The tester commented that it would be better to know this on the main song selector page. We implemented this by greying out the songs which did not have a shader image and making them unclickable in shader mode.

Tester 3

Comment - “Can you not close the popup page when you click outside”

Formerly, the only way to exit a popup, such as the song info, was to click the exit button at the top right. However, as the area outside the popup was darkened, the tester thought it was unintuitive that clicking outside the popup did not close it.