Technology Review

Possible Solutions

For solutions to a system like Blueprint AI, we considered two aspects.

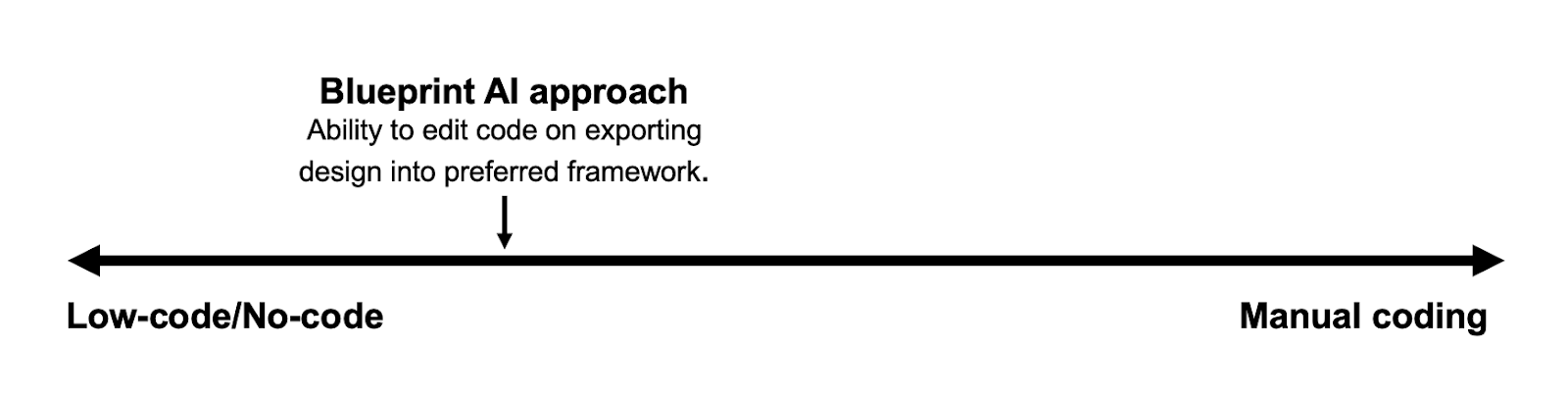

1. Presence of Coding

This aspect refers to how much code users need to write to get their frontend designs working, taking into account their programming skill level. The increasing prevalence of low-code/no-code products such as Wix and Microsoft PowerApps signals a shift in the traditional software development workflow, lowering the barriers to entry for software development. This is greatly empowering for the average user, as the technical skills needed are vastly reduced [11]. Furthermore, the low-code/no-code market was estimated at USD 6.78 billion in 2022 and is projected to grow significantly over the next decade [12].

While a low-code/no-code design would make sense given the current market, it would limit the flexibility and control a user has when designing their frontend. The user would be restricted to pre-designed components and structures [13]. Additionally, a low-code/no-code design would not make our student-developed project stand out against established industry-standard solutions such as Wix and PowerApps.

On the other hand, requiring too much coding from the user would cater to more experienced developers and would be more time-consuming, a counter-intuitive design choice for Blueprint AI.

With the intended users being those who require a quick, reliable way to scaffold and generate frontend designs -

regardless of their know-how and technical expertise in frontend development, our approach would need to cater to this type of user.

As such, an approach requiring more coding than conventional low-code/no-code platforms but at the same time not be a necessary

component for the frontend generation. This comes in the form of the exporting feature where users can edit the exported code in the

frontend framework they need, a welcomed feature over low-code/no-code platforms like Wix which lack an exporting feature. Users of all

programming backgrounds would thus find that using Blueprint AI is valuable in terms of the time savings it affords and also the flexibility

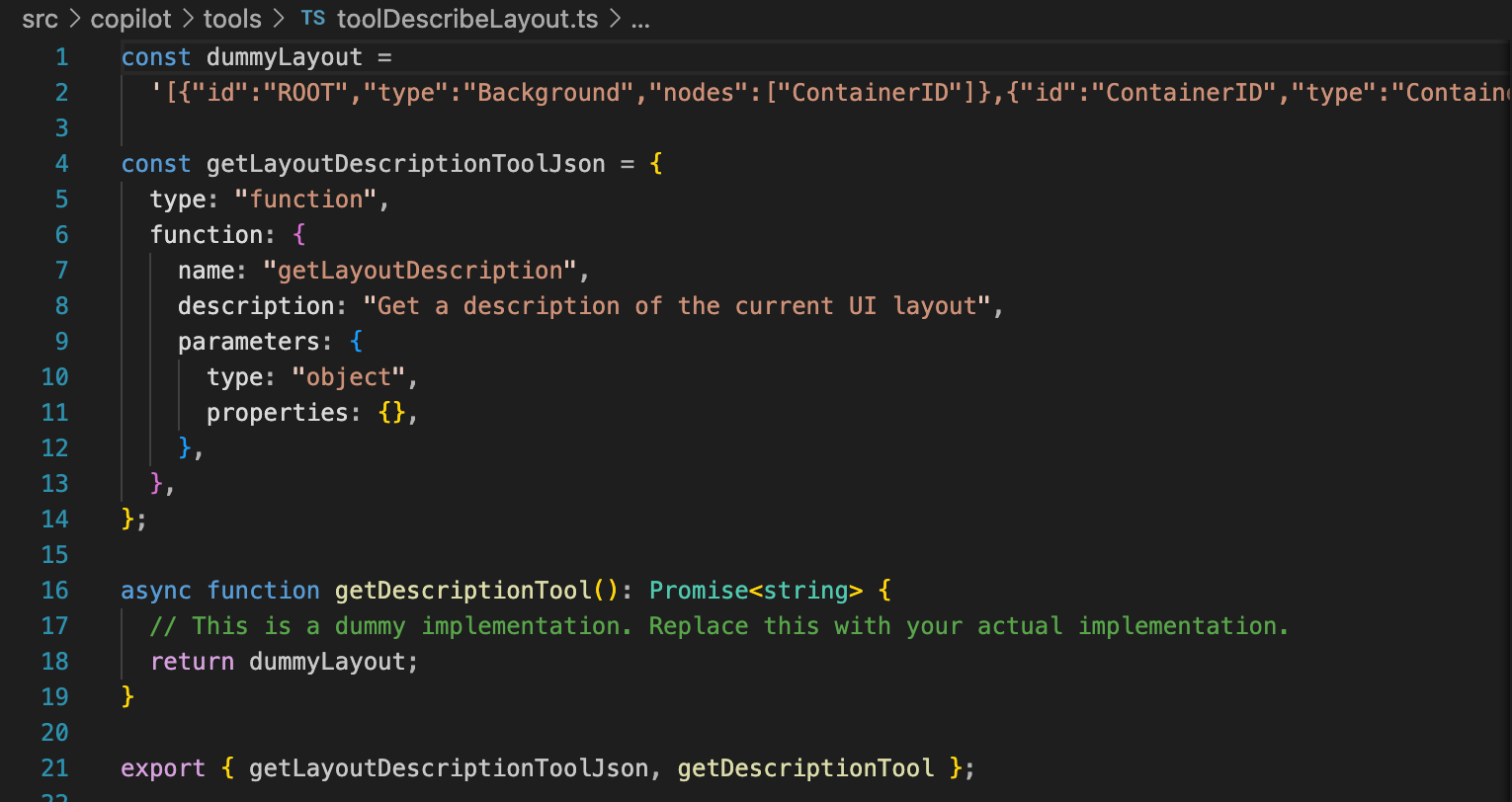

in editing the frontend code generated. Figure 5 depicts a general indication of where Blueprint AI falls in the spectrum of coding required by the user.

Figure 5. Coding spectrum approach used for Blueprint AI

2. Prominence of AI

Currently, popular industry solutions like Wix, Visily and PowerApps do feature AI tools to help generate frontend designs (as discussed under Section 1.1), but these tools are relegated to being an additional feature rather than being integrated throughout every part of the design process that the user interacts with. We aim to make Blueprint AI stand out from existing solutions by making AI more prominent; studies have shown that AI is extremely useful in expediting frontend development, alleviating workloads through automation [14].

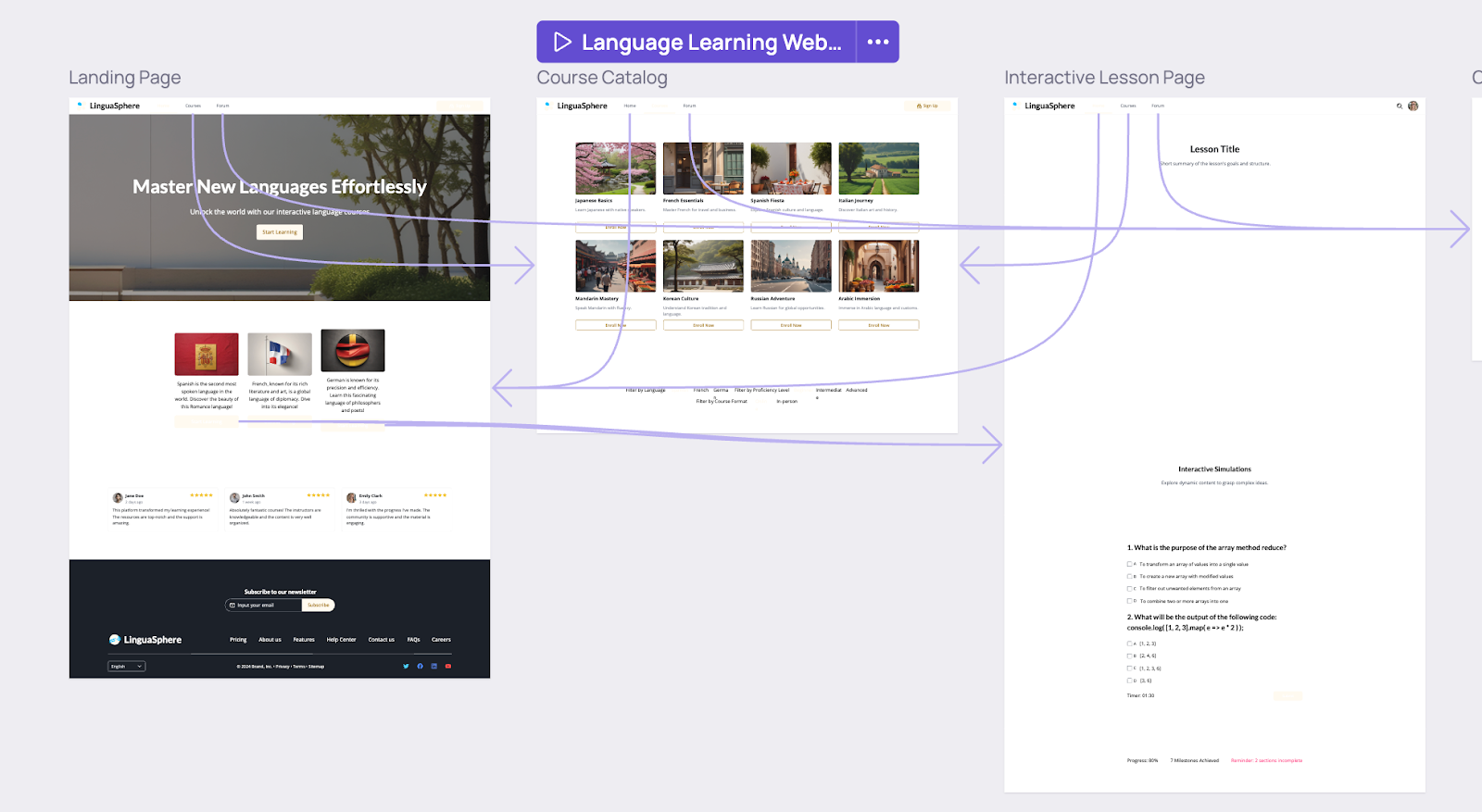

To make AI more prominent, users are prompted from the start to tell the AI what they want through text, an image, or both. We also plan to include a chatbot through which the user can tell the AI what changes to make. This allows prompts to be tailored to the user’s specific needs, enhancing the user’s flexibility and control beyond manually tweaking the frontend source code. Additionally, the AI recommends the different pages that users may need, since a full-fledged website typically requires multiple pages, 12-30 on average according to [15].

With this, AI is integrated seamlessly into every step of the frontend development experience, streamlining the development process. With AI-driven UI generation, users are afforded efficiency and flexibility.

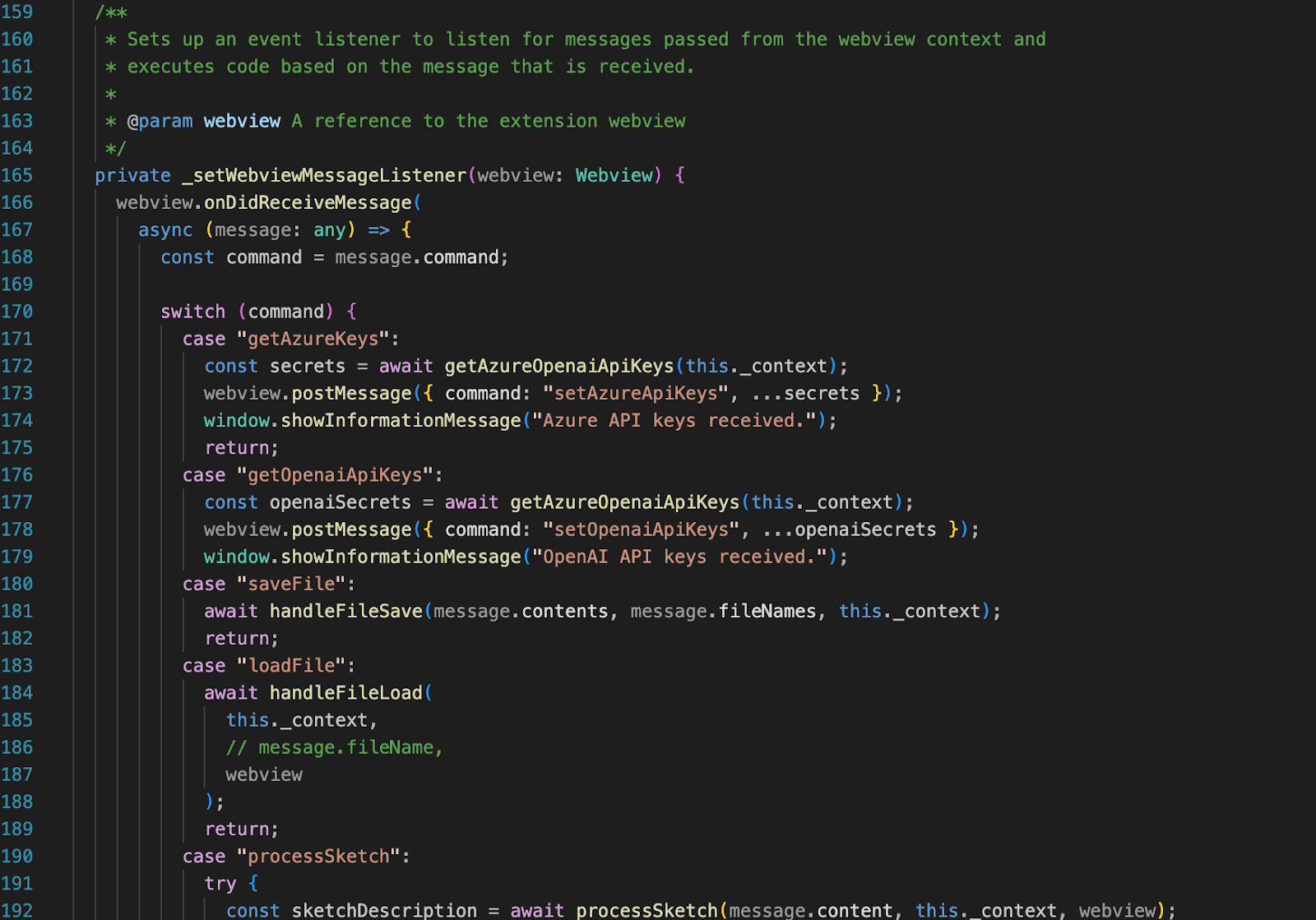

Figure 6 depicts the continuous integration of AI in Blueprint AI.

Figure 6. Continuous integration of AI in Blueprint AI

Devices

Algorithms

For UI layout recognition, we needed software that could effectively and efficiently extract a text description from screenshots of websites. This text description would then be used to tell the AI what kind of website the user wants to build. With this as a priority, we considered the following approaches:

1. Traditional Image Processing (OpenCV, cv2)

OpenCV (Open Source Computer Vision Library) is a computer vision and machine learning software library. It is widely used for real-time image and video processing, and it provides a comprehensive set of tools for tasks like object detection, facial recognition, motion tracking, and more. Its cross-platform capabilities and interfaces for common programming languages (C, C++, Python, Java) make it easier to develop with [17].

| Pros | Cons |
|---|---|
| No licensing cost; extensive documentation; versatile, as it supports a wide range of computer vision tasks. | Steep learning curve owing to the wide range of advanced tools available. |

Blueprint AI has no need for real-time image processing. This allowed us to narrow our search to libraries related to extracting a text description from an image, without having to pore over advanced libraries for heavy-duty image processing. Given that OpenCV is also widely used in industry, we considered it a suitable choice.

2. Deep Learning-Based Optical Character Recognition (OCR)

OCR is a technology that converts images (e.g., scanned documents, photos, or screenshots) into text that computers can read and edit. While OCR engines can differ in the types of images they can extract text from, their main function is to allow computers to recognise and extract text from images. Some OCR engines leverage AI and deep learning for image processing and for text detection and recognition, allowing for more robust functionality [18]. This use of AI is in line with Blueprint AI’s central theme. As such, we researched OCR engines with deep learning capabilities that specialise in extracting text from screenshots.

We narrowed our options down to two competing solutions: EasyOCR and Tesseract. Both are open-source systems which use AI for processing. The table below summarises what each OCR engine can do.

| OCR | Description | Standout Features | Drawbacks |
|---|---|---|---|
| EasyOCR | Python-based OCR with support for over 80 languages. Built on PyTorch and easy to implement and use [19]. Faster on GPU due to its deep learning-based architecture. | Provides higher accuracy for noisy, complicated images. This can be useful for complicated website layouts such as Chinese e-commerce platforms, which are known to be more intricate than their Western counterparts [20]. Handles multilingual text in a single image effectively. | Limited fine-tuning options. |
| Tesseract | A traditional OCR engine developed in part by Google, with comprehensive documentation found in [21]. Optimized for CPU and generally faster on CPU. | Highly customisable; supports custom training and fine-tuning. Affords high accuracy with clean input. | Struggles with noisy or distorted text. Requires separate language data files for each language. |

We decided to use EasyOCR, primarily because of its ease of installation and implementation with Python and its simple API. Tesseract would require more set-up to get running, and its implementation would be more tedious. Additionally, while both OCR engines need some form of image pre-processing before character recognition, EasyOCR requires less. Lastly, while AI capabilities are an important factor for Blueprint AI, fine-tuning the OCR to produce the highest-quality result is not a priority, as we want our system to focus on efficiency and speed so that users can rapidly scaffold their frontend development.

In Blueprint AI, we use cv2 (OpenCV) to clean up images before running them through EasyOCR for text extraction. cv2 prepares the image by adjusting brightness, removing noise, and sharpening text so that it is easier to read. This step is important because raw screenshots can be cluttered or blurry, making it harder for the OCR to recognise text accurately. Once the image is prepared, EasyOCR, a deep learning-based tool, scans it and extracts the text, even from complex website layouts. This setup helps us efficiently turn website screenshots into clear, structured descriptions that the AI can use to generate frontend designs.
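The final step, turning OCR output into a description, could look roughly like the sketch below. It assumes the detections have been handed over as JSON-like objects mirroring the (bounding box, text, confidence) entries that EasyOCR’s `readtext` returns. The `OcrDetection` type, the `describeLayout` function, and both threshold values are hypothetical, chosen for illustration rather than taken from Blueprint AI’s code.

```typescript
// Hypothetical shape mirroring one EasyOCR detection:
// four corner points, the recognised text, and a confidence in [0, 1].
interface OcrDetection {
  box: [number, number][]; // corner points as [x, y]
  text: string;
  confidence: number;
}

// Smallest x and y over the corner points, i.e. the top-left of the box.
function topLeft(d: OcrDetection): { x: number; y: number } {
  let x = Infinity;
  let y = Infinity;
  for (const [px, py] of d.box) {
    x = Math.min(x, px);
    y = Math.min(y, py);
  }
  return { x, y };
}

// Drops low-confidence detections, groups the rest into rows by vertical
// position, and joins each row left to right into one line of text.
function describeLayout(
  detections: OcrDetection[],
  minConfidence = 0.5, // assumed cut-off
  rowTolerance = 10 // assumed: max y-distance (px) from the row's first item
): string {
  const kept = detections
    .filter((d) => d.confidence >= minConfidence)
    .map((d) => ({ text: d.text, pos: topLeft(d) }))
    .sort((a, b) => a.pos.y - b.pos.y);

  const rows: { y: number; items: { text: string; x: number }[] }[] = [];
  for (const item of kept) {
    const last = rows[rows.length - 1];
    if (last !== undefined && Math.abs(item.pos.y - last.y) <= rowTolerance) {
      last.items.push({ text: item.text, x: item.pos.x });
    } else {
      rows.push({ y: item.pos.y, items: [{ text: item.text, x: item.pos.x }] });
    }
  }

  return rows
    .map((row) =>
      row.items
        .sort((a, b) => a.x - b.x)
        .map((i) => i.text)
        .join(" | ")
    )
    .join("\n");
}
```

Each output line then corresponds to one visual row of the screenshot, which is a simple way of preserving some layout information in the text passed to the AI.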

Programming Languages, Frameworks, Libraries, APIs

1. Programming Languages and Frameworks

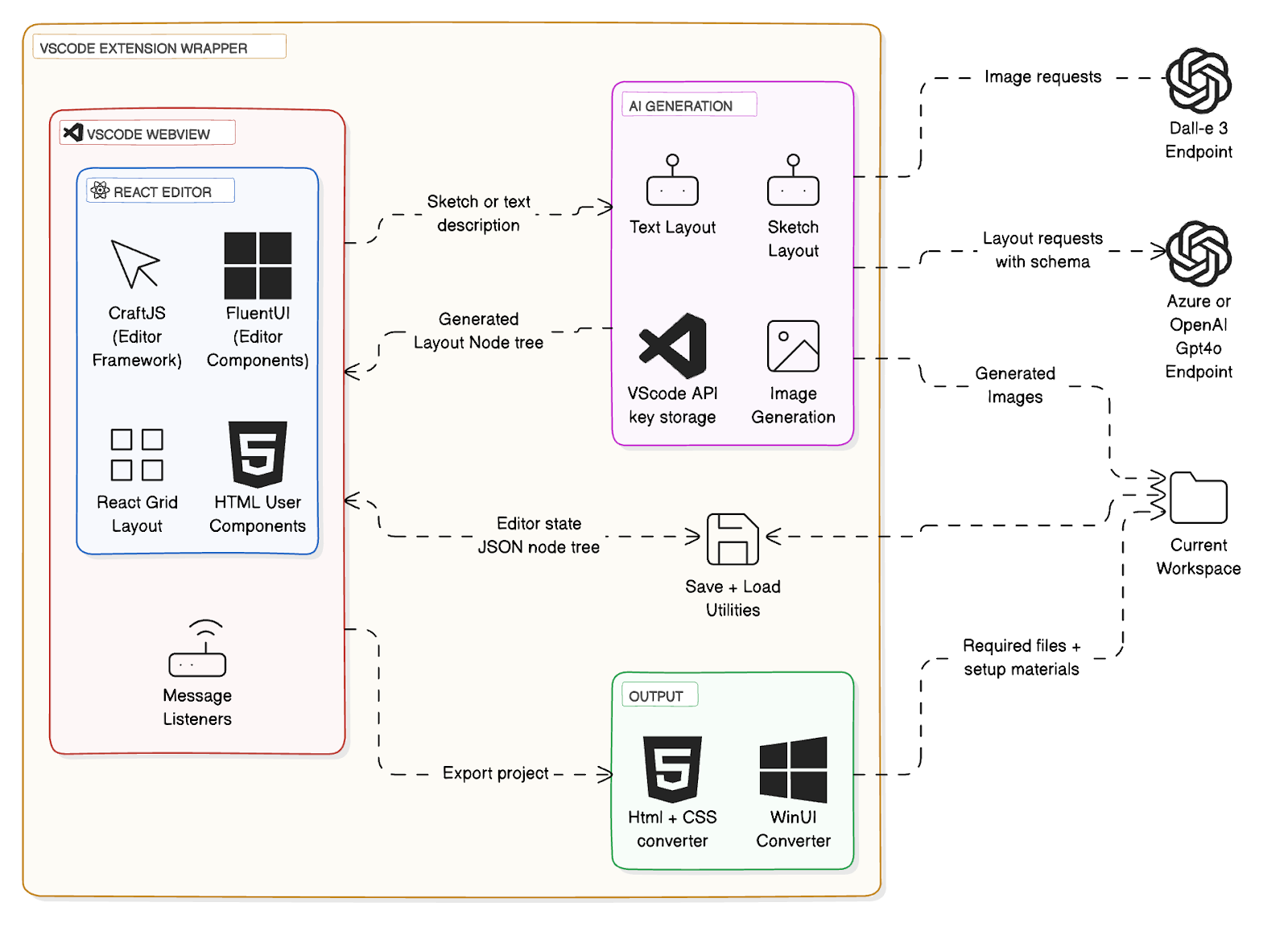

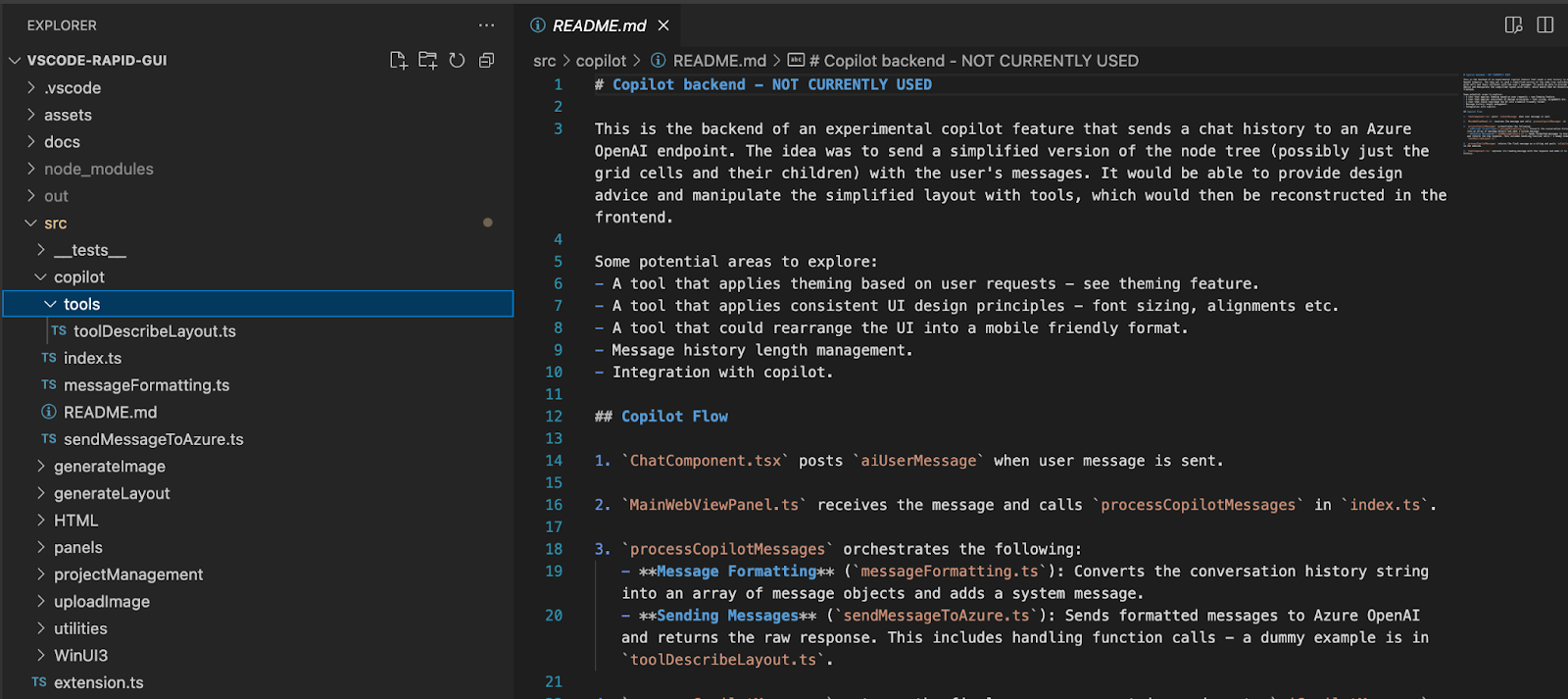

We decided to continue using React, CraftJS and FluentUI from v1.0. React’s component-based architecture allows us to break the UI down into smaller, reusable pieces. This modularity is well suited to generating dynamic UIs, as components can be composed and reused across the application, making it easier to generate UIs dynamically from user and AI input.
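The idea of composing a UI from small, reusable components driven by AI output can be sketched without any dependencies. In the example below, plain functions stand in for React components and a JSON-like tree stands in for a layout the AI might return; the `UiNode` shape, the `registry`, and the `render` function are all hypothetical and are not Blueprint AI’s actual data model.

```typescript
// Hypothetical description of a UI node, e.g. as an AI model might return it.
interface UiNode {
  type: string;
  text?: string;
  children?: UiNode[];
}

// A "component" here is a plain function from a node (plus its already
// rendered children) to markup; real React components would return JSX.
type Component = (node: UiNode, children: string) => string;

// Registry of small, reusable components keyed by node type.
// Note: text is inserted unescaped, which is acceptable only in a sketch.
const registry: { [type: string]: Component } = {
  page: (_node, children) => `<main>${children}</main>`,
  heading: (node) => `<h1>${node.text ?? ""}</h1>`,
  button: (node) => `<button>${node.text ?? ""}</button>`,
};

// Recursively renders a node tree by looking each node up in the registry.
function render(node: UiNode): string {
  const component = registry[node.type];
  if (component === undefined) {
    throw new Error(`Unknown component type: ${node.type}`);
  }
  const children = (node.children ?? []).map((child) => render(child)).join("");
  return component(node, children);
}
```

Because every node is rendered through the same registry, supporting a new kind of element only requires registering one more component.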

CraftJS provides a drag-and-drop interface for building and editing UIs. This makes it easier for non-technical users or designers to create and modify layouts without writing code. CraftJS also integrates with React components, which makes the system more cohesive.

With these frameworks, we used TypeScript instead of JavaScript because TypeScript’s static typing catches errors at compile time rather than at runtime, easing development and debugging significantly. It also helps keep the project scalable and maintainable as it grows, since typed code is easier to organise and refactor.
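As a small illustration of this compile-time checking, the sketch below types the properties of a button component. The `ButtonProps` interface and the `describeButton` function are hypothetical and exist only for this example.

```typescript
// Hypothetical props for a generated button component.
interface ButtonProps {
  label: string;
  variant: "primary" | "secondary";
  disabled?: boolean;
}

// Produces a short textual summary of a button's configuration.
function describeButton(props: ButtonProps): string {
  const state = props.disabled ? "disabled" : "enabled";
  return `${props.variant} button "${props.label}" (${state})`;
}

const summary = describeButton({ label: "Submit", variant: "primary" });

// Both calls below are rejected by the TypeScript compiler before the
// code ever runs; in plain JavaScript they would only surface at runtime.
// describeButton({ label: "Submit", variant: "danger" }); // not a valid variant
// describeButton({ variant: "primary" });                 // missing `label`
```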

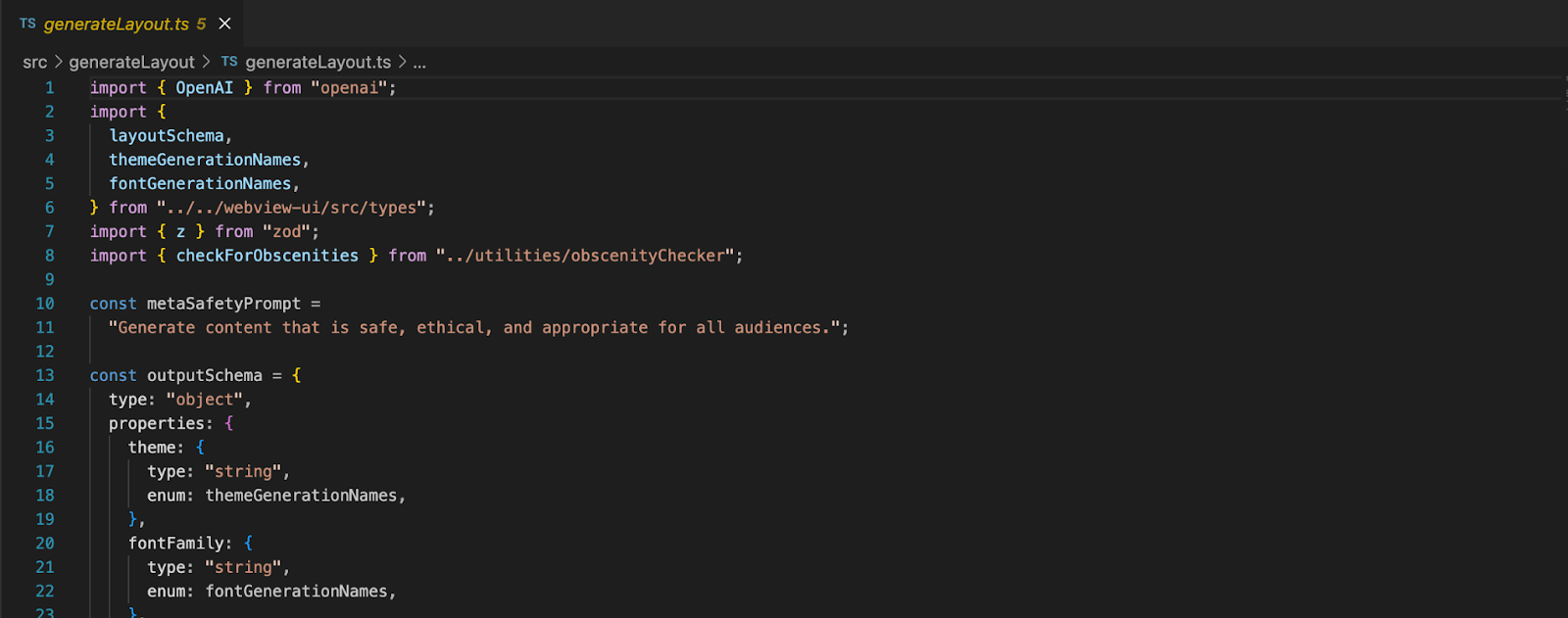

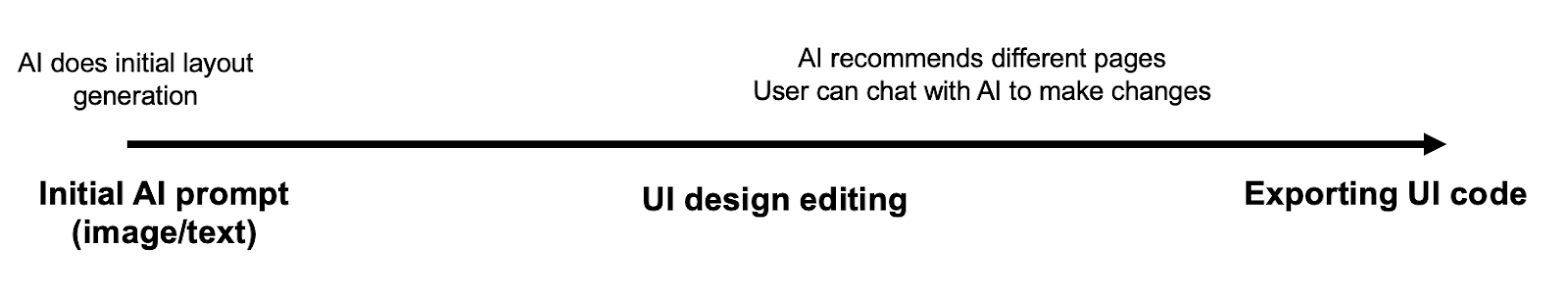

2. AI API

For Blueprint AI’s backend, we leverage LangChain and Axios to streamline AI interactions and data fetching. LangChain is a framework designed to work with large language models (LLMs), making it easier to build AI-driven applications by managing prompt chaining, memory, and model selection. This allows Blueprint AI to create more dynamic and context-aware interactions when generating frontend designs. Meanwhile, Axios, a popular JavaScript library for making HTTP requests, handles communication with APIs, including OpenAI’s models. Its promise-based structure simplifies data handling, making API calls more reliable and easier to manage. Together, LangChain and Axios help optimize AI integration, ensuring a seamless and responsive user experience.

We have also decided to stick with OpenAI for the AI API. OpenAI offers many different models suitable for this project, such as DALL·E, a generative AI model that creates images from text descriptions. OpenAI also boasts industry-leading models that outperform those of competitors like Meta, Amazon and Microsoft [22]. With this line-up of models to choose from, users also have more flexibility in managing their token usage and therefore their costs. Since OpenAI is also highly popular and widely used [23], and integrating OpenAI API calls into the backend is relatively straightforward, Blueprint AI will use OpenAI for its AI integration.
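To make the integration more concrete, the sketch below builds the JSON body for a request to OpenAI’s Chat Completions endpoint from a text prompt, an image, or both. The `buildChatRequest` helper, the system prompt, and the default model name are assumptions for illustration. The block is kept dependency-free, so the HTTP call itself (for example with Axios) is only indicated in a comment.

```typescript
// One part of a multimodal user message in the Chat Completions format.
type ContentPart =
  | { type: "text"; text: string }
  | { type: "image_url"; image_url: { url: string } };

interface ChatRequest {
  model: string;
  messages: { role: "system" | "user"; content: string | ContentPart[] }[];
}

// Hypothetical helper: accepts a text prompt, an image (URL or data URL), or both.
function buildChatRequest(
  input: { text?: string; imageUrl?: string },
  model = "gpt-4o" // assumed model; substitute whichever one is in use
): ChatRequest {
  const parts: ContentPart[] = [];
  if (input.text) {
    parts.push({ type: "text", text: input.text });
  }
  if (input.imageUrl) {
    parts.push({ type: "image_url", image_url: { url: input.imageUrl } });
  }
  if (parts.length === 0) {
    throw new Error("Provide a text prompt, an image, or both.");
  }
  return {
    model,
    messages: [
      // Assumed system prompt, for illustration only.
      { role: "system", content: "You generate frontend layouts." },
      { role: "user", content: parts },
    ],
  };
}

// Sending it with Axios would look roughly like:
//   axios.post("https://api.openai.com/v1/chat/completions", body,
//     { headers: { Authorization: `Bearer ${apiKey}` } });
```

Keeping the request construction in a pure function like this makes it easy to test separately from the network call.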

Summary of Technical Decisions

| Category | Final Choice | Reason |
|---|---|---|
| Programming Frameworks | React (CraftJS, FluentUI, MaterialUI) | Best for component-based UI generation |
| Programming Language | TypeScript | Easier to catch errors, debug, refactor and extend |
| Backend AI Integration | OpenAI, LangChain, Axios | Best Natural Language Processing capabilities |
| OCR/Text Recognition | EasyOCR + cv2 | Best for text extraction from screenshots |
| Deployment Platform | VSCode | Developers work within VSCode, improving workflow, eliminating hardware dependencies |

References

[1] K. Goldstein, “Wix ADI: How Design AI Elevates Website Creation for Everyone,” Wix Blog, Jun. 19, 2022. https://www.wix.com/blog/wix-artificial-design-intelligence

[2] “Power Pages – Website Builder | Microsoft Power Platform,” Microsoft.com, 2024. https://www.microsoft.com/en-us/power-platform/products/power-pages#tabs-pill-bar-ocb9d4_tab4 (accessed Mar. 05, 2025).

[3] “Edit code with Visual Studio Code for the Web (preview),” Microsoft.com, Mar. 07, 2024. https://learn.microsoft.com/en-us/power-pages/configure/visual-studio-code-editor?WT.mc_id=powerportals_inproduct_portalstudio2 (accessed Mar. 05, 2025).

[4] I. Bouchrika, “Mobile vs Desktop Usage Statistics for 2020/2021,” Research.com, Feb. 24, 2021. https://research.com/software/mobile-vs-desktop-usage

[5] lukejlatham, “GitHub - lukejlatham/vscode-rapid-gui,” GitHub, 2024. https://github.com/lukejlatham/vscode-rapid-gui/tree/main (accessed Mar. 15, 2025).

[6] “Webview API,” code.visualstudio.com. https://code.visualstudio.com/api/extension-guides/webview

[7] Karl-Bridge-Microsoft, “Windows UI Library (WinUI) - Windows apps,” learn.microsoft.com. https://learn.microsoft.com/en-us/windows/apps/winui/

[8] Y. Zhang, Y. Yuan, and A. C.-C. Yao, “Meta Prompting for AI Systems,” arXiv.org, 2023. https://arxiv.org/abs/2311.11482

[9] A. Mendonça, “Monolithic Modular Architecture: Modular Folder Organization,” Medium, Jul. 22, 2023. https://medium.com/@abel.ncm/monolithic-modular-architecture-modular-folder-organization-4cbf97175ab4 (accessed Mar. 18, 2025).

[10] S. Watts, “The Importance of SOLID Design Principles,” BMC Blogs, Jun. 15, 2020. https://www.bmc.com/blogs/solid-design-principles/

[11] A. Saxena, “How Policy Reforms And Interoperability Are Reshaping Value-Based Care,” Forbes, Mar. 20, 2025. https://www.forbes.com/councils/forbestechcouncil/2025/03/20/how-policy-reforms-and-interoperability-are-reshaping-value-based-care/ (accessed Mar. 20, 2025).

[12] “Low-code Development Platform Market Size Report, 2030,” www.grandviewresearch.com. https://www.grandviewresearch.com/industry-analysis/low-code-development-platform-market-report

[13] “What’s Wrong with Low and No Code Platforms?,” www.pandium.com. https://www.pandium.com/blogs/whats-wrong-with-low-and-no-code-platforms

[14] Manoj Kumar Dobbala, “Rise of Generative AI: Impacts on Frontend Development,” Sep. 09, 2023. https://www.researchgate.net/publication/382639784_Rise_of_Generative_AI_Impacts_on_Frontend_Development

[15] J. Bracamontes, “How Many Pages Should A Website Have?,” Acumenstudio.com, Feb. 06, 2023. https://acumenstudio.com/how-many-pages-should-a-website-have/

[16] S. McBreen, “Visual Studio Code 2.6M Users, 12 months of momentum and more to come.,” Nov. 15, 2017 code.visualstudio.com. https://code.visualstudio.com/blogs/2017/11/16/connect

[17] OpenCV, “About OpenCV,” OpenCV, 2018. https://opencv.org/about/

[18] Amazon, “What is OCR (Optical Character Recognition)? - AWS,” Amazon Web Services, Inc. https://aws.amazon.com/what-is/ocr/

[19] A. Mahajan, “EasyOCR: A Comprehensive Guide,” Medium, Oct. 29, 2023. https://medium.com/@adityamahajan.work/easyocr-a-comprehensive-guide-5ff1cb850168

[20] J. Nielsen and Y. Cheng, “Are Chinese Websites Too Complex?,” Nielsen Norman Group, Nov. 06, 2016. https://www.nngroup.com/articles/china-website-complexity/ (accessed Mar. 22, 2025).

[21] tesseract-ocr, “tesseract-ocr/tesseract,” GitHub, Oct. 20, 2019. https://github.com/tesseract-ocr/tesseract

[22] “OpenAI - Quality, Performance & Price Analysis | Artificial Analysis,” Artificialanalysis.ai, 2025. https://artificialanalysis.ai/providers/openai

[23] E. H. Schwartz, “OpenAI confirms 400 million weekly ChatGPT users - here’s 5 great ways to use the world’s most popular AI chatbot,” TechRadar, Feb. 22, 2025. https://www.techradar.com/computing/artificial-intelligence/openai-confirms-400-million-weekly-chatgpt-users-heres-5-great-ways-to-use-the-worlds-most-popular-ai-chatbot (accessed Mar. 22, 2025).

This research forms the foundation for Blueprint AI’s development, ensuring we use the best technologies to optimize AI-assisted front-end generation.