Welcome to HandsUp!

A computer vision-based classroom interaction system.

Abstract

Teachers often struggle to gauge student engagement and understanding in real time in traditional classroom settings. Many students feel hesitant or anxious about raising their hands, and individual responses are especially difficult to monitor in large or fast-paced classes. This lack of immediate and inclusive feedback can hinder effective classroom interaction and reduce opportunities for timely support.

To address this issue, we developed HandsUp!, an intelligent classroom system based on computer vision. Students raise coloured cards or their hands, which are detected by a YOLO-based object detection model integrated with OpenVINO for real-time performance. The system processes video input, counts the detected responses, and gives the teacher instant feedback, improving communication efficiency and student engagement during lessons.
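The counting step described above can be illustrated with a minimal Python sketch. It is not the project's actual code: the `Detection` type, the label names (`hand`, `red_card`, `green_card`), and the 0.5 confidence threshold are all assumptions made for illustration. The sketch assumes the detector has already produced a list of labelled detections for one video frame and only shows how those could be tallied for the teacher.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Detection(NamedTuple):
    """One detected object in a frame (hypothetical structure)."""
    label: str         # assumed class name, e.g. "hand" or "red_card"
    confidence: float  # detector score in [0, 1]


def count_responses(detections: Iterable[Detection],
                    min_confidence: float = 0.5) -> Counter:
    """Tally detections per label, ignoring low-confidence ones."""
    return Counter(d.label for d in detections
                   if d.confidence >= min_confidence)


# Example detections for a single frame (made-up values).
frame_detections = [
    Detection("hand", 0.91),
    Detection("red_card", 0.84),
    Detection("red_card", 0.42),   # below the threshold, so ignored
    Detection("green_card", 0.77),
    Detection("hand", 0.66),
]

counts = count_responses(frame_detections)
print(dict(counts))  # {'hand': 2, 'red_card': 1, 'green_card': 1}
```

In a live setting, a tally like this would likely be recomputed per frame or smoothed over a short window so that a single missed detection does not change the count shown to the teacher.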

HandsUp! has demonstrated strong usability and adaptability across various classroom environments. It enhances inclusivity by enabling even shy or hesitant students to participate effortlessly. In testing sessions and presentations, the system improved classroom flow, increased student interaction, and reduced the teacher's workload in managing responses. To keep the system practical in real-world school environments, especially those with limited computational resources, we integrated OpenVINO to optimise inference performance. This allows HandsUp! to run smoothly on a wider range of hardware, making it more scalable and accessible to a broader user community.

Demo Video

Development Team

Aishani Sinha

aishani.sinha.23@ucl.ac.uk

Krish Mahtani

krish.mahtani.23@ucl.ac.uk

Misha Kersh

michael.kersh.23@ucl.ac.uk

William Lin

runfeng.lin.23@ucl.ac.uk

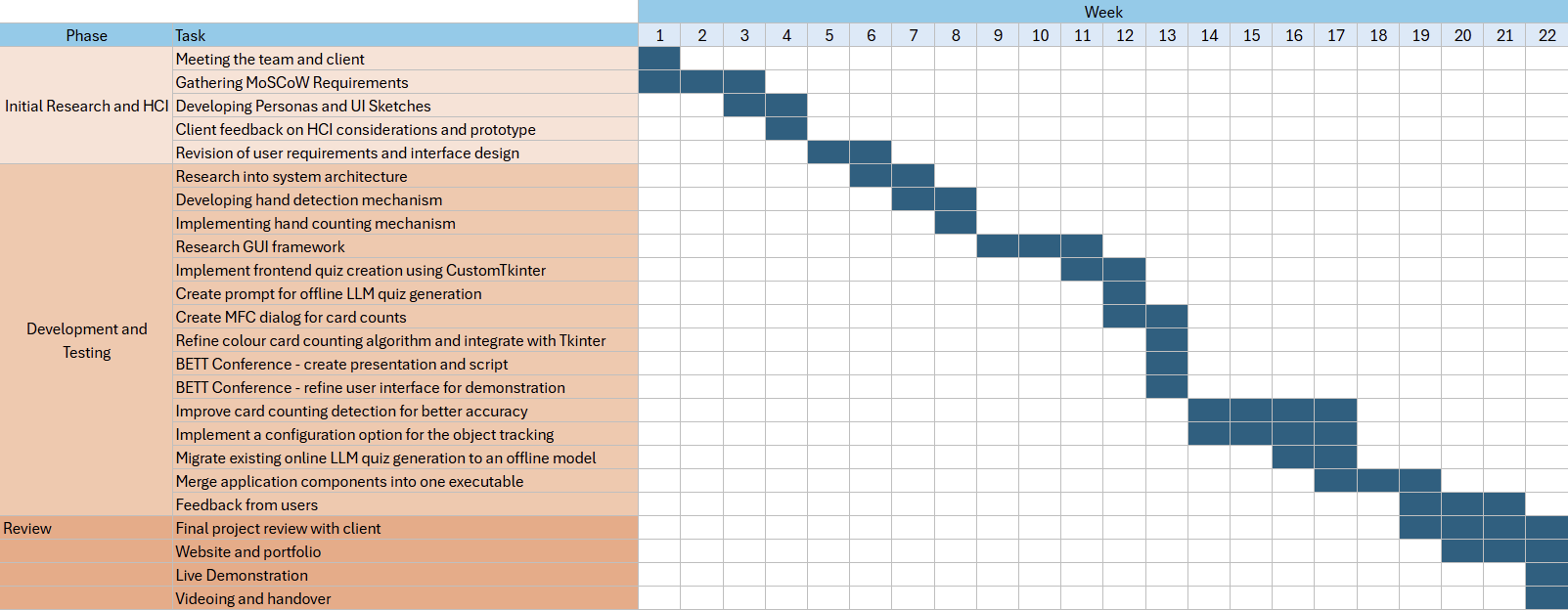

Gantt Chart

From October 21, 2024 to March 28, 2025