Research

Requirements

| ID | Requirement |
|---|---|
| F1 | Use voice recognition to search for an item of clothing |
| F2 | Ability to choose more than one item of clothing |
| F3 | Ability to try on more than one item of clothing at the same time |
| F4 | Use voice recognition to change the item of clothing worn based on categories |
| F5 | Use voice recognition to display product information including purchase and sizing |
| F6 | Ability to shortlist clothing to purchase on their website (add to basket) |
| F7 | Use voice recognition to add to basket |
| F8 | Show clothing virtually worn on the user’s body |

| ID | Requirement |
|---|---|
| NF1 | Simulate texture of clothing |
| NF2 | First page displays search instructions for using voice |
| NF3 | Show pages of categories of clothing |
| NF4 | Avatar has to look like the user |
| NF5 | Show basket page |
| NF6 | Help available with voice commands |
| NF7 | Use voice recognition to add to basket |
| NF8 | Show clothing virtually worn on the user’s body |

| ID | Requirement |
|---|---|
| UI1 | Easy to use and navigate through the pages of the menu |
| UI2 | Use voice command and tap/pinch gesture to select |

| ID | Requirement | Priority |
|---|---|---|
| F1 | Ability to choose more than one item of clothing | Must have |
| F2 | Ability to try on more than one item of clothing at the same time | Must have |
| F3 | Ability to place, rotate and resize items of clothing within the application | Must have |
| F4 | Ability to use image recognition technology to project a garment hologram, such as a bracelet | Must have |
| NF1 | Show buttons to navigate through different categories of clothing | Must have |
| F5 | Use voice recognition to change the item of clothing worn based on categories | Should have |
| F6 | Use voice recognition to display product information including purchase and sizing (chat bot) | Should have |
| F7 | Use voice recognition to place, rotate and resize garments in their space | Should have |
| NF2 | Help available by tapping on a button | Should have |
| NF3 | Displaying help available by voice commands | Should have |
| UI1 | Easy to use and navigate through the pages of the menu | Should have |
| F8 | Ability to shortlist clothing to purchase on their website (add to basket) | Could have |
| F9 | Use voice recognition to add to basket | Could have |
| NF4 | Avatar has to look like the user | Could have |
| NF5 | Show basket page | Could have |
| NF6 | Simulate texture of clothing | Would have |
| NF7 | Show clothing virtually worn on the user’s body | Would have |

Use Cases

Use case 1: Try an article of clothing

Actor: Shopper

Goal: User should be able to see the clothing worn on them in augmented reality

Pre-condition: User has the virtual wardrobe app open on the HoloLens and is standing in front of a full-body mirror

Main flow:

- User selects a category of clothing (e.g. dress, top, pants) from the menu using a voice command or the tap gesture.

- User browses through different items of clothing and selects one using the tap/pinch gesture.

- User selects “next” and the main menu closes.

- User stands in front of a mirror and sees the clothing worn on them.

- The garment is morphed onto the user's body so the user can see how it looks on them.

Extensions:

- Show help in menu

  - User can tap on the “Help” button on the screen

  - A guide on how to use the app is displayed on the screen

- Show more information about the product

  - User can tap on the product or use voice command to show more information (e.g. price, item ID, size)

  - Information about the products will be displayed on the screen

Use case 2: Try multiple articles of clothing and buy them

Actor: Shopper

Goal: User should be able to try on multiple items of clothing at once (e.g. top and pants) and buy them on Net-a-Porter’s website

Pre-condition: User has the virtual wardrobe app open on the HoloLens and is standing in front of a full-body mirror

Main flow:

- User selects a category of clothing by voice command or gesture and then selects an article of clothing that they want to try.

- The specific article of clothing is selected and displayed on the side of the screen as “selected”.

- User navigates back to the main screen.

- User chooses another category and another article of clothing within that category.

- The article of clothing is selected and added to the “selected” field.

- User selects “next” and the main menu closes.

- User stands in front of a mirror and sees the clothing virtually worn on them.

- User decides to buy the items and they are added to the basket.

Extensions:

- Show help in menu

  - User can tap on the “Help” button on the screen

  - A guide on how to use the app is displayed on the screen

- Show more information about the product

  - User can tap on the product or use voice command to show more information (e.g. price, item ID, size)

  - Information about the products will be displayed on the screen

- User wants to buy the items

  - User goes to checkout page

  - User reviews the items that they want to buy

  - User selects “Buy” and they will be redirected to Net-a-Porter’s website to complete their purchase

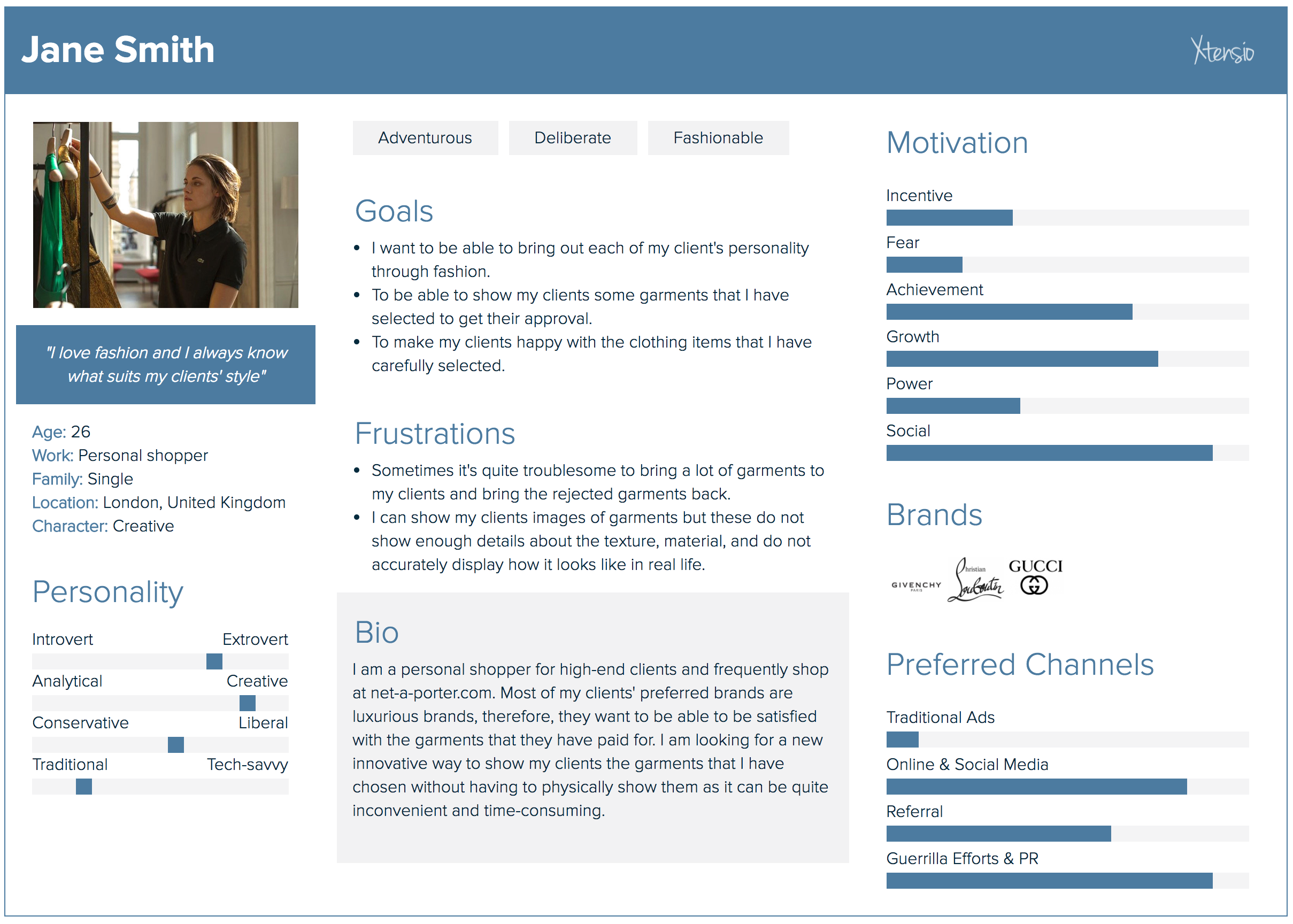

Personas

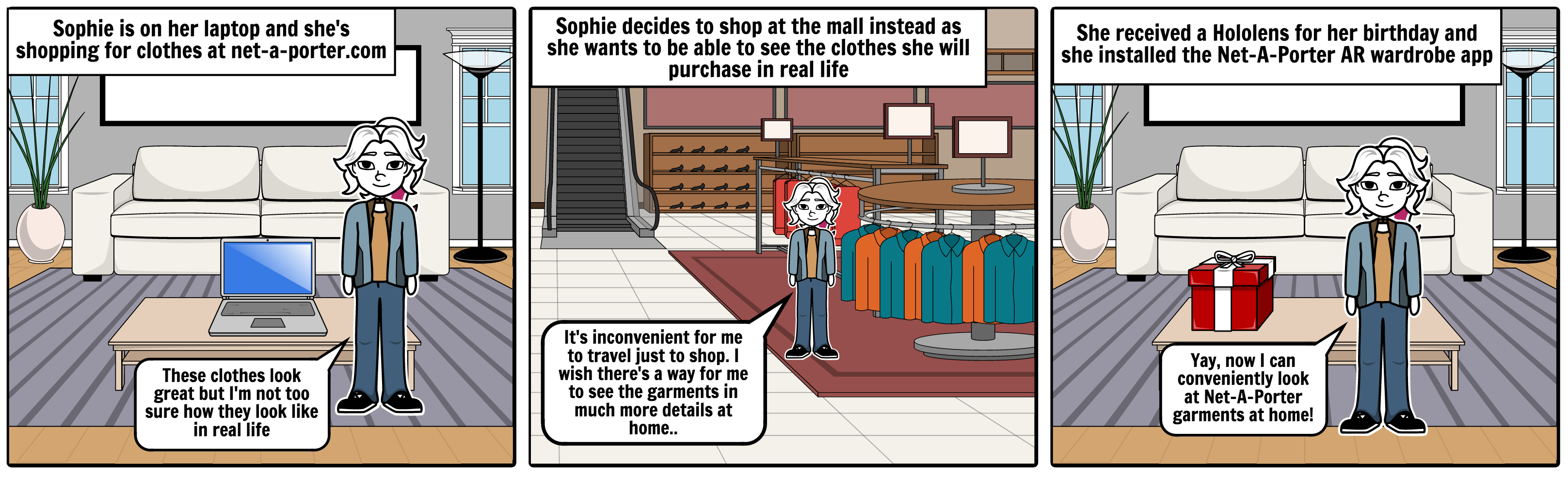

Storyboard

Research Results

We needed several pieces of hardware to complete our project, chiefly a 3D scanner and a VR/AR device. Before deciding which hardware to use, we researched the features of each option and compared it with its alternatives. We made sure that the chosen platform supports our requirements and is the most suitable for our client and users.

3D Scanner

Option 1: Sense 3D Scanner

Features:

- Lightweight and portable

- Operating range: 0.2m - 1.6m

- Color image size: 1920 x 1080 px

- Depth image size: 640 x 480 px

- Max scan volume: 2m x 2m x 2m

- Tripod mount: Yes

- Price: £299

Option 2: XYZ Printing 3D Scanner

Features:

- Lightweight and portable

- Operating range: 0.1m - 0.7m

- Color image size: 640 x 480 px

- Depth image size: 640 x 480 px

- Max scan volume: 0.6m x 0.6m x 0.3m

- Tripod mount: No

- Price: £140

Option 3: Fuel3D Scanify

Features:

- Camera: Two 3.5 MP Cameras

- Operating range: 0.35m - 0.45m

- Flash: Three Xenon flashes

- Time to take each scan: 0.1s

- Resolution: 350 microns

- Tripod mount: Yes

- Price: £990

Decision: We have decided to choose the Sense 3D Scanner because it is affordable, captures higher-resolution color images than the XYZ Printing 3D Scanner, and has the greatest maximum scan volume, which will allow us to capture a full image of garments, including long gowns. It also has the largest operating range of the three scanners, which helps us take full scans rather than only close-ups of the clothing. The Sense has a tripod mount, so we can attach it to a tripod and take more stable 3D scans. Although the quality of the 3D image will not be as good as that of the Fuel3D Scanify, it will be sufficient for our project, as the small details of the garment are not our main concern at this point.
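The reasoning behind this decision can be sketched as a simple filter over the figures in the feature lists above. This is only an illustration: the `Scanner` class, the `can_capture` and `shortlist` helpers, and the 1.5 m gown length are assumptions made for the example, and a scanner with no listed scan volume is treated as unconfirmed rather than unsuitable.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Scanner:
    name: str
    max_range_m: float                                   # far end of the operating range
    scan_volume_m: Optional[Tuple[float, float, float]]  # None where no volume is listed
    tripod_mount: bool
    price_gbp: int


# Figures copied from the feature lists above.
SCANNERS = [
    Scanner("Sense 3D Scanner", 1.6, (2.0, 2.0, 2.0), True, 299),
    Scanner("XYZ Printing 3D Scanner", 0.7, (0.6, 0.6, 0.3), False, 140),
    Scanner("Fuel3D Scanify", 0.45, None, True, 990),
]


def can_capture(scanner: Scanner, garment_length_m: float) -> bool:
    """True if the listed scan volume is large enough for the whole garment."""
    if scanner.scan_volume_m is None:
        return False  # no listed volume, so a full scan cannot be confirmed
    return max(scanner.scan_volume_m) >= garment_length_m


def shortlist(garment_length_m: float) -> List[Scanner]:
    """Scanners that fit the garment and have a tripod mount, cheapest first."""
    fits = [s for s in SCANNERS if s.tripod_mount and can_capture(s, garment_length_m)]
    return sorted(fits, key=lambda s: s.price_gbp)


GOWN_LENGTH_M = 1.5  # assumed length of a long gown, for illustration only
print([s.name for s in shortlist(GOWN_LENGTH_M)])
```

Under these assumptions only the Sense 3D Scanner remains on the shortlist, which matches the decision above.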

VR/AR Device

Option 1: Oculus Rift

Features:

- Display: 2160 x 1200 px OLED display

- Control: Oculus Touch, Xbox One controller

- Sensors: Accelerometer, gyroscope, magnetometer, Constellation tracking camera.

- Field of view: 80°×90°

Option 2: Microsoft HoloLens

Features:

- Display: See-through holographic lenses with 2 HD 16:9 light engines

- Control: Clicker, gesture input, gaze tracking, voice support

- Sensors: 4 environment understanding cameras, 1 IMU, 1 depth camera, 1 2MP photo/HD video camera, mixed reality capture, 4 microphones, 1 ambient light sensor

- Field of view: 30°×17.5°

Decision: We have decided to choose Microsoft HoloLens because its holographic lenses allow users to see multi-dimensional, full-color holographic objects in the real world. The HoloLens contains advanced sensors that capture users' input and let them interact with the holograms in a natural way. The Oculus Rift gives users a fully immersive experience, which replaces their view of the real world, so we think the HoloLens fits the brief better: it simulates the experience of looking at and trying on a piece of clothing in real life, just as a Net-a-Porter EIP shopper would. The trade-off of using the HoloLens as our platform is its limited field of view, but the benefits outweigh this drawback.
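To make the field-of-view trade-off concrete, the area visible through the device at a given distance can be estimated with basic trigonometry: an angle θ covers a width of 2·d·tan(θ/2) at distance d. The sketch below applies this to the HoloLens figures listed above. The 2 m viewing distance and the `visible_extent_m` helper are assumptions chosen for illustration.

```python
import math


def visible_extent_m(fov_deg: float, distance_m: float) -> float:
    """Width (or height) covered by a field-of-view angle at a given distance."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


HOLOLENS_FOV_DEG = (30.0, 17.5)  # horizontal, vertical, from the feature list above
VIEWING_DISTANCE_M = 2.0         # assumed distance to the hologram, for illustration

width = visible_extent_m(HOLOLENS_FOV_DEG[0], VIEWING_DISTANCE_M)
height = visible_extent_m(HOLOLENS_FOV_DEG[1], VIEWING_DISTANCE_M)
print(f"Visible area at {VIEWING_DISTANCE_M} m: {width:.2f} m x {height:.2f} m")
```

At 2 m this gives roughly 1.07 m by 0.62 m, so a full-length garment would probably not fit in view vertically unless the user steps back or moves their head.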

Having decided to use Microsoft HoloLens for our project, we looked into online resources that could help us build the application. To build an application for HoloLens, we use Unity to build 3D objects and Visual Studio to debug and deploy apps. We also installed the HoloLens Emulator, which allows us to run applications in a virtual machine without a HoloLens.

Features such as voice input and gestures can be implemented using APIs that are already built into Unity. Scripts in Unity are written in C#. These scripts enable the app to respond to input from the user, create graphical effects and control the physical behaviour of objects.

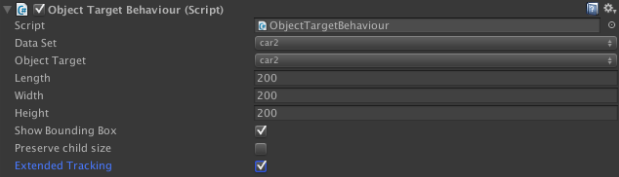
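The way a script responds to voice input can be sketched as a lookup from recognised phrases to actions. The example below is a plain Python model of that dispatch logic only. It is not Unity code, and the `VoiceCommandDispatcher` class, the phrases and the action strings are all hypothetical names chosen for illustration. In the app itself, the equivalent logic would live in a C# script driven by Unity's speech input.

```python
from typing import Callable, Dict, Optional


class VoiceCommandDispatcher:
    """Maps recognised phrases to actions (hypothetical helper, not a Unity API)."""

    def __init__(self) -> None:
        self._actions: Dict[str, Callable[[], str]] = {}

    def register(self, phrase: str, action: Callable[[], str]) -> None:
        # Store phrases in lower case so matching ignores capitalisation.
        self._actions[phrase.strip().lower()] = action

    def dispatch(self, recognised_text: str) -> Optional[str]:
        """Run the action for a recognised phrase; return None if it is unknown."""
        action = self._actions.get(recognised_text.strip().lower())
        return action() if action else None


dispatcher = VoiceCommandDispatcher()
dispatcher.register("next", lambda: "close main menu")
dispatcher.register("help", lambda: "show usage guide")
dispatcher.register("add to basket", lambda: "add selected items to basket")

print(dispatcher.dispatch("Help"))            # matched regardless of capitalisation
print(dispatcher.dispatch("unknown phrase"))  # unrecognised phrases are ignored
```

Keeping the phrase-to-action table in one place makes it easy to add a new command, such as a category name, without touching the recognition code.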

Aside from Unity and Visual Studio, we are also using Vuforia, an SDK for Unity, which enables us to create holographic applications that recognise specific things in the environment so that we can attach actions to those objects. This is useful because our app needs to recognise the user's body and morph the clothing onto it.

To enable Vuforia to work with the HoloLens spatial mapping and positional tracking systems, we need to enable "extended tracking" on a target and bind the AR Camera to the HoloLens scene camera. The Vuforia SDK and the HoloLens API in Unity then handle this feature automatically, without intervention from the developer. The following sequence occurs when extended tracking is enabled on a target:

- Vuforia's target tracker recognises the target

- Target tracking is initialised

- The position and rotation of the target are analyzed to provide a robust pose estimate for HoloLens to use

- Vuforia transforms the target's pose into the HoloLens spatial mapping coordinate space

- HoloLens takes over tracking and the Vuforia tracker is deactivated

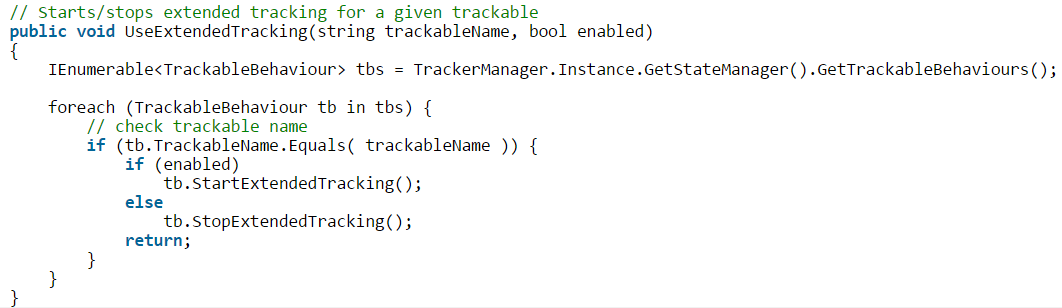
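The step in which the target's pose is transformed into the HoloLens coordinate space can be illustrated with a generic change of coordinates. The sketch below assumes poses are represented as 4x4 homogeneous matrices and uses pure translations to keep the numbers easy to follow. It shows the general technique only, not how Vuforia implements it internally, and all function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def translation(x: float, y: float, z: float) -> Matrix:
    """4x4 homogeneous matrix for a pure translation."""
    return [
        [1.0, 0.0, 0.0, x],
        [0.0, 1.0, 0.0, y],
        [0.0, 0.0, 1.0, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def to_world(camera_to_world: Matrix, target_in_camera: Matrix) -> Matrix:
    """Express a pose measured in camera space in world (spatial mapping) space."""
    return matmul(camera_to_world, target_in_camera)


def position(pose: Matrix) -> Tuple[float, float, float]:
    """Translation part of a pose."""
    return (pose[0][3], pose[1][3], pose[2][3])


# Assumed example: the camera sits 1 m along the world x-axis,
# and the tracker sees the target 2 m in front of the camera.
camera_to_world = translation(1.0, 0.0, 0.0)
target_in_camera = translation(0.0, 0.0, 2.0)

print(position(to_world(camera_to_world, target_in_camera)))
```

Once the pose is expressed in world space, it no longer depends on where the camera is, which is what allows the device's own tracking to take over.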

If the application must dynamically enable or disable extended tracking at run time, we can use the Extended Tracking API's startExtendedTracking and stopExtendedTracking methods.

Summary of final research

In term 1 we considered two solutions: using a mirror to project holograms onto the user's body, and letting the user manipulate holograms and observe them in the room they are in. After extensive research into both, we concluded that the first would be too difficult. We researched the HoloLens camera and its ability to provide depth information, and a Microsoft employee told us that the only way to begin implementing something like this would perhaps be with a Kinect camera. Even with the Kinect, implementing the rest of the requirements would be well beyond our capabilities. For this reason we focused our research on placing holograms in a position relative to the user's environment, so that the user can walk around an object and manipulate it in various ways.