Evaluation

Summary of Achievements

Known Bugs:

Throughout the project, we kept track of the bugs we encountered and worked through them as development progressed. At the time of writing, all aspects of the project are fully functioning and there are no known bugs.

Critical Evaluation of the Project

Functionality:

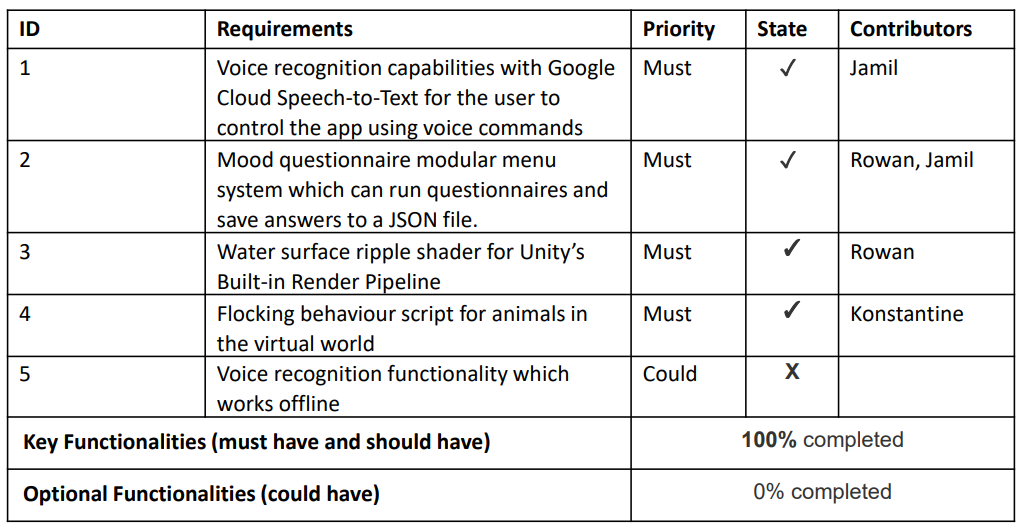

As a team, we implemented all the primary functionalities requested by our project partners at TEND-VR, namely the speech-to-text mood questionnaire, the resource-efficient water ripple shader, and the flocking behaviour applicable to the movement of birds and fish. We added a SHOULD requirement to our MoSCoW statement that the water ripple shader implementation had to be resource-efficient, which we also achieved. One of the COULD requirements in the MoSCoW statement was for the speech-to-text mood questionnaire to work offline. We concluded that this was not feasible, because the API we used is fundamentally cloud-based. Instead, we suggested a deep-speech implementation that a group of people had looked into and recommended the appropriate GitHub links for it, and our partners were content for us to move on. We ended the project with 100% of our MUST requirements and 100% of our SHOULD requirements accomplished.

Stability:

After each phase of development, we carried out user acceptance testing, in which a user extensively analysed an implemented feature of our project. As a result, we identified and fixed a few bugs over the course of the project, primarily in the speech-to-text mood questionnaire implementation.

Efficiency:

Our aim throughout all our tasks was to maximise performance. For example, we made the water ripple shader resource-efficient: instead of calculating a random value for the ripple's width, speed and fade speed every time the ripple runs, the shader looks up the RGB value of a pixel in a noise texture to obtain a pseudorandom value.
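The noise-texture lookup can be illustrated outside the shader with a minimal Python sketch. This is not our shader code: the function names, the texture size, the parameter ranges and the mapping of the R, G and B channels to width, speed and fade speed are all assumptions made for illustration. The point it shows is that the random numbers are generated once, when the texture is built, and each ripple afterwards only performs a cheap lookup.

```python
import random


def make_noise_texture(size, seed=0):
    """Build a size x size grid of (r, g, b) tuples, each channel in [0, 1).

    This stands in for a pre-generated noise texture; it is built once.
    """
    rng = random.Random(seed)
    return [[(rng.random(), rng.random(), rng.random()) for _ in range(size)]
            for _ in range(size)]


def lerp(lo, hi, t):
    """Linearly interpolate between lo and hi for t in [0, 1]."""
    return lo + (hi - lo) * t


def ripple_params(texture, u, v):
    """Sample the texture at UV coordinates (wrapping) and map the
    RGB channels to ripple parameters. Channel mapping and ranges are
    hypothetical."""
    size = len(texture)
    x = int(u * size) % size
    y = int(v * size) % size
    r, g, b = texture[y][x]
    return {
        "width": lerp(0.05, 0.20, r),
        "speed": lerp(0.5, 2.0, g),
        "fade_speed": lerp(0.1, 1.0, b),
    }


# Built once up front; every later call is a lookup, not a random draw.
NOISE = make_noise_texture(64)
```

Calling `ripple_params(NOISE, 0.25, 0.75)` twice returns the same values, which is what makes the result pseudorandom rather than random: variation comes from sampling different pixels, not from generating new numbers.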

The flocking behaviour achieved efficiency in a few ways. Firstly, the flocking rules are only applied to the objects 20% of the time, which takes away the load of constantly calculating the rules for every single animal. Secondly, the code itself was written efficiently: it is modular, being split between two separate files, and it is concise and easy to follow. Any customisation options were added to the main screen in Unity to allow our partners to make quick edits.
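The idea of applying the rules only 20% of the time can be sketched as follows. This is an illustrative Python sketch rather than our Unity script: the `Agent` class, the `step` function, the per-agent random check and the two placeholder rules (steering towards the flock centre and matching the average velocity) are assumptions. Only the 20% figure comes from the description above.

```python
import random

RULE_PROBABILITY = 0.2  # rules are applied roughly 20% of the time


class Agent:
    """A hypothetical 2D flock member with a position and a velocity."""

    def __init__(self, x, y, vx, vy):
        self.pos = [x, y]
        self.vel = [vx, vy]


def apply_flocking_rules(agent, flock):
    """Placeholder rules (assumed): steer towards the flock centre and
    nudge the velocity towards the flock's average velocity."""
    n = len(flock)
    for axis in (0, 1):
        centre = sum(a.pos[axis] for a in flock) / n
        avg_vel = sum(a.vel[axis] for a in flock) / n
        agent.vel[axis] += 0.01 * (centre - agent.pos[axis])
        agent.vel[axis] += 0.05 * (avg_vel - agent.vel[axis])


def step(flock, dt, rng):
    """Advance every agent by one frame.

    Each agent always moves, but the expensive rule calculation only
    runs when the random check passes. Returns how many agents had
    their rules recalculated this frame.
    """
    recalculated = 0
    for agent in flock:
        if rng.random() < RULE_PROBABILITY:
            apply_flocking_rules(agent, flock)
            recalculated += 1
        agent.pos[0] += agent.vel[0] * dt
        agent.pos[1] += agent.vel[1] * dt
    return recalculated
```

Between rule updates each agent simply continues along its current velocity, so the movement still looks continuous while the per-frame cost of the rule calculation drops to about a fifth.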

We also removed the coroutine that was used to transition to the next question in the mood questionnaire module. With the new implementation, the scene no longer completely reloads itself, which helps with efficient resource management.

Compatibility:

Our focus was on implementing our project for virtual reality products, in particular the Oculus Quest. The mood questionnaire module was coded and tested using a VR headset, whereas the other parts of our project were coded within a 3D environment in Unity, which allows for easy addition to our partners' existing build.

Maintainability:

The project should be easy to maintain because we have written extensive documentation, including a user manual and a deployment manual, which cover all features of the project. On the Implementation page of the website, we have also explained how each aspect of the project works, covering key sections of code. It should therefore not be difficult to understand how our project works and how to extend it. Furthermore, we wrote the code following good software engineering practices, such as avoiding code smells, so it should be readable, easy to understand and easier to maintain.

Project Management:

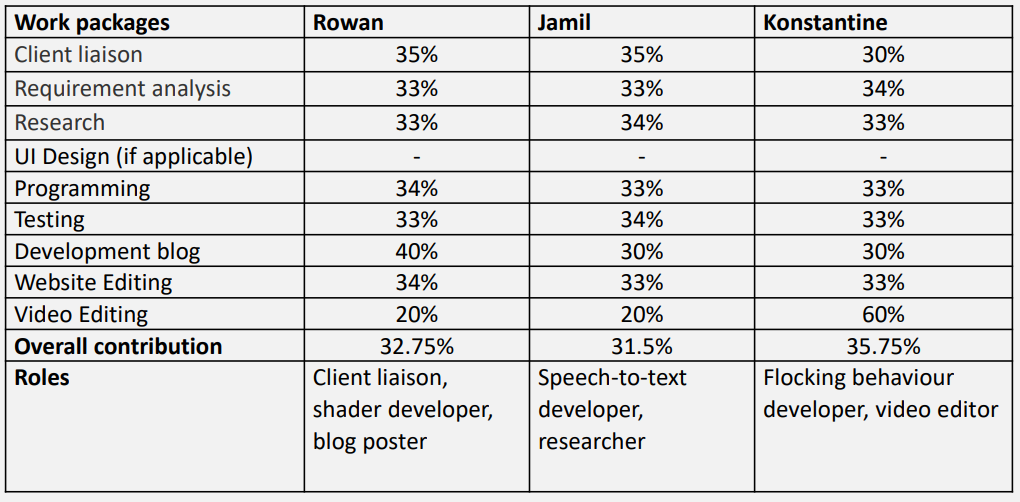

The project was managed well: we finished all development relatively quickly and completed all portfolios within their deadlines. Every week we held at least one meeting to discuss our project's solutions and to update each other on the progress of the features each of us was developing. We also met with our project partners after each stage of the project to review the code, so that they were kept constantly updated on our progress.

Future Work

Speech-to-Text Mood Questionnaire:

In the current implementation, the user must hold one of the face buttons on the right controller before speaking into the VR headset microphone for their speech to be transcribed by the Google Cloud Speech-to-Text API. If we had more time with this project, we could make this feature easier to use by constantly recording the user’s speech input and detecting an appropriate command. We suggested to the project partners one way they could achieve this:

To exclude background noise from the recording, a calibration test must first be completed: with the headset microphone active, the background noise level is recorded. Afterwards, only audio that is a certain number of decibels (or a set percentage) above this recorded threshold is sent to the speech-to-text API to be transcribed. This isolates the user’s voice, which should result in a more reliable interpretation when matching a given command.
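The calibration and gating steps could look something like the following Python sketch. This was not implemented in the project: the function names, the frame representation (lists of samples in the range -1 to 1) and the 10 dB default margin are assumptions, and sending the selected audio to the speech-to-text API is left out.

```python
import math


def rms_db(samples):
    """RMS level of one audio frame in dB relative to full scale.

    Samples are assumed to be floats in [-1, 1]. A small floor avoids
    taking the logarithm of zero for a silent frame.
    """
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(max(mean_square, 1e-12))


def calibrate(noise_frames):
    """Average background level, in dB, over the calibration frames."""
    return sum(rms_db(frame) for frame in noise_frames) / len(noise_frames)


def frames_to_transcribe(frames, noise_floor_db, margin_db=10.0):
    """Keep only frames louder than the background level plus a margin.

    The margin is a hypothetical tuning value; in practice it would be
    adjusted on the headset.
    """
    threshold = noise_floor_db + margin_db
    return [frame for frame in frames if rms_db(frame) > threshold]
```

A typical flow would call `calibrate` once while the user stays silent, then pass each incoming batch of frames through `frames_to_transcribe` and forward only the surviving frames for transcription.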

Water Ripple Shader:

As the GPU on our VR headset platform is limited, it is important that shaders are as resource-friendly as possible. In the future, we could run tests to evaluate the cost of running the shader in the app and look for ways to optimise it further. We could also expand the customisability of the shader, giving developers more options to tweak, such as the number of ripples.

Flocking Behaviour Script:

If we had more time to work on this project, there are a few things we could add to the flocking behaviour script. Firstly, we could add a few more customisation options to create more variation within the behaviour in real time, making the TEND-VR world more engaging. Secondly, we would love to add more variation between the flocking behaviour patterns of different types of animal. For example, birds flock differently from fish, and although the difference is small, implementing it would increase the allure of the TEND-VR world.