System Design

Speech-to-Text Mood Questionnaire

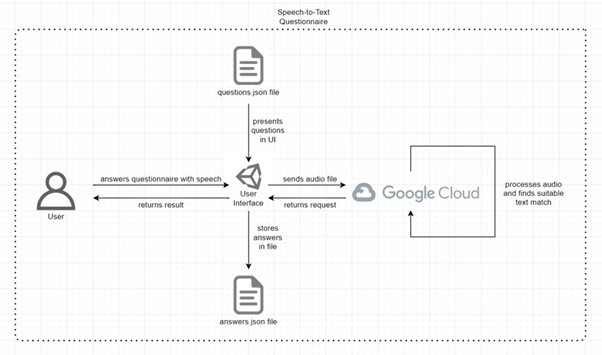

The diagram above shows how the components of this part of the VR application communicate with each other. The user is presented with a simple interface for completing the clinically derived mood questionnaire requested by the project partners. Questions are loaded from a questions.json file in the project's QuestionData folder, and the user's answers are written to an answers.json file once the questionnaire is finished.
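The load/save flow can be sketched as follows. This is not the project's Unity code, and the contents of questions.json are not described here, so the schema is an assumption: a hypothetical list of objects with `id`, `text` and `options` fields, with answers.json mapping each question ID to the chosen option. Python is used only for brevity.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical schema for QuestionData/questions.json (an assumption, not the project's file):
# [{"id": 1, "text": "...", "options": ["Not at all", "Sometimes", "Often"]}, ...]


def load_questions(data_dir: Path) -> list[dict]:
    """Read the list of questions from questions.json in the given folder."""
    with open(data_dir / "questions.json", encoding="utf-8") as f:
        return json.load(f)


def save_answers(data_dir: Path, answers: dict[int, str]) -> Path:
    """Write the finished questionnaire to answers.json.

    JSON object keys must be strings, so question IDs are converted with str().
    """
    path = data_dir / "answers.json"
    with open(path, "w", encoding="utf-8") as f:
        json.dump({str(qid): choice for qid, choice in answers.items()}, f, indent=2)
    return path


if __name__ == "__main__":
    # Demo in a throwaway folder standing in for QuestionData.
    with tempfile.TemporaryDirectory() as tmp:
        data_dir = Path(tmp)
        sample = [{"id": 1, "text": "How often have you felt low?",
                   "options": ["Not at all", "Sometimes", "Often"]}]
        (data_dir / "questions.json").write_text(json.dumps(sample), encoding="utf-8")

        questions = load_questions(data_dir)
        out = save_answers(data_dir, {questions[0]["id"]: questions[0]["options"][1]})
        print(out.read_text(encoding="utf-8"))
```

Writing answers only once at the end, as the text describes, keeps a half-finished questionnaire from leaving a partial answers.json behind.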

If the user chooses to answer by voice, the audio recorded from their microphone is sent as an audio file to the Google Cloud Speech-to-Text API, which returns a transcription that is then processed in Unity. Otherwise, the user can navigate to their chosen answer with the controller and select it directly; in this case the API is not contacted.
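As a rough sketch of the data exchanged with the API, the Python below builds a request body for the Speech-to-Text v1 `speech:recognize` REST method and extracts the transcript from its JSON response. It deliberately stops short of the HTTP call and authentication. The LINEAR16 encoding, 16 kHz sample rate and `en-US` language code are assumptions, and `match_option` is a hypothetical, deliberately naive helper showing one way a transcript could be mapped onto an answer choice; none of these are taken from the project.

```python
import base64
import json
from typing import Optional

# Synchronous recognition endpoint of the Speech-to-Text v1 REST API.
RECOGNIZE_URL = "https://speech.googleapis.com/v1/speech:recognize"


def build_recognize_request(audio_bytes: bytes,
                            sample_rate_hz: int = 16000,
                            language_code: str = "en-US") -> dict:
    """Build the JSON body for a speech:recognize request.

    Assumes 16-bit linear PCM audio (LINEAR16); the real settings may differ.
    """
    return {
        "config": {
            "encoding": "LINEAR16",
            "sampleRateHertz": sample_rate_hz,
            "languageCode": language_code,
        },
        # Audio sent inline in the JSON body must be base64-encoded.
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }


def top_transcript(response: dict) -> str:
    """Join the most likely alternative of each result into one string."""
    parts = [r["alternatives"][0]["transcript"]
             for r in response.get("results", [])
             if r.get("alternatives")]
    return " ".join(p.strip() for p in parts).strip()


def match_option(transcript: str, options: list[str]) -> Optional[str]:
    """Hypothetical helper: return the longest option contained in the transcript.

    Checking longer options first stops a short option from shadowing a longer
    one that contains it. Returns None when nothing matches, in which case the
    user can still select an answer with the controller.
    """
    spoken = transcript.lower()
    for option in sorted(options, key=len, reverse=True):
        if option.lower() in spoken:
            return option
    return None


if __name__ == "__main__":
    body = build_recognize_request(b"\x00\x00\x01\x00")
    # In the real flow this body would be POSTed to RECOGNIZE_URL with credentials.
    print(RECOGNIZE_URL)
    print(json.dumps(body, indent=2))

    fake_response = {"results": [{"alternatives": [{"transcript": "I'd say sometimes"}]}]}
    text = top_transcript(fake_response)
    print(text, "->", match_option(text, ["Not at all", "Sometimes", "Often"]))
```

Substring matching is only a placeholder; a real implementation would need to handle synonyms, misrecognitions and confirmation of the selected answer.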