Implementation

Technologies

The project was developed primarily in Unity using C#. It also uses the Google Cloud Speech-to-Text API and the Newtonsoft Json.NET package.

Mood Questionnaire Menu System

The GameManager script saves, loads and runs the assessment. First, the loadJSONQuestions method reads the Questions.json file and uses Newtonsoft, a JSON framework, to deserialise the JSON string into a List of Question objects, each of which holds the text of one question.

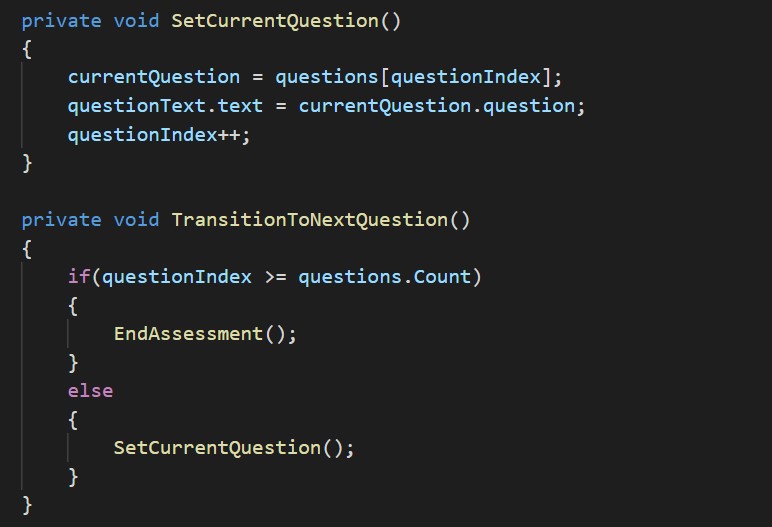

Next, the TransitionToNextQuestion method sets currentQuestion to the first question in the questions List. The questionText, a Unity serialized field, is set to the currentQuestion's question text, so the scene displays the new question once the previous one has been answered. The questionIndex is then incremented by 1 to keep track of the number of questions answered. When the user selects an answer, it is stored in the currentQuestion object's answer variable. Once the questionIndex is greater than the number of questions in the assessment, the EndAssessment method is called, which shows the end screen and calculates the user's final score as the sum of all answers.
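The question flow described above can be sketched without any Unity dependencies. This is a minimal illustration, not the project's GameManager: the `AssessmentFlow` class, the `SubmitAnswer` method and the property names on `Question` are assumptions made for the example, and the real script also updates the on-screen text.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for the Question objects described above.
public class Question
{
    public string Text { get; set; } = "";
    public int Answer { get; set; }
}

// Unity-free sketch of the question flow (names are illustrative).
public class AssessmentFlow
{
    private readonly List<Question> questions;
    private int questionIndex;   // number of questions presented so far

    public Question CurrentQuestion { get; private set; }
    public bool Finished { get; private set; }
    public int FinalScore { get; private set; }

    public AssessmentFlow(List<Question> questions)
    {
        this.questions = questions;
        TransitionToNextQuestion();   // present the first question
    }

    // Stores the answer on the current question, then moves on.
    public void SubmitAnswer(int answer)
    {
        if (Finished) return;
        CurrentQuestion.Answer = answer;
        TransitionToNextQuestion();
    }

    private void TransitionToNextQuestion()
    {
        if (questionIndex >= questions.Count)
        {
            EndAssessment();
            return;
        }
        CurrentQuestion = questions[questionIndex];
        questionIndex++;
    }

    // The final score is the sum of all stored answers.
    private void EndAssessment()
    {
        Finished = true;
        FinalScore = questions.Sum(q => q.Answer);
    }
}
```

Keeping the flow in a plain class like this would also make it possible to test the scoring logic outside the Unity editor.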

The user's answers to each question are saved to the Answers.json file. Newtonsoft serialises the questions List into a JSON string, which is written to the file.
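The load and save steps in this section could look roughly like the sketch below. It requires the Newtonsoft.Json package and uses its `JsonConvert.DeserializeObject<T>` and `JsonConvert.SerializeObject` calls. The `QuestionStore` class and the `Text` and `Answer` property names are assumptions; the actual layout of Questions.json and Answers.json is not shown in this report.

```csharp
using System.Collections.Generic;
using System.IO;
using Newtonsoft.Json;

// Hypothetical shape of a question; the real JSON field names may differ.
public class Question
{
    public string Text { get; set; } = "";
    public int Answer { get; set; }
}

public static class QuestionStore
{
    // Reads a JSON array of questions from disk into a List<Question>.
    public static List<Question> Load(string path)
    {
        string json = File.ReadAllText(path);
        return JsonConvert.DeserializeObject<List<Question>>(json) ?? new List<Question>();
    }

    // Serialises the questions (including their answers) and writes them to disk.
    public static void Save(string path, List<Question> questions)
    {
        string json = JsonConvert.SerializeObject(questions, Formatting.Indented);
        File.WriteAllText(path, json);
    }
}
```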

Voice Input to Text

The speech-to-text system, implemented primarily in the GSManager C# script, connects Unity to Google Cloud’s Speech-to-Text API. In this application it lets the user verbally complete a clinically derived mood questionnaire known as GAD-7. The goal set by the project partners at Tend-VR was for the application’s users to be immersed in a much more user-friendly environment.

Using an API key provided by the Google Cloud platform, our VR application sends audio on the request stream and receives the corresponding recognition results on the response stream in real time. The questions shown to the user are read from a .json file that currently contains the GAD-7 questionnaire. Our client can edit this .json file to add or change questions, or reuse the canvas elsewhere. Using a specialised C# script in Unity together with the API's speech adaptation feature, we made the API favour the specific words we expect the user to say during the questionnaire. After the user completes the questionnaire, their score is displayed on-screen and their answers are logged and saved in a .json file.

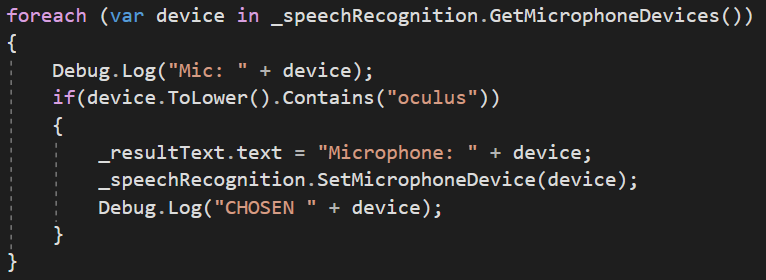

As soon as the mood questionnaire is launched, the application selects the microphone used to record the user’s speech. The project partners specified that the Oculus headset microphone was to be used, so we researched how to identify it on both Quest 1 and Quest 2 headsets, whether connected to a PC or running as a standalone device. In every case the device name contains the substring “oculus”, so this is what is checked before selecting the microphone.

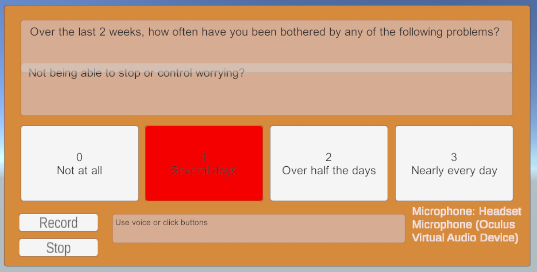
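As a rough illustration of the substring check, the helper below picks a device from a list of names. In Unity the names would typically come from `Microphone.devices`; here they are passed in as a plain array so the sketch runs on its own. The `MicrophoneSelector` class is hypothetical, and the case-insensitive comparison is an assumption rather than something confirmed by the project code.

```csharp
using System;
using System.Linq;

public static class MicrophoneSelector
{
    // Returns the first device whose name contains "oculus"
    // (ignoring case), or null when no such device is present.
    public static string PickOculusDevice(string[] deviceNames)
    {
        return deviceNames.FirstOrDefault(
            name => name.IndexOf("oculus", StringComparison.OrdinalIgnoreCase) >= 0);
    }
}
```

Returning null when nothing matches leaves the caller free to fall back to a default microphone or show an error.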

For the purposes of debugging, the name of the microphone in use is displayed in the bottom right of the user interface.

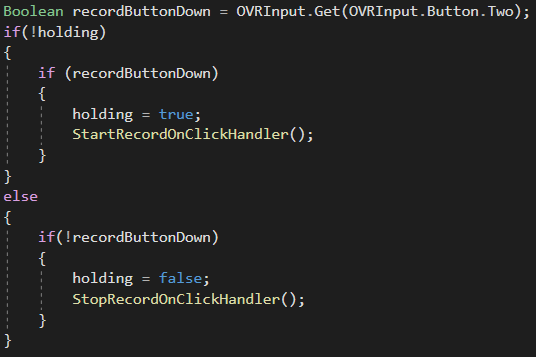

Within the Update method of the GSManager C# script, the application checks every frame whether the user wants to begin recording their voice. A Boolean variable (holding) tracks whether the record button on the controller is being held down, so that the “start record” and “stop record” handlers are called at the right times.

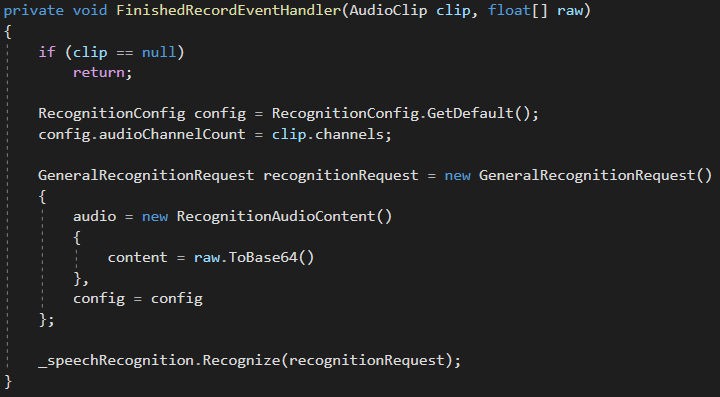

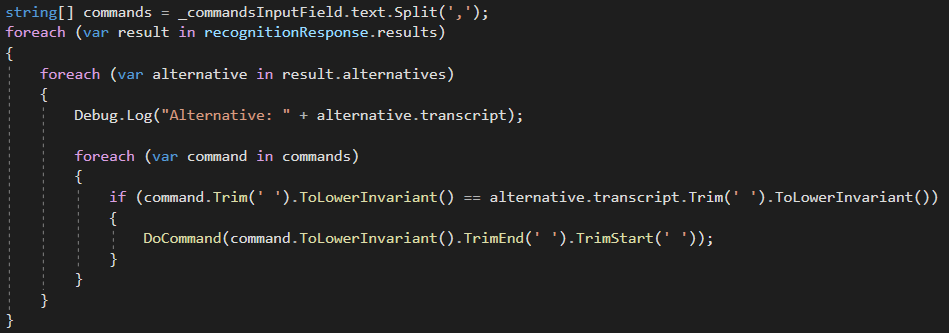
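The role of the holding flag can be shown in isolation. The sketch below assumes a hypothetical `RecordButtonTracker` whose `Tick` method is called once per frame with the current button state; the event names mirror the handlers mentioned above but are otherwise illustrative.

```csharp
using System;

public class RecordButtonTracker
{
    private bool holding;   // true while the record button is held down

    public event Action StartRecord;
    public event Action StopRecord;

    // Call once per frame with the current state of the record button.
    public void Tick(bool buttonDown)
    {
        if (buttonDown && !holding)
        {
            // Button has just been pressed: begin recording once.
            holding = true;
            StartRecord?.Invoke();
        }
        else if (!buttonDown && holding)
        {
            // Button has just been released: stop recording once.
            holding = false;
            StopRecord?.Invoke();
        }
    }
}
```

Because the flag only changes on a press or release, each handler fires once per press rather than on every frame the button stays down.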

Once the recording has finished successfully, a recognition request is created and sent to Google Cloud’s Speech-to-Text API, which converts the audio to text using neural network models and returns the transcription. The helper tool is used here to set the encoding scheme of the audio supplied by the user, the sample rate of the audio, the language code, the profanity filter and so on.

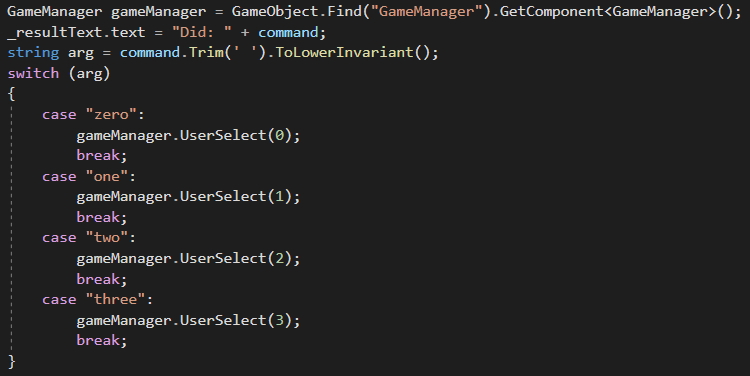

Assuming the recording has been processed successfully, the Speech-to-Text API returns a list of possible transcriptions of what the user said. Each candidate is compared against the elements of the commands array, a string array that currently holds zero, one, two and three, and the best match is output and processed. The project partners can customise this array if they wish to adapt it for other uses.

Once the speech has been transcribed, a simple switch statement selects the option chosen by the user. For confirmation, the chosen answer is shown in the bottom right of the user interface.
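One simple way to compare the returned candidates against the commands array is sketched below. The `CommandMatcher` class and the word-splitting rule are assumptions made for the example; the project's actual comparison may differ.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class CommandMatcher
{
    // The accepted spoken answers, as listed in the text.
    public static readonly string[] Commands = { "zero", "one", "two", "three" };

    // Walks the candidate transcriptions in the order given and returns the
    // first command that appears as a whole word in one of them, or null.
    public static string FindBestMatch(IEnumerable<string> alternatives)
    {
        foreach (string transcript in alternatives)
        {
            string[] words = transcript.ToLowerInvariant()
                .Split(new[] { ' ', '.', ',', '!', '?' }, StringSplitOptions.RemoveEmptyEntries);

            string match = Commands.FirstOrDefault(c => words.Contains(c));
            if (match != null) return match;
        }
        return null;
    }
}
```

Matching on whole words avoids treating a candidate such as "someone" as the command "one".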

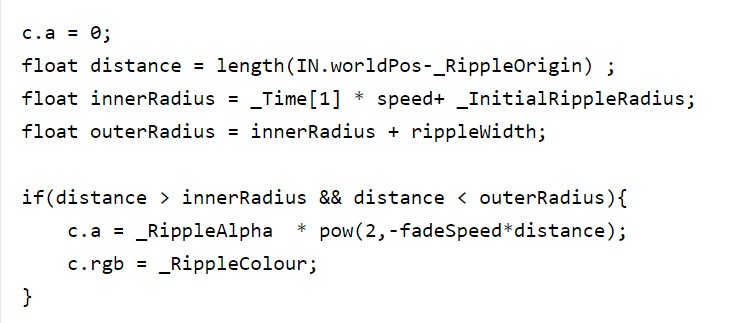
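The switch statement mentioned above might look like the following sketch. The `AnswerMapper` class is hypothetical, and returning -1 for an unrecognised word is an assumption chosen for the example.

```csharp
public static class AnswerMapper
{
    // Maps a recognised word to its numeric answer;
    // returns -1 for anything that is not a known option.
    public static int ToScore(string command)
    {
        switch (command)
        {
            case "zero": return 0;
            case "one": return 1;
            case "two": return 2;
            case "three": return 3;
            default: return -1;
        }
    }
}
```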

Water Surface Ripple Shader

The water surface ripple shader is a Standard Surface Shader from Unity, written in Unity’s ShaderLab language. The shader takes a 2D UV mapping as input and outputs a SurfaceOutput structure, which describes how the surface should be displayed in the game world.

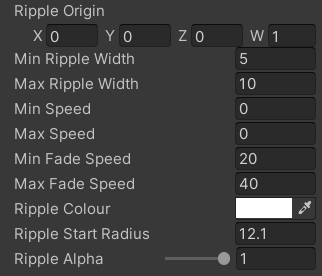

The developer can change the following parameters of the ripple shader to tailor its appearance:

- World position of ripple origin

- Width of ripple

- Speed of ripple

- Fade speed of ripple

- Colour of ripple

- Initial start radius of ripple

- Alpha (transparency) of ripple

The shader calculates the distance between the worldPos of each pixel on the texture and the _RippleOrigin. An innerRadius and outerRadius are defined which are the bounds of the ripple. The innerRadius is calculated using the _Time (time since the start of the scene), the ripple speed and the initial ripple radius, which makes the ripple’s radius increase as time passes.

The inner and outer radius act as bounds for the ripple: any pixel whose distance from the ripple origin lies between them is made visible and set to the _RippleColour, while the c.a (alpha) value of any pixel outside the ripple is set to 0, making it transparent. The alpha of the ripple itself is set to an exponential that decreases as the distance increases, eventually reaching 0. This makes the ripple fade as it grows outwards from the ripple origin.

The speed, fade speed and width are each randomly generated between a minimum and a maximum value. This is done using a noise texture, a texture in which every pixel has a random RGB value. The random values are obtained by looking up pixel values in the noise texture based on the world position of the ripple origin, so changing the world position of the ripple changes the speed, fade speed and width to new random values between their minimum and maximum.
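To make the ripple bounds and fade concrete, here is a CPU-side sketch of the per-pixel calculation written in C# rather than shader code. The exact formulas are assumptions: the inner radius is taken as start radius plus time multiplied by speed, the outer radius as inner radius plus width, and the fade as a decaying exponential of the distance. The `RippleMath` class and its parameter names are illustrative only.

```csharp
using System;

public static class RippleMath
{
    // Returns the alpha for a pixel at the given distance from the ripple origin.
    // Pixels outside the band [innerRadius, outerRadius] are fully transparent.
    public static float RippleAlpha(float distance, float time, float startRadius,
                                    float speed, float width, float fadeSpeed)
    {
        // The band grows outwards as time passes.
        float innerRadius = startRadius + time * speed;
        float outerRadius = innerRadius + width;

        if (distance < innerRadius || distance > outerRadius)
        {
            return 0f;
        }

        // Alpha decays exponentially with distance, so the ripple fades as it spreads.
        return (float)Math.Exp(-fadeSpeed * distance);
    }
}
```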

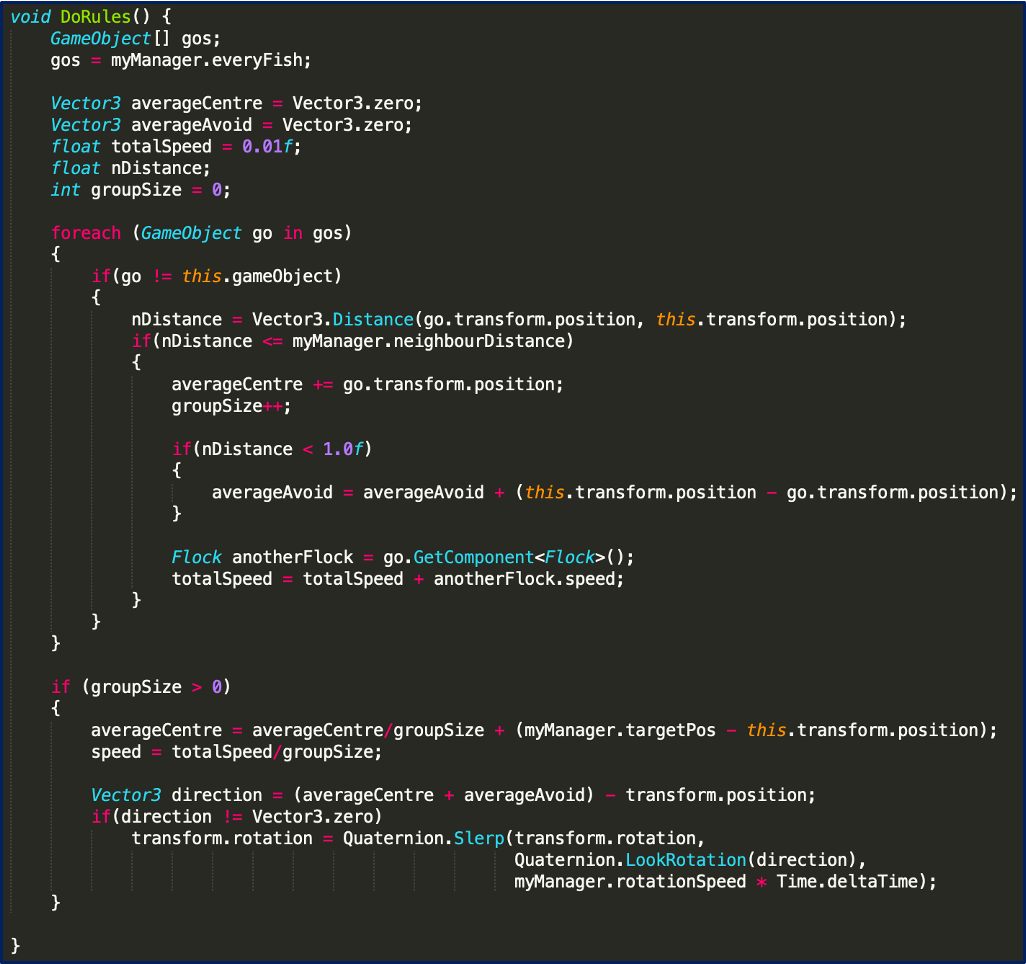

Flocking Behaviour Script

Two scripts were written for the flocking behaviour: the Flock manager script and the Flock script. The Flock manager script sets all the variables of a particular flock and instantiates all the fish, whereas the Flock script is attached to the fish Prefab. Essentially, the Flock script is attached to any model you create and deals with the actual flocking rules.

Flocking behaviour is dictated by three simple rules: Separation, Alignment and Cohesion, so we needed a function that could calculate and apply these to each animal in the world. Below is the function we came up with.

This is the ‘DoRules’ method within the ‘flock.cs’ script. Here is how it works:

The first line creates an array, ‘gos’ (short for game objects), that holds all the fish in the current flock. These are the fish whose average position and average heading we want to find, and they are the candidates for being neighbours.

- ‘averageCentre’ holds the average centre of the group. It is initially set to 0.

- ‘averageAvoid’ holds the average avoidance vector. It is not just one neighbour we are trying to avoid, it’s everybody. This is also initially set to 0.

- ‘totalSpeed’ is the global speed of the group or the average speed that the group is moving.

- ‘nDistance’ is the neighbour distance. It holds the distance between the current fish and another fish in the flock.

- ‘groupSize’ counts how many fish in the flock are in our group.

We use a FOREACH loop to go through each fish in the flock. We check that the current fish (‘go’, standing for game object) is not the fish running the ‘DoRules’ code at that moment, as there is no need to calculate a fish’s data relative to itself (e.g. the distance to itself).

We then work out the distance to the neighbour using Vector3: the distance between the position of the game object from our array and our current fish’s position in the world.

If that distance is less than or equal to the ‘neighbourDistance’, that fish becomes a fish of interest. If it is a fish of interest then:

- We add that fish’s position to ‘averageCentre’.

- We also increase the group size by one.

The final value for ‘averageCentre’ is calculated after the loop, where the sum of the game objects’ positions is divided by the ‘groupsize’ to find the average position of the group.

Following that, we have an IF statement to test whether the neighbour distance is less than a much smaller value. This is how close we can be to another fish before we consider avoiding it.

If the distance is less than this hard-coded value, we add to the ‘averageAvoid’ vector. What is added is the vector pointing away from the other fish, which is our current position minus the position we want to get away from.

We then get the Flock script attached to the fish we are currently looking at with ‘Flock anotherFlock = go.GetComponent

By the time we finish the FOREACH loop we have looped through every fish in the flock and examined it. If a fish is considered a close neighbour, we find its position, its avoidance vector and its speed and add them to the running totals.

Underneath the FOREACH loop, we have an IF statement which asks if the ‘groupsize’ is bigger than 0. The ‘groupsize’ will only be 0 if the fish we are looking at has nobody around it, in which case no flocking rules are applied to it. If it is influenced by another fish (i.e. the ‘groupsize’ is bigger than 0), we find the average centre of the group and the average speed.

- The final value for ‘averageCentre’ is calculated by dividing the sum of the game objects’ positions by the ‘groupsize’ to find the average position of the group. We also make this centre move towards the goal position by adding the vector to our goal (the goal’s position minus the current fish’s position).

- The speed of the fish is set to the average speed of the group, which is the sum of all the neighbouring fish’s speeds divided by the ‘groupsize’.

With all that information we can work out the direction that the fish needs to travel in. Its direction vector is calculated by adding the ‘averageCentre’ vector and the ‘averageAvoid’ vector and subtracting our current position. If the result is zero then we are already facing in the right direction; otherwise we need to rotate the fish.

We set ‘transform.rotation’ to turn the fish slowly towards the direction it needs to face (according to our set rotation speed). The fish will not snap around quickly because we use Slerp to create a smooth rotation. Quaternions represent the rotations, and Slerp spherically interpolates between them.

Lastly, this ‘DoRules’ method is called within the Update method. However, I only call it some of the time (in this case 20% of the time), because this removes the load of constantly calculating the rules for every single fish (especially as the flock gets a lot bigger). Furthermore, applying the rules only some of the time yields unexpected and exciting results!
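The steps walked through above can be gathered into a single Unity-free sketch. It uses `System.Numerics.Vector3` instead of Unity's Vector3, and a hypothetical `Fish` class in place of the game objects and Flock components. The parameter names `goalPos` and `avoidDistance` are assumptions, and the real ‘DoRules’ method may differ in detail.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical stand-in for a fish game object with its Flock script.
public class Fish
{
    public Vector3 Position;
    public float Speed;
}

public static class FlockRules
{
    // Returns the direction the fish should steer towards and updates its speed.
    // Returns Vector3.Zero when the fish has no neighbours (no rules applied).
    public static Vector3 DoRules(Fish self, IReadOnlyList<Fish> flock, Vector3 goalPos,
                                  float neighbourDistance, float avoidDistance)
    {
        Vector3 averageCentre = Vector3.Zero;  // running sum of neighbour positions
        Vector3 averageAvoid = Vector3.Zero;   // running sum of "move away" vectors
        float totalSpeed = 0f;
        int groupSize = 0;

        foreach (Fish other in flock)
        {
            if (ReferenceEquals(other, self)) continue;  // skip ourselves

            float nDistance = Vector3.Distance(other.Position, self.Position);
            if (nDistance > neighbourDistance) continue; // not a fish of interest

            averageCentre += other.Position;
            groupSize++;

            // Too close: add the vector pointing away from the other fish.
            if (nDistance < avoidDistance)
            {
                averageAvoid += self.Position - other.Position;
            }

            totalSpeed += other.Speed;
        }

        if (groupSize == 0) return Vector3.Zero;

        // Average position of the group, nudged towards the goal.
        averageCentre = averageCentre / groupSize + (goalPos - self.Position);
        self.Speed = totalSpeed / groupSize;

        return averageCentre + averageAvoid - self.Position;
    }
}
```

A caller would then rotate the fish towards the returned direction only when it is non-zero.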
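Calling the rules on only a fraction of frames can be expressed as a simple random check. The sketch below uses `System.Random` so that it runs outside Unity; the `RuleThrottle` class and its seed parameter are assumptions made for the example.

```csharp
using System;

public class RuleThrottle
{
    private readonly Random random;
    private readonly int percentChance;

    public RuleThrottle(int percentChance, int seed)
    {
        this.percentChance = percentChance;
        random = new Random(seed);
    }

    // Returns true on roughly percentChance percent of calls.
    public bool ShouldApplyRules()
    {
        // Next(0, 100) yields an integer from 0 to 99 inclusive.
        return random.Next(0, 100) < percentChance;
    }
}
```

With a `percentChance` of 20, each fish would recalculate its flocking rules on about one frame in five and simply keep moving on its current heading the rest of the time.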