Evaluation

Summary of achievements

Achievement table

| Priority | Achievement | Status | Contributor |
|---|---|---|---|
| MUST | A federated architecture with local speech and personal data processing when in interactive mode | ✓ | Jeremy |
| MUST | Potential operation on a proof-of-concept low-power prototype device (e.g., Intel Core i3 NUC) | ✓ | Jeremy |
| MUST | A plugin system for administrators to install additional voice assistant skills | ✓ | Jeremy |
| SHOULD | A configuration generator progressive web app to help non-experts to develop plugins | ✓ | Akhere |
| MUST | An accessible index of installed voice assistant skills | ✓ | Jeremy |
| SHOULD | The capability for third-party plugins to interface with external APIs | ✓ | Jeremy |
| SHOULD | Integration with team 25’s project (FISE concierge) – providing a voice assistant interface to concierge web services within their Android app that is privacy-safe when run on a private local area network | ✓ | Jeremy |
| SHOULD | Integration with team 38’s project (FISE video conferencing) – providing voice assistant functionality for their ‘video conferencing lounge’ that is privacy-safe when run on a private local area network | ✓ | Jeremy |
| COULD | A ‘skills viewer’ web app to more visually inspect plugin skills installed on an Ask Bob server | ✓ | Akhere |
| COULD | A drag-and-drop-style interface to specify simple skills in the configuration generator web app | ✓ | Akhere |
| COULD | Multilingual support through the ability to use non-English language models | ✓ | Jeremy |

✓: 100% complete

List of known bugs

We have tested all our applications as thoroughly as we could and, at the time of writing, there are no known bugs in the voice assistant framework, the configuration generator web app or the skills viewer web app.

However, if Ask Bob is used with contradictory third-party plugins (for example, plugins that implement the same skill, have overlapping intent training examples or have stories that contradict skill definitions), it may fail to train or may classify intents incorrectly. Moreover, if plugin developers have not provided enough training examples for their intents, Ask Bob may also misclassify intents due to a lack of data.
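To illustrate the overlapping-examples failure mode, the sketch below flags any training example that is claimed by more than one (plugin, intent) pair before training begins. It assumes a hypothetical, simplified structure in which each plugin's config.json has already been loaded into a mapping of intent names to example utterances; the real config.json schema is richer, and this check is not part of Ask Bob itself.

```python
from collections import defaultdict


def find_overlapping_examples(
    plugins: dict[str, dict[str, list[str]]],
) -> dict[str, set[tuple[str, str]]]:
    """Return each normalised example claimed by more than one (plugin, intent) pair.

    `plugins` is a hypothetical simplified structure:
    plugin name -> intent name -> list of training examples.
    """
    owners: dict[str, set[tuple[str, str]]] = defaultdict(set)
    for plugin_name, intents in plugins.items():
        for intent_name, examples in intents.items():
            for example in examples:
                # Normalise case and whitespace so trivial variants collide.
                key = " ".join(example.lower().split())
                owners[key].add((plugin_name, intent_name))
    # Keep only examples that belong to two or more different intents.
    return {text: pairs for text, pairs in owners.items() if len(pairs) > 1}


plugins = {
    "weather": {"ask_weather": ["What's the weather like?", "Will it rain today?"]},
    "forecast": {"get_forecast": ["what's the weather  like?"]},
}
# Flags the weather question, which both plugins use for different intents.
print(find_overlapping_examples(plugins))
```

The same check also catches one plugin reusing an example across two of its own intents, since the pairs differ by intent name.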

Individual contribution table

| Role | Jeremy | Akhere |
|---|---|---|
| Client & inter-team liaison | 100% | 0% |
| Requirement analysis | 70% | 30% |
| Research | 60% | 40% |
| UI design | 0% | 100% |
| Programming | 50% | 50% |
| Testing | 50% | 50% |
| Weekly blogs | 75% | 25% |
| Report website | 50% | 50% |
| Video editing | 100% | 0% |
| Overall contribution | 60% | 40% |
| Main roles | Researcher, programmer and Ask Bob-FISE teams system integration coordinator | Web application designer, developer and tester |

N.B.: Akhere joined the team mid-way through the project, in January 2021. Felipe Jin Li contributed 50% to the HCI coursework (Jeremy contributed the other 50%) before leaving the team in January.

Critical evaluation

User interface and user experience of the web apps

The users who trialled the configuration generator and skills viewer web apps, including our friends in the other teams, found them intuitive and easy to use. We are pleased with our decision to use Material-UI components for the forms, which we have styled to be accessible and to have clear hover and focus effects. The forms’ input-specific, labelled error messages make it evident what each input is for and what input is acceptable. We therefore hope that both web apps will greatly improve the user experience of developing new plugins for Ask Bob and of inspecting the plugins installed on a particular server.

Furthermore, we were pleased that the skills viewer web app could be integrated as a component in the FISE Lounge web app to describe the FISE Concierge plugin skills available to users of the FISE Lounge application.

Functionality

Throughout the project, we have used Mozilla DeepSpeech’s pretrained English model for speech transcription. This was sufficient to produce a working proof-of-concept prototype on an Intel NUC device; however, the pretrained model has a key limitation: the data used to train it has a slight bias towards US male voices. In our testing, words spoken in our British English accents that sound particularly different from American English failed to be recognised correctly. Were the project developed further, for example for initial studies with our NHS partners, we would like to see a model trained on a greater diversity of accents, or at least a more UK-centric speech model, made available and used instead. For this reason, we look forward to the research of 4th year student Jiaxing Huang, who has been working on facilitating the training of tailored DeepSpeech models.

We are satisfied with the significant number of Rasa features we were able to use and expose to Ask Bob plugin developers, such as intents, responses, custom actions, spaCy entities, custom entities (with regular expressions and lookup tables), synonym entity extraction, stories and NLU fallback messages. We worked in tandem with the concierge team (25) to implement the features that would be most useful to them as plugin developers, which gave us important insights during development into how we could improve the experience of creating new plugins for Ask Bob.

Although Rasa had a steep learning curve at first, we greatly appreciate that it requires so little training data to produce NLU models, which suits operation on low-power devices. Occasionally, we have found that certain more complex intents require significantly more training examples to be classified correctly, owing to the large number of equivalent ways the same question may be asked in English. Our solution has been to remind plugin developers using the configuration generator web app to include as many training examples as they can for each intent, improving the accuracy of intent classification. We are also pleased that, with the appropriate DeepSpeech and spaCy models configured, Ask Bob can be made to work in languages other than English.
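The reminder to include plenty of training examples could also be backed by a simple automated check. The sketch below lists intents with fewer than a minimum number of distinct examples; it assumes a hypothetical intent-to-examples mapping rather than the real config.json schema, and the default threshold of 5 is an arbitrary illustrative value, not a figure taken from Rasa or Ask Bob.

```python
def sparse_intents(intents: dict[str, list[str]], minimum: int = 5) -> list[str]:
    """Return the names of intents with fewer than `minimum` distinct examples.

    `intents` is a hypothetical mapping of intent name -> training examples.
    The default threshold is illustrative only.
    """
    return sorted(
        name
        for name, examples in intents.items()
        # Count distinct, non-blank examples so duplicates don't inflate the total.
        if len({e.strip().lower() for e in examples if e.strip()}) < minimum
    )


intents = {
    "greet": ["hi", "hello", "hey", "good morning", "hiya"],
    "ask_time": ["what time is it?", "What time is it?"],
}
print(sparse_intents(intents))  # ['ask_time']
```

Counting distinct examples matters here: two case variants of the same sentence add little new information for intent classification.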

We are also content that the interfaces to the voice assistant – an interactive interface and a web server interface – make it easier to integrate Ask Bob into a whole host of different applications. This was demonstrated through our successful integration with the projects of the FISE Concierge (25) and FISE Lounge (38) teams. Moreover, Python developers are free to use our documented Python API as they wish, including to create more interfaces to the voice assistant or use Ask Bob components in their own projects.

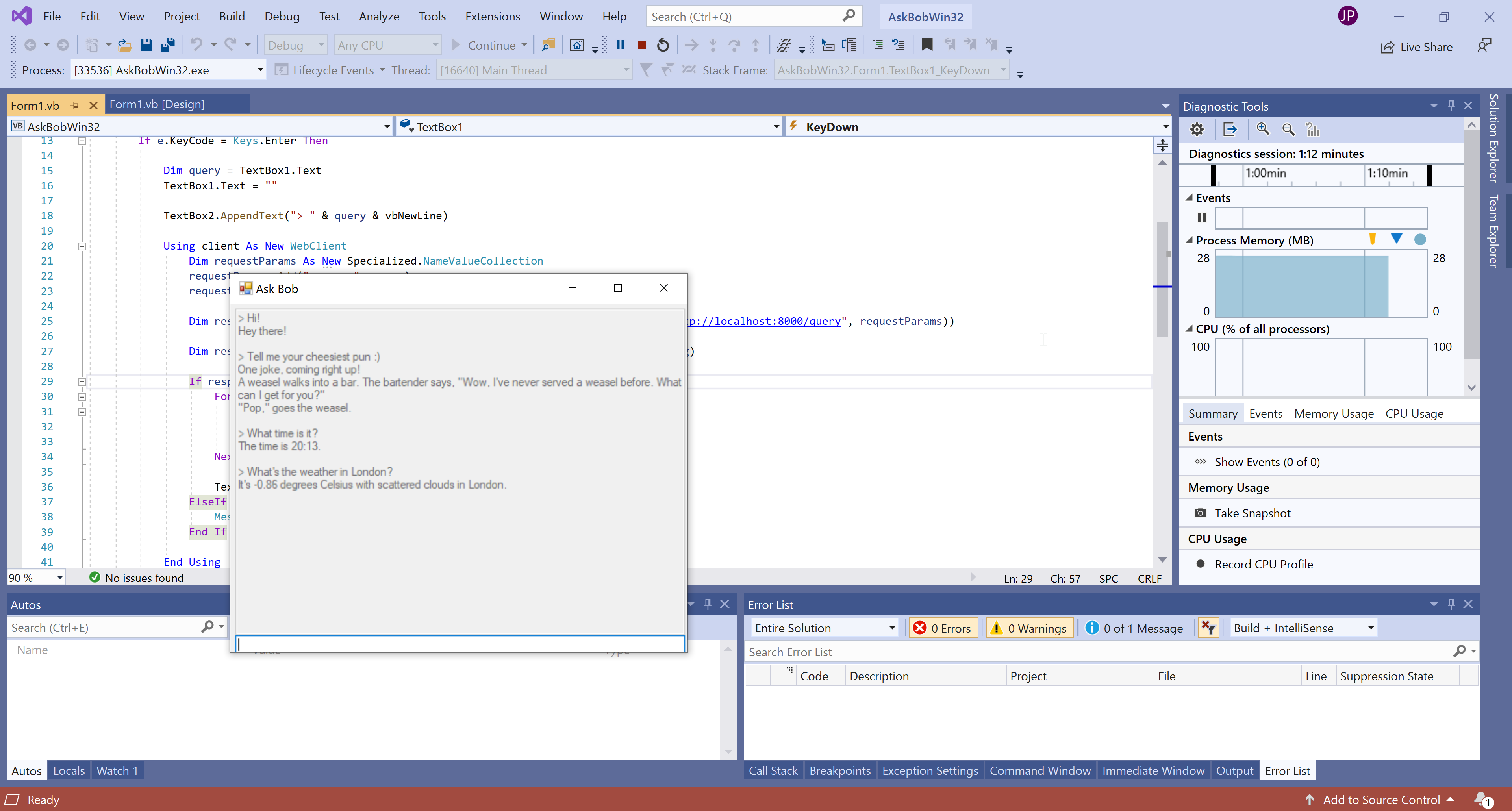

The HTTP web server interface also would allow third-party developers using completely different software stacks to integrate into Ask Bob as well via RESTful API HTTP requests. As a further example, below is a chatbot-style interface to the Ask Bob web server we made using Visual Basic.NET to demonstrate this.
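As a language-neutral illustration of this integration path, the Python sketch below builds and sends a JSON POST request using only the standard library. The endpoint path (/query), port (8000) and payload fields (message, sender) are hypothetical placeholders, not the documented Ask Bob API; the server's own documentation gives the real route and schema.

```python
import json
import urllib.request


def build_query_request(message: str, sender: str = "user",
                        base_url: str = "http://localhost:8000") -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a user's query.

    The "/query" path and the "message"/"sender" fields are hypothetical.
    """
    body = json.dumps({"message": message, "sender": sender}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/query",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_query(message: str, timeout: float = 10.0) -> dict:
    """Send the query to a running server and decode its JSON reply."""
    with urllib.request.urlopen(build_query_request(message), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Against a running server, `send_query("What's the weather like?")` would return the decoded reply; any language with an HTTP client and a JSON library, such as Visual Basic .NET, can make the equivalent call.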

The vast majority of options available to plugin developers in config.json files can be edited in the form-based configuration generator web app, including intents, synonyms, lookup tables, regexes, slots, entities, responses, stories, skills and additional plugin metadata, such as a plugin name, description and author. Moreover, plugin developers can manipulate these in the web app without needing to know the syntax of config.json files. Our validation means that exported config.json files typically train successfully in the Ask Bob setup process. Storing this data locally in the browser while developers edit configurations further improves the user experience, as the data persists even if the browser window is accidentally closed. In our assessment, the configuration generator web app makes creating config.json files for plugins easier and more efficient.
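One example of a validation rule that helps exported configurations train successfully is checking that every story only references intents and responses defined elsewhere in the configuration. The sketch below assumes a hypothetical simplified configuration with "intents", "responses" and "stories" keys, where each story step names either an intent or a response; it is neither the actual config.json schema nor the web app’s own validation code, which runs in the browser.

```python
def undefined_story_references(config: dict) -> list[str]:
    """Return error messages for story steps that reference unknown names.

    Assumes a hypothetical schema:
      {"intents": {name: [...]}, "responses": {name: [...]},
       "stories": {story_name: [{"intent": name} or {"response": name}, ...]}}
    """
    intents = set(config.get("intents", {}))
    responses = set(config.get("responses", {}))
    errors = []
    for story_name, steps in config.get("stories", {}).items():
        for index, step in enumerate(steps):
            if "intent" in step and step["intent"] not in intents:
                errors.append(f"{story_name}[{index}]: unknown intent '{step['intent']}'")
            if "response" in step and step["response"] not in responses:
                errors.append(f"{story_name}[{index}]: unknown response '{step['response']}'")
    return errors


config = {
    "intents": {"greet": ["hello"]},
    "responses": {"utter_greet": ["Hi there!"]},
    "stories": {
        "greeting": [{"intent": "greet"}, {"response": "utter_greet"}],
        "farewell": [{"intent": "goodbye"}, {"response": "utter_goodbye"}],
    },
}
for error in undefined_story_references(config):
    print(error)  # reports both steps of the "farewell" story
```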

We also hope that the skills viewer web app will be of use to system administrators testing out multiple Ask Bob plugins.

Stability

The Ask Bob voice assistant has been running as a server in Docker on an Azure virtual private server as part of the FISE Lounge team’s deployment, and it did not crash once during two weeks of uptime and frequent use. In our assessment, it is therefore reasonably stable on machines with sufficient computational resources.

Similarly, the configuration generator and skills viewer are lightweight web applications, and we have not encountered any stability issues, such as freezing or crashing, in the browser.

Efficiency

As demonstrated in the videos on our home page, there is a slight delay between asking a query and hearing a response while Ask Bob transcribes the speech, generates a response to the query and synthesises speech, relying on three machine learning models in turn. We consider this delay reasonable for a proof-of-concept prototype voice assistant device.
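Because the three stages run one after another, the delay a user perceives is roughly the sum of the three stages’ processing times. A simple way to see which stage dominates is to time each one; the sketch below does this with `time.perf_counter` around three stand-in functions, which are hypothetical placeholders for the transcription, response and synthesis components rather than Ask Bob’s actual API.

```python
import time
from typing import Callable


def timed_pipeline(
    audio: bytes, stages: list[tuple[str, Callable[[object], object]]]
) -> tuple[object, dict[str, float]]:
    """Run each stage on the previous stage's output, recording seconds per stage."""
    timings: dict[str, float] = {}
    data: object = audio
    for name, stage in stages:
        start = time.perf_counter()
        data = stage(data)
        timings[name] = time.perf_counter() - start
    return data, timings


# Hypothetical stand-ins for the three machine learning models.
def transcribe(audio: bytes) -> str:
    return "what time is it"


def respond(text: str) -> str:
    return "It is three o'clock."


def synthesise(text: str) -> bytes:
    return text.encode("utf-8")  # pretend these bytes are synthesised audio


output, timings = timed_pipeline(
    b"\x00\x01", [("transcribe", transcribe), ("respond", respond), ("synthesise", synthesise)]
)
for name, seconds in timings.items():
    print(f"{name}: {seconds * 1000:.3f} ms")
print(f"total: {sum(timings.values()) * 1000:.3f} ms")
```

With real models plugged in, the per-stage breakdown shows where optimisation effort (for example, a smaller speech model) would most reduce the perceived delay.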

We have also found that running Ask Bob within a Docker container on Windows, rather than installing it natively, often gives a significant performance improvement.

Compatibility

Given we have been working with both machine learning and audio, some atypical Windows or Ubuntu Linux desktop machines may face compatibility issues related to, for example, audio and sound drivers. Some older or lower-power x86-64 processors lack support for AVX or AVX2 instructions, which the prebuilt TensorFlow binaries are compiled to use, so machines with these processors may need an alternative method of installing our TensorFlow dependency.
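On Linux, one quick way to check for these instructions before installing TensorFlow is to look for the `avx` and `avx2` flags in `/proc/cpuinfo`. The sketch below parses that file’s format; it is Linux-specific (on Windows, a tool such as Sysinternals Coreinfo reports the same features) and is not part of Ask Bob’s installation procedure.

```python
from pathlib import Path


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect the CPU feature flags listed in /proc/cpuinfo-formatted text."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # x86 kernels list features on lines like "flags : fpu vme ... avx avx2".
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def avx_support(cpuinfo_text: str) -> dict[str, bool]:
    """Report whether the AVX and AVX2 flags are present (exact flag names)."""
    flags = cpu_flags(cpuinfo_text)
    return {"avx": "avx" in flags, "avx2": "avx2" in flags}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print(avx_support(path.read_text()))
    else:
        print("/proc/cpuinfo not found; this check only works on Linux")
```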

Moreover, although our libraries are all theoretically compatible with, and have been shown to run on, a Raspberry Pi 4 Model B, which is based on an ARM processor, we focused instead on deploying to an Intel NUC, so the installation procedure may have to be adapted slightly for the different architecture.

In general, however, provided the right drivers and dependencies are installed, Ask Bob should be compatible with most Linux desktop computers running Ubuntu and, by following our deployment guide for Windows, with most Windows 10 desktop computers with 64-bit Intel Core i3/5/7/9 or 2015+ AMD processors.

The configuration generator and skills viewer progressive web apps are usable on any of these machines, as well as on tablets and potentially other mobile devices, given their responsive design.

Maintainability

The Ask Bob voice assistant code is heavily modularised into different components. We followed the software engineering principle of programming to interfaces, conceptually at least, even within Python’s duck-typing paradigm. Dependencies, such as the utterance service supplied to the speech transcriber, are passed in via constructor injection to further minimise coupling. This helped us to write significantly more maintainable code and gives us the freedom to replace many dependencies outright in the future should we wish to do so. Furthermore, the code is thoroughly documented and covered by automated unit and integration tests, increasing the likelihood that any problems are identified and fixed quickly.
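The pattern can be illustrated with a small sketch. Python’s `typing.Protocol` lets the “interface” be written down explicitly while still relying on structural (duck) typing, and the transcriber receives its utterance service through its constructor, so a test can pass in a fake. All class and method names here (`UtteranceService`, `get_utterances`, `Transcriber`, `FakeUtteranceService`) are hypothetical and do not mirror Ask Bob’s actual classes.

```python
from typing import Callable, Iterable, Iterator, Protocol


class UtteranceService(Protocol):
    """Anything that can yield chunks of recorded audio."""

    def get_utterances(self) -> Iterable[bytes]: ...


class Transcriber:
    """Turns audio utterances into text using injected collaborators."""

    def __init__(self, utterance_service: UtteranceService,
                 model: Callable[[bytes], str]) -> None:
        # Dependencies arrive via the constructor rather than being created here,
        # so they can be swapped (e.g. a different audio source or speech model).
        self._utterances = utterance_service
        self._model = model

    def transcriptions(self) -> Iterator[str]:
        for audio in self._utterances.get_utterances():
            yield self._model(audio)


class FakeUtteranceService:
    """Test double: satisfies UtteranceService structurally, without inheriting it."""

    def __init__(self, chunks: list[bytes]) -> None:
        self._chunks = chunks

    def get_utterances(self) -> Iterable[bytes]:
        return iter(self._chunks)


transcriber = Transcriber(FakeUtteranceService([b"hello", b"bob"]),
                          model=lambda audio: audio.decode("ascii"))
print(list(transcriber.transcriptions()))  # ['hello', 'bob']
```

Because `FakeUtteranceService` never inherits from the protocol, this is still duck typing at runtime; the protocol only documents the expected shape and lets a static type checker verify it.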

The code for each web app is organised into multiple folders:

- a src/components folder containing all the modular components they use, which may be reused independently in other web apps;
- a src/actions folder containing code relating to action creators; and
- a src/pages folder exporting the pages of each app.

Additionally, the configuration generator web app exports reducer functions from src/reducers, which may also be reused outside the app in other projects.

This separation of concerns makes errors easier to spot and makes it easier to change parts of the codebase without affecting other components.

Project management

We used Jira for project management, particularly during the development phase, as we found it made it easier to keep track of our tasks and their progress. After the team shrank to two members, Jeremy and Akhere stayed in constant communication, which we found effective in driving the project forward. We also used the Azure DevOps platform of the FISE Lounge team (38) to track our progress integrating the projects, which we found very useful as well.

Future work

Had we had more time for the additional research, development and testing it would have entailed, we would have loved to develop an AskBob Marketplace for skills plugins: an Electron.js-based desktop app that could automate downloading (and potentially updating) plugins and retraining new Ask Bob builds.

Third-party Ask Bob plugins, such as those created by team 25 (concierge web services), are currently stored in their own separate GitHub repositories; an AskBob Marketplace could provide a centralised registry of Ask Bob plugins that are free for anyone to use and extend. This could build on the Ask Bob skills viewer web app we have already made.

A future team could also expand the configuration generator web app to support creating Ask Bob plugin configurations by ingesting Voiceflow .vf files (if the format specification is ever published) or a similar dialogue definition format for conversion into the existing Ask Bob configuration format.

While the remit of our project was low-power desktop devices, and our technical choices reflect this, it would be interesting to see an Ask Bob-like project processing speech locally on wearable devices. Alternatively, in a care home setting with a voice-enabled Ask Bob server running on an Amazon Echo Show-style device, wearable devices could communicate with the Ask Bob server over the care home’s private wireless network.