System design

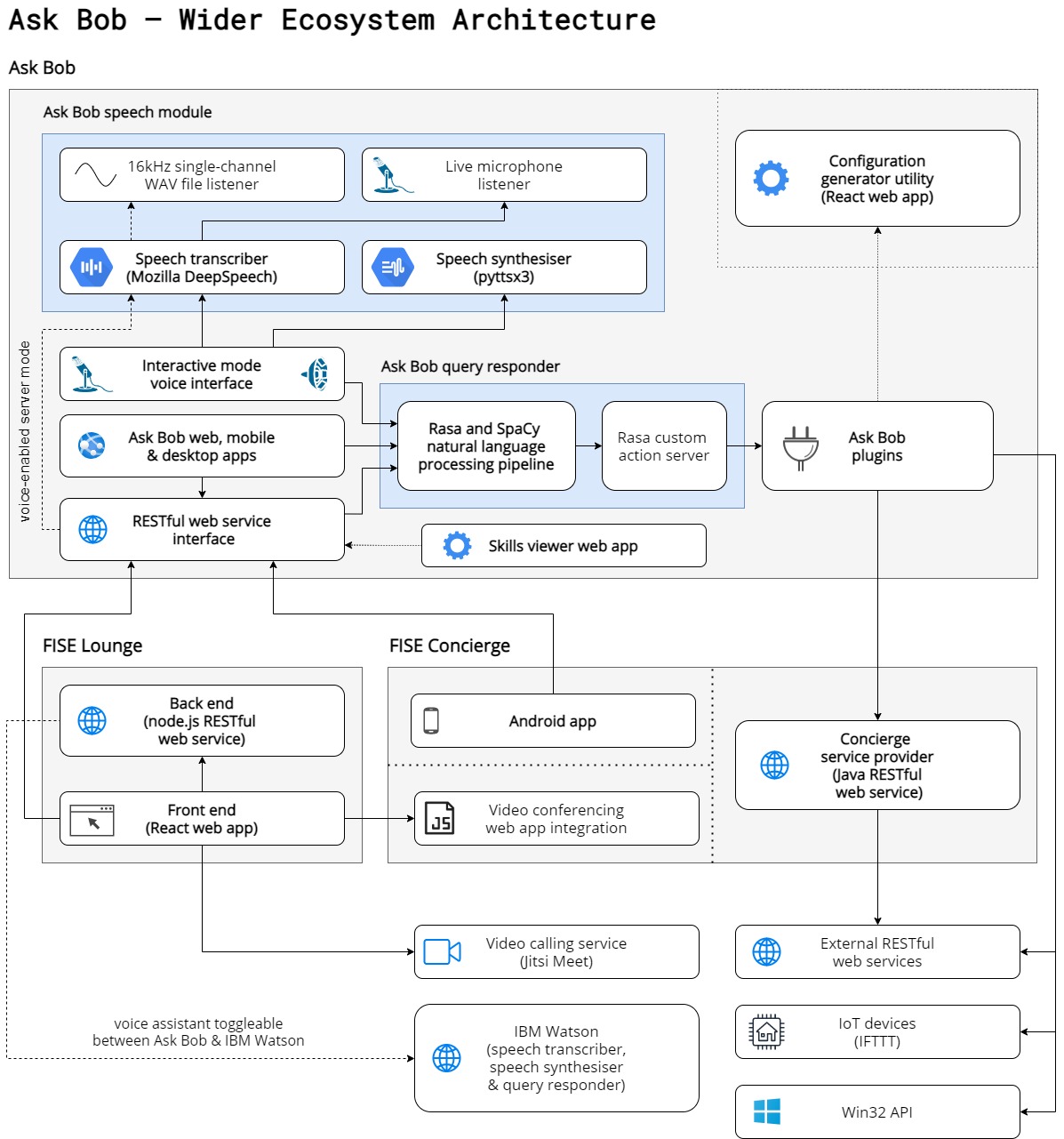

Wider Ask Bob ecosystem architecture

The Ask Bob voice assistant framework can be used standalone with a set of installed plugins, but it is also the principal component of a wider integrated ecosystem of IBM FISE projects, which also comprises the FISE Lounge and FISE Concierge projects.

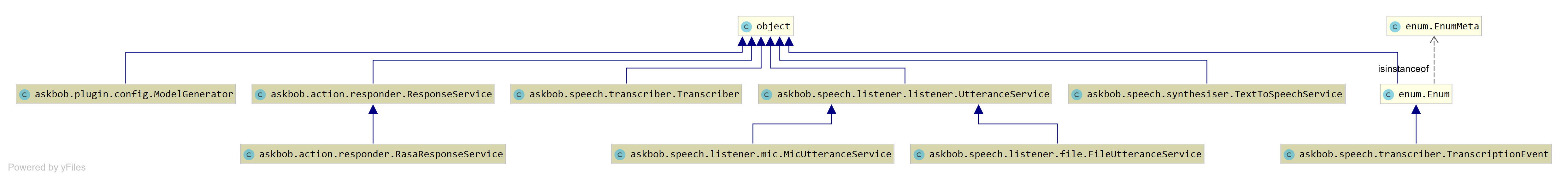

The following is a UML-style class diagram of the Ask Bob voice assistant framework code:

Because we coded Ask Bob largely to interfaces (conceptually, given Python's duck typing), each of our modules could be replaced with a different implementation that uses different dependencies within the same architectural framework.
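To illustrate what coding to an interface under duck typing can look like, here is a minimal sketch. The `Transcriber` protocol and both implementations are hypothetical names invented for this example; they are not taken from the Ask Bob code base.

```python
from typing import Protocol


class Transcriber(Protocol):
    """Hypothetical interface: anything with a matching transcribe() fits."""

    def transcribe(self, audio: bytes) -> str: ...


class EchoTranscriber:
    """Stand-in implementation; note it does not inherit from Transcriber."""

    def transcribe(self, audio: bytes) -> str:
        return f"<{len(audio)} bytes of audio>"


class UppercaseTranscriber:
    """A second, interchangeable implementation with different internals."""

    def transcribe(self, audio: bytes) -> str:
        return audio.decode("ascii", errors="replace").upper()


def handle_utterance(transcriber: Transcriber, audio: bytes) -> str:
    # The caller depends only on the shape of the method, not on a class.
    return transcriber.transcribe(audio)
```

Either class can be passed to `handle_utterance` without changing the caller, which is the property that would let one speech or query implementation be swapped for another.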

The voice assistant framework has two major modules: the speech module and the query response module. Unlike cloud-based voice assistants, both modules perform all speech and data processing locally.

Ask Bob speech module

The speech module is formed of the following:

- audio listeners that ingest audio from different input sources (e.g. 16 kHz single-channel WAV files, live microphone input) and identify speech using the WebRTC voice activity detector

- the speech transcriber based on Mozilla DeepSpeech

- the speech synthesiser based on pyttsx3

The speech module can function as its own independent component that is reusable by third-party developers in their own open-source projects.
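The listening step can be sketched roughly as follows: raw audio is cut into short fixed-length frames, each frame is classified as speech or non-speech, and runs of speech frames are grouped into utterances for transcription. This sketch uses only the standard library. The `is_loud` function is a toy stand-in for a real voice activity detector, and the 30 ms frame length, 16-bit sample width and amplitude threshold are assumptions made for the example.

```python
import struct
from typing import Callable, Iterator, List

SAMPLE_RATE = 16_000   # Hz, matching the 16 kHz input described above
SAMPLE_WIDTH = 2       # bytes per sample (16-bit PCM, an assumption)
FRAME_MS = 30          # frame length in milliseconds (an assumption)
FRAME_BYTES = SAMPLE_RATE * FRAME_MS // 1000 * SAMPLE_WIDTH  # 960 bytes


def frames(pcm: bytes) -> Iterator[bytes]:
    """Yield consecutive fixed-size frames, dropping a trailing partial frame."""
    for start in range(0, len(pcm) - FRAME_BYTES + 1, FRAME_BYTES):
        yield pcm[start:start + FRAME_BYTES]


def is_loud(frame: bytes, threshold: int = 500) -> bool:
    """Toy stand-in for a voice activity detector: a peak amplitude test."""
    return max(abs(s) for (s,) in struct.iter_unpack("<h", frame)) >= threshold


def utterances(
    pcm: bytes, is_speech: Callable[[bytes], bool] = is_loud
) -> List[bytes]:
    """Group runs of consecutive speech frames into utterances."""
    result: List[bytes] = []
    current: List[bytes] = []
    for frame in frames(pcm):
        if is_speech(frame):
            current.append(frame)
        elif current:
            # A non-speech frame ends the utterance in progress.
            result.append(b"".join(current))
            current = []
    if current:
        result.append(b"".join(current))
    return result
```

Because `is_speech` is a parameter, a real detector could be dropped in place of `is_loud` without touching the grouping logic; each returned utterance would then be handed to the transcriber.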

Ask Bob query response module

Internally, the query response module divides its responsibilities between two components: the natural language understanding pipeline, built on Rasa NLU and spaCy, which extracts intents and named entities from transcribed speech; and the Rasa custom action server, which runs in an isolated process and executes custom action code written with the Rasa SDK and, by extension, Ask Bob plugins. When the user triggers a skill intent, the query response module executes the actions registered for that plugin’s skill, including any custom action code.

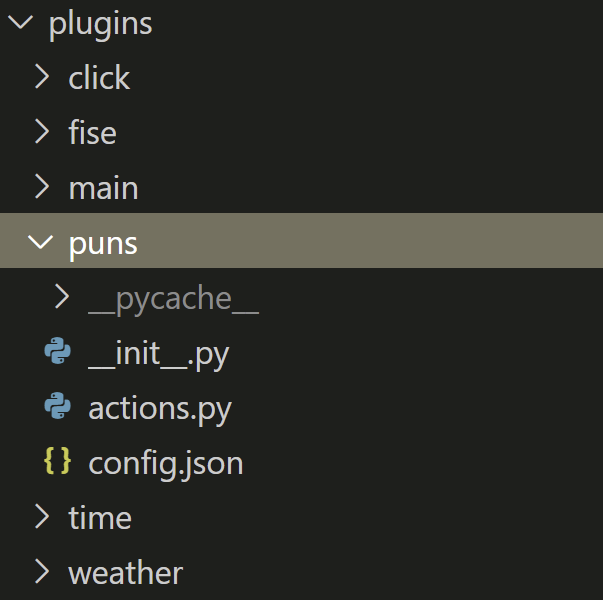

Ask Bob plugin architecture

Ask Bob voice assistant skills are triggered by user intents and then perform a sequence of actions. An action can be as simple as responding with a variant of a fixed response, or it can be a more complex custom action that runs arbitrary Python code written against the Rasa SDK and tagged with the Ask Bob plugin decorator. Similar skills are bundled together and componentised into Ask Bob plugins, which contain both the training data needed to train a Rasa model to recognise the plugin’s skill intents and any additional custom action code.
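The relationship between intents, skills and decorated custom actions can be sketched with a small registry. Everything here is hypothetical and simplified for illustration: `plugin_action`, `ACTIONS`, `SKILLS` and the naming scheme are invented for this example, and the real Ask Bob decorator and Rasa SDK action classes look different.

```python
import random
from typing import Callable, Dict, List

# Hypothetical registry; Ask Bob's real decorator and the Rasa SDK differ.
ACTIONS: Dict[str, Callable[[dict], str]] = {}


def plugin_action(plugin: str, name: str):
    """Register a function as a custom action under '<name>_<plugin>'."""
    def decorator(func: Callable[[dict], str]) -> Callable[[dict], str]:
        ACTIONS[f"{name}_{plugin}"] = func
        return func
    return decorator


@plugin_action("time", "tell_hour")
def tell_hour(entities: dict) -> str:
    # Custom action code: free to run arbitrary Python.
    return f"It is {entities.get('hour', 'an unknown hour')} o'clock."


# A skill maps an intent to a sequence of steps: fixed responses or actions.
SKILLS: Dict[str, List[dict]] = {
    "greet": [{"responses": ["Hello!", "Hi there!"]}],
    "ask_hour": [{"action": "tell_hour_time"}],
}


def respond(intent: str, entities: dict) -> List[str]:
    """Run every step registered for the triggered intent, in order."""
    replies = []
    for step in SKILLS.get(intent, []):
        if "action" in step:
            replies.append(ACTIONS[step["action"]](entities))
        else:
            # A fixed response: pick one of its variants.
            replies.append(random.choice(step["responses"]))
    return replies
```

Calling `respond("ask_hour", {"hour": 9})` runs the registered custom action, while `respond("greet", {})` returns one of the fixed response variants.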

Typically, plugin custom action code might send requests to external RESTful web service APIs; however, third-party developers could also create plugins that interface with Internet-of-Things devices through the If This Then That (IFTTT) service or potentially other APIs. As an example, our project repository contains a plugin that uses PyAutoGUI to simulate mouse clicks on Windows, tapping into the Win32 API.
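As a rough illustration of the kind of request a custom action might make, the sketch below builds (but does not send) an HTTP request to an IFTTT Webhooks trigger using only the standard library. The URL shape reflects the IFTTT Webhooks service as we understand it and should be checked against IFTTT's own documentation; the event name, key and `value1` payload are placeholders, and `build_ifttt_request` is a hypothetical helper rather than part of Ask Bob.

```python
import json
from urllib.parse import quote
from urllib.request import Request


def build_ifttt_request(event: str, key: str, value1: str = "") -> Request:
    """Build a POST request for an IFTTT Webhooks trigger (not sent here)."""
    # Assumed URL shape; event and key are escaped so they stay one path segment.
    url = (
        "https://maker.ifttt.com/trigger/"
        f"{quote(event, safe='')}/with/key/{quote(key, safe='')}"
    )
    body = json.dumps({"value1": value1}).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

A custom action could then pass the returned object to `urllib.request.urlopen` to fire the trigger; keeping construction separate from sending makes the request easy to inspect in tests.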

The FISE Concierge project of team 25 has implemented several such Ask Bob plugins using our framework:

- concierge app navigation

- concierge apps

- concierge communications

- concierge entertainment

- concierge finance

- concierge food

- concierge lookup

- concierge transport

- concierge utility

While some of these plugins are tailored specifically to their Android app, those marked in bold may be reused by other users of the Ask Bob voice assistant framework in their own setups. Furthermore, the FISE Lounge project of team 38 also has an integration plugin related specifically to their video conferencing front end.

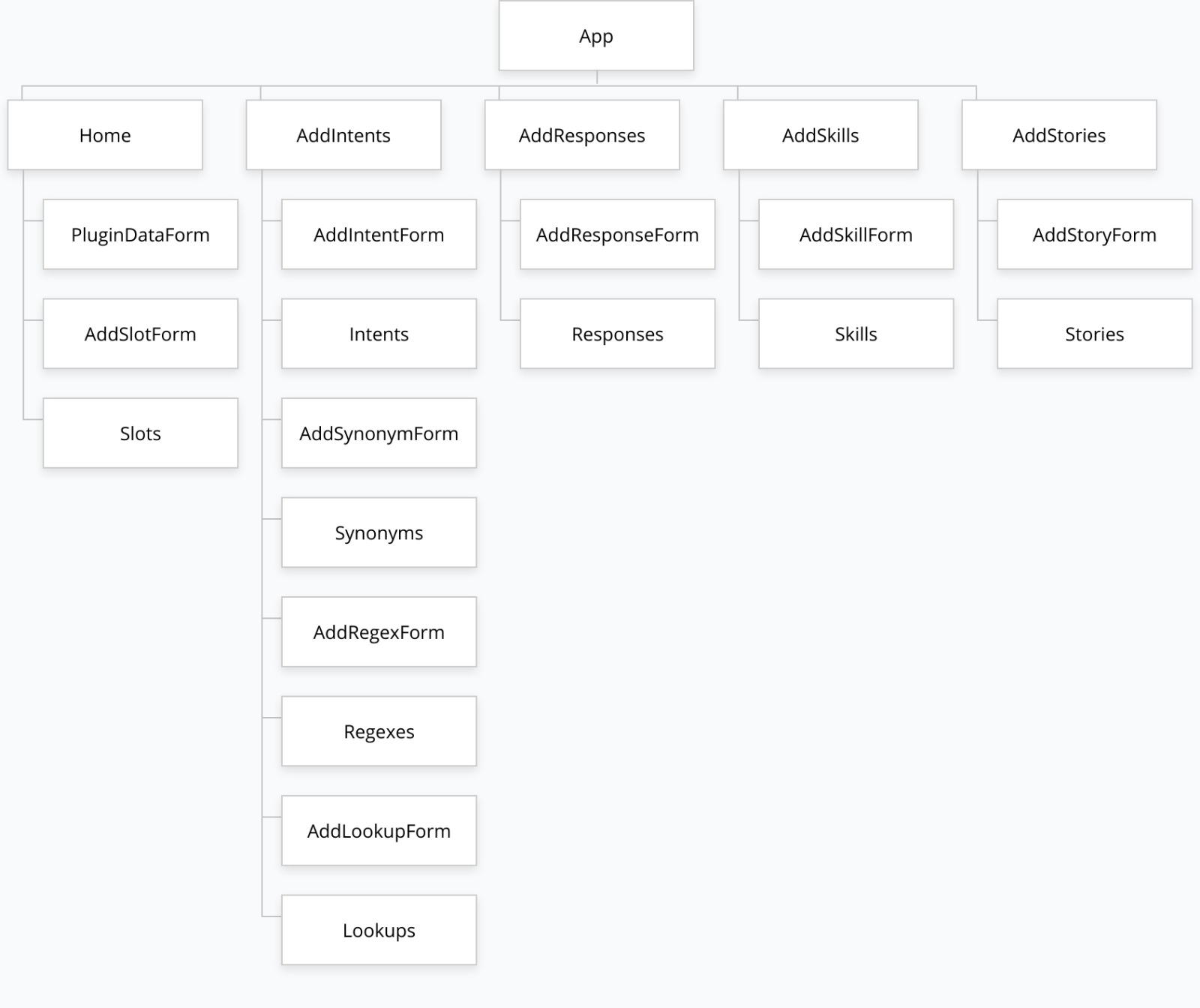

Ask Bob plugin configuration generator web app

Ask Bob is accompanied by a progressive React-based configuration generator web app that helps non-experts create plugin config.json files, which contain plugin metadata as well as the training data required during the Ask Bob setup process. These config.json files are exported from the configuration generator web app and included within the plugin folder, potentially alongside custom action code.

JavaScript module diagram

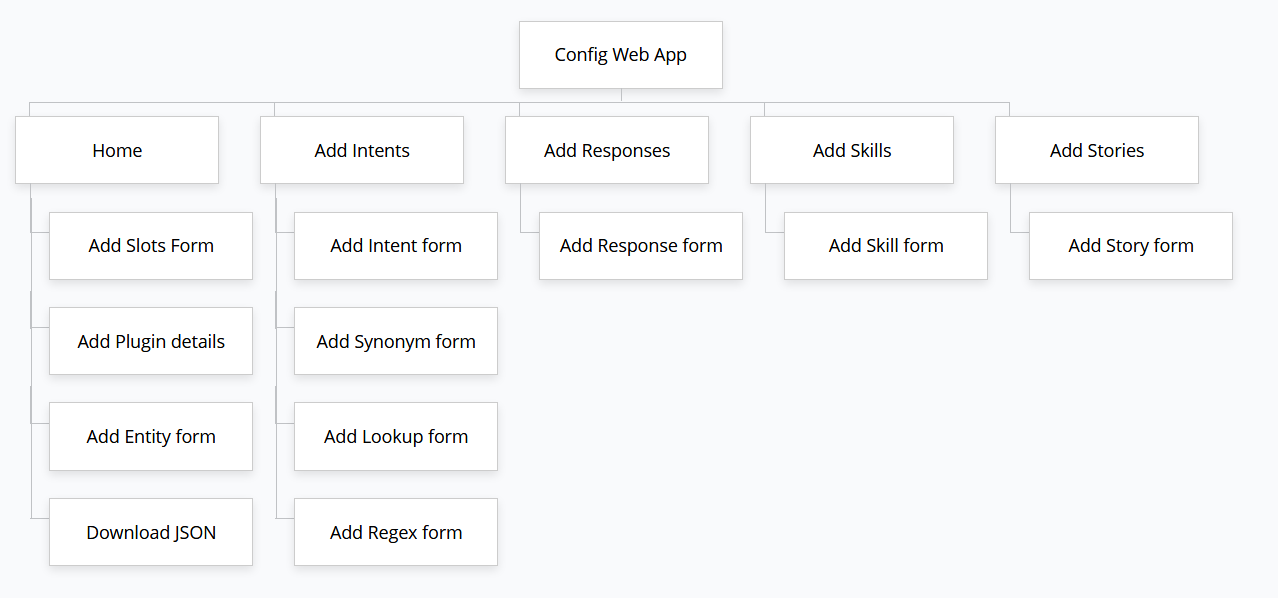

Site map

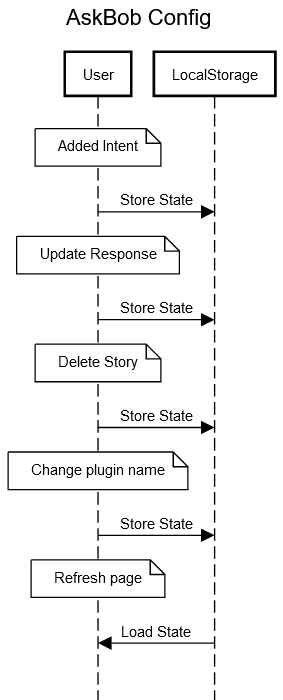

Local storage sequence diagram

Every time the user submits a form, deletes an item or edits the plugin details, the updated state of the application is stored in local storage. Examples of state changes include adding new intents to the list of intents, changing the plugin name and deleting a story.

Ask Bob interfaces

There are two main interfaces to the Ask Bob voice assistant framework:

- the interactive mode interface (where the user can speak directly to the voice assistant)

- the RESTful HTTP web service API (that users can query either with text or by uploading WAV files)

It is possible to run the Ask Bob server either in “voiceless” mode, where audio file upload is disabled (with the added benefit of not needing to download a DeepSpeech model or external scorer), or in “voice-enabled” mode, where audio file upload functionality is enabled.

The Ask Bob skills viewer web app reads data from the web service /skills endpoint to visualise the skills installed on properly configured Ask Bob servers.
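A client for the /skills endpoint could be as small as the following sketch. It assumes the endpoint answers a plain GET with a JSON body, which is not confirmed here; `fetch_skills`, `skills_url` and `fake_opener` are hypothetical helper names, and the payload returned by `fake_opener` is made up purely so that the example can run without a server.

```python
import io
import json
from typing import Any, Callable
from urllib.request import urlopen


def skills_url(base_url: str) -> str:
    """Join the server's base URL and the /skills path."""
    return base_url.rstrip("/") + "/skills"


def fetch_skills(base_url: str, opener: Callable[..., Any] = urlopen) -> Any:
    """GET <base_url>/skills and decode the body as JSON (assumed format)."""
    with opener(skills_url(base_url)) as response:
        return json.loads(response.read().decode("utf-8"))


def fake_opener(url: str) -> io.BytesIO:
    """Test double returning a made-up payload; the real schema may differ."""
    return io.BytesIO(b'{"example": true}')
```

Against a running server, `fetch_skills("http://localhost:8000")` would perform a real request (the host and port are placeholders); passing `opener=fake_opener` exercises the same code path offline.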

FISE project integration

The FISE Concierge project of team 25 sends requests with a textual form of their users’ queries to the web server interface from their Android app, whereas the FISE Lounge project of team 38 uploads 16 kHz single-channel WAV files to the Ask Bob server directly from their React web app front end. Both teams receive responses from the Ask Bob server in a standard format documented in our README and user manual.
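Since uploads are expected to be 16 kHz single-channel WAV files, a client might check a recording before sending it. The sketch below does this with Python's standard `wave` module. Both function names are hypothetical, and whether the Ask Bob server performs a similar check itself is not stated here.

```python
import io
import wave


def is_16khz_mono_wav(data: bytes) -> bool:
    """Return True if data parses as a WAV with one channel at 16 kHz."""
    try:
        with wave.open(io.BytesIO(data), "rb") as wav:
            return wav.getnchannels() == 1 and wav.getframerate() == 16_000
    except (wave.Error, EOFError):
        # Not a readable WAV file at all.
        return False


def make_wav(channels: int, rate: int, seconds: float = 0.1) -> bytes:
    """Build a silent 16-bit WAV in memory, for trying the check out."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)  # 16-bit samples
        wav.setframerate(rate)
        wav.writeframes(bytes(int(rate * seconds) * channels * 2))
    return buffer.getvalue()
```

For example, `is_16khz_mono_wav(make_wav(1, 16000))` passes the check, while a stereo or 44.1 kHz file does not and would need resampling or downmixing before upload.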

The FISE Concierge plugins include additional metadata in their responses for their Android app to allow them to perform app-specific actions, such as opening links, calling contacts and opening other apps installed on the Android device.

These custom JSON responses are also accessible to the FISE Lounge web app if it is configured to use an Ask Bob server that runs on a private local area network (to preserve privacy) and that also hosts the FISE Concierge plugins and Java web service back end. The FISE Lounge web app therefore contains some additional logic to handle FISE Concierge plugin responses specifically, adding functionality for recipes, jokes and air quality information, among other things. This completes the integration across the three FISE projects.

Note: the FISE Lounge web app allows users to toggle between using IBM Watson Assistant and Ask Bob for a more privacy-safe user experience on private local area networks.