Testing

Ask Bob voice assistant framework

Testing strategy

When testing the Ask Bob voice assistant, we primarily relied on white-box testing: we wrote unit tests for each component of the voice assistant, along with integration tests spanning major modules (such as the speech module) and the application as a whole. We aimed both to test our component interfaces comprehensively and to maximise our statement and branch coverage. The nature of the project posed several testing challenges, which we resolved using the tactics described below.

To test the speech module consistently and in a controlled manner, we committed multiple recorded audio clips to our repository and re-used them within our unit tests every time the test suite was run. This allowed us to detect and fix bugs in the transcription process in an automated way. For example, this is how we discovered a bug in which an audio buffer was not being cleared at the end of each speech utterance.
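A regression test for this kind of bug can be sketched as follows. This is a minimal illustration only: the `UtteranceBuffer` class and its methods are hypothetical stand-ins rather than Ask Bob's actual speech classes, and the short byte strings stand in for frames that a real test would read from the committed audio clips.

```python
class UtteranceBuffer:
    """Hypothetical stand-in for a listener-side audio buffer (not Ask Bob's real class)."""

    def __init__(self):
        self._frames = []

    def add_frame(self, frame: bytes) -> None:
        self._frames.append(frame)

    def end_utterance(self) -> bytes:
        """Return the audio collected for this utterance and reset the buffer."""
        audio = b"".join(self._frames)
        # Omitting this reset would leak audio into the next utterance.
        self._frames.clear()
        return audio


def test_buffer_is_cleared_between_utterances():
    buffer = UtteranceBuffer()
    for frame in (b"\x01\x02", b"\x03\x04"):
        buffer.add_frame(frame)
    first = buffer.end_utterance()

    # A second utterance must contain only its own frames.
    buffer.add_frame(b"\x05\x06")
    second = buffer.end_utterance()

    assert first == b"\x01\x02\x03\x04"
    assert second == b"\x05\x06"
```

Feeding two utterances through the same buffer is what exposes the fault: a test that processes only a single clip would pass even if the buffer were never reset.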

As the voice assistant’s approach to responding to users’ queries is based on machine learning using Rasa, which internally uses TensorFlow, the models produced by the training and setup process always vary slightly, even when built from the same training data. To mitigate this, we committed a simple Rasa model to our repository and used it across all our test cases to ensure reproducibility between test suite runs.

Furthermore, we also performed regression testing: the test suite would be re-run every time a new feature was added to the voice assistant to ensure any major code changes had not introduced breaking changes and software regressions.

We would have liked to use continuous testing as part of a continuous integration pipeline; however, some of our dependencies require system-level packages to be installed (e.g. portaudio), and properly testing our microphone listener component requires a microphone to be plugged in, which made automating this infeasible. As a compromise, we adopted a policy of re-running the full test suite before committing code changes to mimic this behaviour.

As part of our integration with the other IBM FISE teams (25 and 38), we also devised a testing strategy with team 25, who used Ask Bob to implement skills plugins interfacing with the Java-based back end of their concierge web services in order to build voice assistant support into their Android app. Here, we agreed on a black-box integration testing approach: launching Ask Bob in server mode and writing automated tests in their repository that asserted that the output from RESTful API calls matched what was expected. We did the same in our own repository for the aforementioned pretrained Rasa model shared across all our Ask Bob tests.
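The shape of such a black-box test can be sketched using only the Python standard library. Everything specific here is an assumption made for illustration: the `/query` path, the `message` request field and the `messages` response field are hypothetical and are not taken from Ask Bob's actual API, and a small stub server stands in for an Ask Bob instance running in server mode so that the sketch is self-contained.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubAssistantHandler(BaseHTTPRequestHandler):
    """Stand-in server; a real test would target a running Ask Bob instance."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length))
        # Hypothetical response shape, chosen purely for illustration.
        reply = {"query": request["message"], "messages": [{"text": "Hello!"}]}
        body = json.dumps(reply).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


def post_query(base_url, message):
    """POST a JSON query to the (hypothetical) /query endpoint."""
    request = urllib.request.Request(
        f"{base_url}/query",
        data=json.dumps({"message": message}).encode(),
        headers={"Content-Type": "application/json"},
    )
    # Bypass any system proxy settings for the local test server.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=5) as response:
        return response.status, json.loads(response.read())


def test_query_endpoint_returns_expected_reply():
    server = HTTPServer(("127.0.0.1", 0), StubAssistantHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        status, payload = post_query(f"http://127.0.0.1:{server.server_port}", "hello")
    finally:
        server.shutdown()
        server.server_close()
    assert status == 200
    assert payload["messages"][0]["text"] == "Hello!"
```

Because the test only inspects HTTP responses, it is independent of the server's implementation language, which is what makes this approach suitable for tests living in another team's repository.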

Unit and integration testing

We used the pytest Python testing framework to perform unit and integration testing to ensure that the voice assistant and its subcomponents exhibit the correct behaviour and meet their requirements. Unit testing allowed us to verify that each class operated as expected in isolation, while integration testing allowed us to verify that the different classes within the application were interoperating correctly.

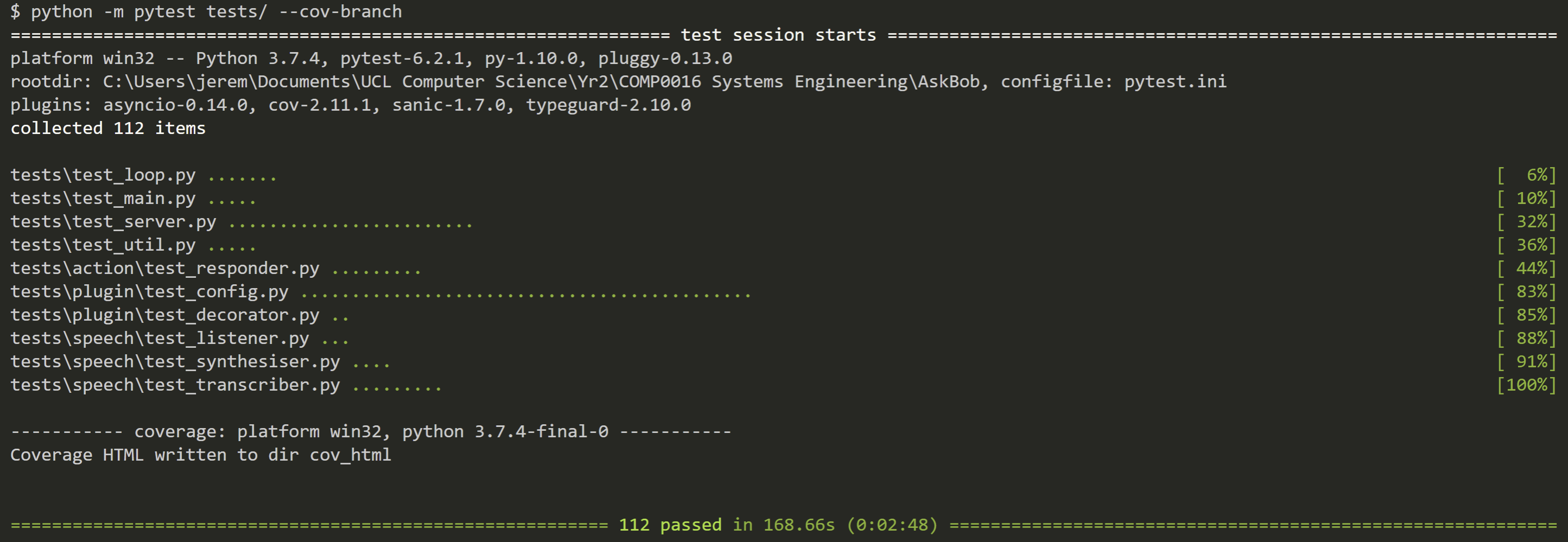

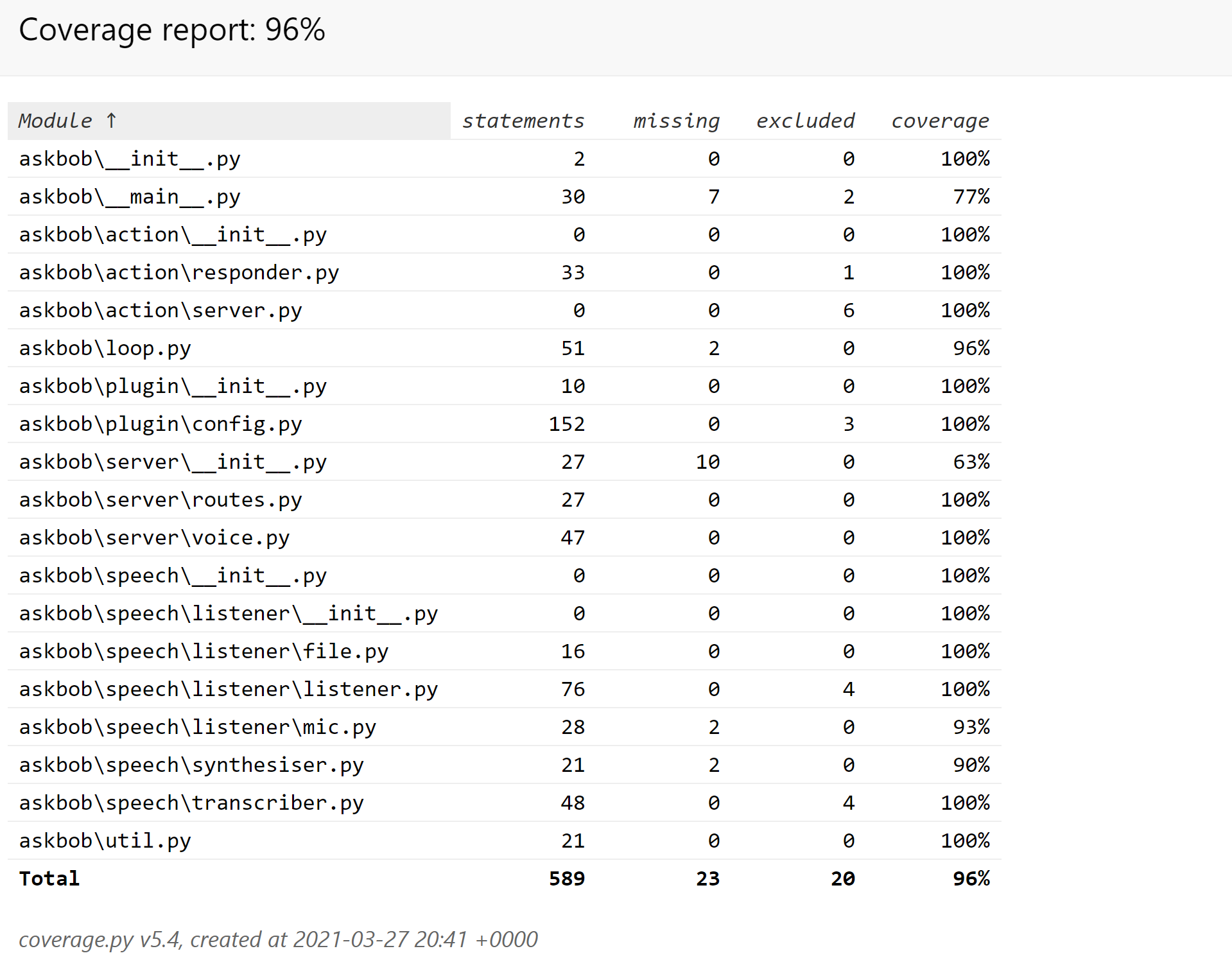

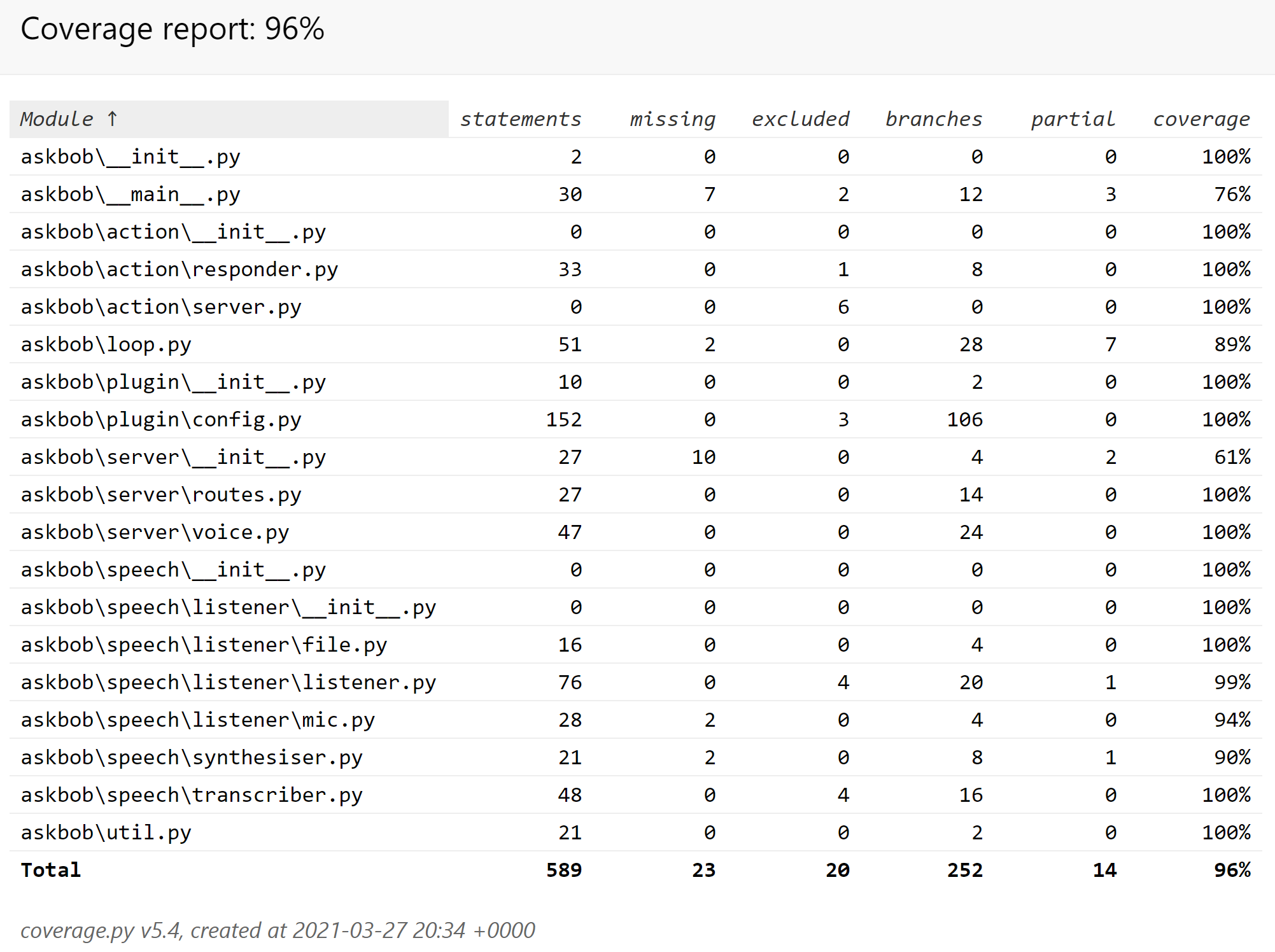

Overall, our test suite comprised 112 tests, achieving 96% statement coverage and 96% branch coverage of our application code.

We have made use of some of the more advanced pytest features available to us, such as the following:

- temporary files and folders, primarily within our plugin `config.json`-to-Rasa YAML translation module tests to simulate different configuration times, but also to test running Ask Bob with different runtime `config.ini` file values and to ensure speech clips are correctly output when the `--savewav` option is used;
- running tests inside an asyncio event loop to test voice assistant code written as asynchronous tasks;
- mocking the Python `sys` module using the built-in `unittest.mock` library to temporarily modify `sys.argv`, the command line interface (CLI) arguments used to run the application, to simulate the voice assistant being run with different CLI arguments;
- output redirection and capture to ensure the correct messages were being written to `stdout`; and
- logging capture to ensure expected errors and warnings are correctly issued.
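Two of these techniques, patching `sys.argv` and capturing `stdout`, can be sketched together as follows. This is an illustrative example rather than code from the Ask Bob test suite: the `main` function and its `--serve` and `--config` flags are hypothetical, and the standard library's `contextlib.redirect_stdout` is used for output capture here only to keep the sketch self-contained.

```python
import argparse
import contextlib
import io
import sys
from unittest import mock


def main():
    """Hypothetical entrypoint; these flags are illustrative, not Ask Bob's real CLI."""
    parser = argparse.ArgumentParser(prog="assistant")
    parser.add_argument("--serve", action="store_true")
    parser.add_argument("--config", default="config.ini")
    args = parser.parse_args()  # reads sys.argv[1:] by default
    mode = "server" if args.serve else "interactive"
    print(f"Starting in {mode} mode with {args.config}")
    return args


def test_main_in_server_mode():
    fake_argv = ["assistant", "--serve", "--config", "test.ini"]
    captured = io.StringIO()
    # sys.argv is restored automatically when the with-block exits.
    with mock.patch.object(sys, "argv", fake_argv), contextlib.redirect_stdout(captured):
        args = main()
    assert args.serve is True
    assert args.config == "test.ini"
    assert captured.getvalue() == "Starting in server mode with test.ini\n"
```

Within a pytest suite, the built-in `capsys` and `monkeypatch` fixtures offer equivalent functionality without the explicit context managers.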

| Test file | Summary of tests |
|---|---|
| tests/test_loop.py | Tests related to the Ask Bob main interactive loop |
| tests/test_main.py | Tests related to the main CLI application entrypoint (in `__main__.py`) |
| tests/test_server.py | Tests related to all of the Ask Bob HTTP server interface endpoints |
| tests/test_util.py | Tests related to the CLI argument parser |
| tests/action/test_responder.py | Tests related to the Rasa-based query responder using a pre-trained model provided in the repository |
| tests/plugin/test_config.py | Tests related to the Ask Bob plugin config.json file to the Rasa YAML training data format translator and model generator |
| tests/plugin/test_decorator.py | Tests related to the plugin decorator used to tag skills Ask Bob plugin code using the Rasa SDK |
| tests/speech/test_listener.py | Tests related to the “utterance service” listener components |
| tests/speech/test_synthesiser.py | Tests related to the pyttsx3-based text-to-speech submodule |
| tests/speech/test_transcriber.py | Tests related to speech transcription, including integration tests with listeners |

We have included the statement and branch coverage reports in this report website available at the following links:

From our testing, we can expect with a reasonable degree of certainty that the majority of voice assistant components behave as expected, both for developers and end users running the voice assistant in interactive mode and for other developers using Ask Bob as a server to query from their own applications. This is consistent with our experience of integrating Ask Bob into the projects of teams 25 and 38.

Configuration generator and skills viewer web apps

Testing strategy for web apps

We used React PropTypes to check that each component receives the props (properties passed to it) it expects and that each prop is of the correct type.

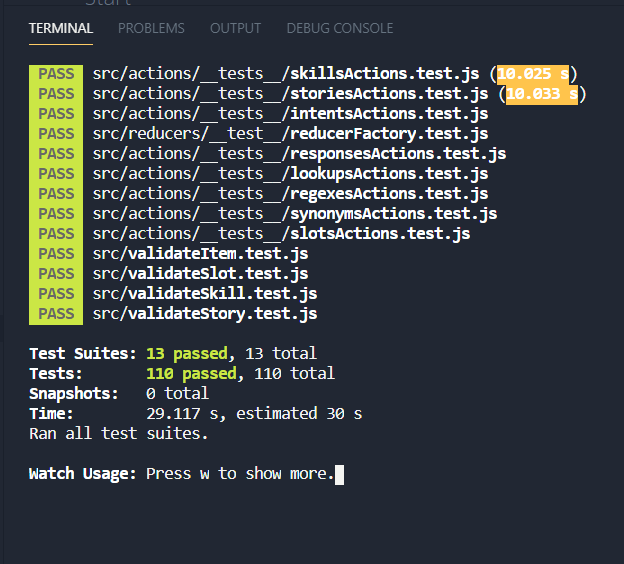

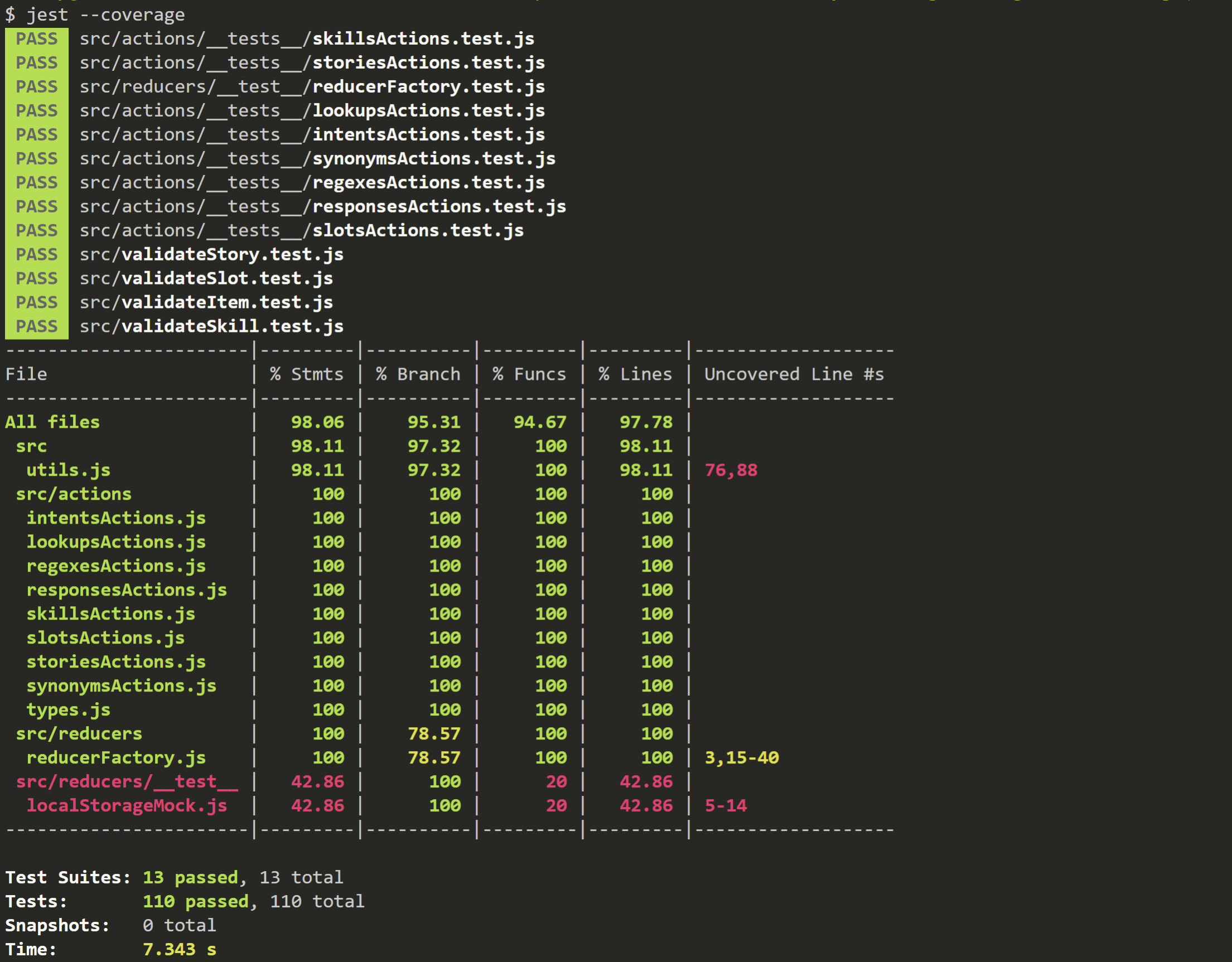

Jest is a JavaScript testing framework that allows us to easily write fully automated unit tests, which we used for testing our action creator, reducer and validation functions. The skills viewer web app also has tests for ensuring each component correctly renders the props given to it.

For the configuration generator web app, we wrote 110 tests covering, among other pieces of code, a reducer factory function. A reducer is a function that takes the current state and an action specifying how to change that state, and then returns the new state accordingly. The reducer factory makes reducers that accept add, delete, update, store and load actions, as well as ‘add’ mode and ‘edit’ mode actions for controlling whether a form is adding or editing an item.

Our tests ensure that the action creator functions return the correct action and that the reducer handles each action by returning the expected new state.

The tests for the user validation functions verify that the correct error message is returned when the user input is invalid and that no error message is returned when the correct input is entered. As the configuration generator web app is quite complex, providing users with proper error feedback about invalid input data is important.

The following table summarises the purpose and use of each test file:

| Tests | Purpose |
|---|---|
| skillsActions.test.js | Tests for ensuring skill action creators return actions of the correct type and payload |
| storiesActions.test.js | Tests for ensuring story action creators return actions of the correct type and payload |
| intentsActions.test.js | Tests for ensuring intent action creators return actions of the correct type and payload |
| reducerFactory.test.js | Tests for ensuring that the reducer factory can handle the appropriate actions |
| responsesActions.test.js | Tests for ensuring response action creators return actions of the correct type and payload |
| lookupsActions.test.js | Tests for ensuring lookup action creators return actions of the correct type and payload |
| regexesActions.test.js | Tests for ensuring regex action creators return actions of the correct type and payload |
| synonymsActions.test.js | Tests for ensuring synonym action creators return actions of the correct type and payload |
| slotsActions.test.js | Tests for ensuring slot action creators return actions of the correct type and payload |
| validateItem.test.js | Tests for ensuring that the correct error messages are returned when users enter invalid data into the intent, regular expression, response, synonym and lookup forms |
| validateSlot.test.js | Tests for ensuring that the correct error messages are returned when users enter data into the slot form |
| validateSkill.test.js | Tests for ensuring that the correct error messages are returned when users enter data into the ‘add skill’ form |
| validateStory.test.js | Tests for ensuring that the correct error messages are returned when users enter data into the ‘add story’ form |

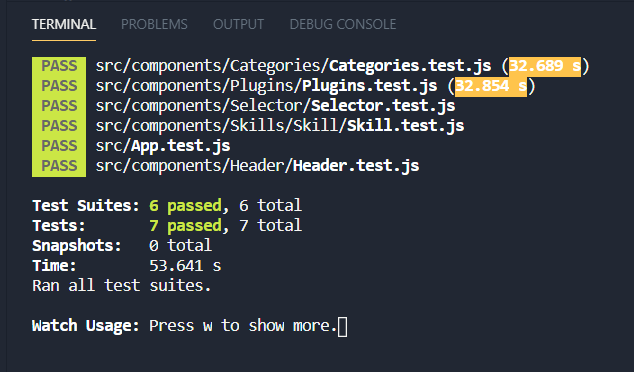

Skills viewer web app

Tests for the skills viewer web app focus more on ensuring that the correct information shows for each prop and that loading animations show before any data are fetched.

The following table summarises the tests used in the skills viewer web app:

| Tests | Purpose |
|---|---|
| Categories.test.js | Tests for ensuring the correct Categories information is shown on screen |
| Header.test.js | Tests for ensuring the Header component renders on the screen |
| Selector.test.js | Tests for ensuring the Selector component renders on the screen |
| Plugins.test.js | Tests for ensuring the correct Plugin information is shown on screen |
| Skill.test.js | Tests for ensuring the Skill component renders on the screen |
| App.test.js | Tests for ensuring the load animation shows before data is fetched |

Responsive design testing

Both web apps use Material-UI components that are responsive. Additional styling has been added to ensure each page fits on screens of all sizes to take devices of many different form factors into account. We used developer tools available in Google Chrome to test this.

We also used a tool called Lighthouse to test accessibility, performance and whether our web apps follow best practices, such as redirecting traffic to HTTPS. Lighthouse also allowed us to test whether our web apps would work well both online and offline.

User acceptance testing

When performing user acceptance testing, we reached out to our friends, as well as our partners in the FISE Concierge and FISE Lounge teams, for feedback. Users of the configuration generator web app found it intuitive and easy to use. When they entered invalid input, they found that the error helper text let them quickly understand what was wrong and correct it.

The clean styling with Material-UI was greatly appreciated, and users noted that the hover and focus animations of buttons helped them understand which elements they could interact with effectively. Users felt like they could quickly understand how to navigate the web app with its simple layout and top-mounted navigation bar, and that the consistent form structure across pages aided with creating a consistent feel.

With the skills viewer web app, users commented that they found the app easy to understand and use, given its intuitive interface, and they also appreciated the styling, especially the use of accordions for the plugins and categories.

The consistent use of design elements across the configuration generator and skills viewer web apps gave users the positive impression of an integrated design across the project.