In a digital age, being able to use a computer is a near necessity, so the barriers to entry should be as low as possible. People with many different needs must therefore have a way to control and interact with a computer when they cannot use, or do not feel comfortable using, the traditional keyboard and mouse.

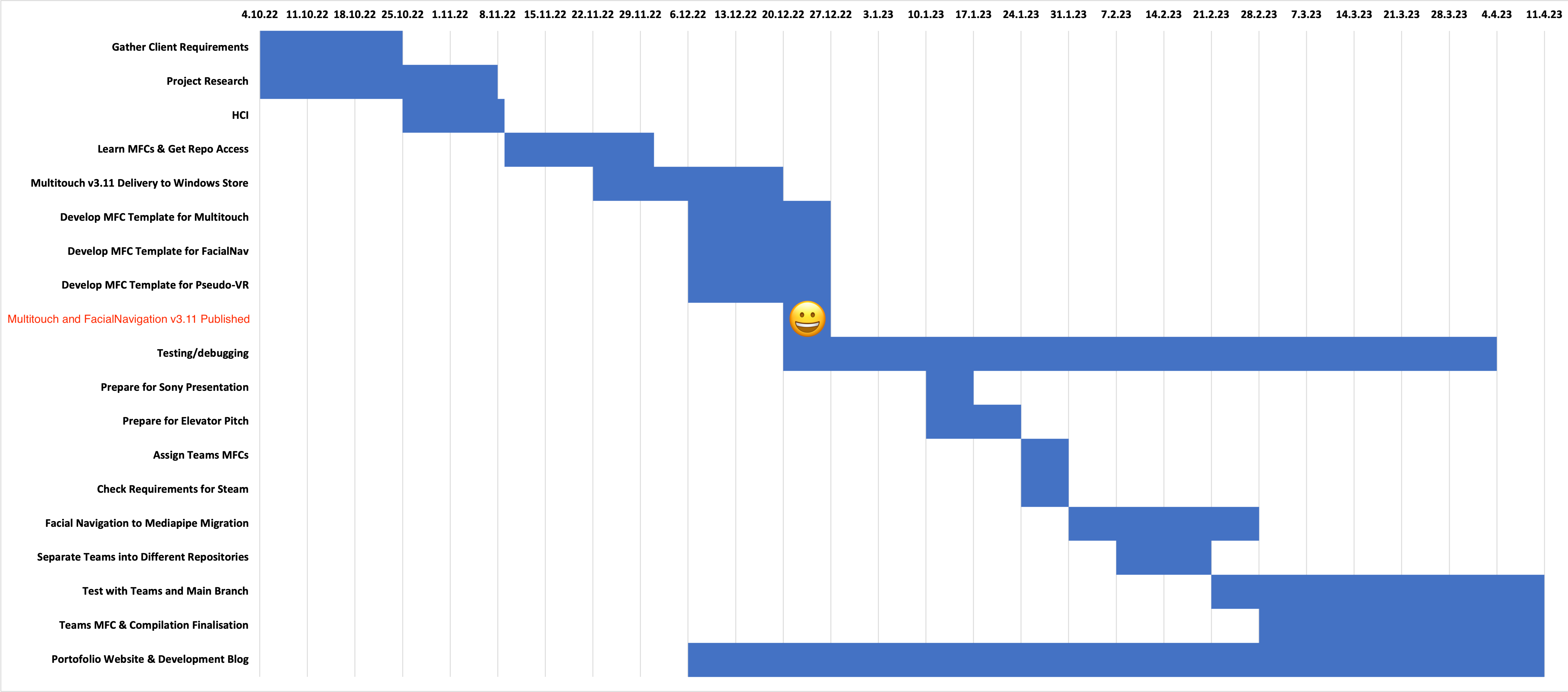

In light of this, MotionInput aims to provide a touchless control system that utilises gestures to interact with a computer. By using the webcam instead of relying on a keyboard and mouse, it opens up control to the entire human body rather than just the hands. Furthermore, integrated speech commands allow for control without any movement of the body, and intuitive GUIs let users adapt MotionInput to whichever method of use works best for them.

The potential impact is vast: people who cannot use their arms can use their legs or their face as controls, and users can give commands by speech or navigate their computers with a wave of the hand. Beyond this, version 3.2 brings many advancements, from Custom Gesture recording for a Pseudo-VR gaming experience to Stereoscopic Image Navigation for medical use. These are just a few of the software's many applications, with more to come.