Technologies

Python

Python stands out for its elegant syntax that facilitates clear programming on both small and large scales. It's widely adopted in various fields, from web development to data science, owing to its extensive standard library and vibrant community support.

MediaPipe

Developed by Google, MediaPipe offers a robust framework that enables developers to craft complex pipelines for processing multimedia content, integrating capabilities like face detection and augmented reality.

OpenVINO

Intel's OpenVINO toolkit accelerates the deployment of deep learning models with a focus on edge computing, allowing for efficient inference on Intel hardware. It provides a comprehensive set of tools and pre-optimized kernels to enhance performance on vision-based tasks.

Vosk

Vosk distinguishes itself as a compact yet powerful open-source speech recognition toolkit that works offline. It supports over 20 languages, making it adaptable to a wide range of applications, from voice-controlled assistants to transcription services.

OpenCV

OpenCV is a foundational library in the field of computer vision, offering an extensive collection of tools for image and video analysis, including real-time object detection and image processing.

Migration and Feature Enhancements

Our team was tasked with migrating features from MotionInput 3.2 to 3.4 and implementing new features that would benefit developers and, ultimately, help disabled users. This was an extensive undertaking that occupied us throughout the first term.

Preparation Phase

In addition to our four-member team, the migration effort was a joint collaboration primarily involving the Calibration Team, represented by Suhas Hariharan, and the AX-CS Frontend Team, represented by Vayk Mathrani. To effectively manage such a large group, we established a custom Notion page to allocate and track tasks.

In the first month, we held numerous discussions with our client, teaching assistants, and past contributors to MotionInput. These conversations gave us valuable insight into the functionality of versions 3.0 and 3.2 and the enhancements introduced in version 3.4. We then shared these insights with the wider migration team in a series of detailed sessions that helped them understand the new repository. To support the transition, we also wrote a detailed migration manual, available here:

Planning Stage

Once all members had familiarized themselves with the MotionInput codebase, we held a number of discussions about which features we should focus on and which were most needed. Because there were dozens of candidate features, we needed a framework to assess their importance objectively. We therefore used a ranking system that scored each feature on its flexibility of use, migration difficulty, and future potential. This produced the following list of features and functionalities, all of which we successfully implemented:

| Poses Migrated | Modes Migrated | Games Tested |
|---|---|---|
| zoomevent.py, Samurai_swipe_event.py, Gun_move_event.py, Mr_swipe_event.py, Nose_tracking_event.py, Nose_scroll_event.py, Forcefield_event.py, Spiderman_thwip.py, Pitch_click.py, Head_trigger.py, Head_biometrics.py, Head_calculator.py, Head_gesture_classifier.py, Head_landmark_detector.py, Head_module.py, Head_position.py, Head_transformation.py, head.py, Landmark_frame.py, Nosebox_display.py, Face_display.py, Display_element.py, Circle_trigger.py, Body_points.py, Sound_pose.py, Mr_swipe.py, Brick_ball.py, Gun_move.py | Zoomevent.json, Samurai_swipe_event.json, Gun_move_event.json, Head.json, Mr_swipe_event.json, Nose_tracking_event.json, Force_field_event.json, Nose_scroll_event.json, Spiderman_thwip.json, Pitch_click.json, Head_trigger.json, Nosebox_display.json, Face_display.json, Display_element.json, Circle_trigger.json, Body_points.json, Sound_pose.json, Mr_swipe.json, Brick_ball.json, Gun_move.json | Dino Run, Traffic Rush, Dinosaur Game, Snake, Cool Moving Bounty Truck, Crazy Car, Block vs Ball, Head Controller, Four in a row, Blumgi slime, Blumgi Dragon, Penalty Shooters 2, Rocket League |

Migration Stage

Once the features were finalized, we began integrating them into the new version of MotionInput. To keep the migration smooth and efficient, we adopted an agile methodology and organized our work into weekly sprints. Each sprint began in our Tuesday lab session, where we assigned specific functionality to team members. Alongside this structure, we met frequently throughout the week to maintain communication and productivity. The following is a video from one such session:

For a streamlined development process, each feature was migrated in its own dedicated branch, created by the respective assignee. This strategy not only facilitated focused development and isolated testing but also simplified the review process. Once a feature was fully developed and rigorously tested, the assignee submitted a pull request. This request was then reviewed by another team member before being merged into the main project. Through this iterative and collaborative approach, we were able to monitor our progress effectively and continuously refine the newly added features.

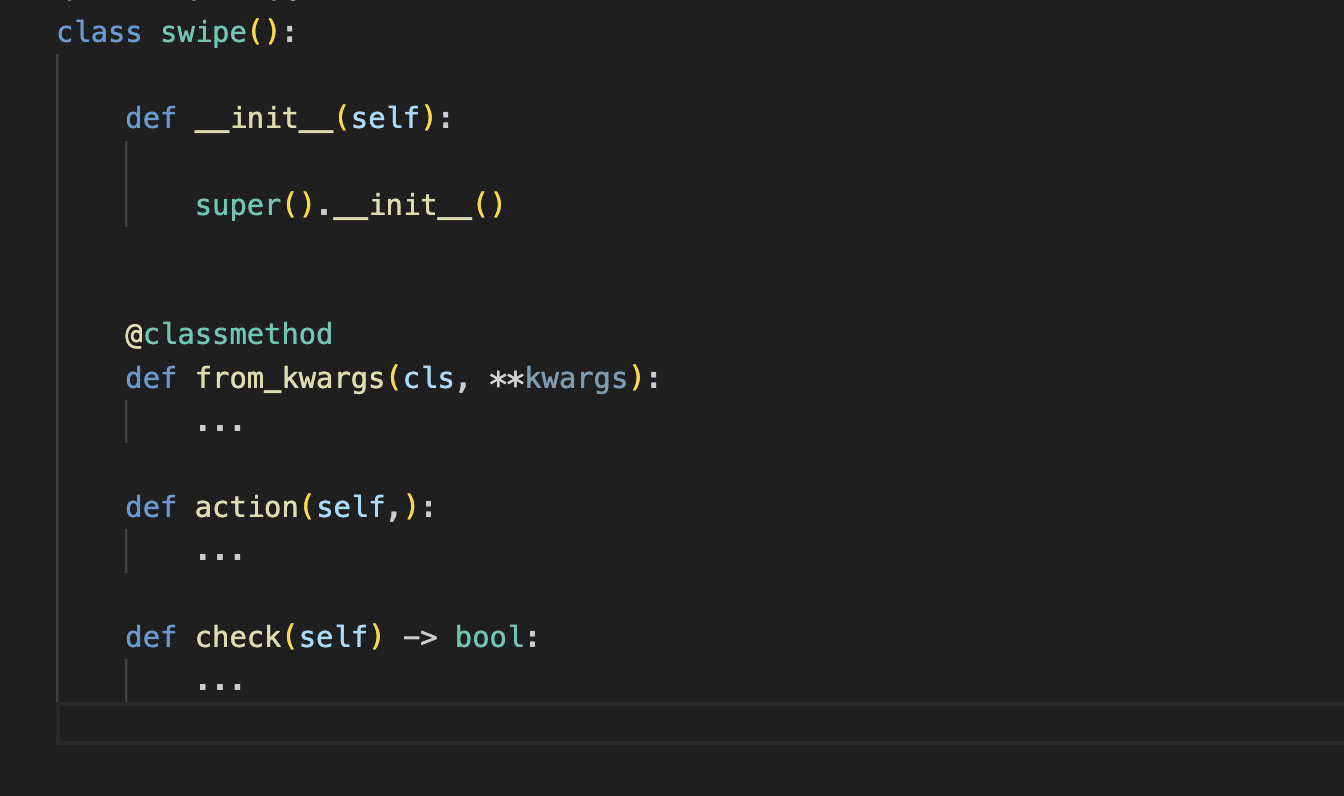

Key Feature: Swipe Feature Migration

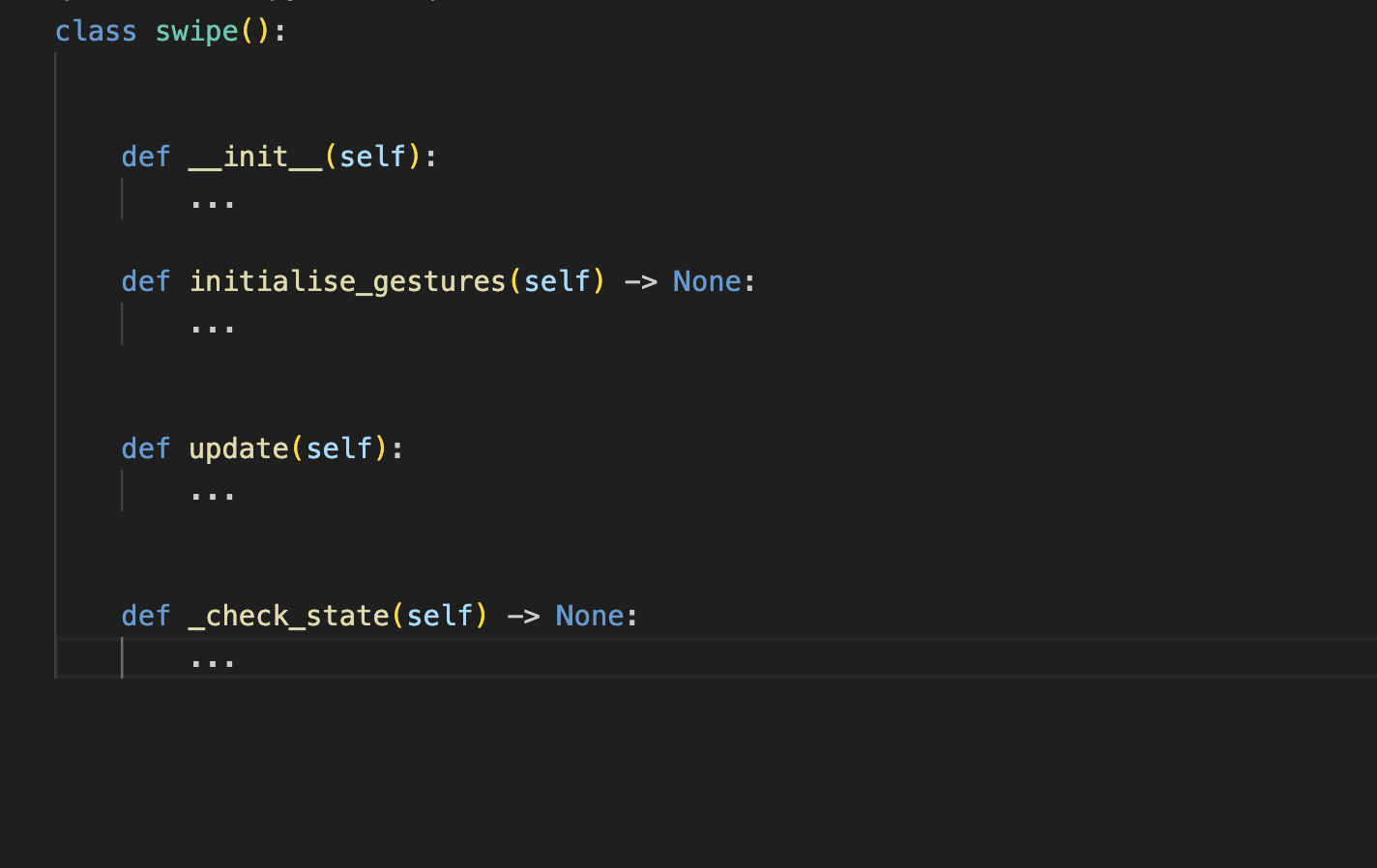

This case study outlines the migration of a swipe gesture feature, which we focused on in response to feedback from our client. As the client requested, we omit specific code details and instead focus on the structural approach we took to improve the feature's modularity and maintainability.

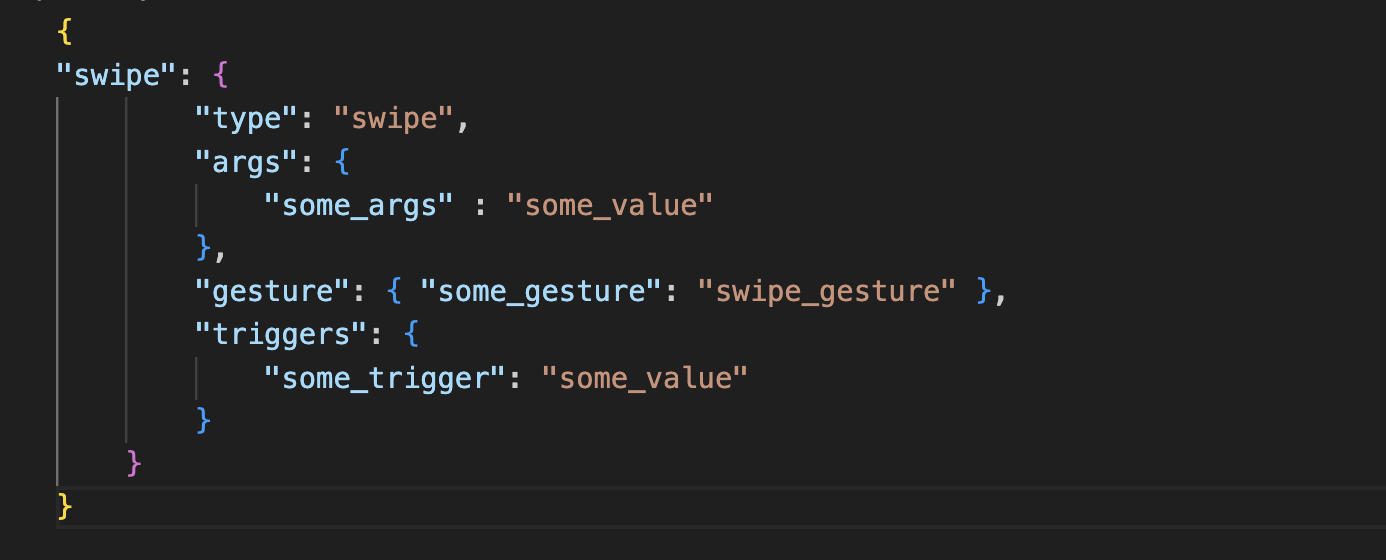

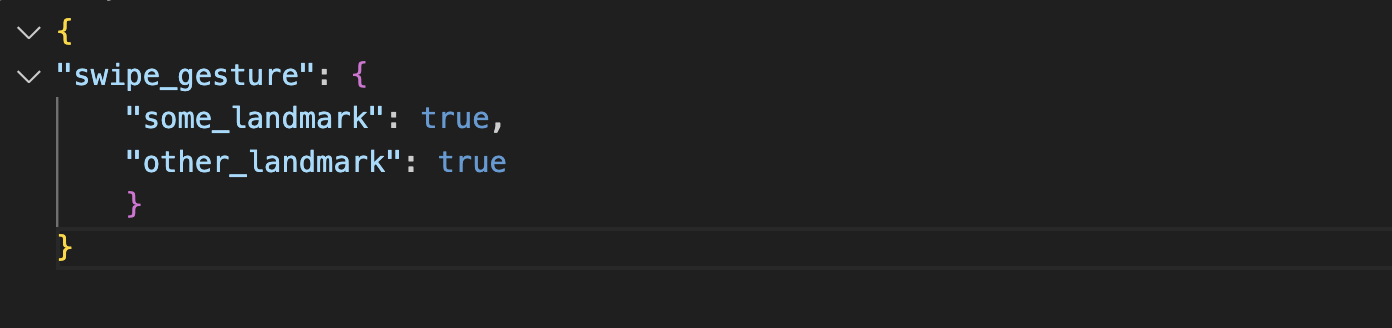

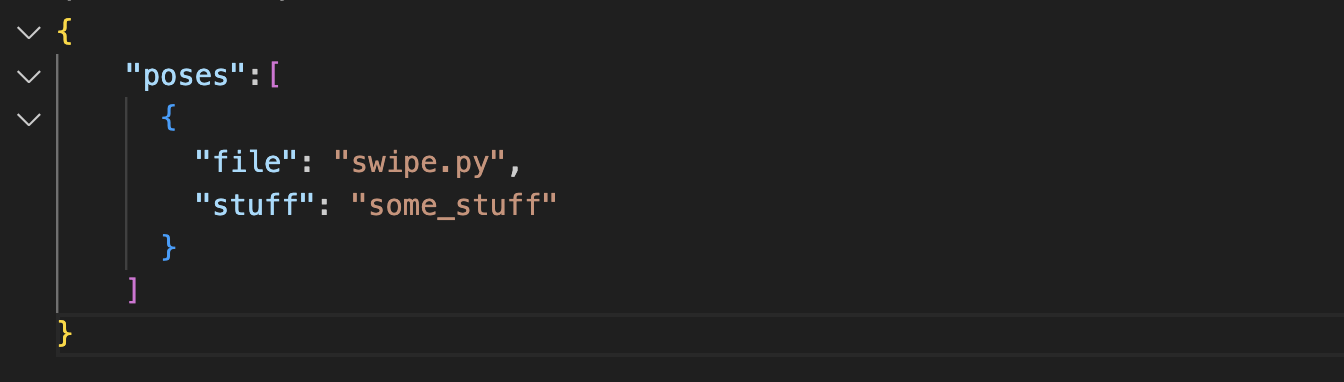

In MotionInput 3.2, the swipe gesture is implemented as a Python module containing several methods. The key method, _check_state, checks for gesture activation on every frame and calls the update method when needed. Alongside the Python logic, each gesture is defined in a JSON file that specifies its name and the actions it can trigger. These gestures are then grouped into a collection, forming a mode that users can select and interact with. Although functional, this architecture is complex and lacks modularity, with its components spread across multiple files. Our migration strategy aimed to address these issues, streamlining the feature for better flexibility and integration.

With the transition to MotionInput 3.4, the swipe gesture has been refined, as shown below. It is now encapsulated in a Python class with a more modular design, including methods for initialization and action handling.

A key part of this update is the from_kwargs method, which creates gesture instances dynamically from keyword arguments and makes the design more adaptable. This requires compiling a list of these keywords based on previously implemented features, and ensuring that the new feature is modular enough for future users and developers.

To keep the design clear and preserve the system's integrity, the check and action methods were introduced, replacing the earlier _check_state and update methods respectively. We then implemented these methods to provide the functionality we required, adding auxiliary methods to help them process incoming frames.
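The sketch below is not MotionInput's code; it is a hypothetical, self-contained class that illustrates the same split between from_kwargs, a per-frame check, and an action handler. The parameter names (min_distance, max_frames, on_swipe) and the assumption that each frame supplies a normalised hand x-coordinate between 0 and 1 are our own choices for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class SwipeGesture:
    """Hypothetical swipe gesture with a from_kwargs / check / action layout."""

    name: str
    min_distance: float = 0.25  # fraction of frame width the hand must travel
    max_frames: int = 10        # window of frames in which the travel must happen
    on_swipe: Callable[[str], None] | None = None
    _history: list[float] = field(default_factory=list, repr=False)

    @classmethod
    def from_kwargs(cls, **kwargs: Any) -> SwipeGesture:
        # Keep only the keywords this gesture understands, so one shared
        # configuration dictionary can be passed to several gesture types.
        known = {"name", "min_distance", "max_frames", "on_swipe"}
        return cls(**{k: v for k, v in kwargs.items() if k in known})

    def _push(self, x: float) -> None:
        # Auxiliary helper: maintain a sliding window of recent x positions.
        self._history.append(x)
        if len(self._history) > self.max_frames:
            self._history.pop(0)

    def check(self, x: float) -> str | None:
        """Called once per frame; returns "left"/"right" when a swipe is detected."""
        self._push(x)
        travel = self._history[-1] - self._history[0]
        if abs(travel) >= self.min_distance:
            self._history.clear()  # avoid re-triggering on the same motion
            return "right" if travel > 0 else "left"
        return None

    def action(self, direction: str) -> None:
        # Dispatch the detected swipe; a real mode would map this to an input event.
        if self.on_swipe is not None:
            self.on_swipe(direction)


if __name__ == "__main__":
    events: list[str] = []
    swipe = SwipeGesture.from_kwargs(name="swipe", min_distance=0.3, on_swipe=events.append)
    for x in [0.1, 0.2, 0.35, 0.45]:  # simulated hand positions, one per frame
        direction = swipe.check(x)
        if direction:
            swipe.action(direction)
    print(events)
```

Filtering unknown keywords in from_kwargs is one way to let a single configuration dictionary drive several gesture classes without each one rejecting the others' settings.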

Before the refined gesture is fully integrated into MI 3.4, it goes through a thorough testing phase. This involves changing the active game mode in the "config.json" file to assess the gesture's performance and confirm that it behaves as expected. After successful testing, we complete the integration by documenting each method, clearly explaining its functionality and purpose so that users can adopt the feature easily.
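Switching the active mode for a test run can be scripted so the original setting is easy to restore afterwards. This is a minimal sketch: the assumption that config.json stores the active mode under a top-level key called "mode" is ours, and the real file may use a different key or nesting.

```python
from __future__ import annotations

import json
from pathlib import Path


def set_active_mode(config_path: str | Path, mode: str, key: str = "mode") -> str | None:
    """Switch the mode recorded in config.json and return the previous value.

    The key name "mode" is an assumption; use whichever key the real
    config.json stores the active mode under.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    previous = config.get(key)
    config[key] = mode  # every other setting is written back unchanged
    path.write_text(json.dumps(config, indent=4), encoding="utf-8")
    return previous
```

A typical test run would call previous = set_active_mode("config.json", "swipe_test"), exercise the gesture, and then call set_active_mode("config.json", previous) to put the configuration back.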

MotionInput SDK Development

In the second half of the term, our team shifted its focus to helping developers integrate MotionInput into their own projects. This led to the MotionInput SDK, designed to be used across different platforms and programming languages, which makes MotionInput considerably more modular. It is the first time MotionInput has been packaged as an SDK that developers outside the original MotionInput team can use.

Conceptualization & Design Stage

To define the SDK's scope, we held extensive discussions with our client, with peers working on MotionInput and on unrelated projects, and with educators from the Richard Cloudesley School.

These conversations helped us identify the features most essential to developers and end users, particularly those with disabilities. It became clear that the SDK needed to support seamless mode switching, instant start and stop, and flexible modification of application properties, such as the view type and the language of the Vosk model. Consequently, we designed an API with the following structure and endpoints to meet these needs.
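Purely as a hypothetical illustration of the three requirements above (mode switching, start and stop, and property changes at runtime), a thin session object might run the frame loop on a background thread and guard shared state with a lock. Every class, method and property name here is an assumption and is not part of the real MotionInputAPI; the loop body is a placeholder for camera capture and gesture processing.

```python
from __future__ import annotations

import threading
from typing import Any


class MotionInputSession:
    """Hypothetical session object; not the real MotionInputAPI."""

    def __init__(self, mode: str, **properties: Any) -> None:
        self._mode = mode
        self._properties = dict(properties)  # e.g. view type, Vosk language
        self._lock = threading.Lock()
        self._stop_event = threading.Event()
        self._thread: threading.Thread | None = None
        self.frames = 0  # frames processed so far

    def start(self) -> None:
        if self.is_running:
            return  # starting twice is a no-op
        self._stop_event.clear()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def stop(self, timeout: float = 1.0) -> None:
        self._stop_event.set()  # the loop exits at its next check
        if self._thread is not None:
            self._thread.join(timeout)
            self._thread = None

    @property
    def is_running(self) -> bool:
        return self._thread is not None and self._thread.is_alive()

    @property
    def mode(self) -> str:
        with self._lock:
            return self._mode

    def change_mode(self, mode: str) -> None:
        # Takes effect on the next frame without a stop/start cycle.
        with self._lock:
            self._mode = mode

    def set_property(self, name: str, value: Any) -> None:
        with self._lock:
            self._properties[name] = value

    def get_property(self, name: str) -> Any:
        with self._lock:
            return self._properties.get(name)

    def _run(self) -> None:
        while not self._stop_event.is_set():
            with self._lock:
                # Placeholder: a real loop would read a camera frame here and
                # run the gestures of self._mode on it.
                self.frames += 1
            self._stop_event.wait(0.01)  # stand-in for per-frame work
```

Keeping the loop on its own thread is what lets start and stop return immediately, while the lock lets a caller change the mode or a property mid-session.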

MotionInput Python Package

Our first step was to develop a Python package that allows MotionInput to be used on various devices from any Python-based application. Because of the confidentiality and data protection requirements set by our client, we could not simply turn the MotionInput repository into a package.

To address this, we used Nuitka to compile the repository into a Python-compatible DLL (a compiled extension module), which takes the form of a .pyd file on Windows or a .so file on Unix-based systems, with only the MotionInputAPI class made public. This makes the core functionality accessible without exposing sensitive code. The compiled file can then be placed in a Python package, so developers can import it from anywhere on their system without access to the source code.
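The compilation step can be driven from Python. This is a minimal sketch that only builds the command: --module and --output-dir are standard Nuitka options, while the source file name in the example is hypothetical. Passing the list to subprocess.run(cmd, check=True) would perform the build, provided Nuitka and a C compiler are installed.

```python
from __future__ import annotations

import sys
from importlib.machinery import EXTENSION_SUFFIXES


def nuitka_module_command(source: str, output_dir: str = "build") -> list[str]:
    """Return a command that compiles one .py file into an extension module."""
    return [
        sys.executable, "-m", "nuitka",
        "--module",                    # produce an importable extension module
        f"--output-dir={output_dir}",  # where the .pyd/.so file is written
        source,
    ]


if __name__ == "__main__":
    # "motioninput_api.py" is a hypothetical file name.
    print(" ".join(nuitka_module_command("motioninput_api.py")))
    # Suffixes this interpreter accepts for compiled modules:
    # ".pyd" variants on Windows, ".so" variants on Unix-based systems.
    print(EXTENSION_SUFFIXES)
```

Printing EXTENSION_SUFFIXES is a quick way to confirm which file extension the interpreter expects the compiled module to have on the current platform.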

To streamline installation, we also wrote a setup.py script. Running pip install . in the package directory uses this script to install MotionInput's dependencies, compile the source code into the Python DLL, and install the package into the user's Python environment.

With these steps in place, developers can import MotionInputAPI in any Python project, provided a data folder is present in the project directory. The following video demonstrates the installation process and our key feature: MotionInput integrated into a standalone Python script in a separate directory:
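Because the package relies on a data folder in the project directory, a client script can check for it before importing, so that a missing folder produces a clear error. This is a minimal sketch: the package name "motioninput" is a hypothetical placeholder for whatever name the installed package actually uses.

```python
from __future__ import annotations

import importlib
from pathlib import Path


def load_motioninput_api(project_dir: str | Path = ".", module_name: str = "motioninput"):
    """Return the MotionInputAPI class once the data folder has been found.

    "motioninput" is a hypothetical package name; substitute the real one.
    """
    data_dir = Path(project_dir) / "data"
    if not data_dir.is_dir():
        raise FileNotFoundError(f"MotionInput expects a data folder at {data_dir.resolve()}")
    module = importlib.import_module(module_name)  # the compiled .pyd/.so module
    return getattr(module, "MotionInputAPI")
```

Failing early with the expected path is friendlier than an error raised deep inside the compiled module, whose source the developer cannot inspect.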

MotionInput Dynamic Link Library

After successfully developing and testing the Python package, our team moved on to a new approach: building a Dynamic Link Library (DLL). This removes the need for an external Python interpreter and allows MotionInput to be integrated into other programming languages. For the first time in the application's history, MotionInput offers cross-language compatibility.

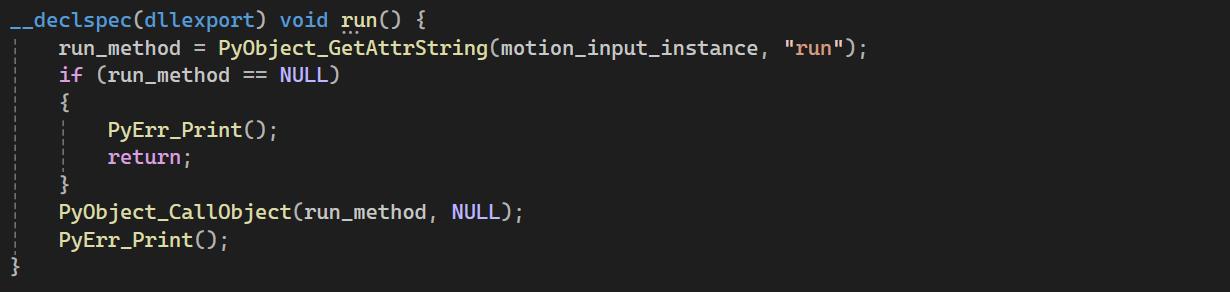

The DLL is a C-based wrapper around the Python package. It requires access to the Python DLL compiled in the previous stage, a data folder, and a local Python interpreter inside the data folder. These components cannot currently be embedded in the DLL itself, so they must be distributed alongside it.

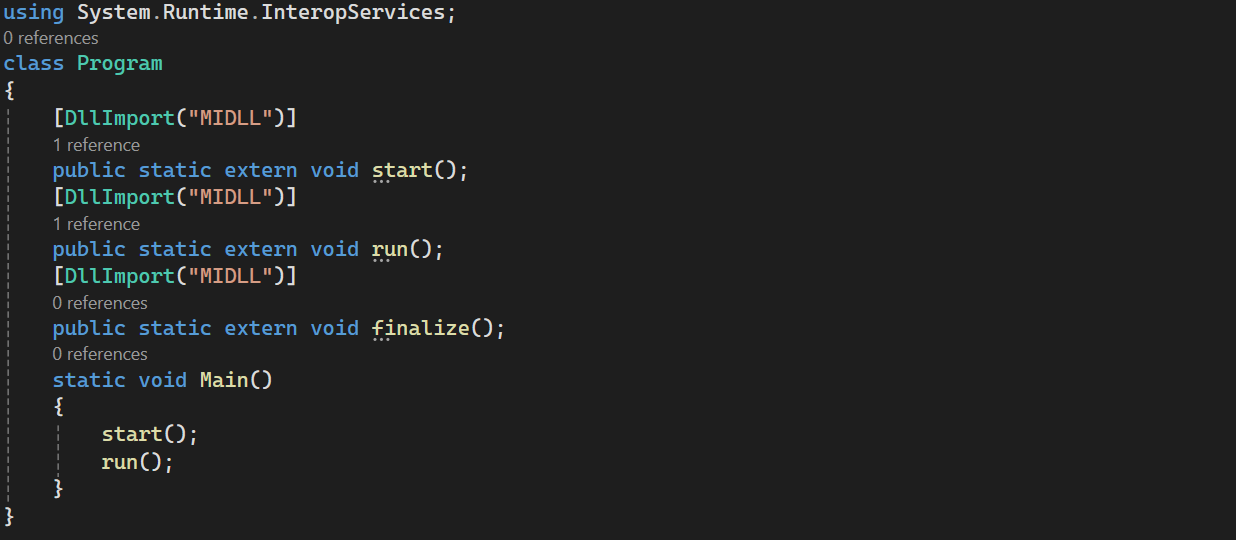

The wrapper starts by configuring the Python interpreter with the required environment variables and then instantiates the MotionInputAPI object from the module. It exposes a set of DLL endpoints, each corresponding to a property or method of the MotionInputAPI object, so every method exposed by the Python DLL needs a matching function in the C wrapper. For instance, the function that starts MotionInput is shown in Figure 8.

The DLL can then be used from any programming language that can call into a C library, letting developers incorporate only the functionality they need. This adds a further layer of abstraction and more flexibility in application development. Figure 9 shows a basic example of starting MotionInput, and an accompanying video demonstrates a feature our client particularly requested: changing MotionInput's configuration dynamically.
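To illustrate what consuming such a DLL looks like from a host language, the sketch below uses Python's ctypes, chosen only to keep one language throughout; the same pattern applies to P/Invoke in C# or direct linking in C++. The exported function names (start_motioninput, stop_motioninput, set_mode) and their signatures are hypothetical and must be replaced with whatever the real wrapper exports.

```python
from __future__ import annotations

import ctypes
from pathlib import Path


class MotionInputDLL:
    """Minimal ctypes binding to a MotionInput-style C wrapper (hypothetical exports)."""

    def __init__(self, dll_path: str | Path) -> None:
        # The Python DLL, data folder and bundled interpreter must be
        # distributed alongside the wrapper DLL for it to initialise.
        self._lib = ctypes.CDLL(str(dll_path))
        # Declare argument and return types so ctypes converts values correctly.
        self._lib.start_motioninput.restype = ctypes.c_int
        self._lib.stop_motioninput.restype = ctypes.c_int
        self._lib.set_mode.argtypes = [ctypes.c_char_p]
        self._lib.set_mode.restype = ctypes.c_int

    def start(self) -> int:
        return self._lib.start_motioninput()

    def stop(self) -> int:
        return self._lib.stop_motioninput()

    def set_mode(self, mode: str) -> int:
        # C strings are passed as UTF-8 encoded bytes.
        return self._lib.set_mode(mode.encode("utf-8"))
```

Wrapping each endpoint in a small method mirrors the one-to-one mapping between the C wrapper and the MotionInputAPI object, so a host application only binds the endpoints it actually needs.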