Testing strategy

Following our conversation with the client and teachers from Richard Cloudesley School, we implemented Compatibility Testing, Performance Testing, and User Acceptance Testing.

Compatibility Testing

Compatibility Testing is a checkpoint to ensure that the application behaves as intended across a variety of environments. It involves evaluating the software on different devices, operating systems, browsers, and network environments, with the goal of uncovering compatibility issues that could hinder the user experience or functionality. By simulating real-world usage, potential discrepancies can be identified early, helping to deliver a consistent and inclusive experience across all supported platforms.

Performance Testing

Performance Testing assesses the responsiveness, stability, scalability, and speed of a software application under varying workloads. It is designed to push the application to its limits and identify bottlenecks or performance issues that could compromise the user experience or operational efficiency. Methodical tests such as load testing, stress testing, and spike testing provide insight into the software's behavior under peak load, its ability to recover from failures, and its scalability.

User Acceptance Testing

User Acceptance Testing is the final stage before a software product reaches its audience. It validates the software against its business requirements and user needs to ensure it delivers the intended value. Usually conducted by end users or clients, it exercises real-world usage scenarios to assess the software's functionality, usability, and performance in the hands of its intended users.

Performance Testing

For the performance testing, we decided to test the three most popular modes within MotionInput, which are also the most complex ones. This allowed us to test the limits of what is currently feasible with MotionInput in settings that represent its use cases well. In summary, we found no degradation in speed when using the MotionInput SDK or DLL compared to the native Python code base.

Inking Mode

The Inking mode simulates drawing on a digital canvas. The user pinches their index finger and thumb together and then moves their hand in a drawing motion.

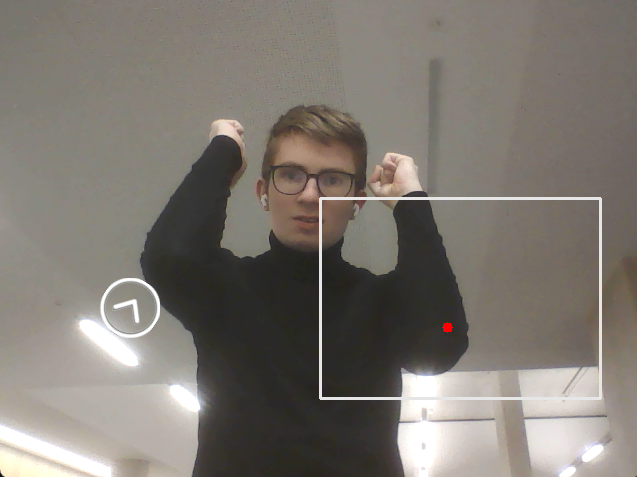
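To make the pinch check concrete, the following is a minimal sketch rather than MotionInput's own code. It assumes the hand tracker returns 21 landmarks as normalised (x, y) pairs numbered as in MediaPipe Hands, where index 4 is the thumb tip and index 8 the index fingertip; the `is_pinching` function and the 0.05 threshold are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Assumed MediaPipe Hands landmark indices (21 landmarks per hand).
THUMB_TIP = 4
INDEX_FINGER_TIP = 8

# Hypothetical pinch threshold in normalised image coordinates (0..1).
PINCH_THRESHOLD = 0.05


def is_pinching(landmarks: Sequence[Point], threshold: float = PINCH_THRESHOLD) -> bool:
    """Return True when the thumb tip and index fingertip are closer than `threshold`."""
    distance = math.dist(landmarks[THUMB_TIP], landmarks[INDEX_FINGER_TIP])
    return distance < threshold


# Usage: a fake hand where the two fingertips are about 0.022 apart.
hand = [(0.5, 0.5)] * 21
hand[THUMB_TIP] = (0.40, 0.60)
hand[INDEX_FINGER_TIP] = (0.42, 0.61)
print(is_pinching(hand))  # True
```

A fixed threshold is the simplest choice; a real pose would likely scale it by the apparent hand size so that pinches are detected at different distances from the camera.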

Minecraft Elbows Mode

The Minecraft Elbows mode uses a pose to control the character in the game. The LEFT elbow, positioned in the box, controls the direction in which the character moves. The RIGHT elbow, with the arrow in the circle, controls the direction in which the character is looking.
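The elbow controls can be sketched as two small geometric mappings. This is an illustration under stated assumptions, not the mode's actual logic: it assumes normalised image coordinates with y growing downwards, and the `Box` type, the `movement_direction` and `look_angle` functions, the dead-zone size and the direction labels are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Box:
    """Hypothetical on-screen box, in normalised image coordinates."""
    cx: float
    cy: float
    half_width: float
    half_height: float


def movement_direction(left_elbow: Point, box: Box, dead_zone: float = 0.25) -> Optional[str]:
    """Map the left elbow's offset from the box centre to a movement direction.

    Offsets are normalised by the box size; inside the dead zone the character
    stands still. Image y grows downwards, so a negative dy means "forward".
    """
    dx = (left_elbow[0] - box.cx) / box.half_width
    dy = (left_elbow[1] - box.cy) / box.half_height
    if max(abs(dx), abs(dy)) < dead_zone:
        return None
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "backward" if dy > 0 else "forward"


def look_angle(right_elbow: Point, circle_centre: Point) -> float:
    """Angle in degrees (0 = right, 90 = up) of the right elbow around the circle centre."""
    dx = right_elbow[0] - circle_centre[0]
    dy = circle_centre[1] - right_elbow[1]  # flip y so that "up" is positive
    return math.degrees(math.atan2(dy, dx))


box = Box(cx=0.3, cy=0.5, half_width=0.1, half_height=0.1)
print(movement_direction((0.30, 0.42), box))  # forward
print(movement_direction((0.31, 0.50), box))  # None (inside the dead zone)
print(round(look_angle((0.8, 0.4), (0.7, 0.5))))  # 45
```

The dead zone keeps small, involuntary elbow movements from making the character drift.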

Rocket League Mode

The Rocket League mode uses a pose to control the car in the game. Each of the four circles on the screen triggers a different input:

Top Right: hold keyboard shift

Top Left: right mouse click

Bottom Right: click keyboard space

Bottom Left: left mouse click
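One way to picture the four circles is as hit tests: a tracked point inside a circle triggers that circle's input. The sketch below assumes normalised image coordinates; the circle positions and radius, the `triggered_actions` function and the choice of which body points to test are hypothetical, and it only returns the action descriptions from the list above rather than sending real key or mouse events.

```python
import math
from typing import Dict, List, NamedTuple, Tuple

Point = Tuple[float, float]


class Circle(NamedTuple):
    centre: Point
    radius: float
    action: str  # description of the bound input, taken from the list above


# Hypothetical layout in normalised image coordinates (y grows downwards).
CIRCLES: Dict[str, Circle] = {
    "top_right": Circle((0.85, 0.15), 0.08, "hold keyboard shift"),
    "top_left": Circle((0.15, 0.15), 0.08, "right mouse click"),
    "bottom_right": Circle((0.85, 0.85), 0.08, "click keyboard space"),
    "bottom_left": Circle((0.15, 0.85), 0.08, "left mouse click"),
}


def triggered_actions(points: List[Point]) -> List[str]:
    """Return the action of every circle that contains at least one tracked point."""
    actions = []
    for circle in CIRCLES.values():
        if any(math.dist(p, circle.centre) <= circle.radius for p in points):
            actions.append(circle.action)
    return actions


# Usage: one point inside the top-right circle, one in the middle of the screen.
print(triggered_actions([(0.86, 0.14), (0.5, 0.5)]))  # ['hold keyboard shift']
```

Note that "hold" and "click" behave differently in practice: a hold would be released when the point leaves the circle, while a click fires once on entry. The sketch only reports which circles are occupied.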

| Poses | Native | Python Package | DLL |
|---|---|---|---|
| Inking (average FPS) | 12 | 14 | 14 |
| Minecraft Elbows (average FPS) | 15 | 18 | 15 |
| Rocket League (average FPS) | 15 | 18 | 15 |

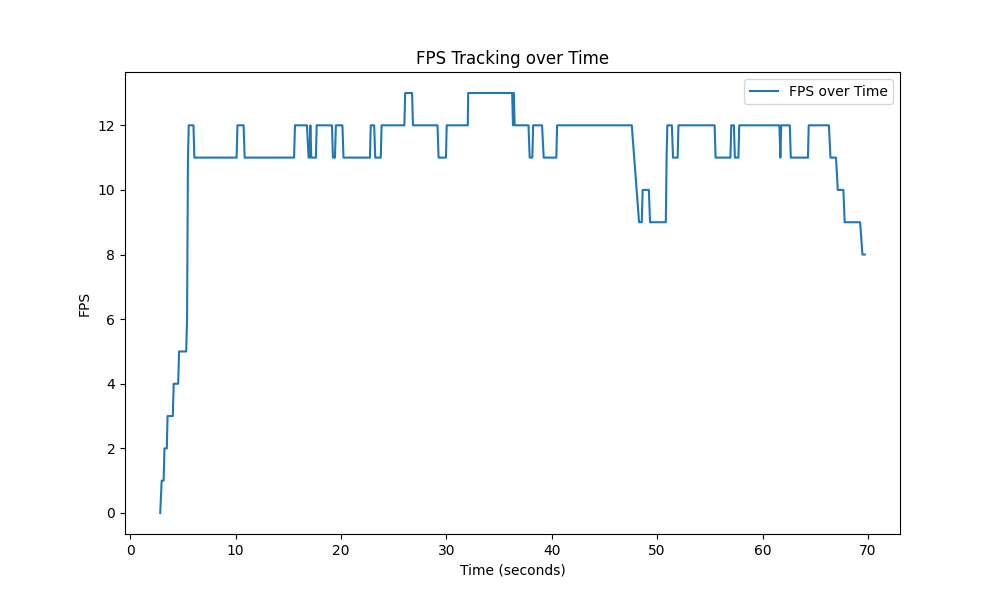

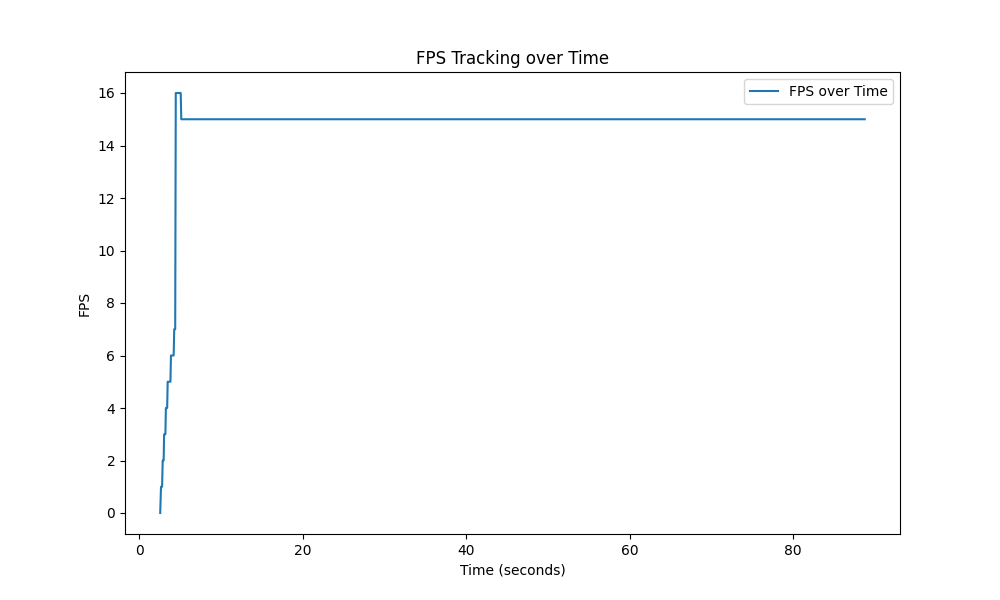

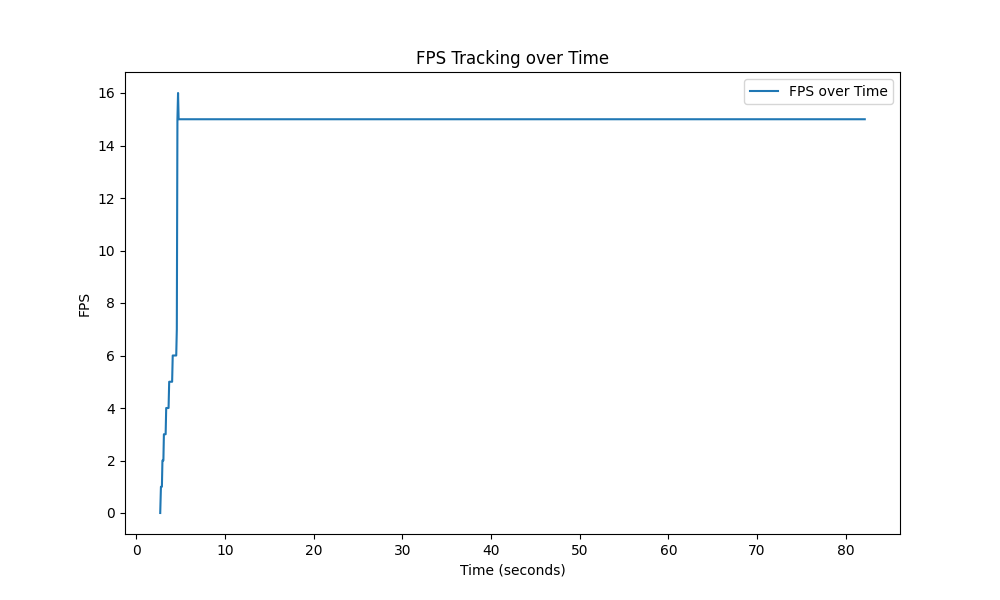

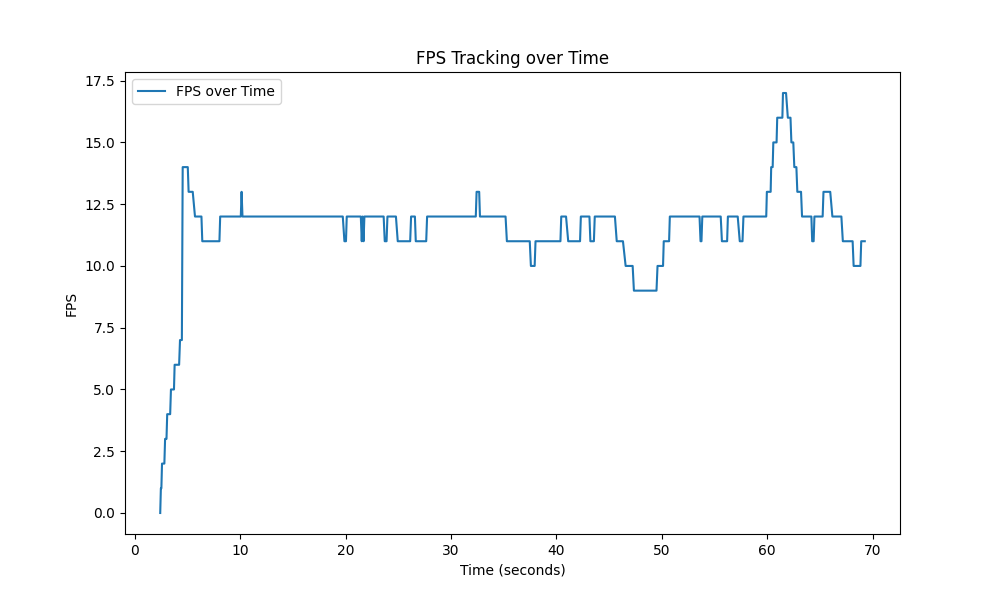

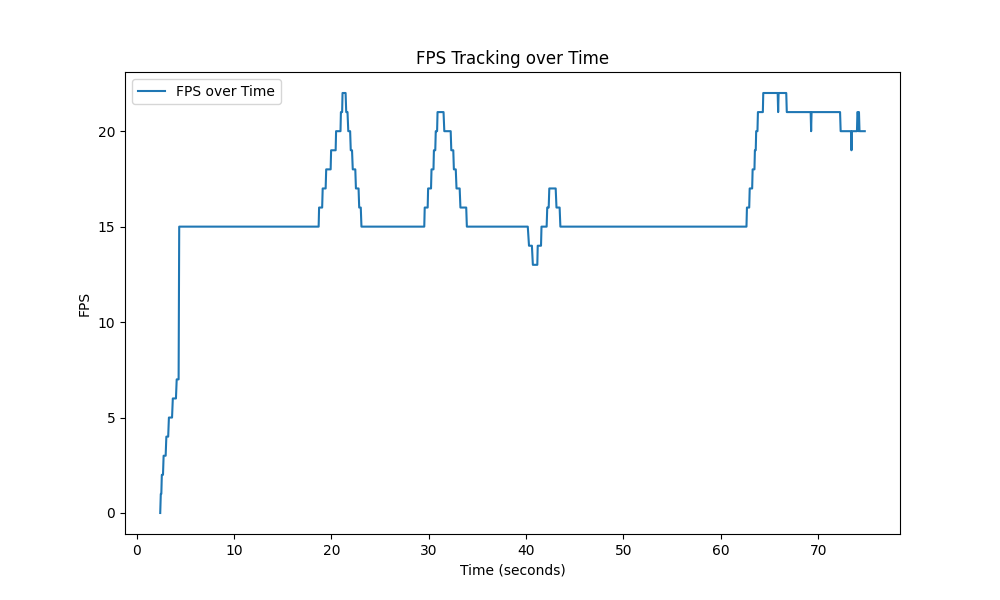

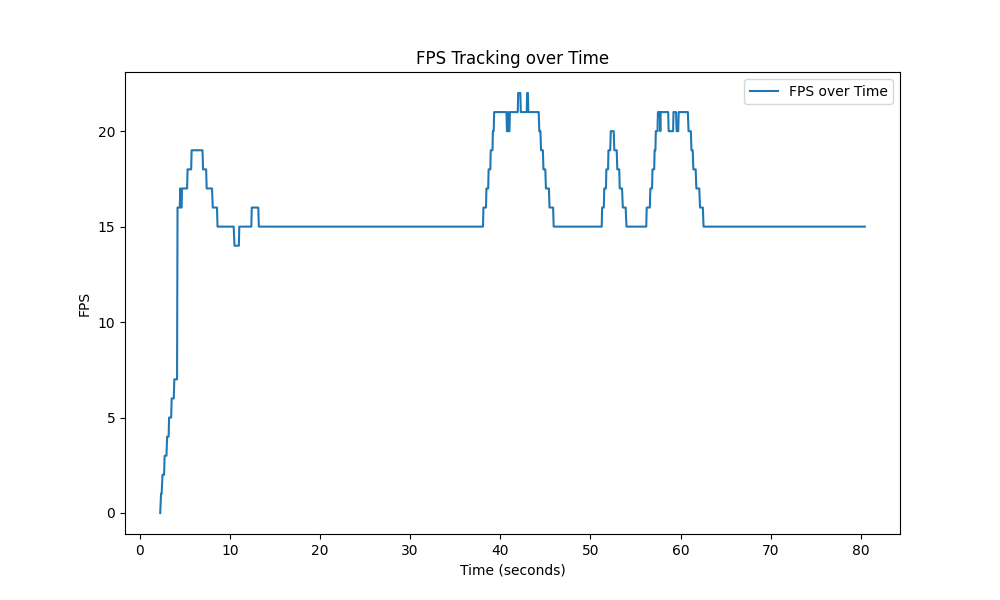

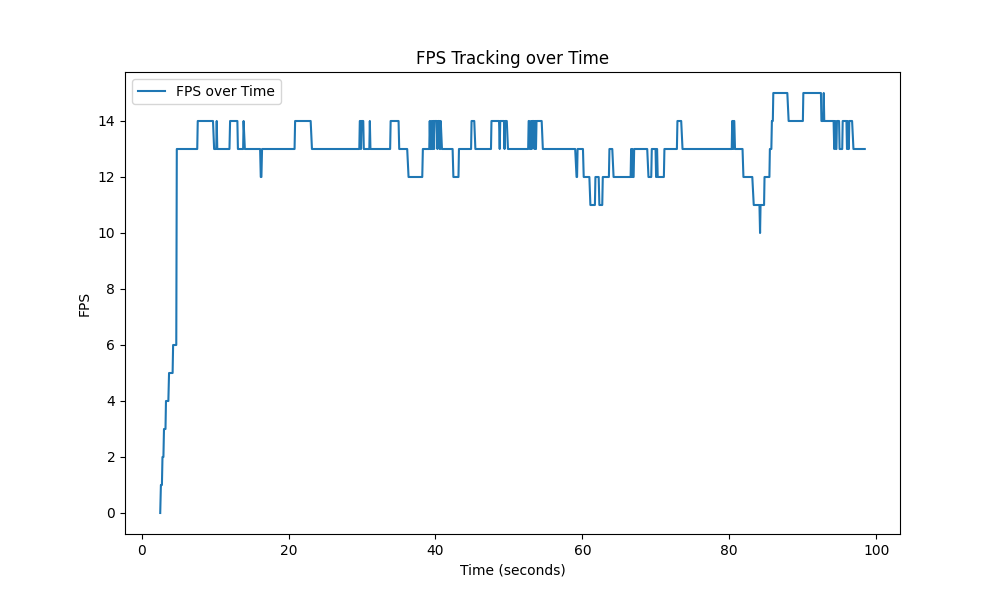

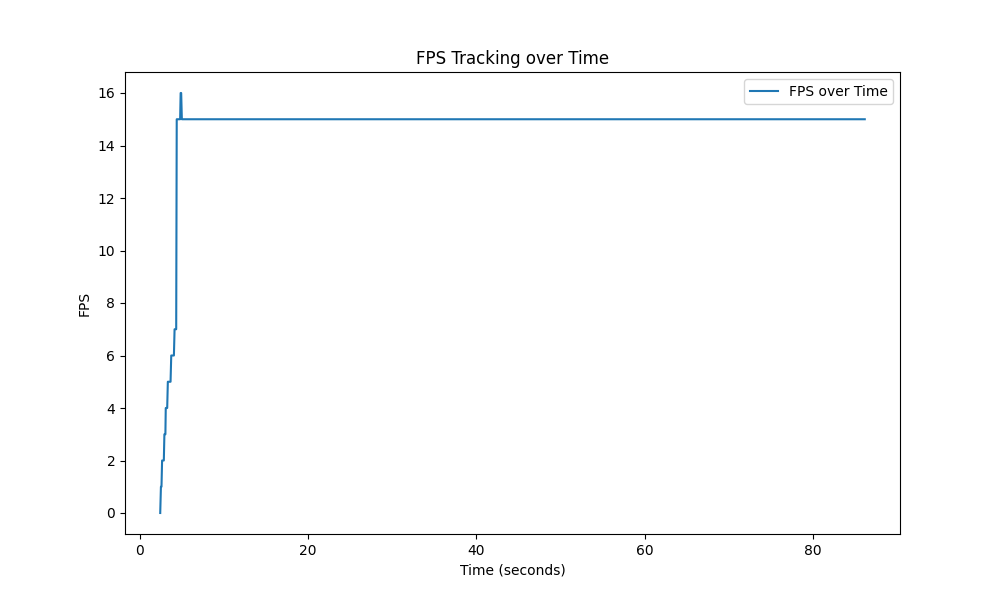

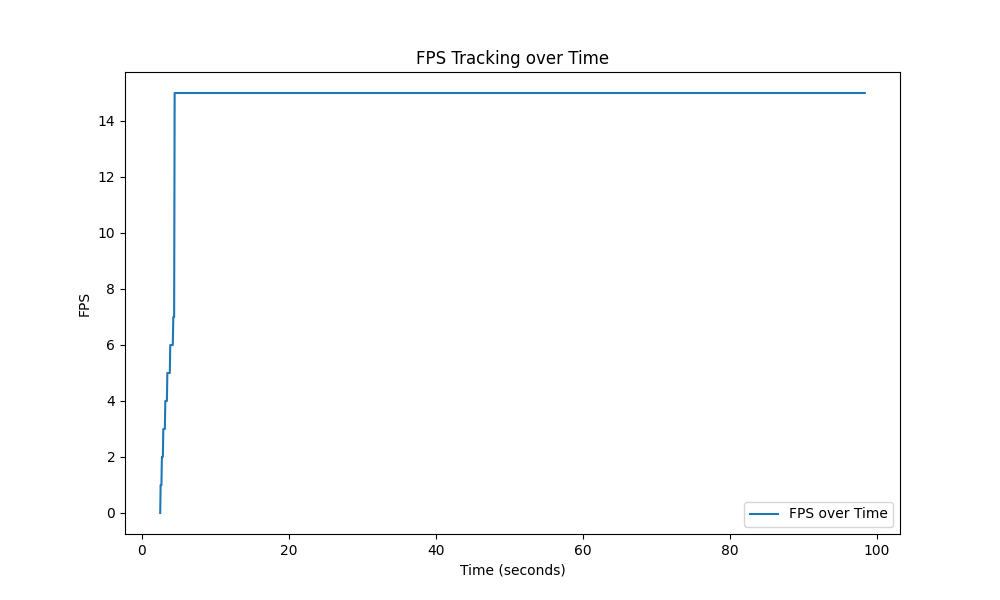

The graphs in this section show the performance of the three poses in three different states: Native, Python Package, and DLL. Each graph plots frames per second (FPS) over time. All three states were tested on a Windows laptop with an NVIDIA RTX 3060 graphics card.

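Finding 1: Poses had equal or higher FPS when run as a Python Package or DLL

On average, every pose ran at least as fast as a Python Package or DLL as it did natively. The Python Package was faster for all three poses, while the DLL was faster for Inking and matched the native FPS for Minecraft Elbows and Rocket League. This suggests that packaging MotionInput as a Python Package or DLL does not introduce a performance penalty.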
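The relative differences behind Finding 1 can be computed directly from the averages in the results table. The sketch below copies those numbers; the dictionary layout and the function names are illustrative only.

```python
from typing import Dict

# Average FPS values copied from the results table.
AVERAGE_FPS: Dict[str, Dict[str, float]] = {
    "Inking": {"Native": 12, "Python Package": 14, "DLL": 14},
    "Minecraft Elbows": {"Native": 15, "Python Package": 18, "DLL": 15},
    "Rocket League": {"Native": 15, "Python Package": 18, "DLL": 15},
}


def relative_change(baseline: float, value: float) -> float:
    """Percentage change of `value` relative to `baseline`."""
    return (value - baseline) / baseline * 100.0


def summarise(results: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, float]]:
    """For each pose, the % change of every non-native state versus the native run."""
    summary = {}
    for pose, fps in results.items():
        native = fps["Native"]
        summary[pose] = {
            state: round(relative_change(native, value), 1)
            for state, value in fps.items()
            if state != "Native"
        }
    return summary


for pose, changes in summarise(AVERAGE_FPS).items():
    print(pose, changes)
```

Running it reports +16.7% for Inking in both packaged forms, +20.0% for the Python Package in Minecraft Elbows and Rocket League, and 0.0% for the DLL in those two modes.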

Finding 2: Across all 3 states, Inking has the lowest FPS

Across all three states, Inking has the lowest FPS. This may be because its check() method must determine whether the index finger and thumb are pinched, which is a more demanding test than those of the other poses. It may also be because its action() method is more expensive, as it relies on the Pen driver.

Finding 3: FPS spikes could be due to idle periods

FPS spikes in the Rocket League and Minecraft Elbows poses, when run as Python Packages, could be due to idle periods in which the check() method returns False. During these periods the action() method is not called, so less work is done per frame and the FPS increases.
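The explanation in Finding 3 rests on the per-frame control flow: action() only runs when check() returns True, so frames where the pose is idle skip the expensive part. The sketch below models that flow with a hypothetical `Pose` protocol and a toy `SimulatedPose` whose action() simply sleeps; it illustrates the mechanism but says nothing about MotionInput's real timings, which also include camera capture and landmark inference.

```python
import time
from typing import Any, Protocol


class Pose(Protocol):
    """Hypothetical interface mirroring the check()/action() split described above."""

    def check(self, frame: Any) -> bool: ...

    def action(self, frame: Any) -> None: ...


def process_frame(pose: Pose, frame: Any) -> bool:
    """One loop iteration: action() only runs when check() recognises the pose."""
    if pose.check(frame):
        pose.action(frame)
        return True
    return False


class SimulatedPose:
    """Toy pose: check() is cheap, action() sleeps to stand in for an expensive input driver."""

    def __init__(self, active: bool, action_cost: float = 0.005) -> None:
        self.active = active
        self.action_cost = action_cost
        self.actions_run = 0

    def check(self, frame: Any) -> bool:
        return self.active

    def action(self, frame: Any) -> None:
        self.actions_run += 1
        time.sleep(self.action_cost)


def measure_fps(pose: Pose, frames: int = 20) -> float:
    """Frames processed per second over `frames` iterations of the loop."""
    start = time.perf_counter()
    for frame in range(frames):
        process_frame(pose, frame)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
    return frames / elapsed


idle_fps = measure_fps(SimulatedPose(active=False))
active_fps = measure_fps(SimulatedPose(active=True))
print(f"idle: {idle_fps:.0f} FPS, active: {active_fps:.0f} FPS")
```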

Native

Inking

The following graph illustrates the performance of the Inking pose in its native environment. As depicted, the FPS initially increases as the system stabilizes and then plateaus, indicating a steady performance over time.

Minecraft Elbows

This graph tracks the FPS for the Minecraft Elbows pose natively. The data reveals no fluctuations that could affect the stability of the gameplay.

Rocket League

Displayed here is the FPS over time for the Rocket League pose in its native setting. The FPS appears to be stable after an initial ramp-up, indicating that the pose can consistently translate movements into game controls.

Python Package

Inking

Shown next is the performance graph of the Inking pose when run as a Python package. Notice the remarkable consistency in FPS, suggesting that the Python package manages resources efficiently during the simulation of drawing actions.

Minecraft Elbows

The corresponding graph for the Minecraft Elbows pose as a Python package shows higher FPS than the native environment (an average of 18 compared with 15), suggesting more efficient execution.

Rocket League

The graph for the Rocket League pose running as a Python package showcases higher FPS rates compared to the native environment, which can be seen as an enhancement in processing efficiency.

Dynamic Link Library (DLL)

Inking

The graph for the Inking pose running as a DLL shows a similar pattern to the Python package, maintaining a consistent FPS with minor fluctuations and reflecting stable performance throughout the testing period.

Minecraft Elbows

When executed as a DLL, the pose shows a steady FPS performance with minor dips, which may be attributed to the computational demands of interpreting elbow movements for gameplay.

Rocket League

The DLL version of the Rocket League pose maintains a stable FPS with occasional spikes, potentially reflecting periods of inactivity or less intensive processing demands.

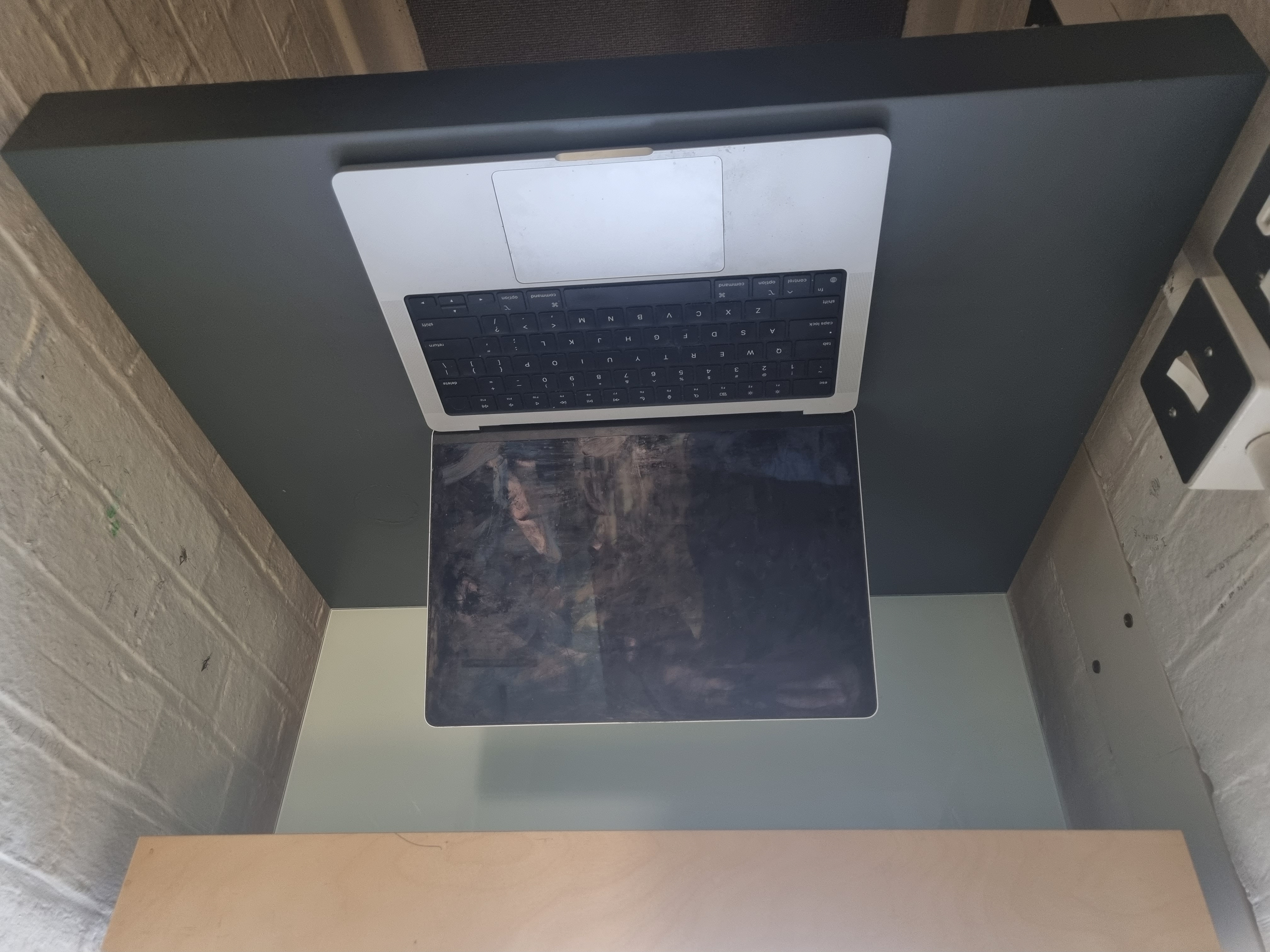

Compatibility Testing

During our compatibility testing phase, we evaluated the MotionInput features across two different platforms: an Apple Silicon M2 Mac and a Windows laptop equipped with an NVIDIA RTX 3060 graphics card. Our aim was to identify and document features that do not seamlessly transition across devices. We observed that while all poses and game modes functioned as expected on the Windows device, certain poses were not compatible with the macOS device due to limitations in accessing the gamepad driver.

ASUS TUF Gaming A15 Evaluation

We conducted tests on the high-performance ASUS TUF Gaming A15 laptop to assess the poses and game modes detailed in the implementation section of our site. The device exhibited full functionality with no inherent issues linked to its specific hardware configuration.

Mac Pro M2 Max Evaluation

Testing on a macOS-based Mac Pro M2 Max was carried out to verify the compatibility of the implemented poses and modes. The only limitation we encountered concerned the gamepad driver required by the drive and joystick_wrist poses. These poses depend on a virtual gamepad extension that is currently available only on Windows, so they remain unsupported on macOS.
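The macOS limitation can be handled by filtering poses by platform before loading them. This is a hypothetical sketch: the `REQUIRES_GAMEPAD` table, the placeholder pose name and the function names are illustrative, and only the fact that drive and joystick_wrist depend on a Windows-only virtual gamepad comes from our testing. `platform.system()` is the standard-library call that reports the OS name, returning "Darwin" on macOS.

```python
import platform
from typing import Dict, List, Optional

# Which poses need the virtual gamepad. drive and joystick_wrist come from the
# testing notes; "example_keyboard_pose" is a hypothetical placeholder.
REQUIRES_GAMEPAD: Dict[str, bool] = {
    "drive": True,
    "joystick_wrist": True,
    "example_keyboard_pose": False,
}


def gamepad_available(system: str) -> bool:
    """Treat the virtual gamepad extension as Windows-only, as observed in testing."""
    return system == "Windows"


def supported_poses(system: Optional[str] = None) -> List[str]:
    """List the poses that can run on `system` (defaults to the current OS)."""
    if system is None:
        system = platform.system()  # e.g. "Windows", "Darwin" (macOS), "Linux"
    has_gamepad = gamepad_available(system)
    return [name for name, needs_pad in REQUIRES_GAMEPAD.items() if has_gamepad or not needs_pad]


print(supported_poses("Darwin"))   # ['example_keyboard_pose']
print(supported_poses("Windows"))  # ['drive', 'joystick_wrist', 'example_keyboard_pose']
```

Filtering up front lets the unsupported poses be hidden or reported clearly on macOS instead of failing when the gamepad driver is first accessed.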

User Acceptance Testing

We conducted User Acceptance Testing on our MotionInput SDK and the migrated poses to evaluate their usability and ensure they meet user requirements. We selected four testers from diverse backgrounds because they share traits we anticipate in potential users, allowing us to gather comprehensive feedback on our products. Their identities have been anonymised to protect their privacy.

We collected both qualitative and quantitative data from the testers to evaluate the usability of our SDK and the migrated poses. Qualitative data was collected through interviews, while quantitative data was collected through a survey after the users used both MotionInput poses and the SDK.

In conclusion, all testers appreciated the MotionInput SDK and said they would be willing to use it in their own projects. We also received overwhelmingly positive feedback on the migrated features from both other computer science students and children at Richard Cloudesley School.

MotionInput SDK Feedback

4 users were asked to use 3 different poses using the MotionInput SDK. Both quantitative and qualitative data was collected.

| Acceptance Requirement | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| Installation process of Python MotionInput package was seamless and straightforward. | 0 | 0 | 0 | 1 | 3 |
| UX of Python MotionInput package is intuitive and conducive to productivity. | 0 | 0 | 0 | 0 | 4 |
| Easy to import and use the Python MotionInput package | 0 | 0 | 0 | 0 | 4 |
| Easy to change the poses in the JSON file from the DLL | 0 | 0 | 0 | 0 | 4 |
| The DLL folder was easy to navigate and understand | 0 | 0 | 0 | 0 | 4 |
| The poses were working accurately using the DLL | 0 | 0 | 0 | 1 | 3 |
| I would readily incorporate the Python MotionInput package into my personal projects. | 0 | 0 | 0 | 0 | 4 |
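For a compact summary of the survey, the Likert responses can be converted to a mean score per statement. Treating ordinal Likert responses as scores from 1 to 5 is a common but debatable simplification; the shortened statement labels and the `mean_score` function below are our own.

```python
from typing import Dict, List

# Response counts copied from the survey table, ordered
# Strongly Disagree (1), Disagree (2), Neutral (3), Agree (4), Strongly Agree (5).
RESPONSES: Dict[str, List[int]] = {
    "Installation was seamless": [0, 0, 0, 1, 3],
    "UX is intuitive": [0, 0, 0, 0, 4],
    "Easy to import and use": [0, 0, 0, 0, 4],
    "Easy to change poses in JSON": [0, 0, 0, 0, 4],
    "DLL folder easy to navigate": [0, 0, 0, 0, 4],
    "Poses accurate via DLL": [0, 0, 0, 1, 3],
    "Would use in own projects": [0, 0, 0, 0, 4],
}


def mean_score(counts: List[int]) -> float:
    """Mean Likert score, mapping Strongly Disagree..Strongly Agree to 1..5."""
    total = sum(counts)
    return sum(score * n for score, n in enumerate(counts, start=1)) / total


for statement, counts in RESPONSES.items():
    print(f"{statement}: {mean_score(counts):.2f}")
```

With the counts in the table, the installation and DLL-accuracy statements average 4.75 and every other statement averages 5.00.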

Further qualitative data was collected through interviews with two of the users, chosen at random. Their responses are recorded below.

Interview 1 - Conducted 20/02/24 with a UCL student

Interview 2 - Conducted 27/02/24 with a UCL student

MotionInput v3.4 Migration Feedback

Four users were each asked to play three different games using each of three migrated poses (nine sessions in total per user). Both quantitative and qualitative data was collected.

Round 1: Users were tasked to play Dino Run, Traffic Rush and Dinosaur Game using force_field pose.

Round 2: Users were tasked to play Dino Run, Traffic Rush and Dinosaur Game using brick_ball pose.

Round 3: Users were tasked to play Dino Run, Traffic Rush and Dinosaur Game using nosebox_display pose.

| Acceptance Requirement (number of times out of 9) | 0 - 2 | 3 - 4 | 5 - 7 | 8 - 9 |
|---|---|---|---|---|
| Python poses were able to detect the intended pose accurately | 0 | 0 | 1 | 3 |
| Python poses were able to trigger the intended gesture accurately | 0 | 0 | 0 | 4 |
| Python poses were able to be detected at different angles | 0 | 0 | 0 | 4 |
| Python poses were able to be detected at different distances | 0 | 0 | 1 | 3 |

Interview 1 - Conducted 04/03/24 with a UCL student

Interview 2 - Conducted 05/03/24 with a UCL student

MotionInput Testing at Richard Cloudesley School

In addition to able-bodied users, we also tested with users with disabilities at Richard Cloudesley School. We gathered simple qualitative feedback on whether they enjoyed the games and whether they were able to play them using the poses.