TESTING


Testing Strategy

JurisBUD AI is a client-side web application, so we have to perform proper tests to ensure its usability and stability. We also want to make sure that all implemented functionalities work properly.

Testing Scope

1. JurisBUD AI will be conversing with real-world people, so we want to make sure that the AI understands users' queries correctly, which requires tests based on actual cases.

2. JurisBUD AI has a variety of functionalities. Each of them should be tested to ensure that it works properly and that users can access and use every one of them without restrictions or confusion.

Test Methodology

1. The built-in features were tested by unit testing.

2. The overall performance and the resilience of dialogs were tested by integration testing.

3. The design and UI were tested by user acceptance testing.

Automated Testing

The tool we used for automated testing is Django's `django.test` package, which is suitable for both unit tests and integration tests.

Unit testing

Unit tests were written for the key functionalities of JurisBUD AI, such as signup/login, chat generation, file upload and chat menu operations.

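```python
from django.urls import reverse

# Method of the project's test case class; self.client, self.user and
# self.token are assumed to be created in setUp() (not shown).
def test_check_token(self):
    url = reverse("check_token")
    # Query parameters must be a dict; the original wrapped it in a tuple.
    data = {
        "token": self.token.key,
        # Assuming Django's default User model, which has `username`, not `name`.
        "user": self.user.username,
    }
    # `format="json"` only encodes request bodies, so it is dropped for a GET.
    response = self.client.get(url, data)
    self.assertEqual(response.status_code, 200)
    self.assertIn("username", response.data)
    self.assertEqual(response.data["username"], "testuser")

...
```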

A satisfactory coverage rate was obtained using sample data.
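The same arrange/act/assert pattern also works for small helpers that need no HTTP client. The sketch below uses only Python's standard `unittest` module. `is_pdf_upload` is a hypothetical helper, not the project's code; it mirrors the PDF-only upload restriction reported in the user feedback by accepting a file only if its name ends in `.pdf` and its bytes start with the `%PDF-` header.

```python
import unittest


def is_pdf_upload(filename: str, content: bytes) -> bool:
    """Hypothetical upload check: accept only PDF files.

    A file passes if its name has a .pdf extension (case-insensitive)
    and its first bytes are the PDF header b"%PDF-".
    """
    return filename.lower().endswith(".pdf") and content.startswith(b"%PDF-")


class PdfUploadTests(unittest.TestCase):
    def test_accepts_pdf(self):
        self.assertTrue(is_pdf_upload("contract.pdf", b"%PDF-1.7\n..."))

    def test_extension_is_case_insensitive(self):
        self.assertTrue(is_pdf_upload("CONTRACT.PDF", b"%PDF-1.4\n"))

    def test_rejects_other_extensions(self):
        self.assertFalse(is_pdf_upload("contract.docx", b"%PDF-1.7\n"))

    def test_rejects_renamed_non_pdf(self):
        # A text file renamed to .pdf lacks the %PDF- header.
        self.assertFalse(is_pdf_upload("notes.pdf", b"plain text"))
```

Saved as a module, it runs with `python -m unittest`. Inside a Django project the class could subclass `django.test.SimpleTestCase` instead, which is intended for tests that do not touch the database.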

Integration testing

We also have a test file that covers the whole workflow: it performs an actual upload of a sample file, and signup/login is done with sample information.

```python
def test_list_chats(self):
    # Create two chats owned by the test user
    Chat.objects.create(
        author=self.user, prompt="Test Prompt 1", response="Test Response 1"
    )
    Chat.objects.create(
        author=self.user, prompt="Test Prompt 2", response="Test Response 2"
    )
    url = reverse("list_chats")
    response = self.client.get(url)
    self.assertEqual(response.status_code, 200)
    # Both chats should be returned in the listing
    self.assertEqual(len(response.data["chats"]), 2)

...
```

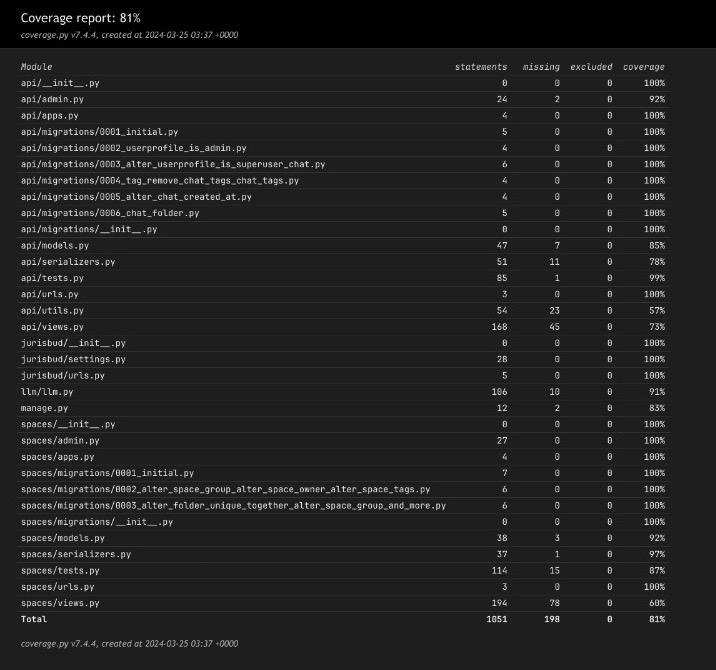

The tests achieve 81% code coverage, as shown in the coverage report generated by the Python package `coverage`.
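With branch measurement turned off, the percentage reported by `coverage` is the share of executable statements that the tests ran at least once. The sketch below shows that arithmetic; `statement_coverage` is an illustrative helper, and the statement counts in the example are made up, not figures from the project's report.

```python
def statement_coverage(statements: int, missed: int) -> float:
    """Return statement coverage as a percentage.

    statements: number of executable statements measured
    missed: number of those statements never executed by the tests
    """
    if statements <= 0:
        raise ValueError("statements must be positive")
    if not 0 <= missed <= statements:
        raise ValueError("missed must be between 0 and statements")
    return 100.0 * (statements - missed) / statements


# Illustrative numbers only: 500 statements, 95 never executed.
print(statement_coverage(500, 95))  # 81.0
```

When branch coverage is enabled (`coverage run --branch`), the total also counts branches, so the figure is no longer a pure statement ratio.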

User Acceptance Testing

To gain a better understanding of the user experience of JurisBUD AI and to find potential improvements, we asked 4 people to test our product and recorded their feedback.

Tester

The testers were chosen for characteristics relevant to the target users of JurisBUD AI: each of them works in, studies, or is at least interested in the legal industry.

Test Case

We divided the test into 4 cases. The testers went through each case and gave feedback, rating each requirement on a Likert scale and leaving their own comments.

Test case 1

We let the testers start a query and follow the workflow of JurisBUD AI until they received a response.

Test case 2

We gave them a sample document, asked them to upload it, and then asked them to ask a question about the document.

Test case 3

We let them look at their chat histories.

Test case 4

We let them freely try out chat management using spaces.

Feedback

| Acceptance Requirement | Totally Disagree | Disagree | Neutral | Agree | Totally Agree | Comments |
|---|---|---|---|---|---|---|
| JurisBUD AI runs to the end of the job | 0 | 0 | 0 | 0 | 4 | +Everyone agreed that JurisBUD AI runs until the job is finished. |
| Clear response to every user action | 0 | 0 | 0 | 0 | 4 | +Everyone agreed that responses are given clearly. |
| Reasonable answers to questions | 0 | 0 | 0 | 0 | 4 | +The LLM, accessed through the OpenAI API, answered the testers' queries successfully. |
| Attachments can be received successfully | 0 | 0 | 0 | 0 | 4 | +Everyone agreed that attachments were received. -Only PDF files are supported. |
| Chat history works | 0 | 0 | 0 | 0 | 4 | +Everyone could browse their chat histories. |
| User-friendly UI | 0 | 0 | 0 | 2 | 2 | +The UI provides the basic functionality. -A 'Back' button is needed. |
| Spaces are useful for categorization | 0 | 0 | 0 | 1 | 3 | +Most testers liked the idea of managing their chats with spaces. -The feature might be too complicated. |
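The counts in the feedback table can be condensed into one score per requirement. The sketch below assumes the usual 1 to 5 coding (Totally Disagree = 1, Totally Agree = 5); the counts are copied from the table, while the dictionary keys and helper names are illustrative.

```python
# Response counts from the feedback table, ordered as
# (Totally Disagree, Disagree, Neutral, Agree, Totally Agree).
FEEDBACK = {
    "Runs to the end of the job": (0, 0, 0, 0, 4),
    "Clear response to every user action": (0, 0, 0, 0, 4),
    "Reasonable answers to questions": (0, 0, 0, 0, 4),
    "Attachments received successfully": (0, 0, 0, 0, 4),
    "Chat history works": (0, 0, 0, 0, 4),
    "User-friendly UI": (0, 0, 0, 2, 2),
    "Spaces useful for categorization": (0, 0, 0, 1, 3),
}


def likert_mean(counts: tuple[int, ...]) -> float:
    """Mean score, coding the five answers as 1..5."""
    total = sum(counts)
    return sum(score * n for score, n in enumerate(counts, start=1)) / total


def agreement_rate(counts: tuple[int, ...]) -> float:
    """Share of testers who answered Agree or Totally Agree."""
    return (counts[3] + counts[4]) / sum(counts)


for requirement, counts in FEEDBACK.items():
    print(f"{requirement}: mean {likert_mean(counts):.2f}, "
          f"agreement {agreement_rate(counts):.0%}")
```

This gives a mean of 5.00 for the first five requirements, 4.50 for the UI and 4.75 for spaces, with 100% agreement everywhere: the only softer ratings concern UI friendliness and spaces, which is where the negative comments are.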

Conclusion

We are glad to see that most testers are satisfied with the current product. Their comments mean a lot to us: they show that the time and effort we spent have paid off, because our users like the product. More importantly, they also show that JurisBUD AI is not perfect, which points the way for further development and improvement.

Jasmine

20 years old, an international student at Queen Mary, University of London

She is currently an undergraduate student in Business with Law.

Harry

47 years old, an experienced lawyer

He has worked in the legal industry for about 10 years, providing legal assistance to his clients.

William

20 years old, a second-year university student

He is particularly interested in contracts and advanced technologies.

Nick

25 years old, a legal assistant

He works for a small business, providing legal opinions.