Introduction

This project aims to demonstrate the potential of the HoloLens by exploring what it is capable of and finding new ways to use this new piece of technology. By bringing software and hardware together, we aim to produce APIs that developers can use in their own work.

At UCL HoloLens gaming, we are trying to push the boundaries of Augmented Reality (AR) and capture what it brings to the world, along with its possible applications. To achieve this, we must first understand what has already been published and how the product works. Microsoft has provided many demo examples that explore the various capabilities and features integrated into the system.

The HoloLens is a device which delves into the world of “mixed reality”. This means that users merge objects from the virtual world with the physical one. This blend of environments allows users to virtualise the working world and bring digital content to life. The HoloLens uses three intuitive methods to provide an immersive experience when interacting with the world: Gaze, Gesture and Voice.

- Gaze: Built-in sensors use head movements as a cursor. This gives a new meaning to point and click. It is very accurate and responsive.

- Gesture: Users can manipulate the size and location of virtual objects simply with hand motions and gestures.

- Voice: Cortana assists with navigation and accepts spoken commands to control applications.

Further information regarding the Hardware Specification can be found on the Microsoft Website:

https://developer.microsoft.com/en-us/windows/holographic/hardware_details

The objective of this project is open-ended. Its guiding principle is to develop useful APIs and project files that can be used to further work on the HoloLens. There are various aspects to explore, and this exploration is assisted by the Microsoft developer kit and the documentation provided for its prewritten libraries. Using a combination of Unity and Visual Studio Team Services, Team 11 will strive to create new kits and demonstrate the capabilities of the HoloLens and “mixed reality”.

Research Links

Apart from the crucial HoloLens academy [1] to learn about the functionality of the HoloLens, as well as the developer forums [2] and documentation [3], we also used various external tools and papers to further our capabilities with the software and hardware we have been given.

In the first term, we decided to pursue marker tracking to enhance our interaction capabilities and bring something that was almost novel to the HoloLens at the time. To this end, we started with the Aruco object and marker tracking library [4], and its implementation in OpenCV. We also researched the AprilTag library [5], which has almost the same features and no dependencies. In the end we decided to continue development with the Aruco library, since it offered additional features over AprilTag that we felt were important enough to warrant the additional difficulty of integrating it with the HoloLens.

Apart from these two libraries, we also briefly looked at Vuforia (a commercial library which also has marker tracking features) [6], but at the time it was only available for a paid subscription.

The rest of our research was more “hands-on”. Since the HoloLens is a relatively new product, we had to conduct manual tests to see what feels best for the user: testing object velocities, the materials to use, and interactions by gesture, movement and voice, as well as the reliability of marker tracking, slow stabilization improvements, snapping, and the actual size of the levels. All of these factors had to be improved iteratively as we worked with the HoloLens over an extended period of time.

[1] "Holographic Academy", Windows Dev Center - Mixed Reality, 2017. [Online]. Available: https://developer.microsoft.com/en-us/windows/holographic/academy [Accessed: 21- Apr- 2017].

[2] "HoloLens App Development Forums", Hololens Developer Community, 2017. [Online]. Available: https://forums.hololens.com/ [Accessed: 21- Apr- 2017].

[3] "HoloLens Documentation", Developer.microsoft.com, 2017. [Online]. Available: https://developer.microsoft.com/en-us/windows/holographic/documentation [Accessed: 21- Apr- 2017].

[4] S. Garrido-Jurado, R. Muñoz-Salinas, F. Madrid-Cuevas and M. Marín-Jiménez, "Automatic generation and detection of highly reliable fiducial markers under occlusion", Pattern Recognition, vol. 47, no. 6, pp. 2280–2292, 2014. Available: http://eecs.mines.edu/Courses/csci507/schedule/24/ArUco.pdf. DOI: 10.1016/j.patcog.2014.01.005

[5] E. Olson, "AprilTag: A robust and flexible visual fiducial system", IEEE International Conference on Robotics and Automation (ICRA), pp. 3400-3407, 2011. Available: https://april.eecs.umich.edu/pdfs/olson2011tags.pdf

[6] "Vuforia | Augmented Reality", Vuforia.com, 2017. [Online]. Available: https://www.vuforia.com/ [Accessed: 21- Apr- 2017].

Aruco object markers and tracking: http://www.uco.es/investiga/grupos/ava/sites/default/files/GarridoJurado2014.pdf

AprilTag markers generation and tracking: https://april.eecs.umich.edu/papers/details.php?name=olson2011tags

Research paper provided by our teaching assistant – concerns the use of hand gestures in virtual reality applications:

http://www.samehkhamis.com/taylor-siggraph2016.pdf

Unity Test Tools: https://unity3d.com/learn/tutorials/topics/production/unity-test-tools

Windows Developers Center: Fundamentals of Holographic Development: https://developer.microsoft.com/en-us/windows/mixed-reality/development

April Robotics Laboratory: https://april.eecs.umich.edu/

University of Córdoba Report- Automatic generation and detection of highly reliable fiducial markers under occlusion: http://eecs.mines.edu/Courses/csci507/schedule/24/ArUco.pdf

These resources helped us build expertise, primarily in marker tracking, but also in general Unity functionality.

Comparison of Marker Tracking Libraries

- Vuforia

- Apriltag

- ARToolkit

- Aruco

Vuforia

Pros: Vuforia is an established commercial library with a lot of features, and is now officially supported for the HoloLens. It also offers a lot of flexibility in the use of different marker types, and even custom images.

Cons: At the start of the project, when we looked into the various library options, HoloLens support for Vuforia was still in beta, and it wasn't clear whether it was accessible to every developer. This only changed later, when we had already started work on our own solution, and it was still not clear whether the HoloLens features were included in the developer license. Additionally, there seemed to be no way to use it in the Unity Editor, which we needed in order to test our code while we did not have access to the HoloLens.

AprilTag

Pros: AprilTag is a robust library for marker tracking whose main advantage is that it is lightweight and free of any dependencies. For a while, Microsoft also maintained a port capable of running on the HoloLens in their HoloToolkit.

Cons: Because it is very lightweight, the library does not provide as many convenient features as other libraries. Specifically, it does not offer direct pose estimation values, and our knowledge of marker tracking was not sufficient to derive these ourselves from the library's output values.

ARToolkit

Pros: This is another established AR library, but it is open source rather than commercial like Vuforia. It offers a similar level of features to Vuforia for the marker tracking we are interested in. A HoloLens wrapper for it was released here (https://github.com/qian256/HoloLensARToolKit) while we were working on our project.

Cons: The wrapper for ARToolkit only appeared in the middle of our project timeline, so we would have had to drop our progress up to that point and spend more time integrating this library into our project. Like Vuforia, it also offered no easy way of using it in the Unity Editor, an important feature while we had limited access to the HoloLens.

Aruco

Pros: Aruco is another well-known marker tracking library, to the point of being integrated into OpenCV as its own module. Once OpenCV modules for the HoloLens were available, this made it easy to use, since it required no additional compilation of the Aruco library itself. Furthermore, it allowed us to use the x64-compiled version of the module for the Unity Editor version of the tracking plugin with the same plugin code base.

Cons: While Aruco provides many useful features, such as direct pose estimation for tracked markers, there was still a lot of integration work to do to make the results match the view on the HoloLens, and to improve the general stability of the tracking results. Any optimisations that would have been provided by libraries such as ARToolkit or Vuforia had to be implemented by us.

Ultimately, we decided to use Aruco, since it allowed us to create our own plugin that would run on the HoloLens and in the Unity Editor, while also having enough features built-in to make implementation possible within our timeframe. We implemented some of the features missing from Aruco, such as pose data filtering, on our own, and with these we were able to reach the results that we wanted.
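As an illustration of what pose data filtering can look like, the sketch below applies simple exponential smoothing to successive marker positions. This is not the filter used in our plugin, which is not described here; the class name `PositionSmoother` and the parameter `alpha` are hypothetical, and rotations would need separate treatment (for example, interpolating quaternions).

```python
class PositionSmoother:
    """Exponential smoothing of 3D positions (illustrative only).

    `alpha` is a hypothetical tuning parameter: values near 1 follow the
    raw measurements closely, values near 0 smooth more but lag more.
    """

    def __init__(self, alpha):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None  # no estimate until the first measurement arrives

    def update(self, measurement):
        """Blend a new (x, y, z) measurement into the running estimate."""
        if self.state is None:
            # The first measurement is taken as-is.
            self.state = tuple(float(v) for v in measurement)
        else:
            a = self.alpha
            self.state = tuple(
                a * m + (1.0 - a) * s
                for m, s in zip(measurement, self.state)
            )
        return self.state


smoother = PositionSmoother(alpha=0.5)
first = smoother.update((0.0, 0.0, 0.0))
second = smoother.update((1.0, 0.0, 0.0))  # a sudden jump is halved
third = smoother.update((1.0, 0.0, 0.0))   # the estimate converges gradually
print(first, second, third)
```

A smaller `alpha` suppresses more jitter in the tracked pose, at the cost of the hologram trailing behind a marker that is really moving.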

Marker Tracking Performance Testing

The tracking plugin receives an 896x504 image from the HoloLens camera. Within the plugin, we have the option to scale the image down to improve tracking performance. However, doing so reduces tracking precision, since a smaller image makes markers harder to detect and leaves less detail with which to resolve their position.
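To give a sense of how much data is removed by scaling, the following sketch computes the resulting image size and pixel count for a few divisors. It assumes that the scale factor is applied to each side of the image, so a factor of 1/4 leaves 1/16 of the pixels; the helper names and the chosen divisors are illustrative and not part of the plugin.

```python
# Camera image size as stated in the text.
FULL_WIDTH, FULL_HEIGHT = 896, 504


def scaled_size(width, height, divisor):
    """Return (width, height) after dividing each side by `divisor`.

    Assumption: the scale factor applies per side, not to the pixel count.
    """
    return width // divisor, height // divisor


def pixel_fraction(divisor):
    """Fraction of the original pixel count left after scaling."""
    return 1 / (divisor * divisor)


# Illustrative divisors: full size, 1/2, 1/4 and 1/8.
sizes = {d: scaled_size(FULL_WIDTH, FULL_HEIGHT, d) for d in (1, 2, 4, 8)}

for d, (w, h) in sizes.items():
    print(f"1/{d}: {w}x{h}, {w * h} pixels, "
          f"{pixel_fraction(d):.4f} of the full image")
```

Under this assumption the pixel count falls quadratically with the divisor, which is why even a modest reduction can noticeably affect how much detail is left to detect a marker.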

To find the best balance between performance and accuracy, we tested performance of marker tracking at 4 internal image sizes: The full image, and the image scaled down by a factor of 1/2, 1/4, and 1/8 respectively. We had the HoloLens track a single marker, and measured the time taken to track the camera image each frame. Successive frame times are shown and compared in the graph below:

The frame times fluctuate quite a lot overall, especially at larger image sizes. Nevertheless, it can be seen that smaller image sizes do lower the time required for tracking. Interestingly, there is almost no difference between the 1/4 and 1/8 image sizes, showing that the performance gains become less pronounced at very small image sizes. Additionally, during testing we saw that tracking accuracy dropped noticeably at 1/8 image size. Because of this, we settled on a reduction factor of 1/4 for the game.

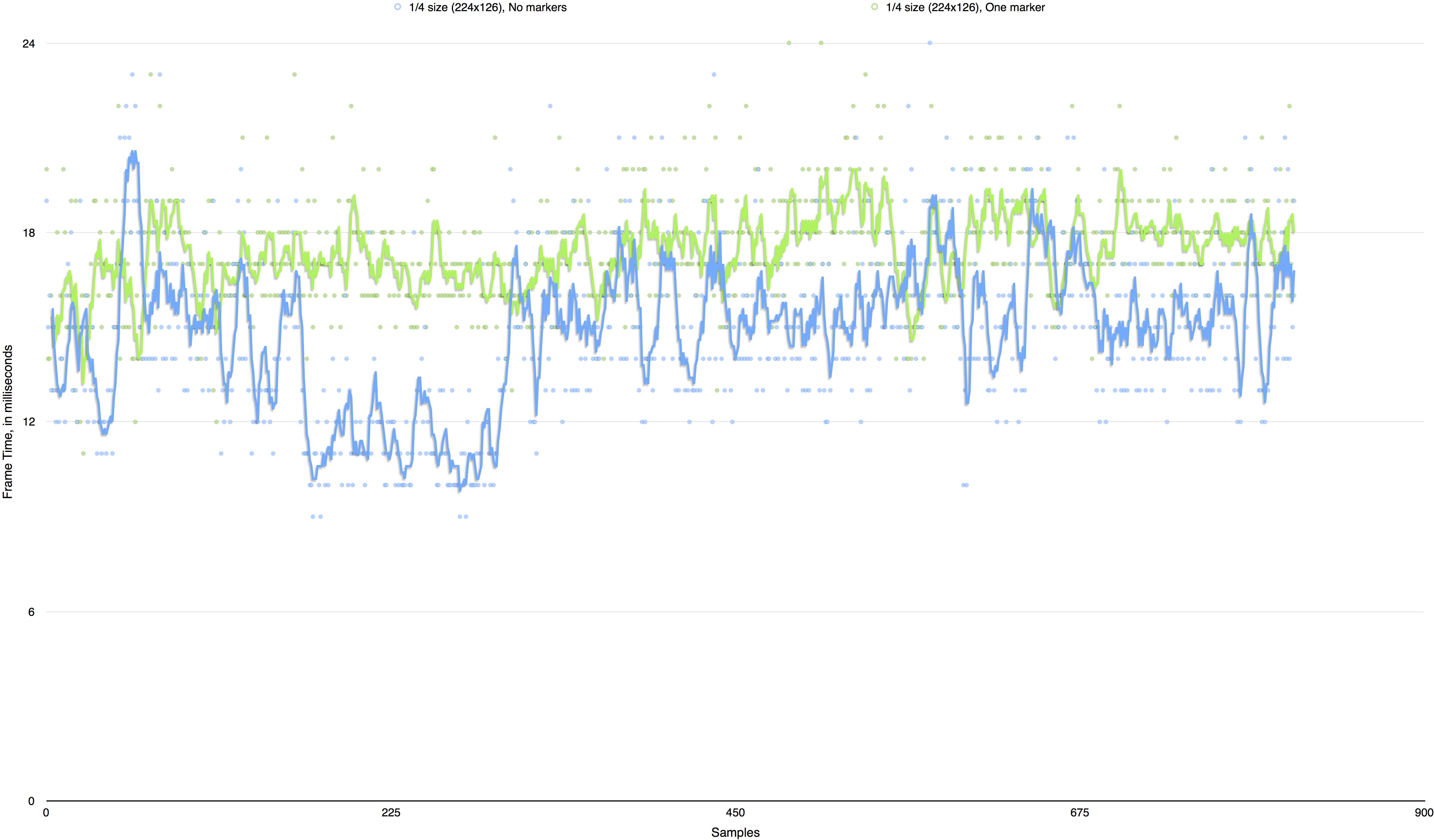

We also tested how the number of markers visible in the image affects tracking performance. In the following graph, frame times are shown for tracking at 1/4 image size, first with no markers visible, and then with one marker in the image.

Here it can be seen that the number of markers in the image only has a small impact on performance, if any. Knowing this meant that we did not have to worry about performance if a level in the game used multiple markers simultaneously.
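The per-frame measurements described in this section can be sketched roughly as follows. This is a minimal timing harness under stated assumptions, not the code that ran on the HoloLens: `time_frames`, `summarise` and the stand-in `fake_tracker` are hypothetical names, and the real tracking call would take the place of `fake_tracker`.

```python
import statistics
import time


def time_frames(process_frame, frames):
    """Run `process_frame` on each frame and return the times in milliseconds."""
    times_ms = []
    for frame in frames:
        start = time.perf_counter()
        process_frame(frame)
        times_ms.append((time.perf_counter() - start) * 1000.0)
    return times_ms


def summarise(times_ms):
    """Summarise a series of frame times: mean, spread and worst case."""
    return {
        "mean": statistics.mean(times_ms),
        "stdev": statistics.pstdev(times_ms),  # population standard deviation
        "max": max(times_ms),
    }


def fake_tracker(frame):
    """Stand-in for the real tracking call (hypothetical): sums the pixels."""
    return sum(frame)


# Dummy frames: flat lists of zeros standing in for camera images.
frames = [[0] * 1000 for _ in range(20)]
measured = time_frames(fake_tracker, frames)
print(summarise(measured))
```

Reporting the spread and the worst case alongside the mean is useful here, because the frame times fluctuate considerably and a single average would hide that.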