Design Principles

Design Evolution

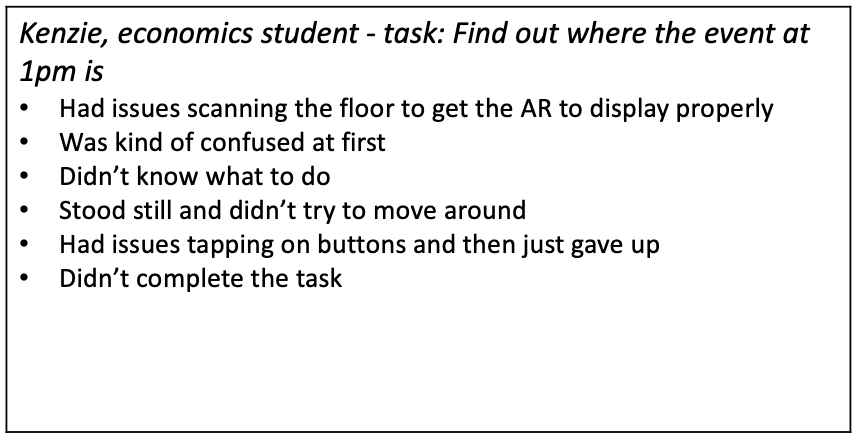

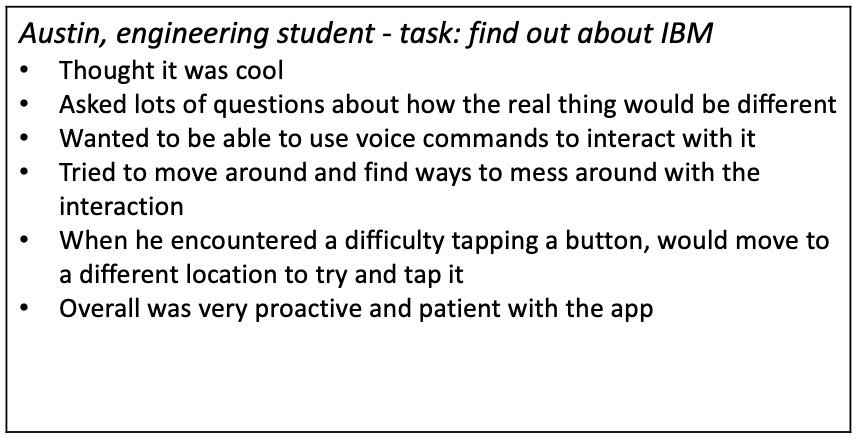

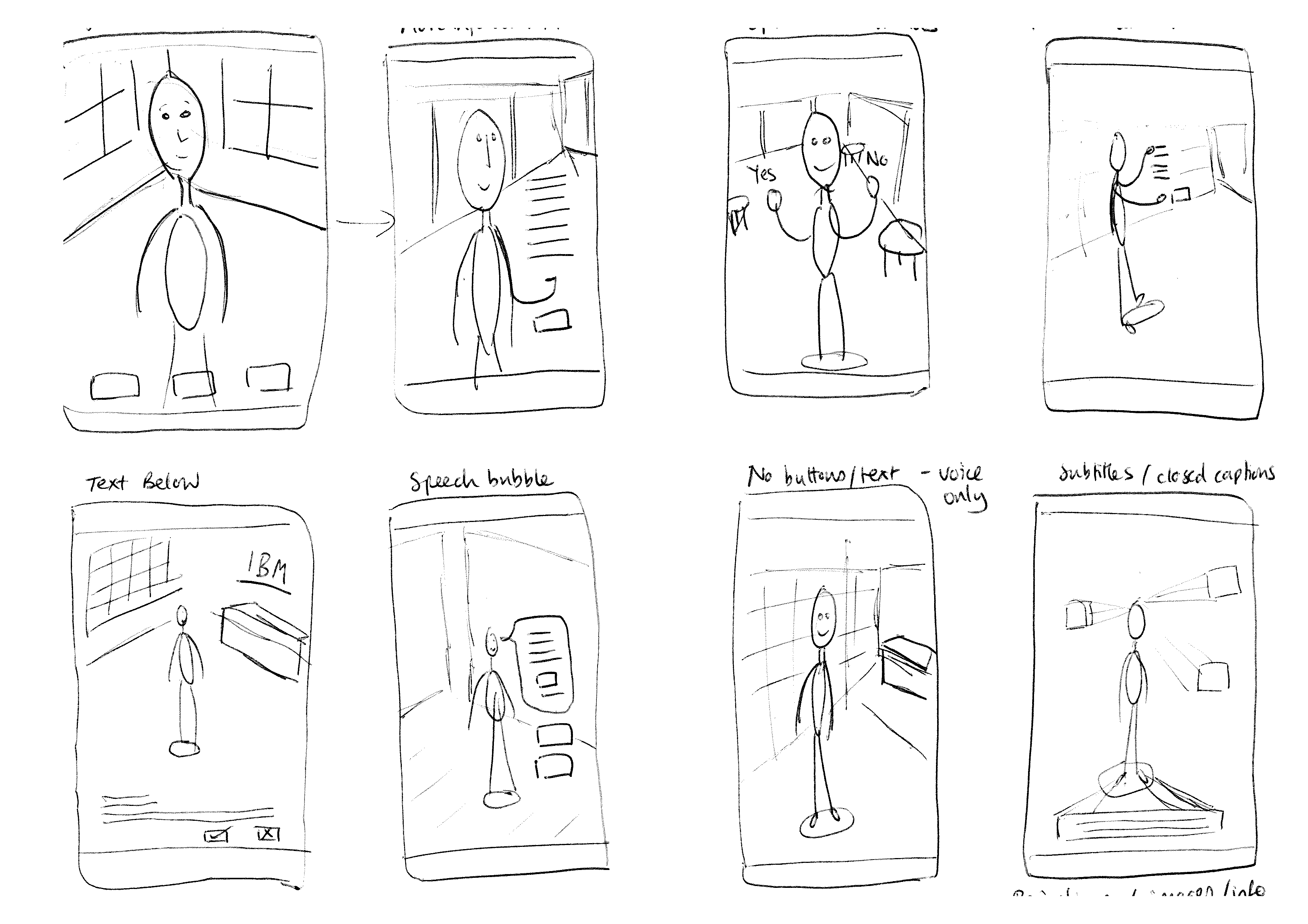

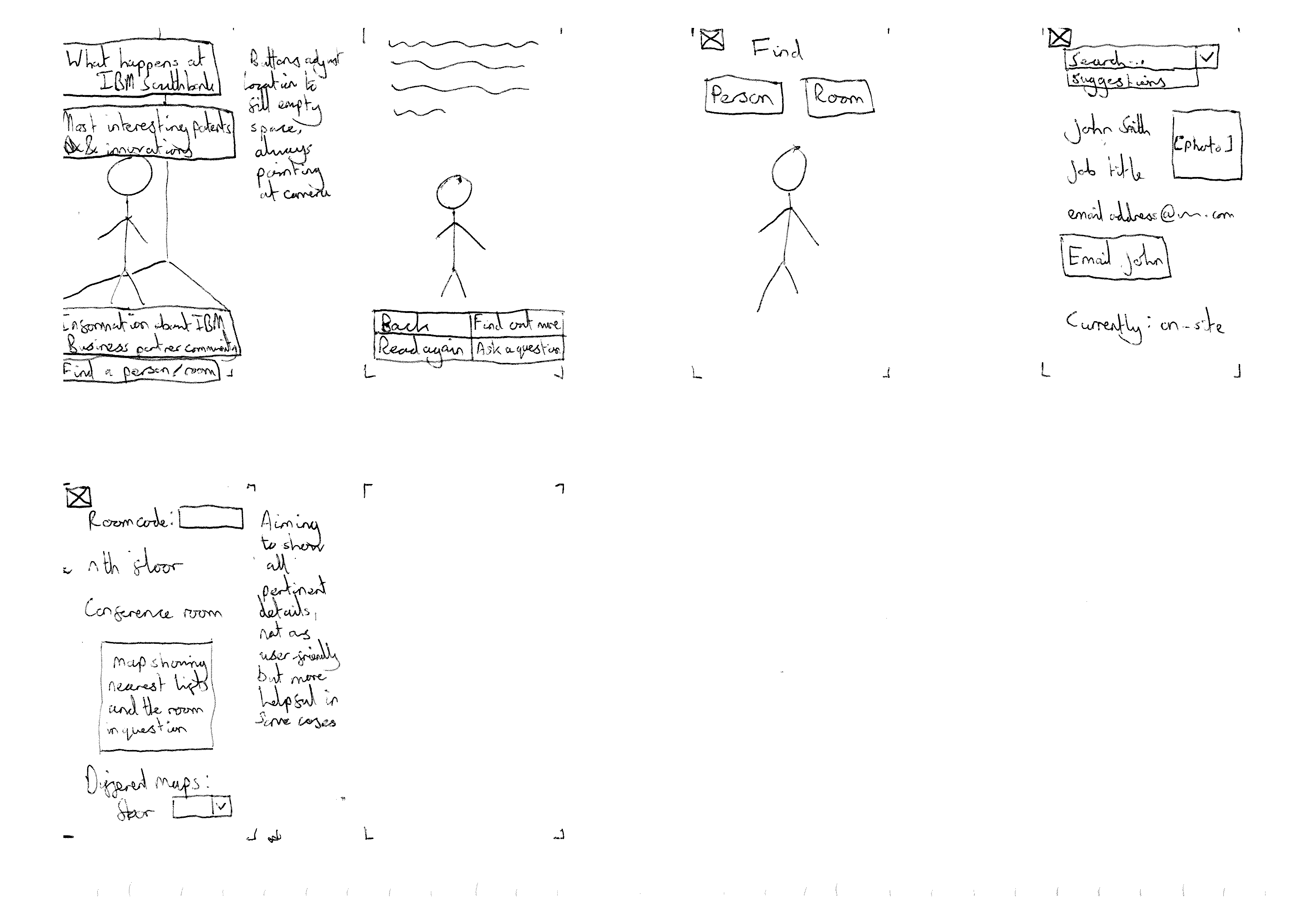

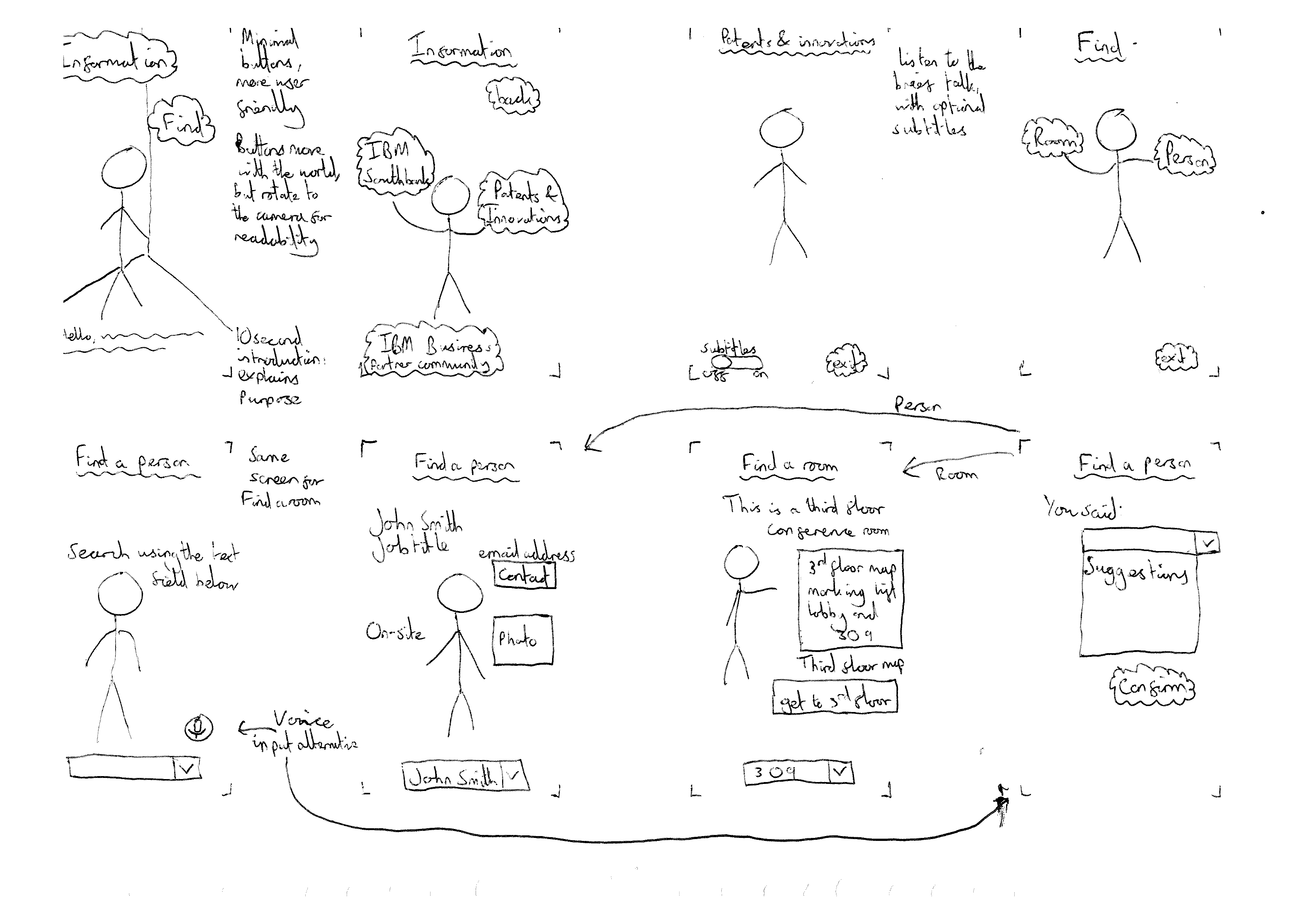

Our interaction design went through several iterations. We started with hand-drawn sketches to generate different ideas for the experience of interacting with the avatar.

In a second sketch, we showed the user trying to find the office of “John Smith”, who works in the building. We drew the buttons and information as an overlay in “screen space”, i.e. they stay fixed on the screen and do not move around as the user moves. We showed a lot of information about the person to be found (picture, email address, etc.). The advantages of this approach were that the information was easy to see and the app looked like other apps people might have used. However, relying on so many screen-space overlays and buttons partly defeats the point of using AR, because it discourages the user from moving and makes the experience look like a static, ordinary app.

For the third sketch, we drew another storyboard, this time showing buttons in “world space” that moved around with the avatar, and exploring possibilities for voice recognition interaction. We also reduced the amount of information displayed within each scene.

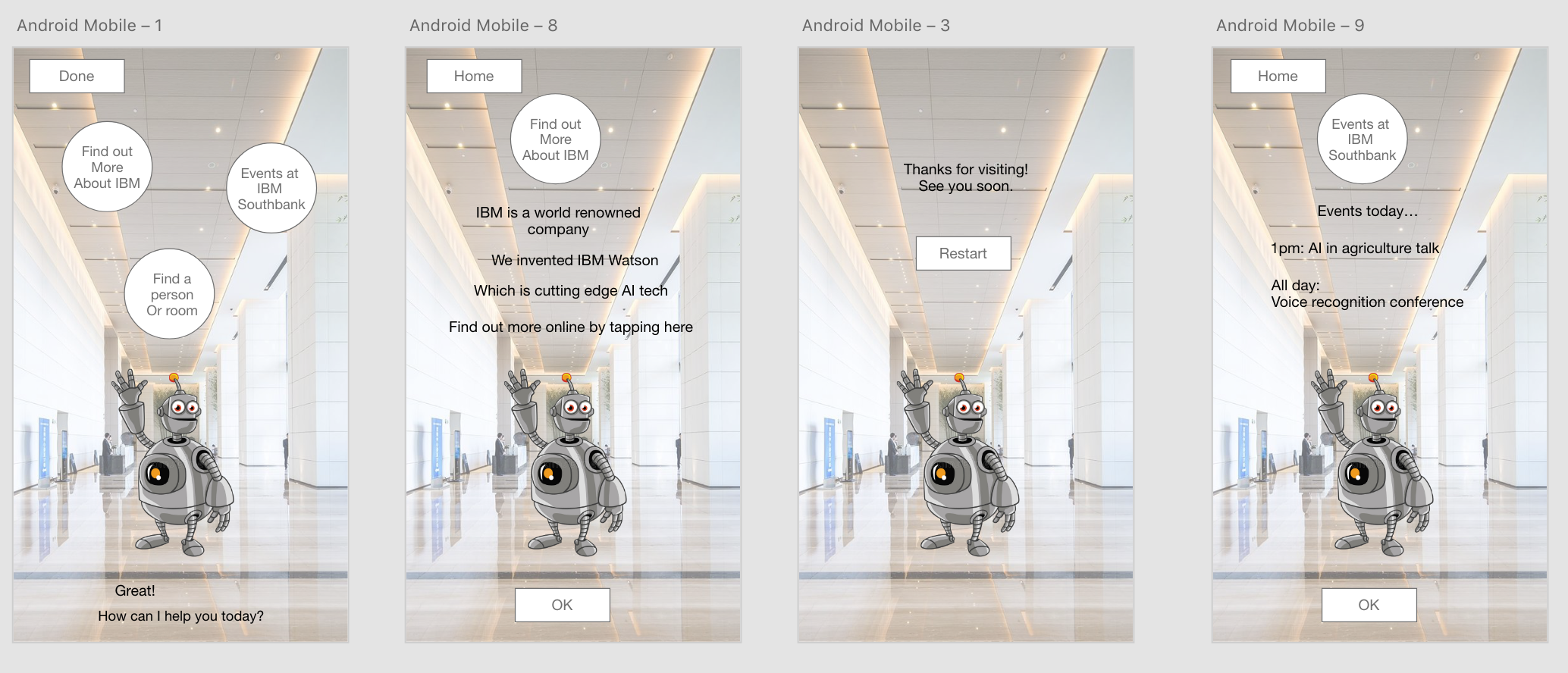

From these sketches, we developed a basic wireframe prototype using Adobe XD.

Since this was a 2D prototype, we were not able to simulate many characteristics of AR, but we used it to test the branching structure of the interaction. Here you can see a user interacting with our wireframe prototype. Following feedback from users at this stage, and earlier comments in our interviews, we realised it was important to add a “welcome” and a “goodbye” message, both to make the interaction as a whole feel more conversational and to explain what the AR was and how to interact with it. We also realised how important it was for the user to have an “info” or “help” button, so that they had an “emergency exit” at all times if something went wrong or they got confused.
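The branching structure described above can be thought of as a small state machine. The following is only an illustrative sketch, not the structure of our actual prototype: the “welcome”, “goodbye” and “help” scenes come from the text, while the `MENU` and `FIND_PERSON` scenes, the action names and the `next_scene` function are hypothetical names chosen for the example.

```python
from enum import Enum, auto


class Scene(Enum):
    WELCOME = auto()
    MENU = auto()          # hypothetical hub scene
    FIND_PERSON = auto()   # hypothetical task scene
    HELP = auto()
    GOODBYE = auto()


# Hypothetical branching table: (current scene, user action) -> next scene.
TRANSITIONS = {
    (Scene.WELCOME, "continue"): Scene.MENU,
    (Scene.MENU, "find_person"): Scene.FIND_PERSON,
    (Scene.MENU, "exit"): Scene.GOODBYE,
    (Scene.FIND_PERSON, "done"): Scene.MENU,
    (Scene.HELP, "back"): Scene.MENU,
}


def next_scene(current: Scene, action: str) -> Scene:
    """Return the scene that follows `current` when the user performs `action`."""
    # "help" acts as the emergency exit: reachable from every scene
    # except the final goodbye scene.
    if action == "help" and current is not Scene.GOODBYE:
        return Scene.HELP
    # Unknown actions leave the user where they are.
    return TRANSITIONS.get((current, action), current)
```

Handling “help” outside the transition table, rather than adding one entry per scene, means the emergency exit cannot be forgotten when a new scene is added.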

Although we gained some useful insights from our wireframe prototype, we realised that we needed something closer to a real AR experience in order to properly understand user interactions in AR. We tried two AR prototyping tools, ARTorch and WiARFrame. Using these programs, we made an AR prototype that users could interact with on their phone, with buttons in world space. We also researched best practice for AR design from existing sources: the Google AR design guidelines [11] (https://designguidelines.withgoogle.com/ar-design/) and “Prototyping for AR”, a presentation at Apple WWDC 2018 [12] (https://developer.apple.com/videos/play/wwdc2018/808).

Our 2D digital prototype was a very useful basis for this 3D one as it provided a map for the scene structure. We included the “welcome” and “goodbye” screens from iteration 2 of our 2D prototype. Here are some images of people using our prototype.