Project Background

With advances in mobile graphics and machine learning, lifelike augmented reality avatars are becoming a feasible alternative to a human presence in customer-facing interactions. Touchscreen check-in systems are already common in receptions, but they remove the social element that a human receptionist brings and lack identity, losing the ‘face of the company’ that a person at the front desk provides. The technology now exists for some of this reception functionality to be delivered by a virtual assistant. Placing a simulated humanoid character in an augmented reality setting is an attempt to re-humanize this process, and it gives a company more control over the first impression a visitor forms on arriving at a building.

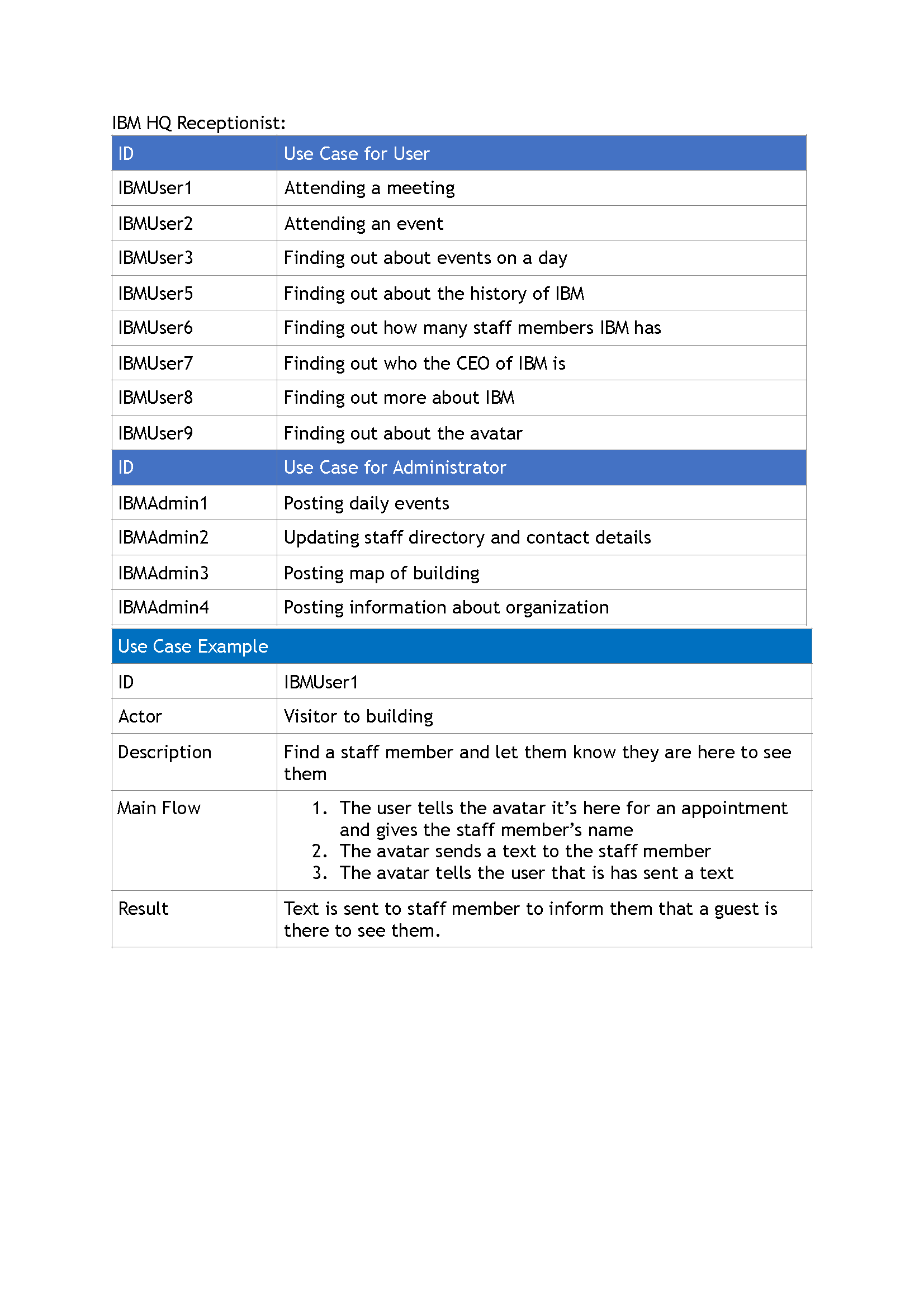

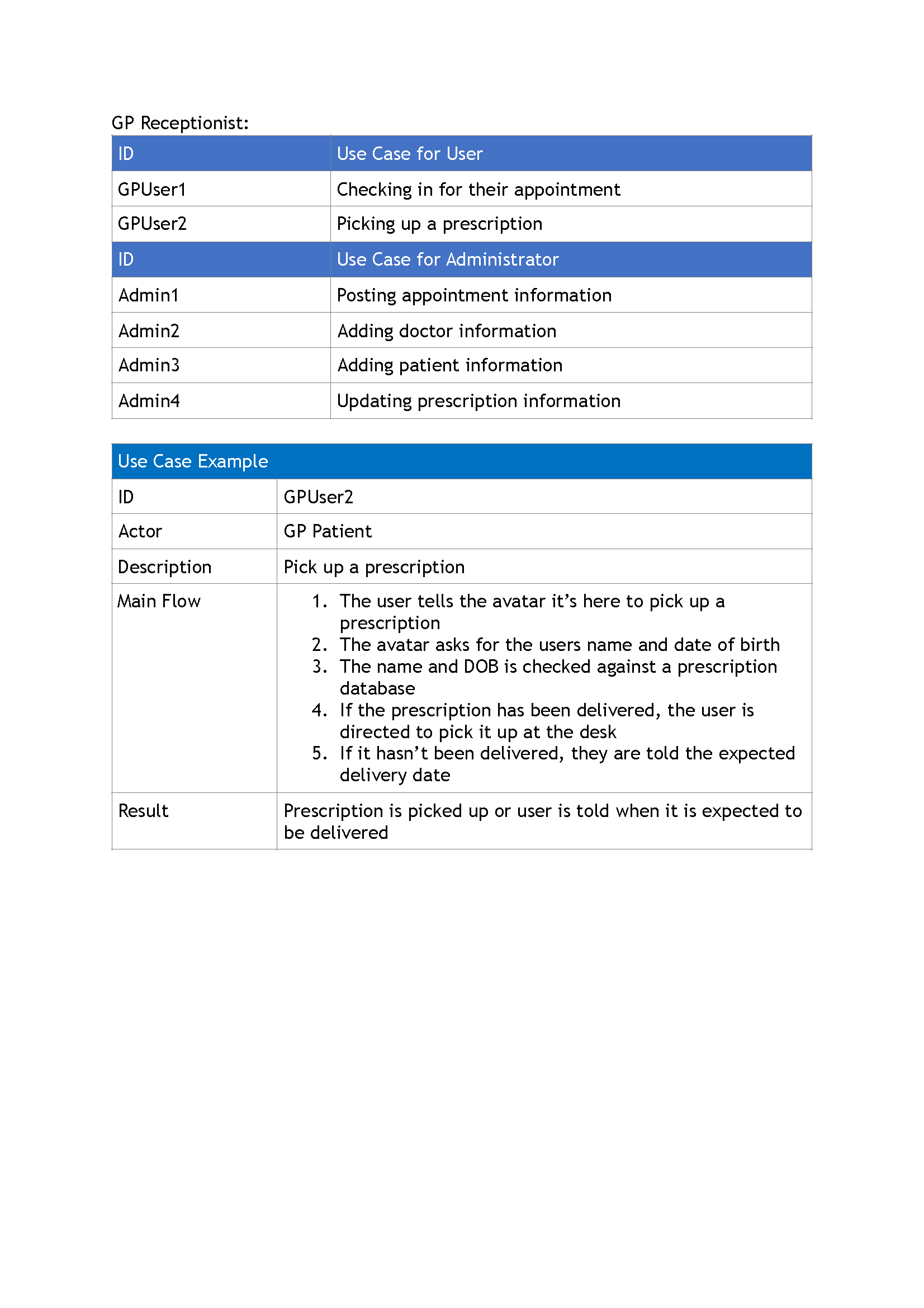

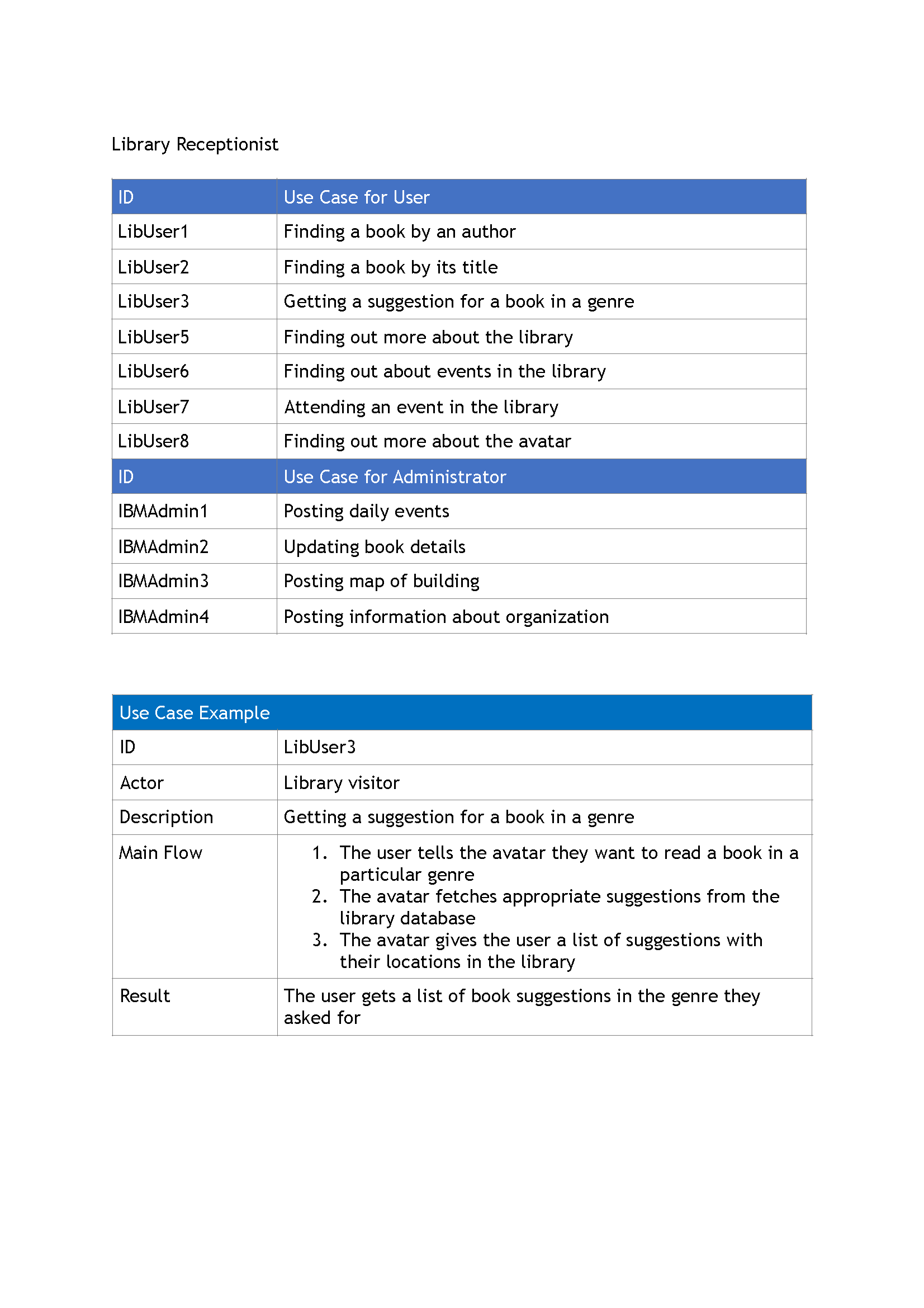

Our original task was to create a receptionist for use at IBM Headquarters in London. The brief was that it should ideally be able to provide information about the company and any events happening in the building, as well as serve as an impressive demonstration of what IBM technology can do. Additionally, as a stretch goal, we wanted to build proof-of-concept avatars for other settings, such as a library, a museum or a healthcare facility, to show that the technology could also be used by organizations other than IBM.

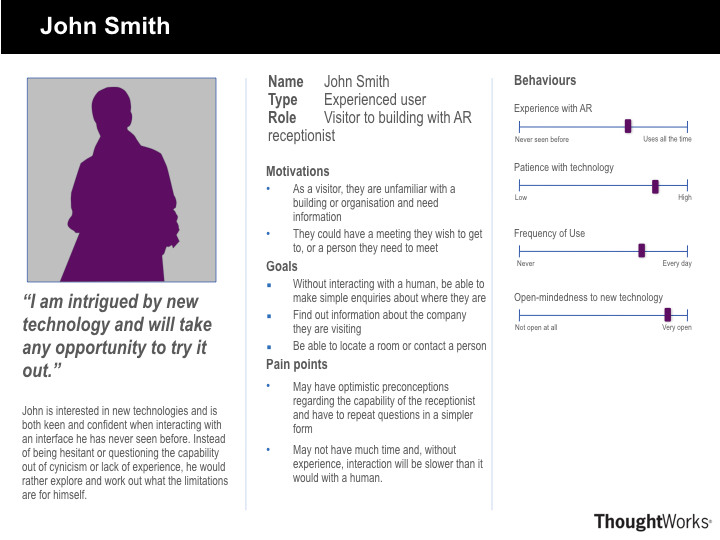

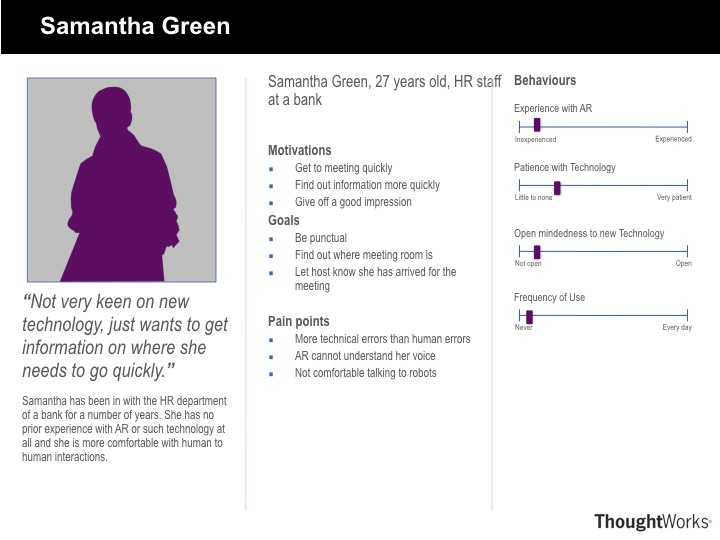

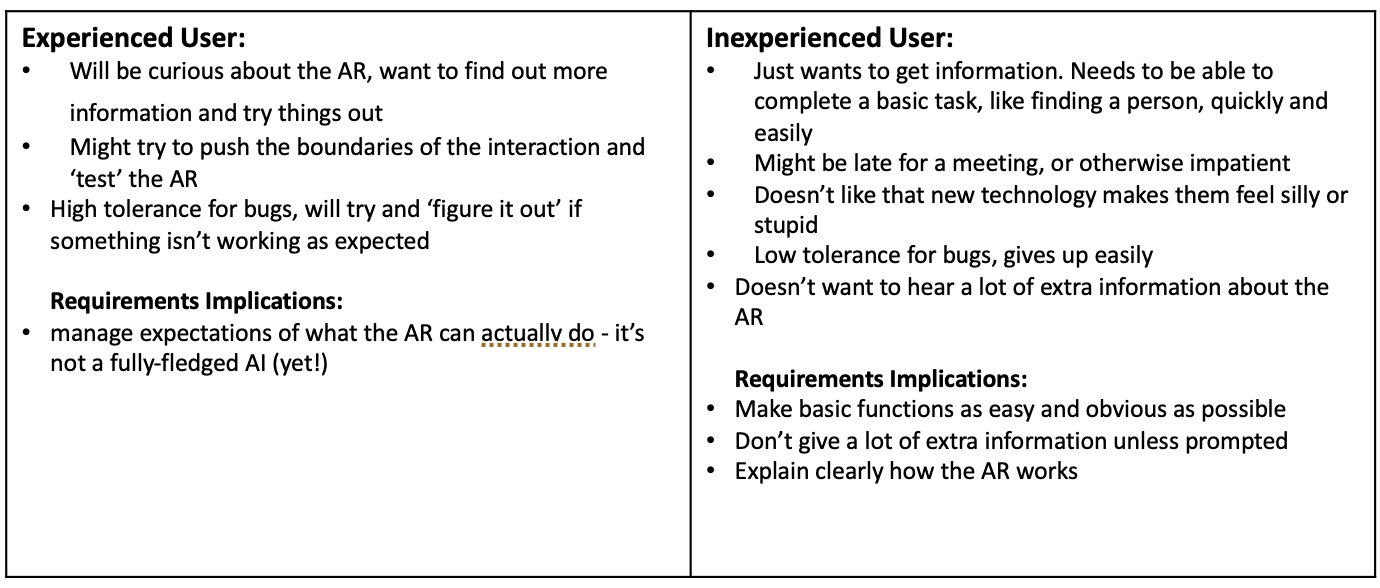

We had to consider that our users could be anybody who walks into a building with a reception, and that augmented reality is a relatively new technology that many people have not seen before. It was therefore important to consult a wide range of users and to make our project as user-friendly as possible.