How we made choices about which technologies to use

The main backend of our project, including voice recognition, conversion and chatbot technology, was provided by IBM. This constrained our technology choices somewhat, because we needed frameworks and software that work well with IBM technology.

Devices

Because we were using AR, we had to develop for mobile devices with AR capability. Android and Apple devices were our main options, since both have relatively advanced AR development packages (ARCore and ARKit respectively). We wanted our project to be as widely available as possible, so our aim was to support both platforms. We decided to start with Android for two reasons. First, our department could provide Android devices. Second, we judged that the Android development pipeline would be slightly easier: Apple places stricter controls on certain capabilities, such as facial recognition (which we initially considered using for user sentiment analysis) and microphone and camera permissions, and an Apple Developer Account is required to deploy an app to a phone. We therefore chose to start with Android, with a view to deploying on iOS at a later stage.

Game Engine and Watson SDKs

Our main choices were the Unreal and Unity [5] game engines. The decision was made much easier because IBM Watson, which we were required to work with, already has an SDK for Unity [6],[7]. We could have written our own interface to the Watson cloud, but we felt this would be unrealistic given our skill level and the time available. For this reason, we chose to work with Unity and the Watson Unity SDKs.

AR Packages

With cross-platform development as our ultimate goal, we wanted an AR package that worked on both Android and Apple devices, so ARCore or ARKit alone did not suit our needs. That left two main options that work with Unity: Vuforia or Unity's own AR Foundation [8]. We judged that Vuforia probably had slightly better AR capability, but AR Foundation was developing rapidly and was completely free, whereas Vuforia requires a subscription. Given our goal of creating a low-cost, open-source project, we decided to take a chance on AR Foundation because we felt it was more future-proof and likely to improve significantly over time. In fact, AR Foundation released a new version at the beginning of 2020, which we were able to use in our project.

Backend

IBM Watson Assistant can call out to an HTTP webhook to request information, so we knew we would need to host a webhook at a URL. Since an Azure subscription was provided for the project, it made sense to use an Azure Function as our webhook. This also meant that we could write the webhook in C#, the same language we use for scripting in Unity, which reduced the number of new technologies we had to learn.

We needed to connect our webhook to a simple database. We decided to use a relational database (as opposed to a non-relational one) because the data we wanted to store and query (staff names and contact information, book names and authors, etc.) would be highly structured. In addition, we might want to write more complicated queries using joins: for example, if a patient provided their name to check in to an appointment, we might look in another table to find which doctor they were attached to. We chose the SQL database solution provided by Azure, which conveniently allowed us to put both the database and the webhook in the same resource group; in a real deployment, this would let us price them and manage their usage together [9],[10].
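The patient check-in lookup described above can be illustrated with a minimal sketch. This is not our Azure Function or our Azure SQL schema: it uses Python and an in-memory SQLite database purely so that the example is self-contained, and the table names (`patients`, `doctors`), the column names, the sample rows, the `patient_name` request field and the `handle_webhook` function are all hypothetical. It only shows the general shape of a webhook that receives JSON, runs a join across two tables, and returns JSON.

```python
import json
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical, related tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE doctors (
            id   INTEGER PRIMARY KEY,
            name TEXT NOT NULL,
            room TEXT NOT NULL
        );
        CREATE TABLE patients (
            id        INTEGER PRIMARY KEY,
            name      TEXT NOT NULL,
            doctor_id INTEGER NOT NULL REFERENCES doctors(id)
        );
        -- Made-up sample data for illustration only.
        INSERT INTO doctors (id, name, room) VALUES (1, 'Dr Patel', 'Room 3');
        INSERT INTO patients (id, name, doctor_id) VALUES (1, 'Alex Smith', 1);
        """
    )
    return conn


def handle_webhook(conn: sqlite3.Connection, request_body: str) -> str:
    """Answer a check-in request: JSON string in, JSON string out."""
    params = json.loads(request_body)
    # Join the patient row to the doctor row it references.
    # The "?" placeholder keeps user input out of the SQL text.
    row = conn.execute(
        """
        SELECT d.name, d.room
        FROM patients AS p
        JOIN doctors AS d ON d.id = p.doctor_id
        WHERE p.name = ?
        """,
        (params["patient_name"],),
    ).fetchone()
    if row is None:
        return json.dumps({"found": False})
    return json.dumps({"found": True, "doctor": row[0], "room": row[1]})


if __name__ == "__main__":
    demo = build_demo_db()
    print(handle_webhook(demo, json.dumps({"patient_name": "Alex Smith"})))
```

The join is what makes a relational store attractive here: the patient row holds only a reference to the doctor, so the doctor's details live in one place and can be updated without touching patient records.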

References

[1] Mondly AR, "MondlyAR - World's first augmented reality language learning app", Mondly.com. [Online]. Available: https://www.mondly.com/ar. [Accessed: 21-Oct-2019].

[2] Arcade Ltd, "Roxy the Ranger: The story behind the world's first AR chatbot in a visitor attraction - Arcade", Arcade. [Online]. Available: https://arcade.ltd/sea-life-ar-chatbot. [Accessed: 18-Oct-2019].

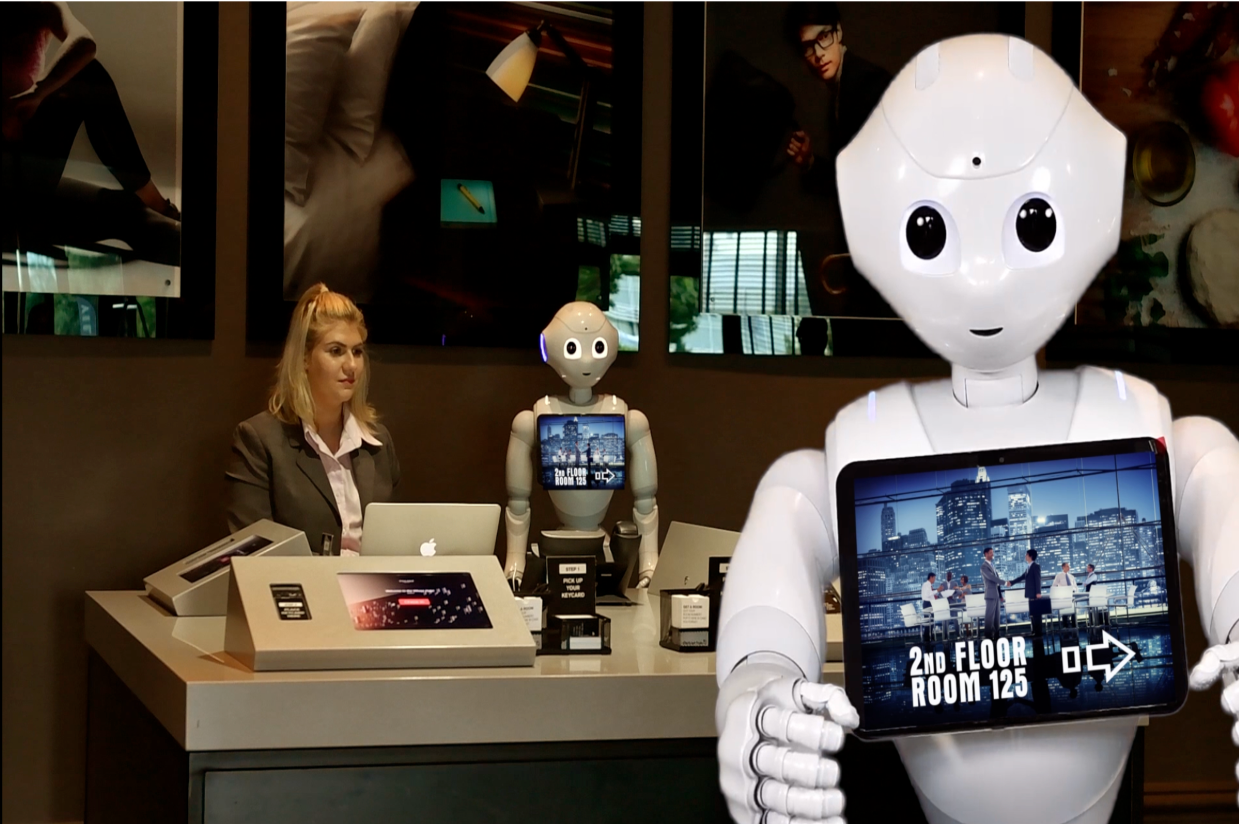

[3] Robots of London, "Pepper The Receptionist — Robots Of London", Robots Of London. [Online]. Available: https://www.robotsoflondon.co.uk/pepper-the-receptionist. [Accessed: 17-Oct-2019].

[4] Alice Receptionist, "Visitor Management Solution with ALICE Virtual Receptionist", ALICE Receptionist. [Online]. Available: https://alicereceptionist.com. [Accessed: 17-Oct-2019].

[5] Unity Technologies, "Unity - Unity", Unity. [Online]. Available: https://unity.com/. [Accessed: 21-Oct-2019].

[6] "watson-developer-cloud/unity-sdk", GitHub. [Online]. Available: https://github.com/watson-developer-cloud/unity-sdk. [Accessed: 21-Oct-2019].

[7] IBM Watson, "Watson Developer Cloud: Unity SDK", IBM Developer. [Online]. Available: https://developer.ibm.com/depmodels/cloud/projects/watson-developer-cloud-unity-sdk/. [Accessed: 21-Oct-2019].

[8] Unity Technologies, "About AR Foundation | AR Foundation | 2.2.0-preview.6", Docs.unity3d.com. [Online]. Available: https://docs.unity3d.com/Packages/com.unity.xr.arfoundation@2.2/manual/index.html. [Accessed: 24-Oct-2019].

[9] "Choosing a Database Technology:", Medium. [Online]. Available: https://towardsdatascience.com/choosing-a-database-technology-d7f5a61d1e98. [Accessed: 28-Oct-2019].

[10] "What Database Should I Use?", Jlamere.github.io. [Online]. Available: http://jlamere.github.io/databases. [Accessed: 28-Oct-2019].

[11] "Introduction - Augmented Reality Design Guidelines", Designguidelines.withgoogle.com. [Online]. Available: https://designguidelines.withgoogle.com/ar-design. [Accessed: 30-Oct-2019].

[12] Apple, "Prototyping for AR - WWDC 2018 - Videos - Apple Developer", Apple Developer. [Online]. Available: https://developer.apple.com/videos/play/wwdc2018/808. [Accessed: 31-Oct-2019].