TESTING

Testing Strategy

AvaBot is a client-side application, so it must be tested thoroughly to ensure that its usability, functionality and stability meet the standards set out in our MoSCoW lists and the client requirements.

Testing Scope

1. AvaBot converses with real people, so we must account for the unpredictability of human behavior. Every step of AvaBot’s dialogs should therefore be tested to ensure that it handles both expected and unexpected user responses, correcting the latter by taking reasonable actions in return and offering clearer guidance.

2. AvaBot offers a range of features. Each feature should be tested to ensure that it works properly and that users can access and use every one of them without restriction.

3. AvaBot aims to help people who work remotely, so tests should confirm that the application is designed from their perspective, speaks their language and meets their real needs.

Test Methodology

AvaBot’s built-in features were tested with unit tests.

Overall performance and dialog resilience were tested with integration tests.

The bot design and UI were evaluated through user acceptance testing.

Test Principle

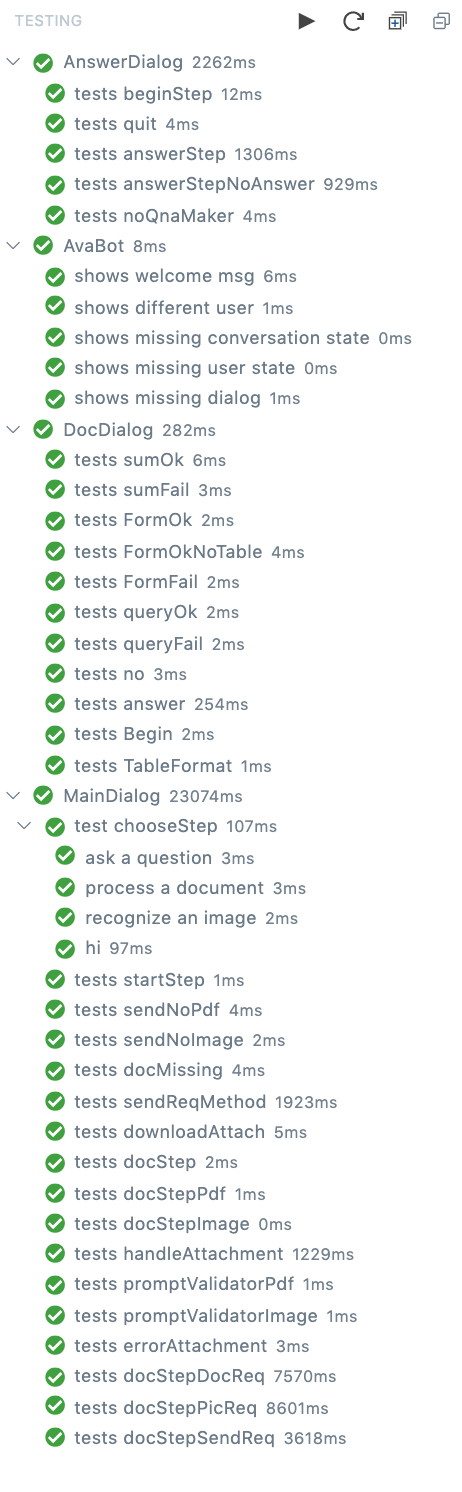

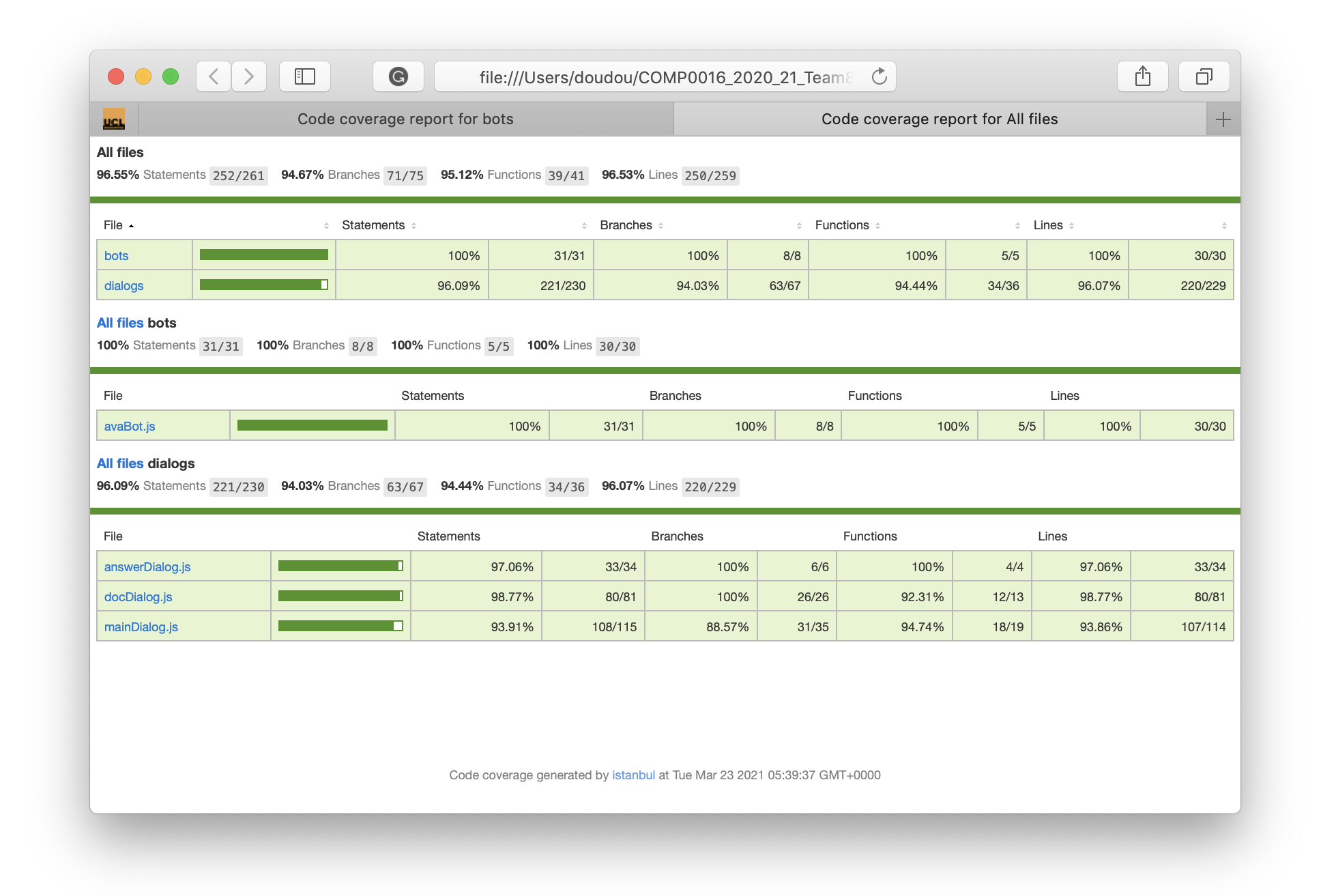

Our development of AvaBot followed the TDD (test-driven development) principle. The bot was tested with integration tests and unit tests throughout development. We continuously wrote and re-ran integration tests to ensure that the system worked correctly as a whole and that every behavior of the bot was as expected. Any failed test was investigated and the problem it exposed was fixed immediately. Unit tests exercised each individual class and covered as many branches as possible (90%+ coverage), which helped us uncover hidden problems and supported further refactoring.

Automated Testing

Unit testing

The tools we used for unit testing are Mocha, a feature-rich JavaScript test framework, and Chai, an assertion library for Node.js.

Unit tests were written for the Bot class and the dialog classes, covering all step methods as well as sendReq(), handleAttachment(), promptValidator() and other methods.

Contrary to what one might expect, dialogs can stand alone without the bot, so their methods can be tested by instantiating a dialog object on its own.

```javascript
const { assert } = require('chai');
// Module path is illustrative; adjust it to the project's layout.
const { MainDialog } = require('../dialogs/mainDialog');

it('tests promptValidatorImage', async () => {
    const sut = new MainDialog();
    // Feed the validator a hand-built prompt context containing one PNG attachment.
    const isValid = await sut.promptValidator({
        recognized: {
            succeeded: true,
            value: [{ contentType: 'image/png' }]
        },
        options: { prompt: 'Please attach an image.' }
    });
    assert.strictEqual(isValid, true);
});
```

Instantiating each dialog on its own lets us feed it custom data for unit testing, which yields a higher coverage rate.

Integration testing

The Bot Framework SDK provides a testing package (botbuilder-testing) that mocks a client interacting with the bot within the context of a single dialog.

```javascript
const { DialogTestClient } = require('botbuilder-testing');

const sut = new MainDialog();
const testClient = new DialogTestClient('test', sut);
```

The client can then send mock messages to the bot by calling sendActivity(); the bot's response is available as reply.text and can be asserted against the expected response.

```javascript
// Inside an async Mocha test:
const reply = await testClient.sendActivity('hi');
assert.strictEqual(reply.text, 'How is it?');
```

Together, unit and integration tests achieve up to 95% code coverage, as shown in the coverage report generated by NYC, a Node.js tool for generating test coverage reports.
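NYC can also read its options from a configuration file, so a coverage target can be enforced on every run rather than only read off the report. The `nyc.config.js` below is a sketch: the source folders in `include` are hypothetical, and the 90% thresholds are placeholders echoing the figure quoted earlier in this section, not AvaBot's actual settings.

```javascript
// nyc.config.js (sketch): folder names and thresholds are assumptions.
module.exports = {
  all: true,                                    // also report source files no test loads
  include: ['bots/**/*.js', 'dialogs/**/*.js'], // hypothetical source folders
  reporter: ['text', 'html'],                   // console summary plus browsable HTML report
  'check-coverage': true,                       // fail the run if a threshold below is missed
  branches: 90,
  lines: 90,
  statements: 90,
  functions: 90
};
```

With this file in the project root, `npx nyc mocha` would print a text summary, write an HTML report, and exit with a non-zero status if any metric falls below its threshold.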

User Acceptance Testing

To better understand how far AvaBot meets users' needs and to identify potential improvements, we asked four people to test our product and recorded their feedback.

Tester

The testers were deliberately chosen to share three characteristics. First, they are our potential users, i.e., company employees working remotely, overseas students studying remotely, and people whose jobs involve a lot of document processing. Second, none of them had prior software development experience. Third, they come from a variety of backgrounds.

Note: our testers are real people who chose to take part anonymously, so we have given them pseudonyms and placeholder portraits to protect their anonymity and confidentiality.

Test Case

We divided the test into four cases. The testers went through each case and gave feedback against the acceptance requirements provided to them, rating each requirement on a five-point Likert scale and adding their own comments.

Test case 1

We asked the testers to go to the question-answering branch, ask the bot questions, and check whether they received acceptable answers.

Test case 2

We gave the testers a sample document (randomly chosen from the sample document library) and asked them to upload it to obtain its summary and then extract its table data.

Test case 3

We gave the testers a sample document and asked them to upload it to the bot and then use the document-query feature to ask any questions related to the document.

Test case 4

We gave the testers a sample image and asked them to open the image recognition feature, upload the image, and let the bot recognize it.

Feedback

| Acceptance Requirement | Totally Disagree | Disagree | Neutral | Agree | Totally Agree | Comments |
|---|---|---|---|---|---|---|
| AvaBot runs to the end of the job | 0 | 0 | 0 | 0 | 4 | + Everyone agreed that AvaBot ran to the end of the job |
| The speech is intuitive, not confusing | 0 | 0 | 0 | 1 | 3 | + The user greeting helps users learn how to use AvaBot<br>+ The button options are very handy<br>- The `QnA about it` button did not explain the feature very clearly |
| Clear response to every user action | 0 | 0 | 0 | 0 | 4 | + Everyone agreed that responses were clearly given |
| Reasonable answers in question answering | 0 | 0 | 0 | 2 | 2 | + Good answers to questions<br>- AvaBot did not manage to answer all the questions |
| Attachments can be successfully received | 0 | 0 | 0 | 0 | 4 | + Everyone agreed that attachments were received<br>- Only PDF is supported |
| Reasonable summary | 0 | 0 | 0 | 1 | 3 | + Satisfactory summary<br>+ Document title extracted<br>+ Good formatting |
| Can extract table data | 0 | 0 | 0 | 0 | 4 | + All tables were extracted<br>- The data formatting could be better |
| Can answer questions about a document with answer context | 0 | 0 | 0 | 1 | 3 | + Amazing feature, did a good job<br>+ Can literally ask anything about the document |
| Can extract text from images | 0 | 0 | 0 | 0 | 4 | + The extracted text is intact<br>+ Works especially well for business cards<br>+ Good formatting |
| Accessible on any browser | 0 | 0 | 0 | 0 | 4 | + Everyone agreed that AvaBot works well in their web browsers |
| Fast response | 0 | 0 | 0 | 0 | 4 | + Overall response is fast<br>+ Attachments are received quickly<br>- There may be a wait when using AvaBot for the first time<br>- Document processing time could be shorter<br>+/- Quick response for general question answering, but document query takes a while to answer |
| Can do multiple jobs sequentially | 0 | 0 | 0 | 0 | 4 | + Everyone agreed that AvaBot can do multiple jobs |

Conclusion

We were glad to see that the testers showed positive attitudes and enthusiasm towards AvaBot while interacting with the bot and giving feedback. Their comments, both positive and negative, meant a lot to us. They have guided, and will continue to guide, further refactoring of AvaBot to make it more mature and comprehensive so that it can help more people.

Kai'sa

19 years old, an international student at UCL

He is currently studying from home in his home country due to the UK's lockdown policy announced last winter.

Ahri

24 years old, a bank employee

She has to work remotely from home because her city has been classified as a high-risk area by the government.

Evelyn

22 years old, a final-year university student

She is preparing postgraduate programme applications and needs to collect information from essays for her graduation project.

Akali

35 years old, an editor at a press company

He works for a local press company, and his job requires reading through a large number of written pieces every day.