Testing

Testing Strategy

Testing was an important part of our project and was considered from the very first stages of development. We tested the application thoroughly to ensure that the usability, functionality and stability standards set out in the MoSCoW list and the clients' requirements were met.

Testing Scope

1. The question generation system is a user-facing web application, so there is a lot of user engagement. To account for this, we wrote and ran our tests with the users in mind but without assuming how they would interact with the system. Tests were therefore written to handle as many erroneous inputs and misuses of the application as we could anticipate, as well as to check that the application behaves correctly and efficiently when the user interacts with it in valid ways.

2. The system maintains a number of models for accurately generating different types of questions. Each of these was first tested independently to ensure it worked as required (for example, testing that distractors can be generated for any given word in isolation). They were then tested on how well they integrate with the rest of the system and perform their expected tasks.

3. Finally, as requested by the clients, the question generation system must provide API endpoints for external programmers to interact with. These were also tested to measure how accurately and quickly the API responds to HTTP requests.
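
A minimal sketch of such an API check, using only the standard library: a stub endpoint is served on a local port, and the test checks both the content of the response and that it arrives within a time budget. The `/api/questions` path, the JSON shape and the one-second budget are assumptions for illustration, not the project's real API.

```python
import http.client
import json
import threading
import time
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubQuestionAPI(BaseHTTPRequestHandler):
    """Hypothetical /api/questions endpoint standing in for the real API."""

    def do_GET(self):
        if self.path != "/api/questions":
            self.send_error(404)
            return
        payload = json.dumps(
            {"questions": [{"type": "true_false", "text": "London is in France."}]}
        ).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def timed_get(host, port, path):
    """Send one GET request and return (status, parsed JSON or None, seconds taken)."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        start = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        elapsed = time.perf_counter() - start
    finally:
        conn.close()
    data = json.loads(body) if response.status == 200 else None
    return response.status, data, elapsed


def test_api_is_accurate_and_fast(budget_seconds=1.0):
    server = HTTPServer(("127.0.0.1", 0), StubQuestionAPI)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        host, port = server.server_address[:2]
        status, data, elapsed = timed_get(host, port, "/api/questions")
        assert status == 200
        assert data["questions"][0]["type"] == "true_false"
        assert elapsed < budget_seconds, f"response took {elapsed:.3f}s"
        # An unknown route should be rejected rather than answered.
        assert timed_get(host, port, "/api/unknown")[0] == 404
    finally:
        server.shutdown()
        server.server_close()
```

Against the real application, the stub server would be replaced by the running API; repeating the request several times and taking the median gives a steadier latency figure than a single call.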

Test Methodology

The question generator's built-in features were tested with unit tests, the overall behaviour of the web application with integration tests, and the web application's design and UI with user acceptance testing. All of these tests were run continuously through continuous integration to check that no commit broke the application or introduced bugs.

Test Principle

The development of the question generation system strictly followed the principles of test-driven development (TDD). The system was tested continually with integration and unit tests at each stage of development. We wrote integration tests throughout and ran them repeatedly to ensure the system worked correctly as a whole and that the question generation and answering functions behaved as expected. Unit tests covered each individual Python file and achieved over 80% coverage, as requested by the clients. This helped us discover hidden problems and make the application run as smoothly as possible.

Automated Testing

Unit Testing

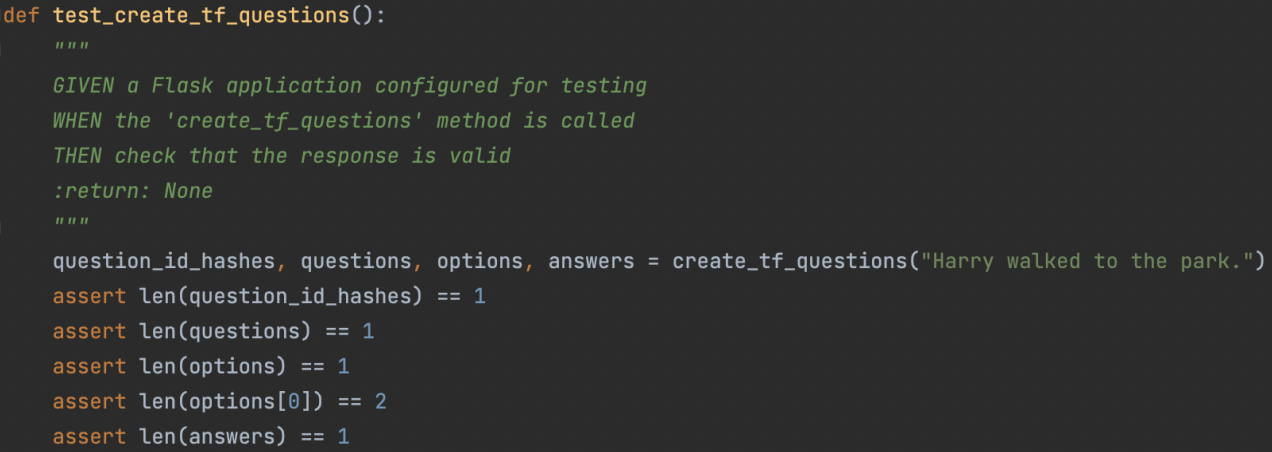

Unit tests were written for the models, which contain the SQLAlchemy interface to the database, and for the functions that make up the question generation logic engine. Every unit test is described using the GIVEN-WHEN-THEN structure so that future developers can easily see the purpose of each test.
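
To illustrate the GIVEN-WHEN-THEN convention, here is a minimal sketch of two unit tests for a hypothetical `generate_distractors` function. The function and its behaviour (choosing the vocabulary words closest in length to the answer) are assumptions made for this example, not the project's actual model; the point is that the component is tested on its own, with both a valid word and erroneous inputs.

```python
# Hypothetical stand-alone distractor generator: a simplified stand-in for the
# project's real model, used only to show how such a unit can be tested alone.
def generate_distractors(answer, vocabulary, n=3):
    """Return n distinct words from vocabulary, closest in length to answer."""
    if not isinstance(answer, str) or not answer.strip():
        raise ValueError("answer must be a non-empty string")
    target = answer.strip().lower()
    candidates = sorted(
        {w.lower() for w in vocabulary if w.lower() != target},
        key=lambda w: (abs(len(w) - len(target)), w),
    )
    if len(candidates) < n:
        raise ValueError(f"need {n} distractors, only {len(candidates)} available")
    return candidates[:n]


VOCAB = ["London", "Paris", "Berlin", "Madrid", "Rome", "Lisbon", "paris"]


def test_distractors_for_valid_word():
    """
    GIVEN a vocabulary of capital cities
    WHEN distractors are generated for the answer "Paris"
    THEN three distinct words are returned and none of them is the answer
    """
    result = generate_distractors("Paris", VOCAB, n=3)
    assert len(result) == 3
    assert len(set(result)) == 3
    assert "paris" not in result


def test_distractors_reject_erroneous_input():
    """
    GIVEN invalid answers, or a vocabulary that is too small
    WHEN distractors are requested
    THEN a ValueError is raised instead of returning bad options
    """
    bad_calls = [(None, VOCAB), ("", VOCAB), ("   ", VOCAB), ("Rome", ["rome", "Oslo"])]
    for answer, vocab in bad_calls:
        try:
            generate_distractors(answer, vocab, n=3)
        except ValueError:
            continue
        raise AssertionError(f"accepted invalid input: {answer!r}")
```

Under pytest, the loop in the second test would more idiomatically be written with `pytest.mark.parametrize` and `pytest.raises(ValueError)`, giving one reported test case per bad input.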

To perform unit testing we used the pytest framework, a popular tool for writing scalable tests for databases, APIs and UIs. We chose pytest over Python's built-in unittest module because pytest requires less boilerplate code, which makes the test suites more readable. This was especially important because the client wanted the code to be maintainable and scalable.
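
The difference in boilerplate can be seen by writing the same two checks in both styles. `count_words` is a hypothetical helper used only for this comparison.

```python
import unittest


def count_words(text):
    """Hypothetical helper under test: number of whitespace-separated words."""
    return len(text.split())


# unittest style: a TestCase subclass and its assertion methods are required.
class TestCountWords(unittest.TestCase):
    def test_counts_words(self):
        self.assertEqual(count_words("the quick brown fox"), 4)

    def test_empty_string(self):
        self.assertEqual(count_words(""), 0)


# pytest style: plain functions with bare assert statements are enough;
# pytest rewrites the asserts so a failure still reports the compared values.
def test_counts_words():
    assert count_words("the quick brown fox") == 4


def test_empty_string():
    assert count_words("") == 0
```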

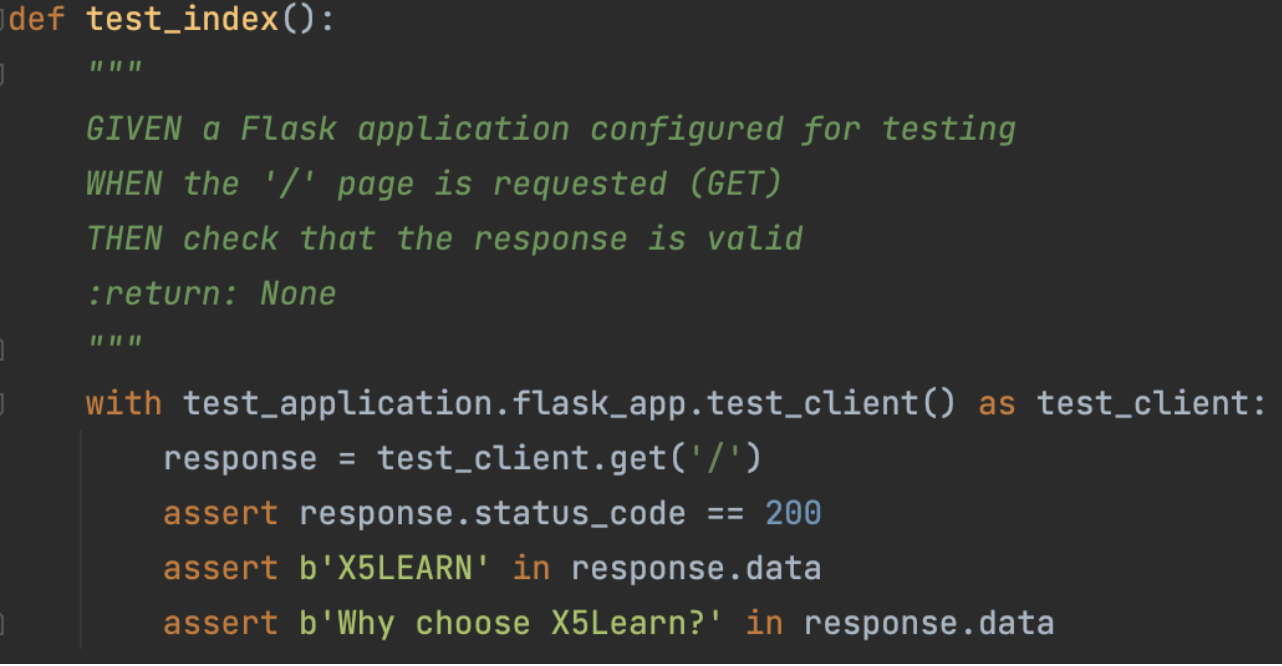

Integration Testing

Functional tests were written for the project's controllers and routes, which contain the view functions and blueprints used in the system. Because the project uses an application factory, it was straightforward to instantiate a testing version of the application and exercise it through Flask's built-in test_client helper method. GET and POST requests were then made through this test client, and each response was validated by checking its status code and the contents of the returned page.
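
Flask's test client calls the WSGI application in-process rather than over a network. The sketch below mimics that pattern with the standard library so it runs without Flask installed; `create_app`, its routes and the `call` helper are hypothetical stand-ins for the project's factory and for Flask's client.

```python
from wsgiref.util import setup_testing_defaults


def create_app(config=None):
    """Hypothetical application factory returning a tiny WSGI app."""
    config = {"TESTING": False, **(config or {})}

    def app(environ, start_response):
        method, path = environ["REQUEST_METHOD"], environ["PATH_INFO"]
        if path == "/" and method == "GET":
            status, body = "200 OK", b"<h1>X5QGEN</h1>"
        elif path == "/login" and method == "POST":
            status, body = "302 Found", b""
        else:
            status, body = "404 Not Found", b"Not Found"
        start_response(status, [("Content-Type", "text/html")])
        return [body]

    app.config = config  # a fresh app, with its own config, per factory call
    return app


def call(app, method, path):
    """Minimal in-process client: invoke the WSGI app without starting a server."""
    environ = {"REQUEST_METHOD": method, "PATH_INFO": path}
    setup_testing_defaults(environ)  # fills in the remaining required WSGI keys
    captured = {}

    def start_response(status, headers):
        captured["status"] = int(status.split()[0])

    body = b"".join(app(environ, start_response))
    return captured["status"], body


def test_home_page_renders():
    app = create_app({"TESTING": True})
    status, body = call(app, "GET", "/")
    assert status == 200
    assert b"X5QGEN" in body


def test_login_post_redirects_and_unknown_route_404s():
    app = create_app({"TESTING": True})
    assert call(app, "POST", "/login")[0] == 302
    assert call(app, "GET", "/missing")[0] == 404
```

With Flask itself, the equivalent is to build the app from the project's factory, obtain a client with `app.test_client()`, call `client.get("/")` or `client.post(...)`, and check `response.status_code` and `response.data`.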

Continuous Integration

Continuous integration was set up so that our development team could release code rapidly and automate the building and testing of the application. This was crucial for deploying the latest versions of our application to our remote server as soon as possible. We used CircleCI for this, which let us spot build failures quickly and be confident in successful builds throughout development. This proved to be our most important testing practice: the team worked on multiple branches simultaneously, and without CircleCI checking that every merge into the master branch was safe, it would have been very difficult to stop breaking changes from being merged. It was also simple to set up, requiring only a .circleci/config.yml file that specifies the project's requirements, including pip packages and any other external libraries.

Coverage Testing

Ensuring that our testing was sufficient and reasonable was difficult. Initially, we used coverage reports to check the branch coverage of our tests, and we achieved the 80% coverage benchmark set by the clients. Going higher was harder because of the nature of the application and because not every process could be automated. In hindsight, and with more time, we would use tools such as Selenium to automate the web application for testing. This would give us greater branch coverage and more confidence in the stability of the system.
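
Branch coverage matters because line coverage can reach 100% while one path through an `if` is never taken. The sketch below uses `sys.settrace` to record which line-to-line transitions (arcs) a call takes, which is roughly the idea behind branch measurement in tools such as coverage.py; `award_badge` is a made-up function for the example.

```python
import sys


def award_badge(score):
    """Hypothetical function under test: an if with no else."""
    badge = "none"
    if score >= 80:
        badge = "gold"
    return badge


def trace_arcs(func, *args):
    """Run func and return the (previous line, next line) arcs it executed.

    Line numbers are relative to the def line; None marks function entry.
    """
    code = func.__code__
    arcs = set()
    last = None

    def local_tracer(frame, event, arg):
        nonlocal last
        if event == "line":
            line = frame.f_lineno - code.co_firstlineno
            arcs.add((last, line))
            last = line
        return local_tracer

    def global_tracer(frame, event, arg):
        # Only trace frames that are running the function under test.
        return local_tracer if frame.f_code is code else None

    sys.settrace(global_tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return arcs


def lines_of(arcs):
    """Collapse arcs back to the set of executed lines (what line coverage sees)."""
    return {line for _, line in arcs}
```

A single test with a high score executes every line of `award_badge`, so line coverage is complete, yet the arc that skips the `if` body is only exercised by a low score. With coverage.py, `coverage run --branch -m pytest` followed by `coverage report` reports such missed branches directly.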

However, since we were unable to use Selenium in this project and our confidence in the quality of our tests was not as high as we would have liked, we decided to perform mutation testing.

Mutation Testing

We used mutmut to perform mutation testing because it is simple to use and produces the most mutants compared with MutPy and Cosmic Ray. Another reason for choosing mutmut is that it is actively maintained, whereas MutPy is not. To speed up the mutation testing process we used hammett, a fast test runner. Our mutation testing results were very promising and gave us greater confidence that our tests were of high quality, since they reliably failed when mutants were introduced. We achieved a mutation score of 95.4% at the time of writing (19/03/2022 11:51 GMT).
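
The mutation score is the fraction of mutants that the test suite kills, that is, mutants for which at least one test fails. The sketch below builds mutants by hand, by swapping the comparison operator in a hypothetical pass/fail check, in the same spirit as the operator mutations tools such as mutmut generate, and compares a weak suite with one that also tests the boundary value.

```python
import operator


def make_is_pass(compare):
    """Build a hypothetical pass/fail checker; `compare` is the part we mutate."""
    def is_pass(score, threshold=40):
        return compare(score, threshold)
    return is_pass


original = make_is_pass(operator.ge)  # score >= threshold

# Hand-written mutants: each replaces >= with a different comparison.
mutants = {
    "ge -> gt": make_is_pass(operator.gt),
    "ge -> le": make_is_pass(operator.le),
    "ge -> ne": make_is_pass(operator.ne),
    "ge -> eq": make_is_pass(operator.eq),
}


def weak_suite(is_pass):
    """Passes only if all checks hold; never tests the boundary value."""
    return is_pass(90) is True and is_pass(10) is False


def strong_suite(is_pass):
    """Adds boundary checks at 40 and 39, which kill the '>' mutant."""
    return is_pass(90) is True and is_pass(40) is True and is_pass(39) is False


def mutation_score(suite, mutants):
    """Return (killed / total, names of killed mutants)."""
    killed = [name for name, mutant in mutants.items() if not suite(mutant)]
    return len(killed) / len(mutants), killed


for suite in (weak_suite, strong_suite):
    score, killed = mutation_score(suite, mutants)
    print(f"{suite.__name__}: {score:.0%} of mutants killed")
```

Here the weak suite kills three of the four mutants (75%), because `score > 40` behaves identically to `score >= 40` for 90 and 10, while the boundary checks in the strong suite kill all four. A surviving mutant therefore points at a specific missing test.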

User Acceptance Testing

To test whether X5QGEN met users' needs and to identify potential improvements, we asked four people to test our product and recorded their feedback.

Testers

Varun

A 20-year-old second-year computer science student at UCL. He lives in London and is a full-time student.

Vishaol

A 20-year-old second-year computer science student at UCL. He lives in London and is a full-time student who also works part-time in retail.

Jason

A 20-year-old second-year computer science student at UCL. He lives in London and is a full-time student.

Sophie

A 20-year-old second-year computer science student at UCL. She lives in London and is a full-time student.

All of the testers are well qualified to test the system. They are all fluent in reading and writing English, which is important when testing whether the generated questions are well formed. All of them also have experience with human-computer interaction design, which allowed them to give detailed feedback on our user interface. At the testers' request, we did not include pictures of them and used a generic user icon instead.

Test Cases

We divided the test into four cases. The testers went through each case and gave feedback based on the acceptance requirements given to them, rating each requirement on a five-point Likert scale and leaving their own comments.

Test Case 1

Users are asked to sign up, look through their profile page to check that the sign-up was successful, and then log off. This takes the user through the whole authentication process so they can give feedback on the experience.

Test Case 2

Once logged in, each user is given one of three text passages at random. They are asked to add a few sentences of their own to the passage, generate both multiple-choice and true-or-false questions, and then answer them.

Test Case 3

Users are given the share code for a set of questions and asked to enter it so that they can answer the questions themselves.

Test Case 4

Users are asked to navigate to the profile page, review their performance and give feedback on the experience.

Feedback

| Test Case | Acceptance Requirement | Total Disagree | Disagree | Neutral | Agree | Total Agree | Comments |
|---|---|---|---|---|---|---|---|
| 1 | No issues when signing up | 0 | 0 | 0 | 0 | 4 | +No one had any issues signing up |
| 1 | No issues logging in and off | 0 | 0 | 0 | 0 | 4 | +No one had any issues when signing up -One tester said the wrong-password warning could be a brighter colour |
| 1 | Profile page has personalised information | 0 | 0 | 0 | 0 | 4 | +Everyone agreed there was personalised information on their profile page |
| 2 | Generated multiple-choice questions make sense | 0 | 0 | 0 | 3 | 1 | +Most questions made sense -Some questions were nonsensical but still understandable |
| 2 | Generated true-or-false questions make sense | 0 | 0 | 0 | 0 | 4 | +Everyone agreed the true-or-false questions made sense |
| 2 | Questions are displayed clearly | 0 | 0 | 0 | 0 | 4 | +Questions were easy to read and displayed clearly |
| 2 | The interactive response to answering a question is clear | 0 | 0 | 0 | 0 | 4 | +The change of colour of the options depending on the answer was very intuitive and easy to understand |
| 2 | The options for the questions were easy to differentiate | 0 | 0 | 4 | 0 | 0 | -For some questions the answer was obvious because it couldn't have been any of the other options |
| 3 | The share code generates the right question every time | 0 | 0 | 0 | 0 | 4 | +The code generated the right question every time I tried it |
| 4 | The profile page showed every question the user attempted | 0 | 0 | 0 | 1 | 3 | +It does show all the attempted questions -The attempted-questions interface could look cleaner, as it is too cluttered |
| 4 | There is a visual representation of the number of answers the user got right and wrong | 0 | 0 | 0 | 0 | 4 | +Yes, there is a pie chart that shows that information +It shows the right information and changes as the user attempts more questions |
| 4 | The profile page clearly shows the performance of the user | 0 | 0 | 0 | 2 | 2 | +Everyone agreed it was clear enough -The user interface could be modified to look more organised |
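
As a small worked example of reading the Likert counts, the sketch below turns a few rows of the feedback table into a mean score and an agreement percentage. The counts are copied from the table; scoring the columns from 1 (Total Disagree) to 5 (Total Agree) is an assumption made for this summary.

```python
# Likert counts copied from the feedback table, ordered
# (Total Disagree, Disagree, Neutral, Agree, Total Agree).
FEEDBACK = {
    "Generated multiple-choice questions make sense": (0, 0, 0, 3, 1),
    "Options for the questions were easy to differentiate": (0, 0, 4, 0, 0),
    "Profile page showed every attempted question": (0, 0, 0, 1, 3),
    "Profile page clearly shows the user's performance": (0, 0, 0, 2, 2),
}


def likert_mean(counts):
    """Weighted mean, scoring Total Disagree as 1 up to Total Agree as 5 (assumed)."""
    return sum(score * n for score, n in enumerate(counts, start=1)) / sum(counts)


def percent_agree(counts):
    """Percentage of responses that were Agree or Total Agree."""
    return 100 * (counts[3] + counts[4]) / sum(counts)


for requirement, counts in FEEDBACK.items():
    print(f"{requirement}: mean {likert_mean(counts):.2f}, {percent_agree(counts):.0f}% agree")
```

On this scoring, the option-differentiation requirement averages 3.00 (all neutral), while the other rows shown range from 4.25 to 4.75, which matches the comments singling out obvious answers as the main weakness of the generated questions.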

Conclusion

The feedback from the testers was very positive, and they seemed to enjoy what X5QGEN had to offer. Their comments were helpful, and we worked on improving the parts they criticised. Having tested the system in so many ways, we believe it is ready to be deployed.