TEAM31

Evaluation

Upon project completion, we conducted a reflective evaluation of our year-long efforts.

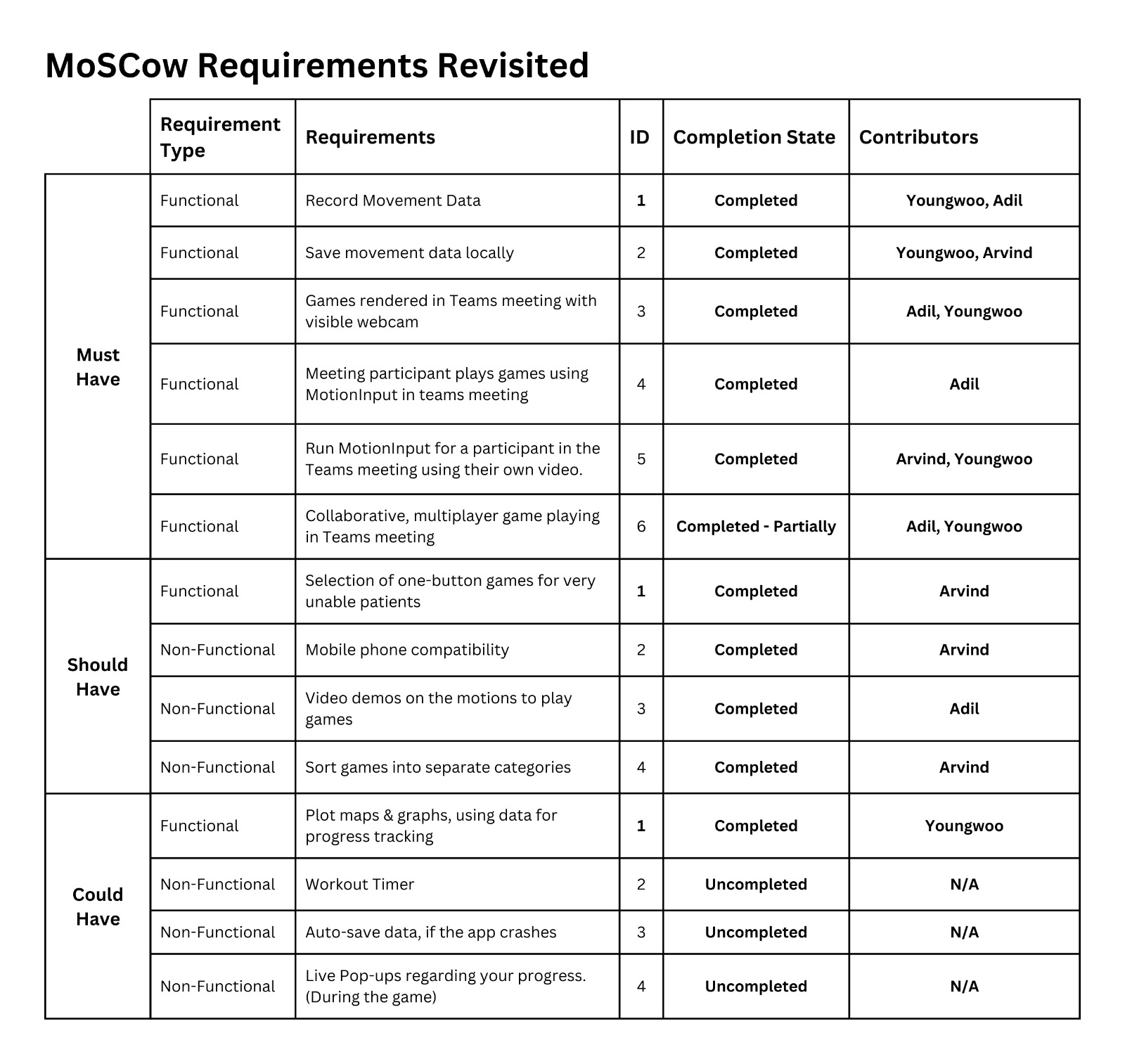

MoSCoW List Revisited

As we approached the end of project development, we reviewed our original MoSCoW list and reflected on the progress we had made towards the goals we set out.

Critical Evaluation

Client/Partner Feedback

Upon project completion, we received a detailed review from our project partners at Microsoft: "It is my pleasure to provide a complementary excellent review of the student's project activity related to the development of a Motioninput driven teams application using Live Share SDK for their academic industry exchange project with Microsoft and the Microsoft team's product group. The students' dedication and hard work in this project were evident from the very beginning. They displayed an exceptional level of professionalism, creativity, and problem-solving skills that were instrumental in the successful completion of the project. The students demonstrated a deep understanding of debugging the Teams Toolkit and Live Share SDK and its capabilities, which they effectively utilized to develop a functional and innovative Teams application. The students' teamwork and collaboration were particularly impressive, as they worked closely with the Microsoft team's product group to ensure that the application met the industry standards and fulfilled the project's objectives and also peers within the wider Motioninput teams to ensure the NDI and video input and support for a multi person Teams application was successful delivered. The students team ability to communicate effectively, share ideas and work towards a common goal was remarkable, resulting in a polished and high-quality final product. Furthermore, the students' efforts towards documentation and testing were outstanding. They ensured that the code and bug identified were well-documented. The ensured that their code base was easy to understand and maintain, and the application was thoroughly tested to guarantee its functionality and user-friendliness based on the user requirements and physiotherapy scenarios. Overall, the students' project activity was a huge success, and it is our pleasure to share the outcome of the project in a short video interview and blog post for Microsoft.
We would like to highlight the students' exceptional skills, dedication, and hard work, which enabled them to develop an innovative and functional Teams application and showcase of using the Live Share SDK. We believe that the project is a testament to the students' ability and thank you for the collaboration."
Lee Stott, Principal Cloud Advocate Manager, Microsoft; Associate Honorary Professor, Computer Science, University College London

Self Evaluation

-

User Experience

We designed a clean user interface to make the experience simple and smooth. The Teams application UI is largely self-explanatory and needs little to no supporting text, so it is streamlined and easy to use. The ‘Game Info’ buttons for each game are prominent and colour-coded, and the gesture demo videos occupy a whole page. These choices respond to user feedback: users often missed the demo page, and in one case a user did not recognise the importance of the ‘Game Info’ button. To address the ‘Game Info’ issue, we centralised all the buttons and made them more visually prominent; for pages that users were overlooking, we reformatted the layout so that the most important information is shown by default. By refining the user experience in this way, we hope our target audience will receive the product well. Given more time, we would have integrated a personalised dashboard rendered inside the Teams meeting, showing interesting facts, figures and charts based on the movement data we collect, which would allow greater personalisation in our application. Overall, we evaluate our User Experience as 4 out of 5.

-

Functionality

The team has always focused on the key design target: to make remote physiotherapy accessible to all. Ultimately, we achieved this goal through two different solutions. We have implemented all the requirements provided by the client and defined in our MoSCoW list, with the partial exception of the multiplayer, collaborative Live Share experience in Teams. This feature is partly implemented: it works for one game, and we have built a Live Share framework for static games driven by button clicks. This implementation could have been more complete had we had more than two weeks with access to working technology. In addition, our data collection code remains separate from MotionInput because our group lacked the permissions needed to integrate it; however, it is ready to be shipped into MotionInput. Overall, we evaluate our Functionality as 3 out of 5.

-

Efficiency

Overall, our project runs efficiently thanks to our design choices and the technologies we used, such as the Live Share SDK. All the games, including those implemented with the Live Share SDK, run without latency issues, which again reflects these technology choices. The only issue is a delay when running MotionInput over NDI with a video source from the Teams call. This depends largely on the participant's device, webcam quality and Wi-Fi connection, so it is mostly outside our control. Overall, we evaluate our Efficiency as 4 out of 5.

-

Stability

Given our unit tests covering most, if not all, of the functionality in our frontend UI, we are confident in the stability of our program. Furthermore, efficient file management allowed us to isolate functionality. Our data collection appears accurate, and the NDI solution is seamless; the only case in which it fails is when Microsoft Teams displays every video in the meeting as a black screen, which is most likely a bug in Teams. Overall, we evaluate our Stability as 5 out of 5.

-

Compatibility

Compatibility is one of our application's main strengths. With our unique NDI solution, users can join a Teams call from any device, and MotionInput can be run on their behalf using their video from the Teams call. As a result, they can play MotionInput games from any device, e.g., a phone or tablet, with no prerequisites. Furthermore, the Teams app is compatible with all browsers and with the Microsoft Teams desktop and mobile applications, and some components resize automatically with the window. Overall, we evaluate our Compatibility as 5 out of 5.

-

Maintainability

We modularised the project by splitting our main sub-systems and solutions into separate repositories, so that each one operates independently and bugs in one do not affect the others. This helped debugging, as it narrowed the scope in which errors could occur. The Live Share SDK framework is robust and can be expanded easily; however, it currently supports only button-based games. Overall, we evaluate our Maintainability as 4 out of 5.

-

Project Management

The project was managed very well in certain respects and during certain periods. Overall, we were organised and had a clear structure; however, in hindsight, we spent too long in the planning phase. Had we started development earlier, we would have been better prepared for blockers such as NDI-In being disabled, and would have had more time to work around them. We maintained good communication with our project partners at Microsoft throughout, with regular progress updates. With their help and through our work, we hope to bring a new feature to MotionInput that can help patients access physiotherapy remotely. Overall, we evaluate our Project Management as 3 out of 5.

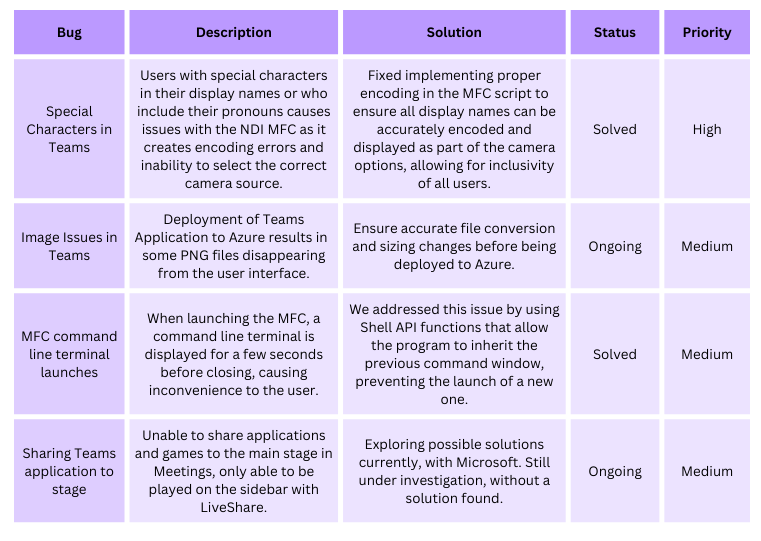

Bug List

Here is a list of the main bugs we encountered while working on the project. We have resolved some of them; others are still being worked on or remain unresolved.

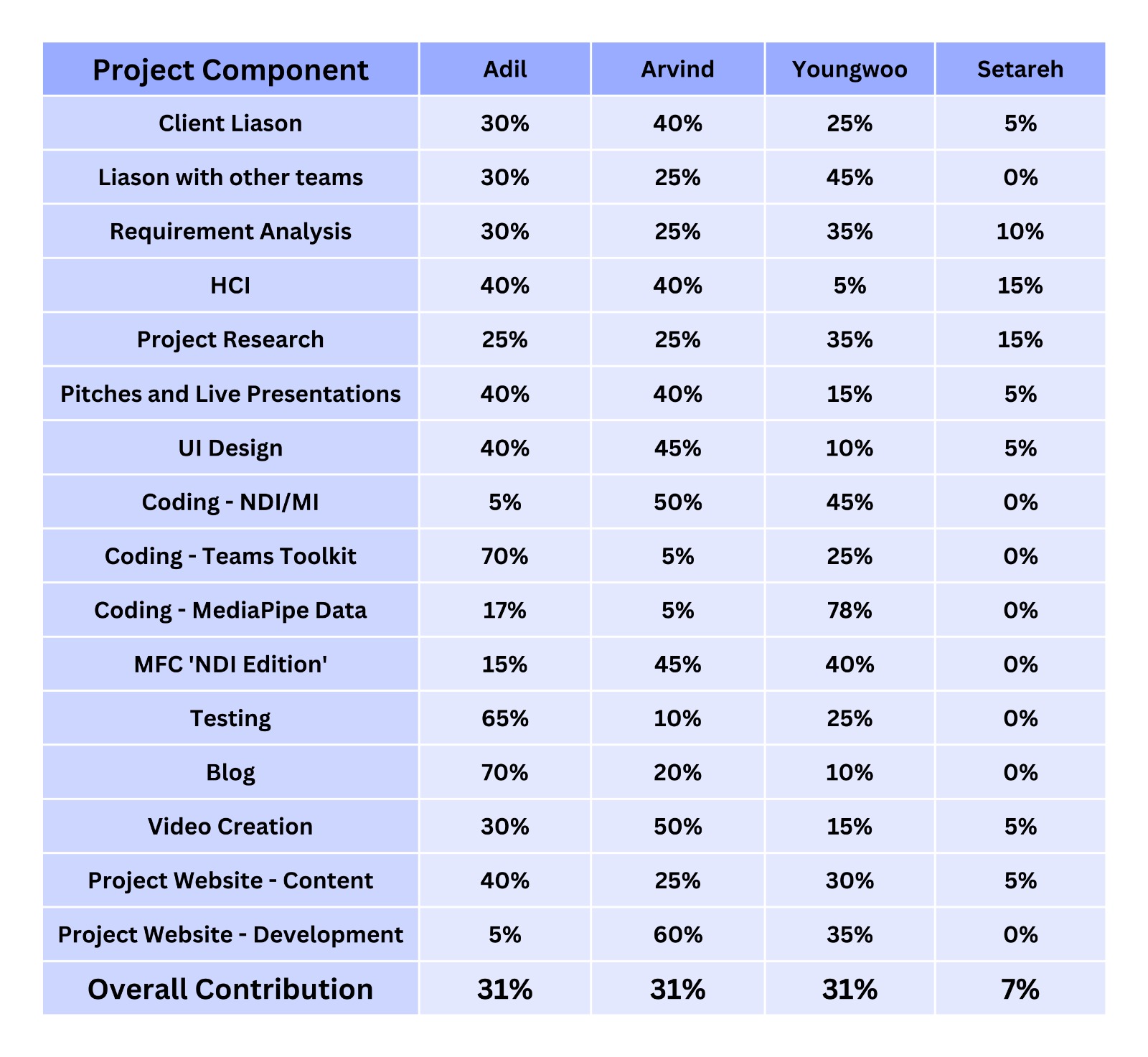

Individual Contribution

Here is a breakdown of each team member's contribution to the project.

Future Work / Extendability

Microsoft Teams App Framework

We have built a framework in Microsoft Teams that enables collaborative multiplayer games. The Live Share SDK is a new product; with less than two weeks of access to it, we created a working framework that lets users in a Teams meeting share a collaborative experience inside our application. Our Live Share implementation works for Tic-tac-toe using mouse clicks, and similar logic translates directly to most other games that rely on mouse clicks; Reversi, for example, would use very similar logic. Therefore, many MotionInput games could be implemented using our framework. This was also the first ever project built with the Live Share SDK. With more time and attention, we could have implemented more games with the SDK; nevertheless, the framework is in place for future expansion.
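Why click-based logic carries over between games can be illustrated with a small model. The Python sketch below is hypothetical and is not our Live Share code, which runs inside the Teams app and is not shown here. It treats the game state as a board plus a turn counter and applies each click through one pure function, so every participant who applies the same ordered list of clicks reaches the same state. Keeping that ordered list in sync between participants is the part a Live Share data structure would be responsible for; how that is wired up is outside this sketch. All function names are illustrative.

```python
from typing import List, Optional, Tuple

Board = List[List[Optional[str]]]


def new_board(size: int = 3) -> Board:
    """Create an empty size x size board (None marks an empty cell)."""
    return [[None] * size for _ in range(size)]


def apply_click(board: Board, turn: int, row: int, col: int,
                players: Tuple[str, ...] = ("X", "O")) -> Tuple[Board, int]:
    """Apply one click event and return (new_board, next_turn).

    The function never mutates its input, so every client replaying the
    same ordered clicks ends up with identical state. Clicks on occupied
    cells are ignored and do not advance the turn.
    """
    if board[row][col] is not None:
        return board, turn
    updated = [r[:] for r in board]
    updated[row][col] = players[turn % len(players)]
    return updated, turn + 1


def winner(board: Board) -> Optional[str]:
    """Return the Tic-tac-toe winner, or None if there is none yet."""
    n = len(board)
    lines = [list(r) for r in board]                               # rows
    lines += [[board[r][c] for r in range(n)] for c in range(n)]   # columns
    lines.append([board[i][i] for i in range(n)])                  # diagonal
    lines.append([board[i][n - 1 - i] for i in range(n)])          # anti-diagonal
    for line in lines:
        if line[0] is not None and all(cell == line[0] for cell in line):
            return line[0]
    return None


def replay(clicks: List[Tuple[int, int]], size: int = 3) -> Tuple[Board, int]:
    """Rebuild the game state from an ordered click history."""
    board, turn = new_board(size), 0
    for row, col in clicks:
        board, turn = apply_click(board, turn, row, col)
    return board, turn
```

For example, `replay([(0, 0), (1, 0), (0, 1), (1, 1), (0, 2)])` fills the top row with "X", so `winner` returns "X". Adapting this to Reversi would mainly mean changing the rule inside `apply_click` (a placed piece also flips captured pieces) and the end-of-game check; the replay structure stays the same.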

NDI Extendability

As mentioned earlier, our NDI work can be extended and integrated into several other MotionInput projects, bringing remote MotionInput access to all gestures, motions and projects. We have already integrated it with FeetNavigation, Bedside Navigator and others. Another possible extension is an NDI multiplayer feature built on our initial NDI work, which would add another level of compatibility to the Teams application. By NDI multiplayer, we mean the ability to switch the NDI video source feeding MotionInput while MotionInput keeps running. Currently, if you are running MotionInput on an NDI video from the Teams call and want to switch to another video, you must stop MotionInput and run it again. With NDI multiplayer, the switch could happen while MotionInput is still running. This would make physiotherapy sessions easier to run, and the other users in the meeting would not need to install MotionInput on their computers. It would also enable exciting features: for example, when someone performs a click using a motion gesture, the NDI source could switch to the next user in the meeting. This would make it easy to play games such as Snakes and Ladders in the meeting, where everyone takes their turn one by one.
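The core of the proposed NDI multiplayer feature is a frame source whose underlying stream can be swapped while the processing loop keeps running. The Python sketch below illustrates that idea under stated assumptions: each participant's stream is assumed to be wrapped in an object with a `read()` method returning `(ok, frame)`, the same convention as OpenCV's `cv2.VideoCapture.read()`. The class `SwitchableSource` and its methods are hypothetical; receiving NDI video itself is not shown.

```python
import threading
from typing import Any, Dict, Optional, Tuple


class SwitchableSource:
    """Frame source that can change stream while a loop keeps calling read().

    Each value in `sources` is assumed (hypothetically) to expose
    read() -> (ok, frame), like cv2.VideoCapture.read().
    """

    def __init__(self, sources: Dict[str, Any], initial: str):
        if initial not in sources:
            raise KeyError(initial)
        self._sources = dict(sources)
        self._order = list(self._sources)   # insertion order is the turn order
        self._active = initial
        self._lock = threading.Lock()       # guards the active selection

    @property
    def active(self) -> str:
        with self._lock:
            return self._active

    def switch_to(self, name: str) -> None:
        """Select another participant's stream without stopping the loop."""
        with self._lock:
            if name not in self._sources:
                raise KeyError(name)
            self._active = name

    def next_participant(self) -> str:
        """Advance to the next stream in turn order, e.g. after a click gesture."""
        with self._lock:
            i = self._order.index(self._active)
            self._active = self._order[(i + 1) % len(self._order)]
            return self._active

    def read(self) -> Tuple[bool, Optional[Any]]:
        """Read one frame from whichever stream is currently active."""
        with self._lock:
            source = self._sources[self._active]
        # Read outside the lock so a slow stream never blocks a switch.
        return source.read()
```

In a session, the processing loop would keep calling `read()` while a gesture handler calls `next_participant()`; the lock ensures the loop always sees a consistent selection. Where such a wrapper would plug into MotionInput's own capture code is not shown and would need checking against the MotionInput codebase.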

Data Tracking Framework

Our data calculations and framework offer wide scope for extension. Merging our data calculations and plotting into MotionInput is the next step for our data features. Currently, this is implemented directly on MediaPipe, outside MotionInput. We are fully ready to integrate it into MotionInput; however, due to permission restrictions, we had to refrain from doing so. Here is a short manual describing a possible integration:

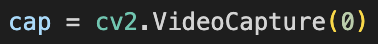

Find the block of code that captures video using OpenCV's VideoCapture.

Then, where the video capture is running, add the code shown on the right to enable our pose estimation model in MotionInput. (A more detailed explanation can be found on our Implementation page.)
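The actual integration code is the one shown in the image and is not reproduced here. As a hedged illustration of the general pattern only, the Python sketch below shows how a per-frame processing step can be hooked into an OpenCV-style capture loop and its per-frame results collected for plotting after the session. It assumes the capture object follows the `cv2.VideoCapture` convention, where `read()` returns an `(ok, frame)` tuple and `release()` frees the device; `run_with_tracker` and `on_frame` are hypothetical names, not MotionInput functions.

```python
from typing import Any, Callable, List, Protocol, Tuple


class FrameSource(Protocol):
    """Anything with cv2.VideoCapture-style read() and release() methods."""

    def read(self) -> Tuple[bool, Any]: ...
    def release(self) -> None: ...


def run_with_tracker(capture: FrameSource,
                     on_frame: Callable[[Any], Any],
                     max_frames: int = -1) -> List[Any]:
    """Run the capture loop, passing each frame to `on_frame`.

    `on_frame` stands in for the pose-estimation / data-collection step.
    Its return values (e.g. per-frame measurements) are collected so they
    can be plotted afterwards. The loop stops when read() fails or after
    `max_frames` frames (-1 means no limit); the capture is always released.
    """
    results: List[Any] = []
    try:
        while max_frames < 0 or len(results) < max_frames:
            ok, frame = capture.read()
            if not ok:
                break
            results.append(on_frame(frame))
    finally:
        capture.release()
    return results
```

With OpenCV installed, a call such as `run_with_tracker(cv2.VideoCapture(0), my_pose_step)` would follow this pattern, where `my_pose_step` is a hypothetical function wrapping the pose model. Keeping the data step behind a single callback is what would let it be dropped into an existing capture loop without restructuring it.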