Testing Strategy

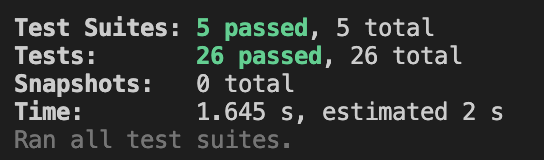

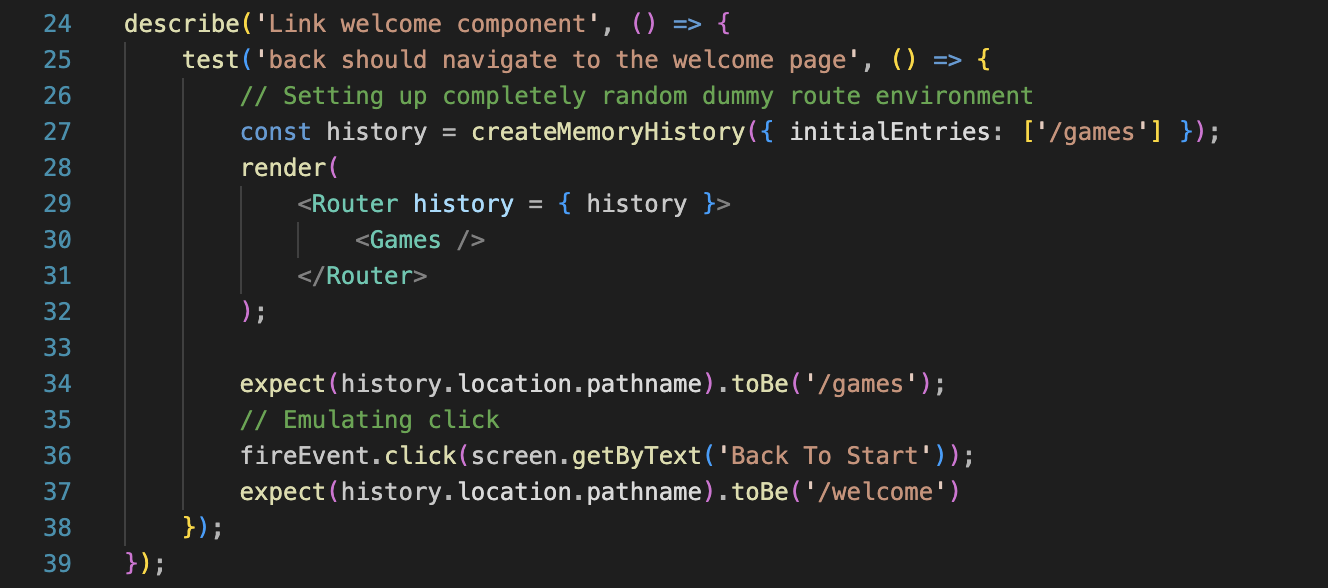

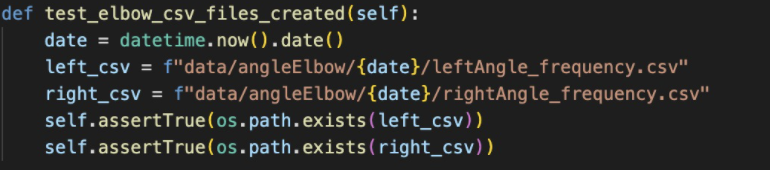

We have developed a client-side application inside Teams. It is therefore essential to use testing methods that give assurance that the usability, stability and functionality of the system satisfy our MoSCoW requirements.

Testing Scope

The application should be tested against simulated user behaviour. Since it serves both patients and physiotherapists, testing should emulate each group's behaviour across a range of scenarios. For example, this involves assessing how the Teams app behaves when MotionInput is run externally with NDI on behalf of a patient, compared with running it locally on the patient's own machine.

Testing Methodology

We integrated unit tests into our NDI script as well as our Teams application. These allowed us to verify that the core functionality of each part of our system behaves consistently. Furthermore, user acceptance testing was carried out with pseudo-users, since finding appropriate test subjects in need of physiotherapy was challenging. Nevertheless, we gathered useful feedback on the user experience of our UI. Usability testing was used to identify potential usability issues for users within our application, whereas user acceptance testing helped validate our requirements.

User Acceptance Testing

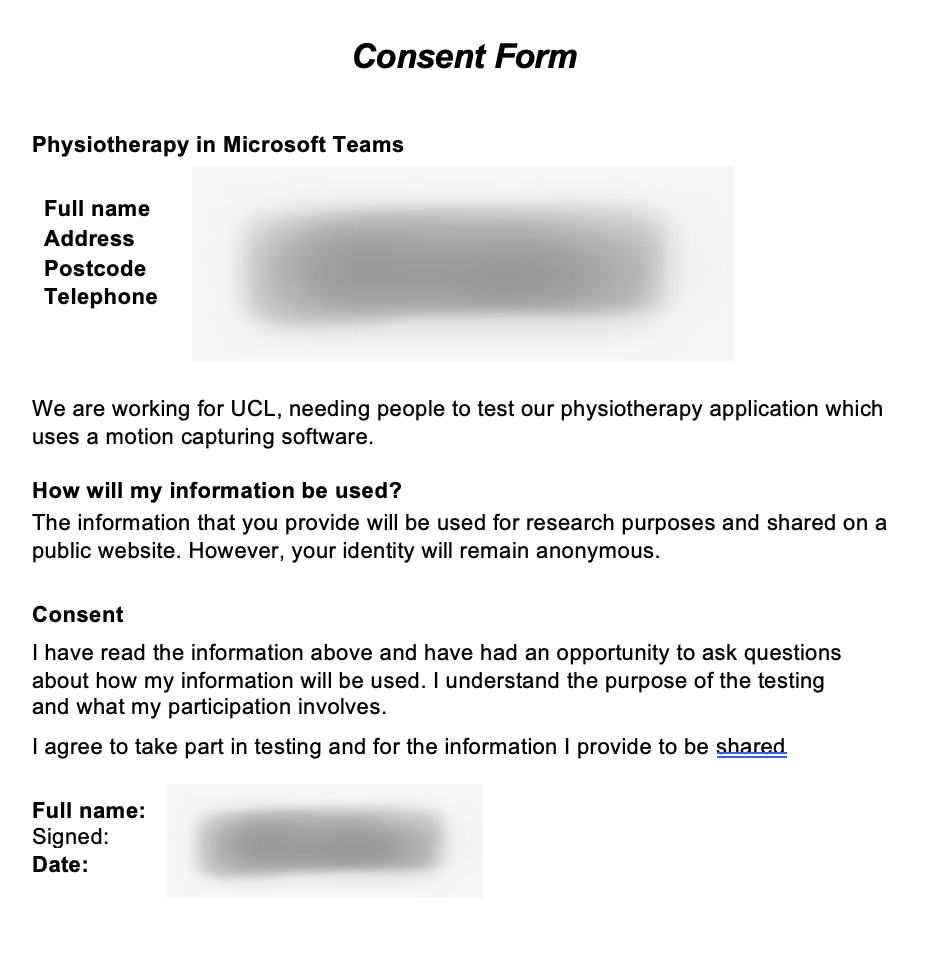

We conducted user acceptance testing to verify that the actual functionality of the system matches the desired functionality with respect to our requirements. One of the main benefits of this is reducing the risk of product failure by catching bugs, errors and imperfections. All user acceptance tests were conducted in person. The environment was set up for each tester, who was placed inside an empty Teams call. Some testers were alone in the call, whereas others were joined by a ‘physiotherapist’, whose purpose was to use the tester's Teams video to run MotionInput for them. A consent form was drafted and handed out to each tester. It is shown below:

Testers

All pseudo-testers are from non-technical backgrounds, so that we could receive authentic feedback. In addition, each real tester embodied a specified pseudo-tester persona, and these personas cover a variety of age ranges.

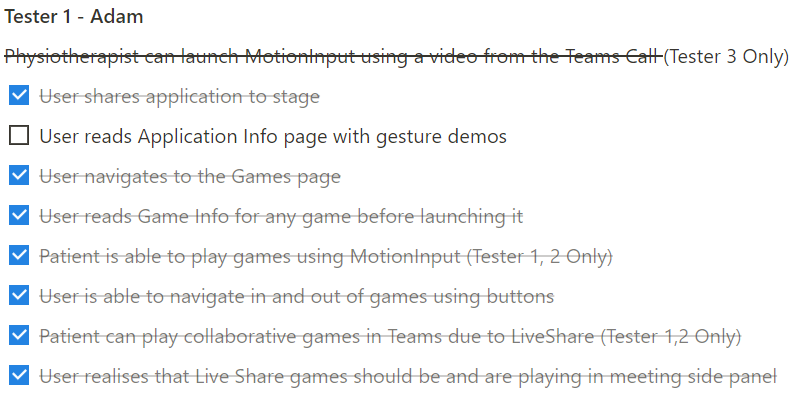

Tester 1 - Stefan Rodriguez

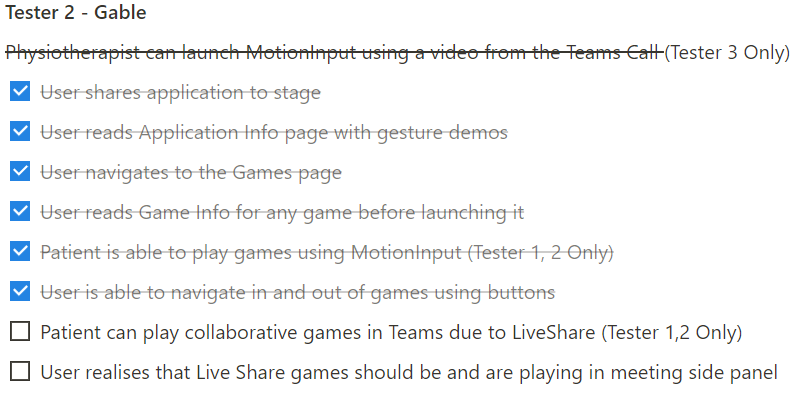

Tester 2 - Karen Baker

Tester 3 - Saif Iqbal

Tester 4 - Jodie Numayer

45 year old physiotherapist who works for the NHS.He has been delivering treatment to patients for nearly 2 decades and often encounters patients in need of physiotherapy.

58 year old, retired tennis playerStruggles to move upper body and spends most of their time in a wheelchair as a result. She relishes to help her shoulder motion which had previously suffered lots due to Tennis injuries. This will enable her to spend quality time with family.

20 year old student at UCLSuffered from a broken elbow and is now in recovery. They are in need of a physiotherapy program that they can easily follow and be motivated to follow, whilst studying.

35 year old, disabled personUnfortunately due to a condition that Jodie was born with, she has partial paralysis from the waist downwards. An effective and consistent physiotherapy program may be able to help her.

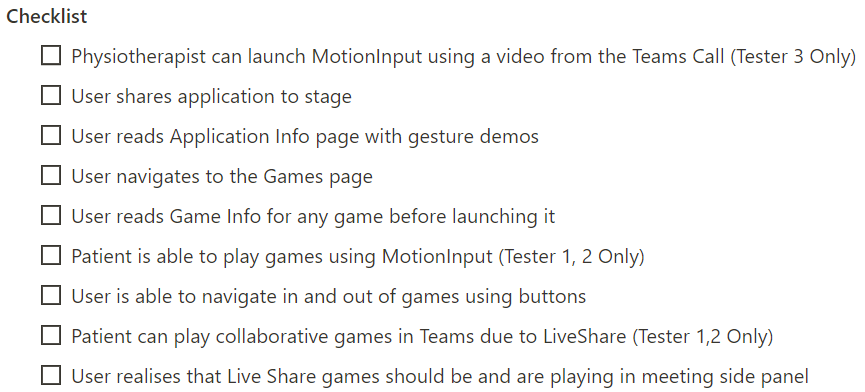

Test Cases

We created 4 key test cases:

Test Case 1: Physiotherapist runs MotionInput on behalf of a patient inside a Teams call.

Test Case 2: Patient joins a Teams call and plays MotionInput games without running MotionInput, since the physiotherapist is running it for them.

Test Case 3: Patient joins a Teams call, launches MotionInput and plays games.

Test Case 4: Patient joins a Teams call, launches MotionInput and plays games with another person on the call. In this case, the other person on the call was a member of our team.

Feedback

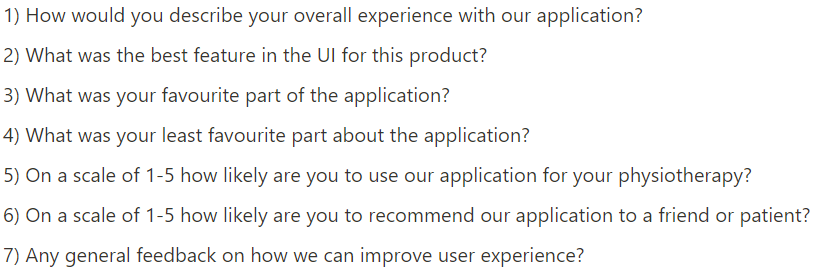

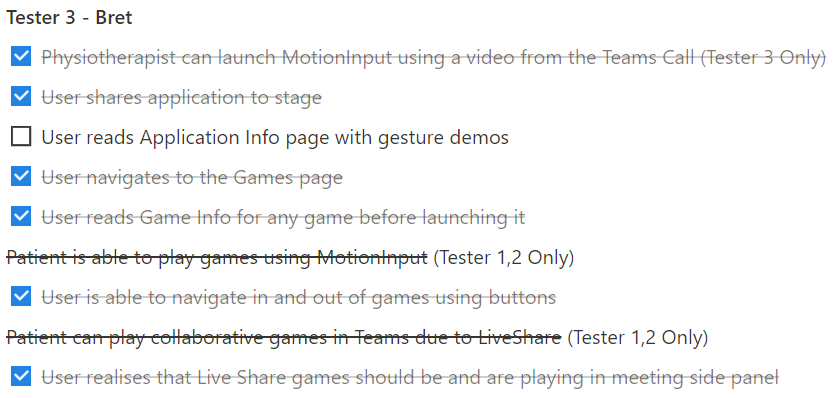

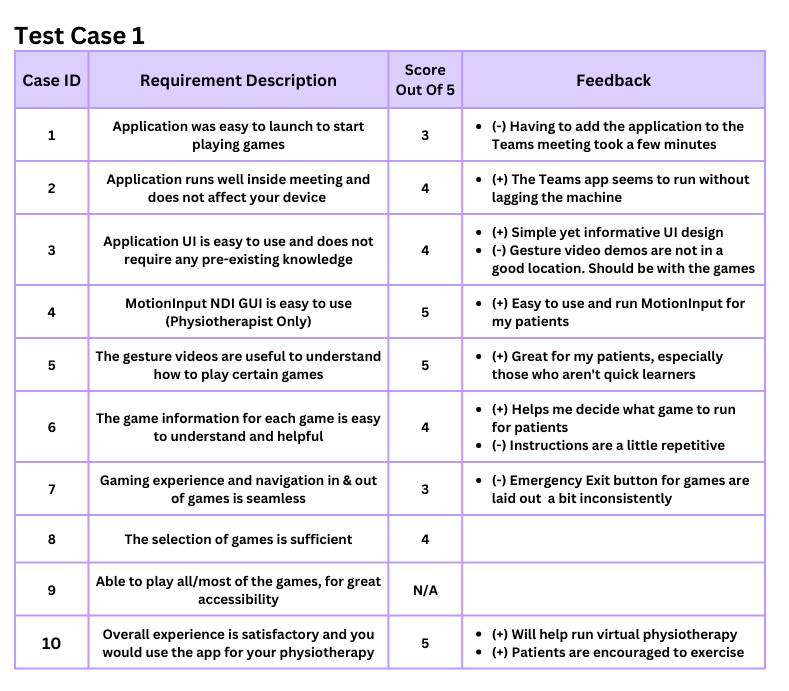

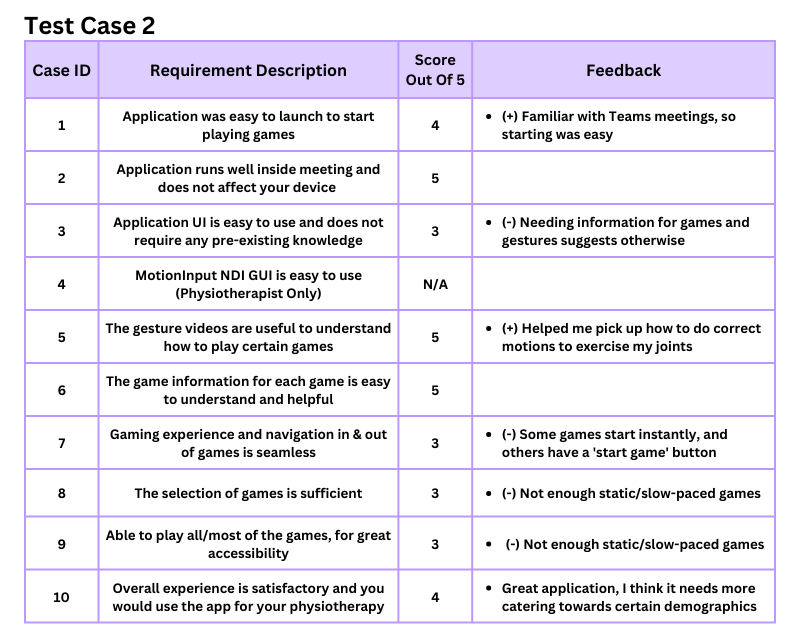

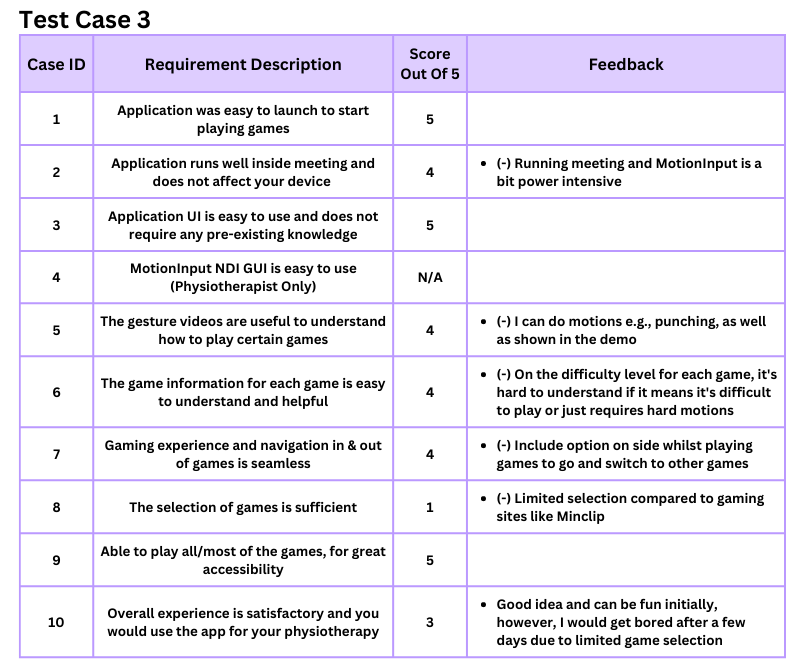

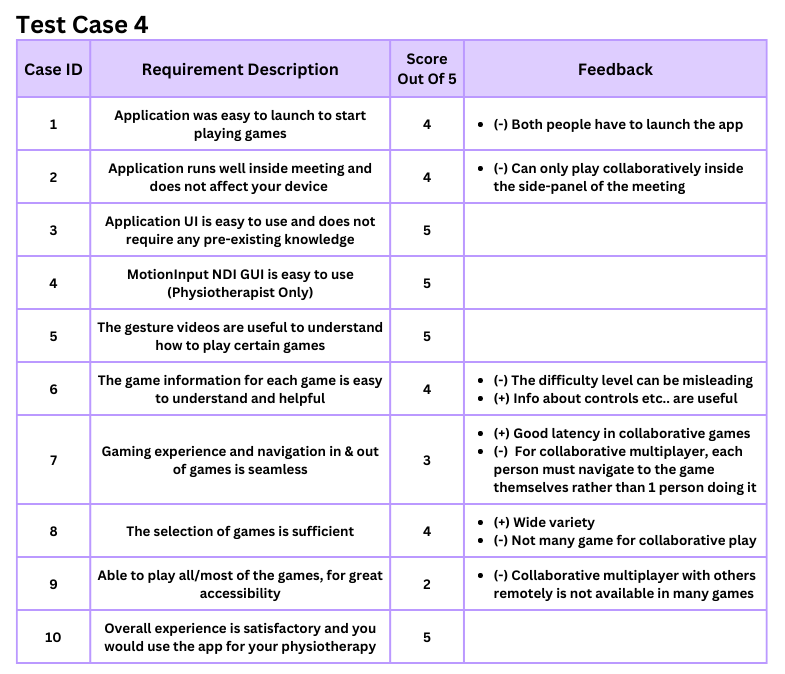

Throughout testing, users were asked to review certain acceptance requirements. We gathered the following feedback, both positive and negative:

Conclusions

Our solution was generally received with excitement and enthusiasm across all age groups, demographics and types of users. Repeated feedback has mostly been omitted. We have taken on board the common themes suggested for improvement and worked on them.

In test case 1, the main issues concerned the layout of the application and duplicated information shown to the user. Since then, we have moved all the game information into modals, which are only visible once the user clicks to view the information. This avoids showing excessive duplicate information. The button layout has also been refactored to give more consistent formatting.

In test case 2, the tester wanted more games suited to her slower motions, as she may still be at an early stage of recovery. Furthermore, cursor visibility issues with the NDI solution limit the number of games available in the app for the patient. Since then, we have implemented a static version of Duck Hunt, as well as many other games that have no time pressure and require only one click.

The tester in test case 3 represented a younger patient. Having grown up with gaming consoles and gaming websites, they expected a wider range of games than our current selection. We are continually working on new games to add to our application.

The tester in test case 4 was happy with the implementation of the LiveShare SDK when playing games, and latency was not an issue. However, they wanted more games to be playable with the LiveShare SDK. This is an area for future extension of the project: we have only had limited time with this SDK, and we are laying out a framework for expansion.