MoSCoW Requirements Status

To reflect on the technical success of the project, we reviewed our MoSCoW requirements list and recorded the completion state and contributors for each requirement.

| ID | Description | Priority | State | Contributors |
|---|---|---|---|---|
| 1 | Design a calibration system to correct the projection from a fixed user perspective | MUST | ✓ | Damian |
| 2 | Obtain an image of the projected area to use as a base for some projection modes | MUST | ✓ | Aishwarya |
| 3 | The calibration process must take less than 3 minutes in total | MUST | ✓ | Damian, Fabian, Aishwarya |
| 4 | Create a GUI to allow the user to set up and run the system (including settings selection for the projection modes) | MUST | ✓ MFC | Fabian |
| 5 | Allow the user to categorise their display screens to retrieve each display’s details (top left coordinates, width, height) | MUST | ✓ | Fabian |
| 6 | Provide a comprehensive user guide to allow for easy setup | MUST | ✓ Showcase website, user manual, instructions on the screen during calibration | Chan, Fabian |
| 7 | System compatibility with most, if not all, commercial games | MUST | ✓ | Fabian, Aishwarya |
| 8 | Create 5+ projection modes, including game and non-game dependent modes | MUST | ✓ | Aishwarya, Fabian, Chan |
| 9 | Capture the TV display to create projection modes which react to the TV content in real-time | MUST | ✓ Blur, Speed Blur, Low Health | Fabian |
| 10 | Capture the TV audio to launch a projection mode when certain sounds are detected e.g., via machine learning (ML) technology | MUST | ✓ Wobble for loud sounds (and explosive sounds e.g., gunfire in the uncompiled build) | Aishwarya |
| 11 | Analyse the TV display to perform real-time weather detection on games and films using ML technology | MUST | ✓ Weather mode | Chan |
| 12 | Include modes which use the features of the room to create animations | MUST | ✓ Snow, Wobble, Cartoon | Aishwarya |
| 13 | Compile the Python project into an executable using Nuitka | MUST | ✓ | Fabian, Aishwarya |
| 14 | Deploy to the Microsoft Store | MUST | ✓ | - |
| 15 | Release the project as an open-source framework on GitHub with detailed documentation | SHOULD | ✓ | All |
| 16 | Create an extendable system using OOP principles to ensure that developers can create their own projection mode designs | SHOULD | ✓ | Aishwarya, Fabian |
| 17 | Compile projector-camera calibration into an EXE usable from the main project | SHOULD | ✓ | Damian |
| 18 | Optimise capturing camera images for the calibration using multithreading technology | SHOULD | ✓ | Fabian, Damian |
| 19 | Allow switching between the projection modes without restarting the application | SHOULD | ✓ | Fabian |
| 20 | System compatibility with films and other TV-based entertainment | COULD | ✓ | Chan, Aishwarya, Fabian |
| 21 | Implement calibration from a fixed user perspective to correct the image or video for a wider variety of backgrounds, such as wall corners [to assist Team 34 in developing their Stellar Cartography system] | COULD | ✓ | Damian |
| 22 | Move calibration implementation to C++ for ease of compilation and improved performance | COULD | ✓ | Damian |
| 23 | Given a sound effect, identify when the sound is heard whilst playing a game or watching a film | COULD | ✗ Investigated audio matching techniques using a single sound file but achieved inaccurate results | |
| 24 | Incorporate hands or facial gesture control to navigate the set of projection modes whilst playing a game | WON'T | N/A | |

Percentage of key functionalities (MUST & SHOULD) completed: 100%

Percentage of optional functionalities (COULD) completed: 75%

Known Bugs

After testing, we refined the system to remove critical bugs. The remaining known bugs are minor.

| ID | Bug Description | Priority |
|---|---|---|
| 1 | The TV and projection area selection windows shown during software calibration need to be improved. Currently, if the user presses the X button on the window (before or after selecting the region of interest, ROI), the same window opens again, and the user is unable to draw a new rectangle for the ROI. The window can only be closed permanently by pressing ‘c’, which cancels any previous ROI selection and forces the user to start the software calibration process again. This should be handled more gracefully in the future. | Minor |
| 2 | Projection area selection can sometimes produce black regions in the perspective-transformed image when the four selected corners are too close together. This should not be a problem for our use case, however, because the projection area will be large, so the four selected corners will be far apart. | Minor |
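One possible mitigation for bug 2 is to reject a corner selection whose quadrilateral is too small before the perspective transform is applied. The sketch below is only an illustration of that idea, not part of the existing system: the function names and the `min_fraction` threshold of 5% of the image area are assumptions, and it expects the corners to be listed in order around the perimeter.

```python
def quad_area(corners):
    """Area of a polygon via the shoelace formula.

    `corners` is a list of (x, y) tuples given in order around the
    perimeter (clockwise or anticlockwise), with no self-intersection.
    """
    area = 0.0
    n = len(corners)
    for i in range(n):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % n]
        area += x1 * y2 - x2 * y1
    return abs(area) / 2.0


def corners_are_usable(corners, image_width, image_height, min_fraction=0.05):
    """Return True if the four corners span enough of the image.

    `min_fraction` is an assumed threshold, not a value taken from the
    project; it would need tuning against real calibration images.
    """
    if len(corners) != 4:
        return False
    return quad_area(corners) >= min_fraction * image_width * image_height
```

If the check fails, the selection window could prompt the user to pick the corners again rather than continuing with a degenerate transform.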

Individual Contribution

Here is a breakdown of the team's individual contributions to the project from when it commenced in October 2022. We assigned different weights to each task as some required more effort than others (e.g., system development and the portfolio website).

| Task | Weighting | Fabian (%) | Aishwarya (%) | Damian (%) | Chan (%) |
|---|---|---|---|---|---|
| Client Liaison | 0.04 | 60 | 15 | 20 | 5 |
| HCI & Requirement Analysis | 0.035 | 30 | 35 | 20 | 15 |
| Research & Experiments | 0.035 | 25 | 25 | 25 | 25 |
| Elevator Pitch Presentation | 0.03 | 35 | 35 | 15 | 15 |
| Project Demonstrations | 0.045 | 30 | 25 | 30 | 15 |
| Microsoft Imagine Cup '23 | 0.045 | 40 | 40 | 10 | 10 |
| System Development | 0.55 | 30 | 30 | 30 | 10 |
| Testing | 0.03 | 20 | 40 | 20 | 20 |
| Video Editing | 0.0325 | 82 | 6 | 6 | 6 |
| Blogs | 0.0325 | 25 | 25 | 25 | 25 |
| Portfolio Website Content/Development | 0.125 | 29 | 29 | 22 | 20 |
| Overall Contribution (By Weighted Average) | 1 | 32.73 | 29.01 | 25.48 | 12.78 |
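The Overall Contribution row is the sum, over all tasks, of each task's weighting multiplied by the member's percentage for that task. As a minimal sketch of that arithmetic (the variable and function names are illustrative only), the figures can be reproduced from the table as follows:

```python
MEMBERS = ("Fabian", "Aishwarya", "Damian", "Chan")

# (weighting, percentages in MEMBERS order), copied from the table above.
CONTRIBUTIONS = {
    "Client Liaison": (0.04, (60, 15, 20, 5)),
    "HCI & Requirement Analysis": (0.035, (30, 35, 20, 15)),
    "Research & Experiments": (0.035, (25, 25, 25, 25)),
    "Elevator Pitch Presentation": (0.03, (35, 35, 15, 15)),
    "Project Demonstrations": (0.045, (30, 25, 30, 15)),
    "Microsoft Imagine Cup '23": (0.045, (40, 40, 10, 10)),
    "System Development": (0.55, (30, 30, 30, 10)),
    "Testing": (0.03, (20, 40, 20, 20)),
    "Video Editing": (0.0325, (82, 6, 6, 6)),
    "Blogs": (0.0325, (25, 25, 25, 25)),
    "Portfolio Website Content/Development": (0.125, (29, 29, 22, 20)),
}


def overall_contribution(table):
    """Weighted sum of each member's percentages, rounded to 2 d.p."""
    totals = [0.0] * len(MEMBERS)
    for weight, shares in table.values():
        for i, share in enumerate(shares):
            totals[i] += weight * share
    return {member: round(total, 2) for member, total in zip(MEMBERS, totals)}


result = overall_contribution(CONTRIBUTIONS)
```

Because the weightings sum to 1 and each row's percentages sum to 100, the four overall figures also sum to 100.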

Critical Evaluation of the Project

We investigated how well the final project performed against several criteria.

User Experience

We made every effort to ensure that the project is simple for users to understand. The MFC app was designed with a user-friendly interface that exposes only the options that are required. In addition, we wrote guided manuals to assist users when troubleshooting. However, as the Known Bugs table indicates, there are still areas where the UX can be improved.

Functionality

We completed 100% of the key functionalities (MUST and SHOULD) from our MoSCoW requirements and 75% of the optional functionalities (COULD). All necessary targets were finished within the deadline. As a result, we achieved what was required to deploy the app to the Microsoft Store.

Stability

After conducting performance and user acceptance tests, we amended our system as explained in System Refinement. This gave us the opportunity to incorporate better user input validation and increase the percentage of crash-free application sessions. However, exception handling still needs to be improved throughout the system.

Compatibility

We have designed the system so that it can run on any laptop with the Windows operating system, and so that it is compatible with any projector and webcam or smartphone camera.

Our project can be used not only for playing games, but also for other TV-based entertainment such as films. By utilising the different projection modes, users are led on a variety of immersive journeys. Furthermore, we will deploy our project to the Microsoft Store and release it as an open-source framework on GitHub so that other developers can continue our vision and develop more projection modes.

Maintainability

We have created extensive documentation of the project in the form of this project website, code comments and docstrings, and README files. These outline the system architecture and the implementation of key features, and provide a starting point for the next developers involved in the project.

Project Management

The project progressed smoothly thanks to participation from our team members. A Notion page was used to manage our individual tasks and deadlines, and the project Gantt chart guided the overall timeline.

Future Work

Our work on UCL Open-Illumiroom V2 was just the beginning, and the project will now continue to be worked on by many more students! Here are some of our ideas for ways in which the project can be improved and extended.

- System architecture: As mentioned in Memory Usage in Testing, in the current implementation of UCL Open-Illumiroom V2, each time the user selects and launches a projection mode, the entire system is re-initialised. To reduce latency when initialising the mode and to ensure the memory usage is stable when the application is running, the system must be adapted to ensure utility classes are initialised only once. This is because it is assumed that the user will launch and switch between multiple projection modes during each session.

Another area for improvement is exception handling, especially in the system setup.
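One way to initialise a utility class only once is to hand it out through a cached factory function, so that every projection mode launched in a session receives the same instance. The sketch below shows the idea under stated assumptions: `DisplayCapture`, `get_display_capture` and `ProjectionMode` are hypothetical stand-ins, not the project's actual classes.

```python
from functools import lru_cache


class DisplayCapture:
    """Hypothetical stand-in for a utility class that is costly to set up."""

    instances_created = 0

    def __init__(self):
        # Count constructions so the effect of caching is observable.
        DisplayCapture.instances_created += 1


@lru_cache(maxsize=None)
def get_display_capture():
    """Create the utility on first use and return the same object afterwards."""
    return DisplayCapture()


class ProjectionMode:
    """Hypothetical mode that borrows the shared utility instead of building it."""

    def __init__(self, name):
        self.name = name
        self.capture = get_display_capture()


# Launching two modes in one session constructs the utility only once.
blur = ProjectionMode("Blur")
snow = ProjectionMode("Snow")
```

Passing the shared instance into each mode's constructor explicitly would achieve the same result and is easier to test. The cached factory is shown only because it needs the fewest changes to calling code.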

- Calibration: Calibration is currently only supported for modes which don't map effects onto the image of the projection area, such as Blur and Low Health. For modes such as Snow, Wobble and Cartoon, the calibration will need to be adapted in the future.

- Improved audio classification: Given a single WAV file of a sound effect, the system could be extended to identify in real-time whether the same sound is heard while playing a game or watching a film. This could then be used as a trigger for certain projection modes. To do this, the developer would need to consider different audio processing and matching techniques. It would be challenging to implement, because sound effects are frequently combined and overlaid in media. For example, the Hadouken sound effect in Street Fighter, which could be used to trigger a mode that causes the background wall to shake or crack, doesn’t occur in isolation. The sound is frequently overlaid with game music, other characters speaking, other sound effects and so on.

Another idea for a mode which performs audio analysis was conceptualised when talking to Daniel Vance, the Lead Technology & Equipment Specialist at Team Gleason Foundation, who are working to create assistive technology for patients with Motor Neurone Disease. One notable recommendation was to analyse the music to infer the emotions being conveyed on the TV. Based on the music’s key, colours or other visual patterns could be displayed to engage individuals with hearing impairments. Additionally, this could help people who have difficulty reading social cues and/or facial expressions, such as some neurodiverse people.

If further audio analysis is undertaken, it is highly recommended that the entire project be translated from Python into C++ to avoid Nuitka compilation errors when using popular libraries like Librosa.
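As a starting point for the sound effect matching idea, one baseline technique is normalised cross-correlation: slide the reference sound along the captured audio and score how closely each window matches. This is a sketch under stated assumptions and not the approach we investigated. The function names and the 0.8 threshold are illustrative, samples are plain lists of floats, and the pure-Python loops are far too slow for real-time use (a vectorised or FFT-based version would be needed). It is also likely to struggle with the overlaid audio described above.

```python
import math


def normalised_cross_correlation(signal, template):
    """Score every alignment of `template` within `signal`.

    Returns one score in [-1, 1] per offset; 1 means the window has the
    same shape as the template once mean and scale are removed.
    """
    m = len(template)
    t_mean = sum(template) / m
    t_centred = [t - t_mean for t in template]
    t_norm = math.sqrt(sum(t * t for t in t_centred))
    scores = []
    for offset in range(len(signal) - m + 1):
        window = signal[offset:offset + m]
        w_mean = sum(window) / m
        w_centred = [w - w_mean for w in window]
        w_norm = math.sqrt(sum(w * w for w in w_centred))
        denom = t_norm * w_norm
        if denom == 0.0:
            # Silent (constant) window or template: no meaningful score.
            scores.append(0.0)
        else:
            dot = sum(a * b for a, b in zip(w_centred, t_centred))
            scores.append(dot / denom)
    return scores


def find_sound(signal, template, threshold=0.8):
    """Return the best-matching offset, or None if no score reaches `threshold`."""
    scores = normalised_cross_correlation(signal, template)
    if not scores:
        return None
    best = max(range(len(scores)), key=scores.__getitem__)
    return best if scores[best] >= threshold else None
```

A mode could call `find_sound` on a rolling buffer of captured audio and trigger its effect when an offset is returned. In practice the threshold would need tuning against real game audio.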

-

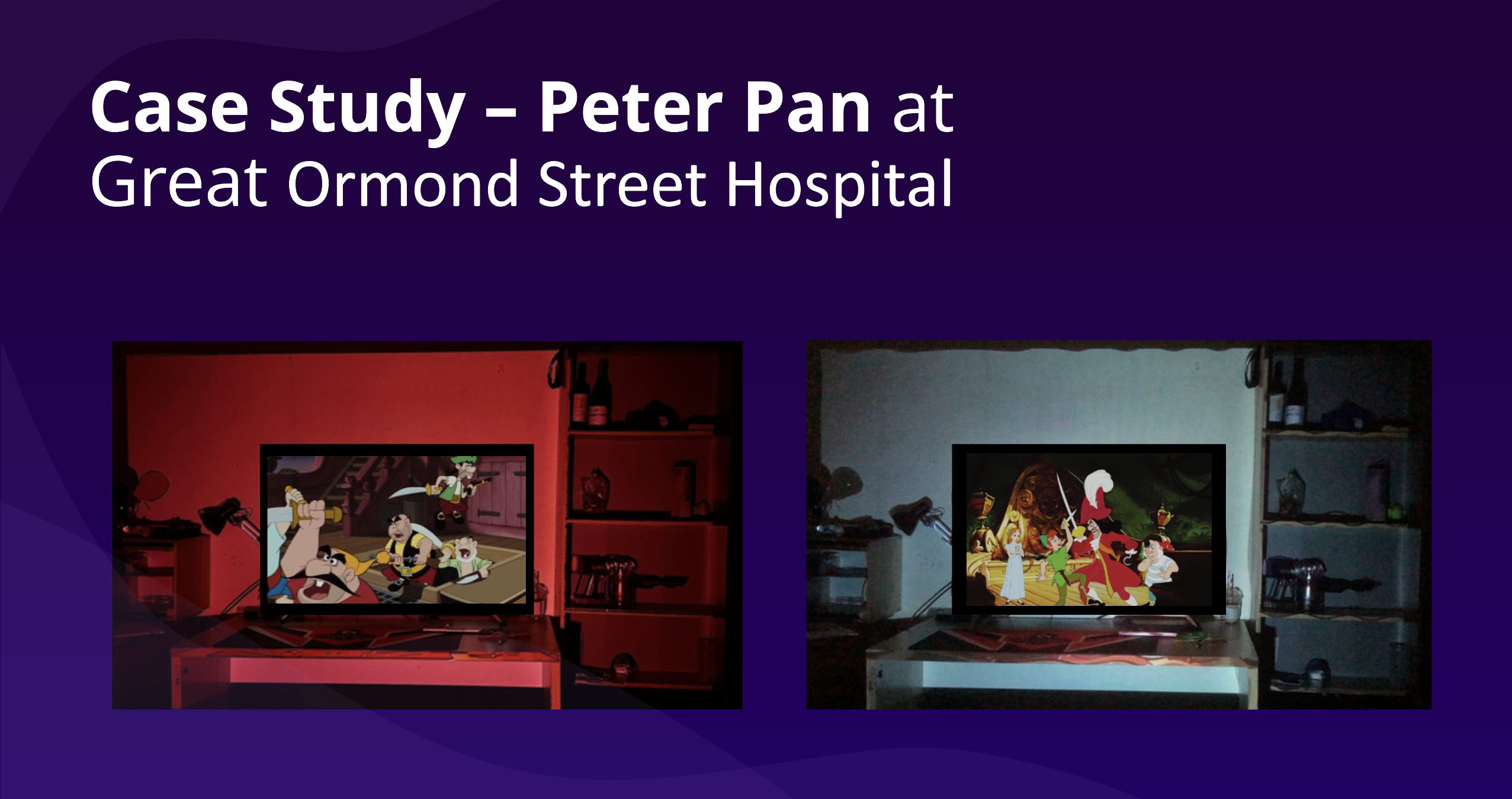

Pre-programmed effects: A projection mode could be created that would allow content producers to add projection effects at predetermined times to a preselected video, such as a film or TV show. An example of this was given by Prof Dean Mohamedally, who suggested that Great Ormond St Hospital (GOSH) could use their rights to the Peter Pan franchise to create an interactive and immersive version of the film, with effects displayed using UCL Open-Illumiroom V2.

For example, sparkles could be projected to represent the ‘pixie dust’ used by the characters Wendy, John and Michael to fly. In our presentation at the Imagine Cup UK Finals, we showed how our existing modes might be storyboarded as indicated in the image of our slide below.
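A pre-programmed effects mode could be driven by a simple cue list that maps time ranges in the video to projection modes. The following is a minimal sketch of such a timeline, assuming non-overlapping cues and times in seconds from the start of playback; `Cue` and `EffectTimeline` are hypothetical names and no such classes exist in the current system.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Cue:
    start_s: float  # time the effect begins (inclusive)
    end_s: float    # time the effect ends (exclusive)
    mode: str       # name of the projection mode to show


class EffectTimeline:
    """Looks up which mode, if any, should be active at a playback time."""

    def __init__(self, cues):
        # Sort once so each lookup is a binary search over the start times.
        self._cues = sorted(cues, key=lambda cue: cue.start_s)
        self._starts = [cue.start_s for cue in self._cues]

    def active_mode(self, t):
        # Find the last cue that starts at or before t.
        i = bisect_right(self._starts, t) - 1
        if i >= 0 and t < self._cues[i].end_s:
            return self._cues[i].mode
        return None


timeline = EffectTimeline([
    Cue(start_s=65.5, end_s=70.0, mode="Wobble"),
    Cue(start_s=10.0, end_s=20.0, mode="Snow"),
])
```

During playback, the application would poll `timeline.active_mode(current_time)` and switch modes whenever the returned name changes.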

- Data visualisation - business: The system could be used to visualise data and graphs in business meetings. This would allow teams to share more information, increasing productivity. Additionally, a ChatGPT-based system could be passed audio prompts from users in a room, perhaps during a meeting, and the generated text could then be displayed by UCL Open-Illumiroom V2, providing ideas and inspiration and once again increasing productivity.

- Data visualisation - sports: Sporting events often generate huge amounts of data that users may have to look up on their phones, distracting from the main sporting event on their TV. In football, information about player line-ups, team and player performance, expected and successful goals and more could be displayed directly to the user, engaging them more in the analytical side of the sport.

Motorsport also offers an exceptional opportunity for data to be generated and displayed to the user. In a Formula 1 race, there are 20 cars, all competing against each other, and it can often be difficult to understand what is happening on track. An experiment was run using the MultiViewer for F1 app to see how adding extra visualisations on a projected display would impact the F1 viewing experience. The result was spectacular! Below is a short clip from the 2023 Jeddah Grand Prix Practice 2 session, courtesy of F1TV Pro.

Being able to see the track map showing all drivers on track, a driver’s point of view, and driver timing and tyre data, all around the TV, gave an extraordinarily immersive feeling of being a driver engineer, monitoring the race from the pit wall.

Such experiences could be massively expanded to other sports with UCL Open-Illumiroom V2. Open-source data from APIs could be retrieved and displayed on movable tiles in the projection window. These tiles could be dynamically shifted around the TV depending on their size and the space available in the projection area.