Testing

Automated Tests

Testing is a fundamental aspect of the software development process, as it helps ensure the quality, reliability, and functionality of the application.

Without a comprehensive testing strategy, software projects are prone to bugs, errors, and unexpected behavior, which can lead to user dissatisfaction, security vulnerabilities, and even complete system failures.

To automate and streamline our testing, we adopted Continuous Integration (CI) practices using GitHub Actions. GitHub Actions is a CI/CD (Continuous Integration/Continuous Deployment) platform that lets us run our test suites automatically, so that every code change or commit triggers a comprehensive testing process.

Unit Tests

Unit testing is a fundamental testing approach that focuses on verifying the individual components or units of a software system. These units are typically the smallest testable parts of an application, such as functions, methods, or classes.

1.1 Functional Unit Test

As detailed in our implementation section, we broke the system down into modular parts to facilitate development. Among the many benefits of this approach was the ability to write unit tests for each module, ensuring that the individual components of the system function as expected.

Due to the nature of our application, however, several aspects made unit testing challenging. Chief among them were the non-deterministic parts of the system, such as the results returned by the scraper and the chunking of documents.

A key insight, suggested by our TA, was to split each method into granular deterministic and non-deterministic parts. The non-deterministic parts are then isolated and can be mocked, while the deterministic parts can be tested directly. For example, we mocked the scraper's responses, which let us simulate different scenarios and test the system's behavior under various conditions.
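The sketch below illustrates this split using stand-in classes. `Scraper`, `PDFContent`, `TextContent` and `_parse_content` reuse the names from the tests below, but their bodies here are simplified assumptions. `_fetch` and `scrape` are hypothetical methods, and the real scraper's structure may differ. Only the network-bound `_fetch` is mocked, using `unittest.mock.patch.object`, so the deterministic parsing logic still runs for real.

```python
from unittest.mock import patch


class PDFContent:
    def __init__(self, data):
        self.data = data


class TextContent:
    def __init__(self, text):
        self.text = text


class Scraper:
    """Stand-in scraper split into a non-deterministic and a deterministic part (hypothetical)."""

    def _fetch(self, url: str):
        # Non-deterministic part (hypothetical): real network I/O, results vary between runs.
        raise NotImplementedError("network access is not available in tests")

    def _parse_content(self, url: str, content):
        # Deterministic part: the same (url, content) always yields the same result.
        if url.lower().endswith(".pdf"):
            return PDFContent(content)
        return TextContent(content)

    def scrape(self, url: str):
        # Hypothetical thin composition of the two parts.
        return self._parse_content(url, self._fetch(url))


def test_scrape_with_mocked_fetch():
    scraper = Scraper()
    # Replace only the non-deterministic part with a canned response.
    with patch.object(Scraper, "_fetch", return_value="<p>hello</p>") as fake_fetch:
        result = scraper.scrape("https://example.com/page.html")
    fake_fetch.assert_called_once_with("https://example.com/page.html")
    assert isinstance(result, TextContent)
    assert result.text == "<p>hello</p>"
```

Keeping `scrape` as a thin wrapper means nearly all of the logic lives in the deterministic part, which can be tested without any mocking.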

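```python
import pytest

# Module path is assumed; adjust to wherever Scraper and the content types live.
from scraper import PDFContent, Scraper, TextContent


@pytest.fixture
def scraper() -> Scraper:
    return Scraper()


def test_parse_content_pdf(scraper):
    # A .pdf URL is expected to yield a PDFContent object.
    url = "something.pdf"
    content = b"randomtextcontent"
    res = scraper._parse_content(url, content)
    assert isinstance(res, PDFContent)


def test_parse_content_text(scraper):
    # An .html URL is expected to yield a TextContent object.
    url = "something.html"
    content = "randomtextcontent"
    res = scraper._parse_content(url, content)
    assert isinstance(res, TextContent)
```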

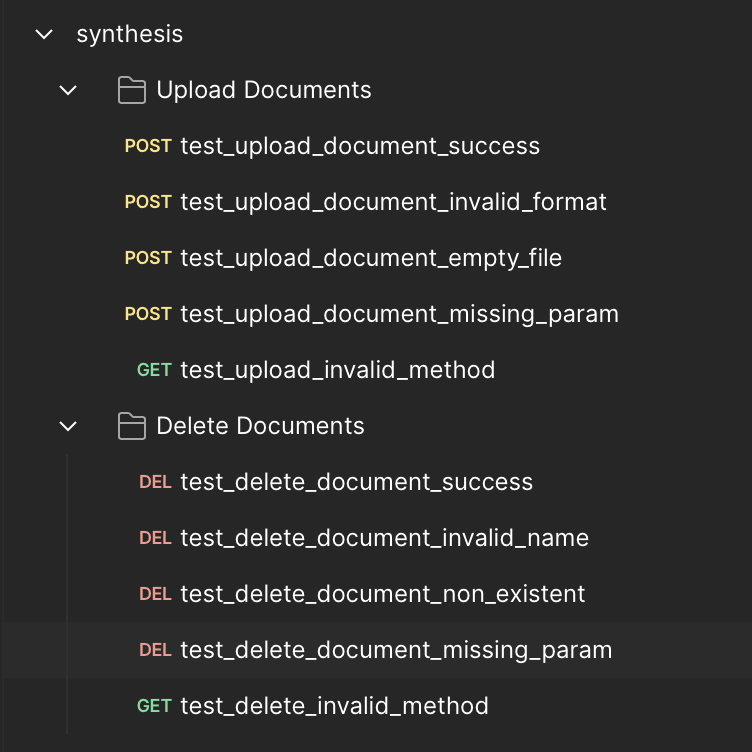

1.2 API Testing

In addition to the core functionality of the system, we also wrote tests for the API endpoints. This was particularly important because the API serves as the interface between the chatbot and the user, and any issue with it could lead to incorrect responses or system failures.

The testing was done in Postman, where we wrote a collection of tests covering the various endpoints and scenarios. This allowed us to simulate different user queries and responses, ensuring that the API behaved as expected under different conditions.
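Postman test scripts are written in JavaScript and run inside Postman. The sketch below is a rough Python analogue of the same kind of checks: it verifies status codes and response shape. It uses only the standard library, starting a local stand-in server and then calling it. The `/chat` route, the `{"query": ...}` payload, and the `response` and `error` fields are all hypothetical; they are not the project's actual API.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Opener that ignores proxy environment variables, so localhost requests go direct.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class ChatHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in endpoint: POST /chat with a JSON body {"query": ...}."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length) or b"{}")
        except json.JSONDecodeError:
            payload = {}
        query = payload.get("query", "") if isinstance(payload, dict) else ""
        if self.path != "/chat":
            status, body = 404, {"error": "not found"}
        elif not isinstance(query, str) or not query.strip():
            status, body = 400, {"error": "query must be a non-empty string"}
        else:
            status, body = 200, {"response": f"echo: {query}"}
        data = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):
        pass  # keep test output quiet


def post_json(base_url, path, payload):
    """POST a JSON payload and return (status_code, parsed_json_body)."""
    req = urllib.request.Request(
        base_url + path,
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with _OPENER.open(req) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions; their bodies are still readable.
        return err.code, json.loads(err.read())


def run_api_checks():
    server = HTTPServer(("127.0.0.1", 0), ChatHandler)  # port 0: let the OS pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"
    try:
        status, body = post_json(base, "/chat", {"query": "hello"})
        assert status == 200 and "response" in body  # happy path
        status, body = post_json(base, "/chat", {"query": ""})
        assert status == 400 and "error" in body  # empty query is rejected
        status, _ = post_json(base, "/unknown", {"query": "hello"})
        assert status == 404  # unknown route
    finally:
        server.shutdown()
        server.server_close()
    return True
```

To test a real API, `post_json` and the assertions in `run_api_checks` would carry over unchanged. The stand-in server would be replaced by the deployed base URL.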

System Tests

2.1 Boundary Testing

In the context of this project, we recognized the importance of end-to-end (E2E) testing, as it allows us to assess the application's overall performance, usability, and resilience under real-world conditions. However, much of our E2E testing was conducted informally, since our goal was to explore the boundaries and edge cases of the system's behavior.

2.1.1 User Queries

Some of the key aspects we tested include the system's response to different types of user queries:

- Queries with special characters

- Queries with multiple keywords

- Queries with misspelled words

- Queries with long sentences

- Queries with multiple sentences

- Typoglycemia queries (words whose interior letters are scrambled)
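The query categories above can be generated systematically from a single base query. The sketch below is a hypothetical harness, not the one we used. `BASE_QUERY` is a made-up example. `typoglycemia` keeps each word's first and last letters fixed and shuffles the rest, using a fixed seed so runs are reproducible. `run_boundary_suite` takes any `answer_fn` standing in for the chatbot and reports which variants raised an exception or produced an empty answer. It checks robustness only, not answer quality.

```python
import random
import re

# Hypothetical example query; any representative user question would do.
BASE_QUERY = "Where can I find the opening hours of the library?"


def typoglycemia(text: str, seed: int = 0) -> str:
    """Shuffle the interior letters of each word, keeping first and last letters fixed."""
    rng = random.Random(seed)  # fixed seed so the variant is reproducible

    def scramble(match: re.Match) -> str:
        word = match.group(0)
        if len(word) <= 3:
            return word  # no interior worth shuffling
        middle = list(word[1:-1])
        rng.shuffle(middle)
        return word[0] + "".join(middle) + word[-1]

    # Only runs of ASCII letters are scrambled; spaces and punctuation stay put.
    return re.sub(r"[A-Za-z]+", scramble, text)


# One hypothetical variant per category in the list above.
QUERY_VARIANTS = {
    "special_characters": BASE_QUERY + " $$ <> #@! %",
    "multiple_keywords": "library opening hours weekend holidays exams",
    "misspelled": "Were can I fnd the openin hours of the libary?",
    "long_sentence": BASE_QUERY.rstrip("?") + " " + " and ".join(["on weekends", "during exams"] * 10) + "?",
    "multiple_sentences": "I am a new member. " + BASE_QUERY + " Is it open on Sundays?",
    "typoglycemia": typoglycemia(BASE_QUERY),
}


def run_boundary_suite(answer_fn) -> list:
    """Return the names of variants for which answer_fn raised or returned an empty answer."""
    failures = []
    for name, query in QUERY_VARIANTS.items():
        try:
            response = answer_fn(query)
        except Exception:
            failures.append(name)
            continue
        if not response or not str(response).strip():
            failures.append(name)
    return failures
```

In practice, `answer_fn` would wrap whatever call sends a query to the chatbot. A stub that always returns a non-empty string produces an empty failure list.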

2.1.2 Limited Website Sources

One of the key boundary conditions we investigated during our E2E testing was the system's behavior when it has access to only a limited set of websites or data sources. This scenario is particularly relevant because the application's core functionality relies on the availability and quality of the underlying data sources.

To simulate this scenario, we restricted the system's access to certain websites and data sources and observed how it responded to user queries that required information from them.
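One way to simulate restricted sources is to wrap the component that fetches pages with an allow-list. The sketch below assumes that all fetching goes through a single callable. `RestrictedFetcher` is a hypothetical class, and so is its choice to return `None` for blocked URLs; neither reflects how our system is actually wired.

```python
from urllib.parse import urlparse


class RestrictedFetcher:
    """Hypothetical wrapper that only lets requests through to allow-listed hosts."""

    def __init__(self, fetch, allowed_hosts):
        self._fetch = fetch  # the real (or mocked) page-fetching callable
        self._allowed = {host.lower() for host in allowed_hosts}
        self.blocked = []  # URLs that were refused, kept for later inspection

    def __call__(self, url: str):
        host = (urlparse(url).hostname or "").lower()
        if host not in self._allowed:
            self.blocked.append(url)
            return None  # behave as if the source were unavailable
        return self._fetch(url)


# Usage: only example.com is reachable; every other source looks unavailable.
fetcher = RestrictedFetcher(lambda url: f"<html>{url}</html>", ["example.com"])
allowed_page = fetcher("https://example.com/about")
blocked_page = fetcher("https://other-site.org/news")
```

Because the check matches the exact host name, `www.example.com` and `example.com` count as different hosts. The `blocked` list shows which sources a query would have needed.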

In these situations, we observed two distinct behaviors:

- Graceful degradation: in some cases, the system still provided relevant and accurate responses by leveraging its existing knowledge and generalizing from the user's prompt.

- Hallucinations: in other cases, the system generated inaccurate or irrelevant responses, indicating that the underlying models could not provide meaningful information without access to the required data sources.

Responsive Design Testing

By testing the application on a diverse set of devices and browsers, we were able to identify and address layout issues, content display problems, and functionality breakdowns caused by differences in viewport sizes, rendering engines, or browser-specific quirks.
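The section does not say how these device and browser checks were carried out. The sketch below shows one possible way to automate part of them using Playwright's Python API. It opens a page at several viewport sizes in Chromium, Firefox, and WebKit, then flags pages whose content is wider than the viewport, a common symptom of a broken responsive layout. The viewport sizes are illustrative assumptions. The check only catches horizontal overflow, not every layout or rendering issue.

```python
from itertools import product

# Illustrative viewport sizes in CSS pixels (assumed, not the devices we tested on).
VIEWPORTS = {
    "mobile": (375, 667),
    "tablet": (768, 1024),
    "desktop": (1440, 900),
}
BROWSERS = ("chromium", "firefox", "webkit")


def build_matrix():
    """Every (browser, device, width, height) combination to check."""
    return [
        (browser, device, w, h)
        for browser, (device, (w, h)) in product(BROWSERS, VIEWPORTS.items())
    ]


def find_overflowing_layouts(url):
    """Load url in every combination and return (browser, device) pairs with horizontal overflow.

    Requires Playwright: `pip install playwright` followed by `playwright install`.
    """
    from playwright.sync_api import sync_playwright  # imported lazily: optional dependency

    problems = []
    with sync_playwright() as p:
        browsers = {name: getattr(p, name).launch() for name in BROWSERS}
        try:
            for browser_name, device, w, h in build_matrix():
                page = browsers[browser_name].new_page(viewport={"width": w, "height": h})
                page.goto(url)
                # Content wider than the visible area usually means the layout did not adapt.
                overflow = page.evaluate(
                    "document.documentElement.scrollWidth > document.documentElement.clientWidth"
                )
                if overflow:
                    problems.append((browser_name, device))
                page.close()
        finally:
            for browser in browsers.values():
                browser.close()
    return problems
```

Launching each browser once and reusing it across viewports keeps the run short. Problems such as overlapping elements or unreadable text still need manual review or screenshot comparison.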

User Acceptance Testing

3.1 Client Feedback

One of the key aspects of our user acceptance testing (UAT) approach has been regular engagement with our client during our weekly meetings. These sessions have given the client a platform to interact directly with the application, explore its features, and give candid feedback on its usability, functionality, and overall alignment with their requirements.

During these collaborative sessions, the client has played an active role in identifying areas for improvement, highlighting pain points, and suggesting enhancements to the application. We have documented and prioritized this feedback, using it as a roadmap to guide our iterative development and testing efforts.

3.2 End-User Feedback

In addition to the client-focused UAT, we have also engaged a diverse group of end-user representatives in the testing process. These individuals, randomly selected to represent our target user base, have provided valuable insights and feedback that have further shaped the development of the application.

By observing these representatives as they interact with the application, we have been able to identify areas of confusion, pain points, and opportunities for improvement that may have been overlooked during our internal testing. Their feedback has helped us uncover nuanced usability issues, refine the information architecture, and make the application more intuitive overall.