System Architecture

The PixelPilot Visual Studio Code extension architecture consists of both front-end and back-end components, designed to facilitate interaction between users and the system's AI capabilities.

The front-end is built on the VS Code Extension Framework API. It captures user interactions, such as chat messages or prompt inputs, and forwards them to the back-end for processing. The extension runs within the VS Code environment and is the main access point for users: it handles user input, displays outputs such as code or images, and provides the interactive elements that guide the user experience. The front-end communicates with the back-end through structured API calls, so that requests are processed and responses are returned in real time.

The back-end contains the core logic for prompt refinement and image generation. Together, these components interpret the user’s intent and refine each prompt before routing it to the appropriate AI model. The image generation logic converts user prompts into visual assets, such as game sprites or concept art, while the prompt refinement logic turns ambiguous or incomplete inputs into clearer, more actionable tasks for the models.

Underpinning the back-end is a collection of AI models, including both Offline Models and Copilot Models. These models generate the actual outputs, whether code or images, and return the results to the back-end for delivery to the front-end interface. Offline models are tailored for local execution, enabling children to generate content without an internet connection, while Copilot models provide more advanced capabilities, potentially leveraging cloud-based resources for greater performance.
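The choice between the two model families can be sketched as a small selection function. This is only an illustration under stated assumptions: the names `ModelBackend` and `selectBackend`, and the rule of falling back to the offline models whenever Copilot cannot be reached, are hypothetical and are not taken from the PixelPilot implementation.

```typescript
// Hypothetical labels for the two model families described above.
type ModelBackend = "offline" | "copilot";

// Assumed policy: use the Copilot models only when the machine is online
// and Copilot is available; otherwise fall back to the local offline models.
function selectBackend(hasInternet: boolean, copilotAvailable: boolean): ModelBackend {
  return hasInternet && copilotAvailable ? "copilot" : "offline";
}
```

Under this assumed policy, a user without a connection is always served by the offline models, which matches the stated goal of letting children generate content without internet access.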

Diagram Overview

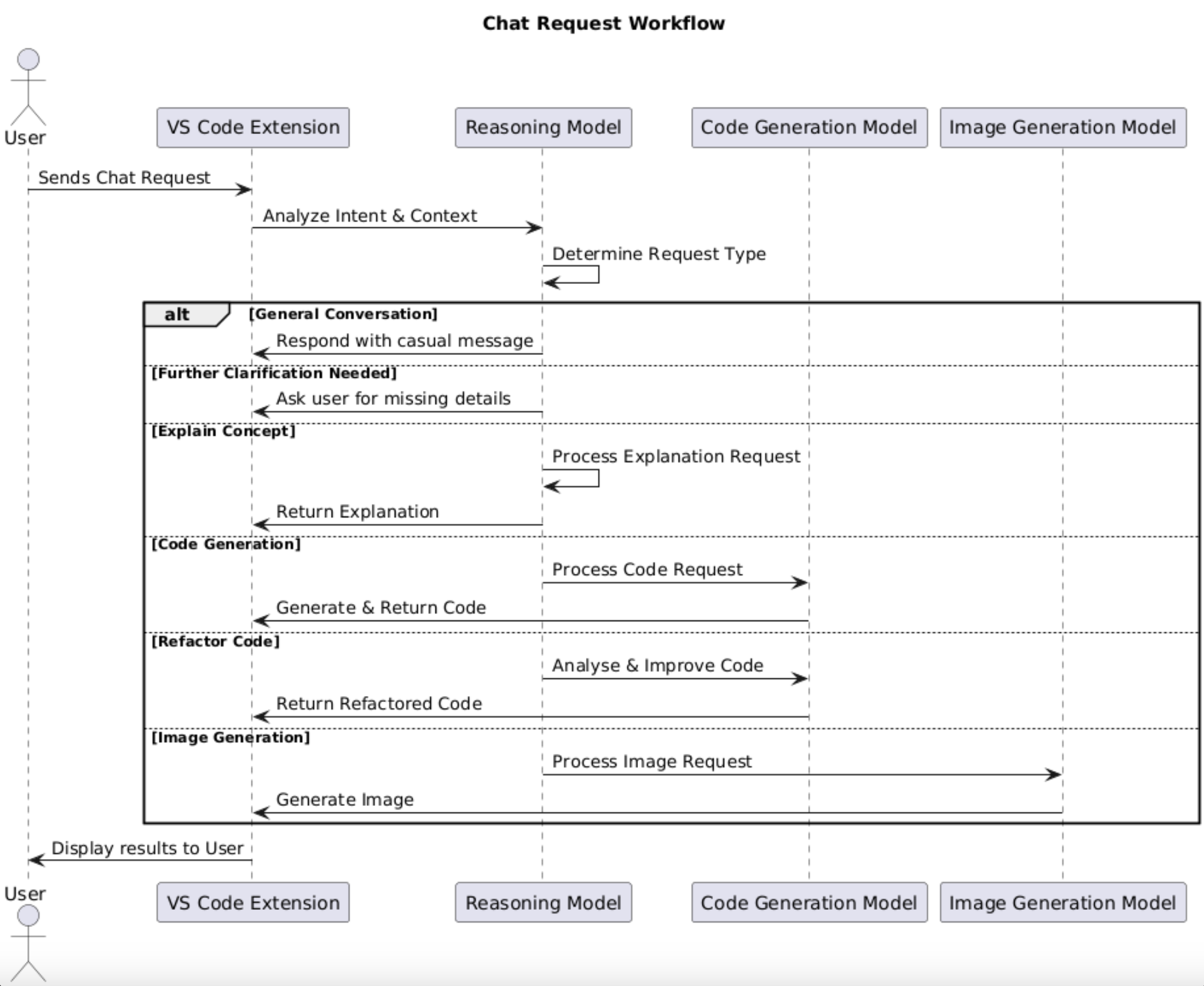

This sequence diagram takes a closer look at the system's request-handling logic. It shows the detailed flow of a user’s interaction once a chat request is made through the VS Code Extension, including how the front-end communicates with the back-end reasoning logic and the AI models (the Reasoning Model, the Code Generation Model, and the Image Generation Model) to analyze the request and generate an appropriate response, such as an explanation, a code snippet, or an image.

Actors & Components

The User is the person who interacts with the PixelPilot system through the VS Code Extension. They initiate the workflow by submitting a prompt or message, which starts the decision-making process.

The VS Code Extension is the front-end interface and the communication bridge between the user and the models running in the back-end. It collects user input, passes it along for processing, and renders the resulting output (code, explanations, or visuals) back to the user in the editor.

The Reasoning Model sits at the core of the decision-making process. It interprets the user's intent and context to determine the type of request being made. This model handles a variety of tasks, including responding to general conversation, requesting clarification for ambiguous input, and offering conceptual explanations for educational queries.

The Code Generation Model is activated when the user’s request relates to creating or editing code. It either generates fresh code based on user input or refactors existing code to improve quality, clarity, or performance.

The Image Generation Model is responsible for handling visual requests, such as generating images for game assets or user interface designs. When invoked, it processes the given prompt and returns the corresponding image output to the front-end.
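The three kinds of output that the models return to the extension (explanations or conversational text, code, and images) can be modeled as a discriminated union. The sketch below is illustrative only: the type `ModelResponse`, its field names, and the helper `summarizeResponse` are hypothetical and do not come from the PixelPilot source.

```typescript
// Hypothetical shape of a response handed back to the front-end.
type ModelResponse =
  | { kind: "text"; text: string }                     // Reasoning Model output
  | { kind: "code"; language: string; source: string } // Code Generation Model output
  | { kind: "image"; uri: string };                    // Image Generation Model output

// Produce a one-line summary of a response, for example for logging.
function summarizeResponse(response: ModelResponse): string {
  switch (response.kind) {
    case "text":
      return response.text;
    case "code": {
      const lineCount = response.source.split("\n").length;
      return `[${response.language} code, ${lineCount} line(s)]`;
    }
    case "image":
      return `[image: ${response.uri}]`;
  }
}
```

Tagging each response with a `kind` field lets the front-end decide how to render it without inspecting the payload itself.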

Workflow Breakdown

The workflow begins when the user sends a chat request through the VS Code Extension. This request is then forwarded to the Reasoning Model, which analyzes the intent and context of the message to determine what kind of request it is handling.

Based on this analysis, the system follows one of several paths. For general conversation, the Reasoning Model responds with a casual message (e.g., a greeting or light interaction). If the message is unclear, the model asks the user for more information before proceeding. When the user asks for a concept explanation, the Reasoning Model processes the request and returns an informative response.

If the user requests code generation, the Code Generation Model is invoked. It creates the appropriate code and sends it back through the extension. For a refactoring request, the same model analyzes the provided code and returns a cleaner or improved version.

When the user requests image generation, the Image Generation Model processes the prompt and returns the generated visual.

Finally, once any of the models has generated a response, the VS Code Extension displays the result to the user, completing the request cycle.
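The branching described in this workflow can be summarized as a routing function that maps each kind of request to the model that handles it. This is a minimal sketch, not the actual implementation: the names `RequestKind`, `ModelTarget`, and `routeRequest` are hypothetical, and the sketch assumes the request has already been classified by the Reasoning Model.

```typescript
// Hypothetical request categories, one per path in the workflow.
type RequestKind =
  | "conversation"
  | "clarification"
  | "explanation"
  | "generateCode"
  | "refactorCode"
  | "generateImage";

// The three models named in the sequence diagram.
type ModelTarget = "reasoning" | "codeGeneration" | "imageGeneration";

// Map an already-classified request to the model that produces the response.
function routeRequest(kind: RequestKind): ModelTarget {
  switch (kind) {
    // Conversation, clarification, and explanations stay with the Reasoning Model.
    case "conversation":
    case "clarification":
    case "explanation":
      return "reasoning";
    // Both new code and refactoring are handled by the same model.
    case "generateCode":
    case "refactorCode":
      return "codeGeneration";
    case "generateImage":
      return "imageGeneration";
  }
}
```

For example, `routeRequest("refactorCode")` returns `"codeGeneration"`, reflecting that generation and refactoring share one model.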