Testing

Testing Overview

Testing was a crucial part of our development process, ensuring the accuracy, reliability, and efficiency of our system. Because our solution relies on a Large Language Model (LLM), traditional deterministic testing methods were not always applicable: LLM outputs can vary with context and prompt phrasing, so the same input does not always produce the same text. Instead, we focused on evaluating the system’s ability to process documents, retrieve relevant information, and generate relevant responses. By systematically testing each component, we ensured that our solution met user requirements and functioned effectively across all of our use cases.
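As a minimal sketch of how LLM-generated answers can be checked without expecting one exact string, the test below asserts only that key facts appear in a response. The helper `missing_terms`, the sample answers, and the customer name are hypothetical illustrations rather than code from our project; in practice the answers would come from the model instead of being hard-coded.

```python
import re


def missing_terms(response: str, required_terms: list[str]) -> list[str]:
    """Hypothetical helper: return the required terms absent from a response.

    Matching is case-insensitive and anchored on word boundaries, so a
    rephrased answer still passes as long as the key facts are present.
    """
    missing = []
    for term in required_terms:
        pattern = r"\b" + re.escape(term) + r"\b"
        if not re.search(pattern, response, flags=re.IGNORECASE):
            missing.append(term)
    return missing


def test_answer_mentions_key_facts():
    # Two differently worded answers an LLM might plausibly return.
    answers = [
        "The contract runs for 24 months and covers Acme Ltd.",
        "Acme Ltd's agreement has a duration of 24 months.",
    ]
    for answer in answers:
        assert missing_terms(answer, ["Acme Ltd", "24 months"]) == []


def test_answer_missing_fact_is_flagged():
    # An answer that omits the duration should be reported as incomplete.
    assert missing_terms("The contract covers Acme Ltd.", ["24 months"]) == ["24 months"]
```

Checking for required facts rather than exact wording tolerates the phrasing variation described above while still failing when an answer drops important information.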

Unit Testing

Unit testing was an essential step in ensuring the robustness and correctness of our system. By isolating individual components and verifying their behaviour under controlled conditions, we could detect and resolve issues early in development. Given the complexity of our Retrieval Augmented Generation (RAG) pipelines, unit tests helped us validate key functions such as data extraction and chat history retrieval. By rigorously testing these components, we ensured that our system performed reliably and consistently across various file formats and input conditions.

We chose pytest as our unit testing framework for its simplicity, flexibility, and powerful feature set. Pytest supports concise test functions and lets setup code be reused through fixtures, which made it well suited to our needs. Additionally, pytest provides detailed failure reports, which made debugging quick and kept the test cycle efficient. Finally, mocking dependencies allowed us to simulate different test cases without relying on actual files, keeping the testing environment controlled and reproducible.
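A minimal pytest-style sketch of this mocking approach, assuming a hypothetical `load_document` helper that reads a text file: `unittest.mock.patch` replaces the built-in `open` with `mock_open`, so the test exercises the parsing logic without any file existing on disk. The documentation use case extracts text from PDFs with pdfplumber; plain text stands in here only to keep the example self-contained.

```python
from unittest.mock import mock_open, patch


def load_document(path: str) -> str:
    """Hypothetical loader: read a text file and collapse all whitespace."""
    with open(path, encoding="utf-8") as handle:
        return " ".join(handle.read().split())


def test_load_document_without_real_file():
    fake_contents = "Section 1\n\n  Scope   of work\n"
    # Replace the built-in open() so nothing is read from disk.
    with patch("builtins.open", mock_open(read_data=fake_contents)) as mocked:
        result = load_document("does_not_exist.txt")
    # The mock records how it was called, so the arguments can be verified too.
    mocked.assert_called_once_with("does_not_exist.txt", encoding="utf-8")
    assert result == "Section 1 Scope of work"
```

In a full suite, the fake contents could be supplied by a pytest fixture so that several tests share the same setup.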

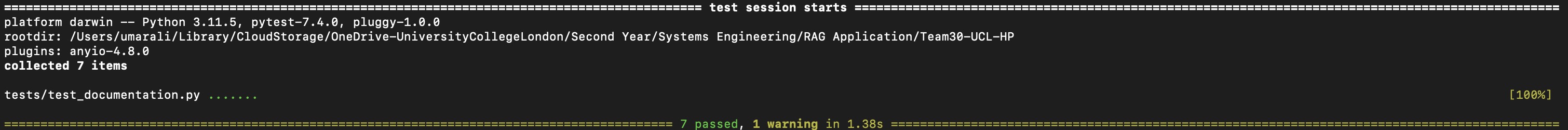

The testing suite for the documentation use case verifies that the system correctly extracts, processes, and compares document versions. The unit tests check key functionality such as extracting text from PDFs using pdfplumber, normalising text formatting, and identifying document names and versions from filenames. The version comparison logic is tested by checking that added and removed lines are detected accurately. Additional tests validate the processing of multiple document versions to ensure proper version tracking. The system’s ability to log and retrieve chat metrics is also tested, including scenarios with and without chat history. We also used mocking to isolate dependencies, so that each test runs reliably and independently.
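The version comparison and filename parsing described above could be sketched as follows. This is an illustration under assumptions, not the project’s implementation: it uses Python’s standard `difflib.ndiff` to find added and removed lines, and it assumes a hypothetical `<name>_v<version>.pdf` naming convention. The function names `parse_filename` and `compare_versions` are likewise hypothetical.

```python
import difflib
import re


def parse_filename(filename: str) -> tuple[str, str]:
    """Hypothetical parser: split 'install_guide_v2.1.pdf' into ('install_guide', '2.1').

    Assumes an illustrative '<name>_v<version>.pdf' naming convention.
    """
    match = re.fullmatch(r"(?P<name>.+)_v(?P<version>\d+(?:\.\d+)*)\.pdf", filename)
    if match is None:
        raise ValueError(f"Unrecognised filename: {filename}")
    return match["name"], match["version"]


def compare_versions(old_text: str, new_text: str) -> dict[str, list[str]]:
    """Return the lines added and removed between two document versions."""
    diff = difflib.ndiff(old_text.splitlines(), new_text.splitlines())
    added, removed = [], []
    for line in diff:
        # ndiff prefixes lines with '+ ', '- ', '  ' or '? ' (intraline hints, skipped).
        if line.startswith("+ "):
            added.append(line[2:])
        elif line.startswith("- "):
            removed.append(line[2:])
    return {"added": added, "removed": removed}


def test_parse_filename():
    assert parse_filename("install_guide_v2.1.pdf") == ("install_guide", "2.1")


def test_compare_versions_detects_changes():
    old = "Step 1: Power on\nStep 2: Log in\nStep 3: Reboot"
    new = "Step 1: Power on\nStep 2: Log in as admin\nStep 3: Reboot"
    changes = compare_versions(old, new)
    assert changes["added"] == ["Step 2: Log in as admin"]
    assert changes["removed"] == ["Step 2: Log in"]
```

A modified line shows up as one removal plus one addition, which is what the test asserts.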

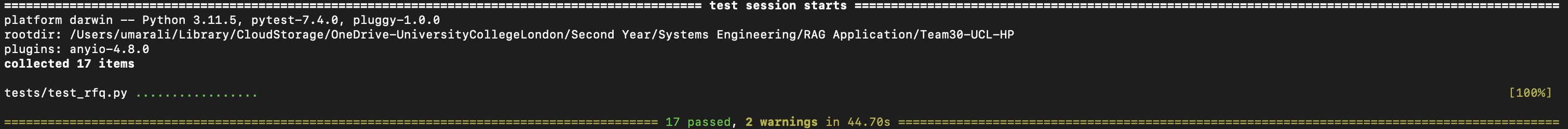

The testing suite for the RFQ use case ensures that the system accurately extracts, processes, and retrieves relevant information from procurement documents. Unit tests cover critical functionalities, such as extracting text from PDFs, identifying and categorising structured tables, and extracting key metadata like customer names, contract durations, and pricing details. The document processing pipeline is validated by testing document loading, splitting, and vector database creation. Additionally, tests ensure that the RAG-based retrieval system correctly retrieves relevant context and generates responses. The chat history functionality is also tested, verifying that past interactions are properly logged and analysed for metrics.
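To test retrieval without building a real vector database, the retriever and the LLM can both be replaced with mocks. The sketch below assumes a hypothetical `answer_query` function and hypothetical `retriever.search` and `llm.generate` interfaces; these do not correspond to any specific library’s API. The test checks that the question reaches the retriever and that the retrieved RFQ details reach the prompt sent to the LLM.

```python
from unittest.mock import MagicMock


def answer_query(question, retriever, llm, k=3):
    """Hypothetical RAG step: retrieve context chunks, then prompt the LLM."""
    chunks = retriever.search(question, k=k)
    context = "\n\n".join(chunks)
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    return llm.generate(prompt)


def test_answer_query_passes_retrieved_context_to_llm():
    # Stand-in retriever returning fixed, illustrative RFQ snippets.
    retriever = MagicMock()
    retriever.search.return_value = [
        "Customer: Acme Ltd",
        "Contract duration: 36 months",
    ]
    # Stand-in LLM with a canned answer, so the test is deterministic.
    llm = MagicMock()
    llm.generate.return_value = "The contract lasts 36 months."

    answer = answer_query("How long is the Acme contract?", retriever, llm)

    retriever.search.assert_called_once_with("How long is the Acme contract?", k=3)
    prompt = llm.generate.call_args.args[0]
    assert "Contract duration: 36 months" in prompt
    assert answer == "The contract lasts 36 months."
```

Mocking both ends isolates the glue logic; the quality of real retrieval and generation is better judged separately, for example through user acceptance testing.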

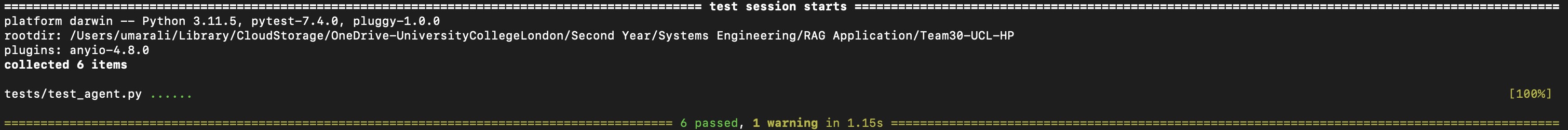

The testing suite for the telemetry use case ensures the accuracy and reliability of data extraction, transformation, and storage. Unit tests validate core functionality, including database initialisation, Excel file processing, and the standardisation of column names for consistency. The tests also verify metadata extraction, particularly the retrieval of date suffixes from telemetry reports. Additionally, tests confirm that raw data is cleaned and transformed correctly before being saved into a structured database. Finally, the SQLite storage integration is validated by checking that tables are created correctly and populated with the expected data.
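A compact sketch of these telemetry checks, using an in-memory SQLite database so each test starts from a clean state. The helpers `standardise_column`, `extract_date_suffix`, and `save_rows`, the `_YYYY-MM-DD.xlsx` filename pattern, and the column names are all hypothetical. Reading the Excel workbook itself is omitted, and rows are passed as plain tuples.

```python
import re
import sqlite3
from typing import Optional


def standardise_column(name: str) -> str:
    """Hypothetical: lower-case a header and collapse non-alphanumerics to '_'."""
    return re.sub(r"[^0-9a-z]+", "_", name.strip().lower()).strip("_")


def extract_date_suffix(filename: str) -> Optional[str]:
    """Hypothetical: return the date in e.g. 'cpu_report_2024-03-01.xlsx', else None."""
    match = re.search(r"_(\d{4}-\d{2}-\d{2})\.xlsx$", filename)
    return match.group(1) if match else None


def save_rows(conn: sqlite3.Connection, table: str, headers: list, rows: list) -> None:
    """Hypothetical: create the table if needed and insert rows under clean column names."""
    columns = [standardise_column(h) for h in headers]
    column_sql = ", ".join(f'"{c}"' for c in columns)
    placeholders = ", ".join("?" for _ in columns)
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({column_sql})')
    # Row values are bound through '?' placeholders rather than string formatting.
    conn.executemany(f'INSERT INTO "{table}" ({column_sql}) VALUES ({placeholders})', rows)
    conn.commit()


def test_telemetry_pipeline():
    assert standardise_column(" CPU Utilisation (%) ") == "cpu_utilisation"
    assert extract_date_suffix("cpu_report_2024-03-01.xlsx") == "2024-03-01"
    assert extract_date_suffix("cpu_report.xlsx") is None

    conn = sqlite3.connect(":memory:")  # fresh, throwaway database for this test
    save_rows(conn, "telemetry", ["Host Name", "CPU Utilisation (%)"],
              [("srv-01", 42.5), ("srv-02", 17.0)])

    # The table should exist and hold exactly the rows that were inserted.
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table'")]
    assert tables == ["telemetry"]
    rows = conn.execute(
        "SELECT host_name, cpu_utilisation FROM telemetry ORDER BY host_name").fetchall()
    assert rows == [("srv-01", 42.5), ("srv-02", 17.0)]
    conn.close()
```

Because SQLite cannot bind identifiers as parameters, the column names are interpolated into the SQL only after standardisation limits them to lowercase letters, digits, and underscores, while row values go through placeholders.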

Our unit tests validated the functionality of our system and gave us confidence in its reliability. They covered critical functions such as text extraction, version comparison, and chat metrics retrieval, confirming that each component operated as expected. Meaningful assertions confirmed expected outputs, while mocking allowed us to isolate dependencies. This coverage meant that errors and unexpected behaviours were caught early, contributing to a stable and well-functioning system.

User Acceptance Testing

User acceptance testing was conducted to ensure that our solutions met the expectations and needs of our users. Since our applications were designed to assist business leaders in analysing documentation incoherencies, procurement (RFQ) documents, and operational telemetry data, it was crucial to validate that they provided relevant, accurate, and actionable insights. User acceptance testing allowed us to identify any usability issues, make sure that the outputs aligned with user expectations, and refine the system based on real-world feedback before deployment.

For the documentation incoherency RAG, our clients tested the system by uploading multiple versions of technical documents to verify whether it correctly highlighted changes, inconsistencies, and missing information. They provided feedback on the clarity of the detected differences, the accuracy of change explanations, and the usefulness of retrieved information. Based on their input, we refined the system by adjusting how changes were indicated, which improved the system’s ability to provide meaningful comparisons.

For the RFQ documentation RAG, our clients evaluated the system by querying it with specific procurement requirements and checking whether the retrieved RFQs contained the necessary details. They tested the ease of searching for specific tables, the accuracy of extracted information, and the system’s ability to generate new tables. Their feedback led us to adjust how the extracted data was represented, which improved the retrieval of relevant information.

For the operational telemetry data SQL agent, our clients provided real-world system usage logs and queried the agent for insights into metrics such as CPU utilisation and uptime trends. They assessed whether the system accurately processed and retrieved resource statistics and provided meaningful insights. Their feedback helped us fine-tune query responses so that the system summarised system trends in a clear and actionable manner.

Through this structured user acceptance testing process, we ensured that our applications met the needs of business leaders by providing relevant, accurate, and easy-to-use insights. The feedback gathered helped refine the system, improving both functionality and usability before deployment.

Compatibility Testing

To ensure the system's interface works seamlessly across environments, we carried out compatibility testing across different browsers and operating systems. First, we tested the Gradio interface on major web browsers such as Google Chrome, Mozilla Firefox, Microsoft Edge, and Safari. The goal was to verify that all UI elements, including chat boxes, buttons, and file upload components, rendered correctly and that users could interact with them without issues. This testing confirmed that visual elements such as rendered Markdown, text fields, and buttons kept proper alignment and formatting across browsers.

In addition to browser compatibility, we tested the interface across different operating systems, including Windows and macOS. This allowed us to confirm that the interface functions consistently, regardless of the user's platform. We also specifically tested the responsiveness of the layout to ensure the UI adapts correctly to varying screen sizes and resolutions.