Individual Contribution

Here is the breakdown of each team member's contribution to the project, as a percentage of the work on each task:

| Task | Carmen | Jason | Radu | Mari |
|---|---|---|---|---|
| Client Liaison | 45 | 45 | 10 | 0 |
| Liaison with other teams | 50 | 30 | 20 | 0 |
| HCI | 40 | 40 | 20 | 0 |
| Requirement Analysis | 40 | 40 | 20 | 0 |
| Pitch Presentations | 40 | 40 | 20 | 0 |
| Coding | 45 | 40 | 15 | 0 |
| PR Reviews | 95 | 5 | 0 | 0 |
| Blog | 50 | 50 | 0 | 0 |
| Testing | 25 | 25 | 50 | 0 |
| Report Writing | 50 | 30 | 20 | 0 |
| Report Website | 10 | 90 | 0 | 0 |
| Video Editing | 80 | 10 | 10 | 0 |
| Overall | 40% | 40% | 20% | 0% |

Developer Feedback

Since a major goal of our project was to support other developers, we asked students from other MotionInput teams for feedback on how well we succeeded in the following aspects of the project:

- Creating clear documentation for developers to expand the functionality of MotionInput.

- Designing a system that would be more easily expandable than MotionInput V2.

- Designing a system that would be noticeably more efficient than MotionInput V2.

We received feedback from the following 8 students:

- Team 5: Alexandros Theofanous

- Team 30: Oluwaponmile Femi-Sunmaila, Yadong (Adam) Liu, Andrzej Szablewski

- Team 33: Phoenix Sun

- Team 34: Fawziyah Hussain, Siam Islam

- Team 35: Raquel Sofia Fernandes Marques da Silva

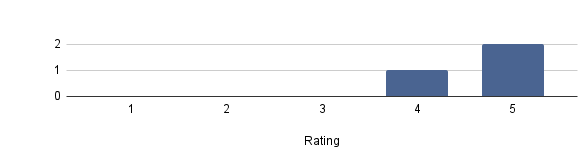

Documentation


How clear (1-unclear, 5-clear) would you say was the documentation for:

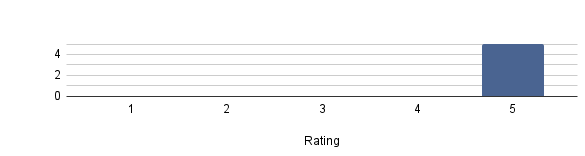

Adding/extending modules in MI v3:

Adding GestureEvents to MI v3:

Adding handlers to MI v3:

Adding new functionality through JSON files to MI v3: -

How would you compare the documentation of MI v3 to v2?

- “V3 is certainly better”

- “Better and much more accessible”

- “V3 documentation is vastly superior”

- “V3 is better. Don't really have "documentation" in V2”

- “Huge difference. The documentation of V3 was amazing”


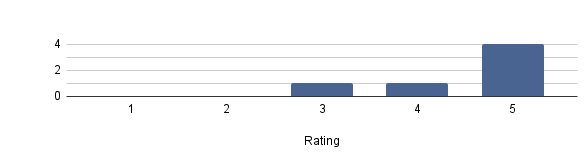

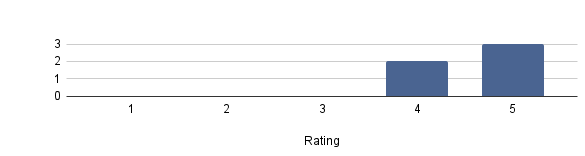

How would you compare the simplicity of adding new features to v3 and v2 (1 = easier in v2, 5 = easier in v3)?

Describe how you would compare the simplicity of adding new features to v3 and v2.

- “V3 is simpler”

- “I still have nightmares about V2, this is much better”

- “Adding new features to v3 is definitely easier but there are more rules to follow, and less freedom is given, so I feel more time may be needed compared to v2 for becoming familiar with the codebase and how things work. But once you're familiar, adding new features is a lot simpler and definitely less tedious”

- “V3 is much, much, much better”

- “The new features on V.3 are extremely easier”


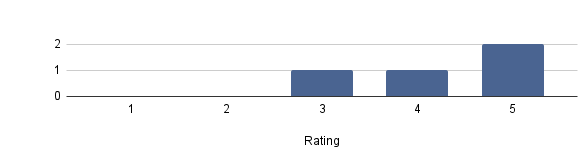

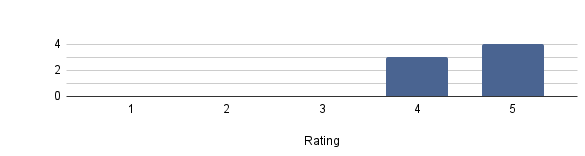

How would you compare the latency of v3 and v2 (1 = better in v2, 5 = better in v3)?

How would you compare the experience of using the v2 and v3 builds?

- “V2 was much slower, the lag was a problem when testing with reaction-based games. V3 made all those problems go away”

- “Much better experience using MI v3”

- “V3 is much faster than v2. Especially starting MotionInput core”

- “V3 IS WAY FASTER!!! Amazing experience!”


Did you notice any differences in efficiency between v2 and v3?

- “V3 much more efficient and reactive, latency much more reduced, better feeling when using it overall”

- “Yes, start time is much faster in v3”

- “V3 is a lot faster”

- “Way faster”

- “The loading time has severly been diminished”


Did you face any problems with the architecture?

- “No calibration function in backend and adding new custom trigger is untested yet”

- “Big architecture = hard to understand, but after time works fine!”

- “Understanding it to begin with”

- “None - worked seamlessly”

- “The Implementation of the calibration required the addition of am extra camera instance that provided errors”


Is there anything you would consider a drawback of v3 compared to v2?

- “No calibration functions yet”


Were there any functional differences you would like to mention?

- “The idea of Gestures being made from a gesture factory which combines a series of primitives makes it much easier to implement more gestures.”

- “Everything dramatically changed, including my sleep schedule!”


What would you say were the biggest improvements from v2?

- “Pipe communication”

- “Compilation process, my god imagine having to follow a 15+ step pdf just to get the program on your system. Also don't get me started on code quality -- why were some comments in chinese >=[”

- “I think the biggest improvements are the documentation and modularity in v3. Being able to switch which events are currently active, what gestures they use etc. without having to change any code is amazing<”

- “In v3, we are able to use different modules at the same time”

- “Documentation, the new API allows easy addition of gestures, events and handlers. Really usable and editable”