System Architecture Diagram

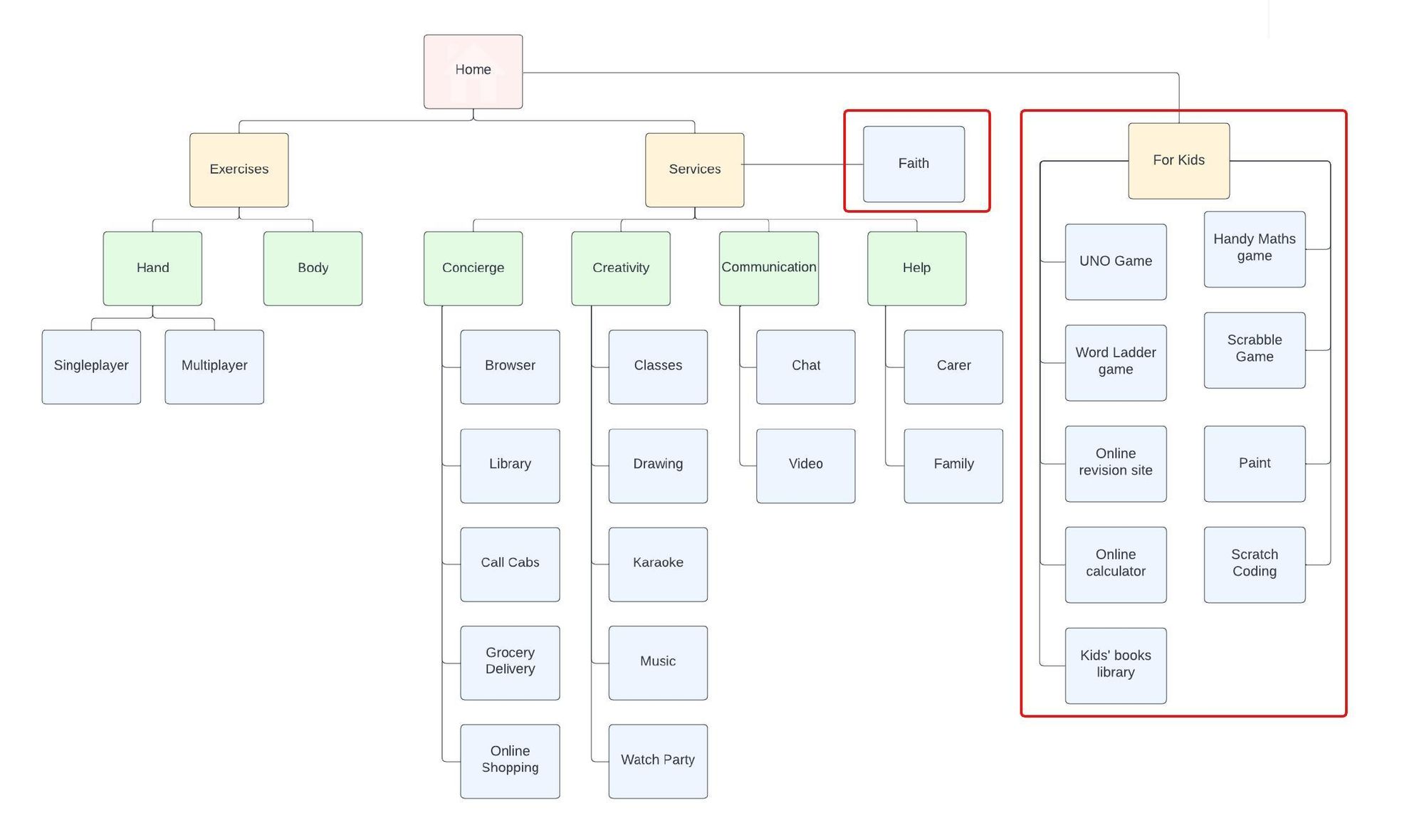

Front-End: UCL FISECARE

This updated site map for UCL FISECARE reflects our changes to the interface, which include the web apps designed for children and people who practice a religion.

Front-End: MFC Settings Window

First, the user encounters the MFC Settings App, where they can combine a variety of options to select a mode that suits their needs. The back end of the MFC app is written in C++, and upon launching MotionInput the config.json file is edited. This file holds all the settings required to launch MotionInput. For example, if the user chose to run calibration, the corresponding setting is set to "true" in config.json so that the calibration code runs. MotionInput also reads the mode_controller.json file to run the mode the user selected and to find the list of events that should be active in that mode. The MFC app updates the "current_mode" entry in mode_controller.json to run the selected mode. The full list of events is in events.json, which also tells MotionInput which gesture or interaction each event triggers.
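The hand-off between the settings app and MotionInput can be sketched as follows. This is a minimal illustration in Python rather than the MFC app's C++, and it rests on assumptions: only the file names and the "current_mode" key come from the description above, while the `run_calibration` key, the `modes` mapping and both function names are hypothetical.

```python
import json
from pathlib import Path
from typing import List


def apply_settings(data_dir: Path, mode: str, run_calibration: bool) -> None:
    """Write the user's choices into the two JSON files MotionInput reads."""
    config_path = data_dir / "config.json"
    config = json.loads(config_path.read_text())
    config["run_calibration"] = run_calibration  # hypothetical key name
    config_path.write_text(json.dumps(config, indent=2))

    controller_path = data_dir / "mode_controller.json"
    controller = json.loads(controller_path.read_text())
    # Hypothetical layout: "modes" maps each mode name to its list of events.
    if mode not in controller["modes"]:
        raise ValueError(f"unknown mode: {mode}")
    controller["current_mode"] = mode
    controller_path.write_text(json.dumps(controller, indent=2))


def active_events(data_dir: Path) -> List[str]:
    """Return the events listed for whichever mode is currently selected."""
    controller = json.loads((data_dir / "mode_controller.json").read_text())
    return controller["modes"][controller["current_mode"]]
```

Rejecting an unknown mode before writing keeps mode_controller.json from pointing at a mode that has no event list.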

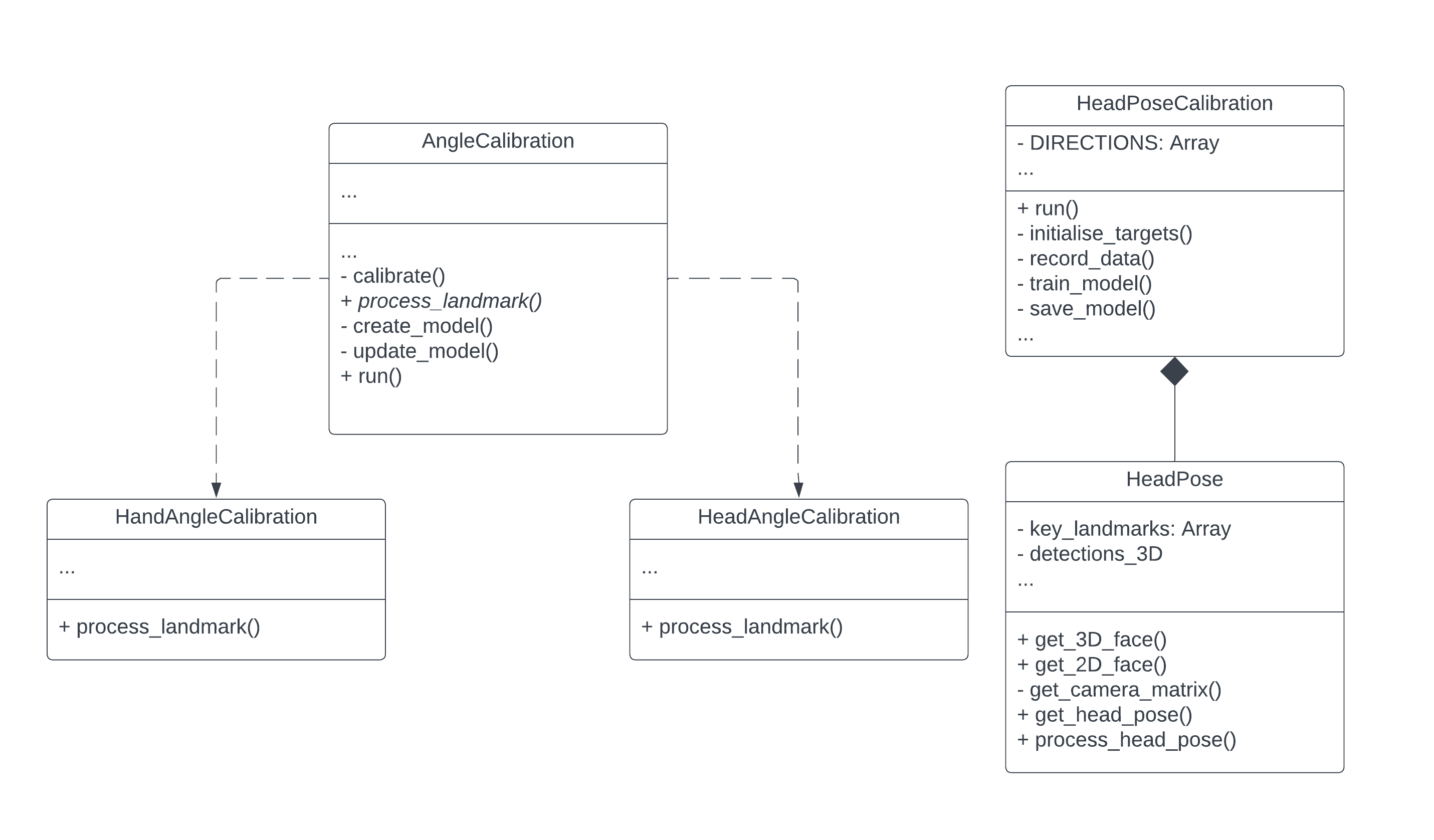

Back-End: Calibration

Next, the user completes calibration. The machine learning model is then updated in either the data/ML models/hand or the data/ML models/head folder, where the rest of MotionInput can access it easily. While MotionInput runs, the models are loaded from these folders to make predictions.

Our AngleCalibration class has one abstract method, process_landmark(), which is implemented in the child classes. Landmark processing for the head and the hand uses two different models, MediaPipe Face Mesh and MediaPipe Hands respectively, so each implementation is specific to its module.

When the run() method is called, calibration runs and a model is trained. Finally, update_model() saves the model in the ML Models data folder using Joblib.
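The class layout described above might look roughly like the sketch below. It is not the project's code: the "model" is a plain dictionary of mean feature vectors per direction, standing in for whatever estimator is actually trained, and `train_centroids`, `predict`, `load_model`, `HandCalibration` and the `model.joblib` file name are all hypothetical. The fallback to `pickle` when Joblib is not installed is also our addition, so that the sketch runs anywhere.

```python
import pickle
from abc import ABC, abstractmethod
from pathlib import Path

try:
    import joblib  # the library named in the text
except ImportError:  # fallback is an assumption, only so the sketch still runs
    joblib = None


def train_centroids(features, labels):
    """Hypothetical stand-in for training: mean feature vector per label."""
    grouped = {}
    for row, label in zip(features, labels):
        grouped.setdefault(label, []).append(row)
    return {label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in grouped.items()}


def predict(model, row):
    """Return the label whose centroid is closest to the given feature row."""
    return min(model, key=lambda label: sum((a - b) ** 2
                                            for a, b in zip(row, model[label])))


def load_model(path):
    """Load a saved model the same way update_model() wrote it."""
    if joblib is not None:
        return joblib.load(str(path))
    with open(path, "rb") as fh:
        return pickle.load(fh)


class AngleCalibration(ABC):
    def __init__(self, model_dir):
        self.model_path = Path(model_dir) / "model.joblib"  # hypothetical name
        self.model = None

    @abstractmethod
    def process_landmark(self, landmarks):
        """Turn module-specific landmarks into a feature vector."""

    def run(self, samples):
        """Train on a list of (landmarks, direction) pairs, then save."""
        features = [self.process_landmark(lm) for lm, _ in samples]
        labels = [direction for _, direction in samples]
        self.model = train_centroids(features, labels)
        self.update_model()

    def update_model(self):
        """Persist the trained model so the rest of the system can load it."""
        self.model_path.parent.mkdir(parents=True, exist_ok=True)
        if joblib is not None:
            joblib.dump(self.model, str(self.model_path))
        else:
            with open(self.model_path, "wb") as fh:
                pickle.dump(self.model, fh)


class HandCalibration(AngleCalibration):
    def process_landmark(self, landmarks):
        # Placeholder: real code would derive angles from hand landmarks.
        return [float(v) for v in landmarks]
```

Keeping run() and update_model() in the base class means a child class only has to supply process_landmark(), which matches the single abstract method described above.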

In the HeadPose class, we define the methods that calculate the head pose. The method process_head_pose() returns 0 if the landmarks could not be processed, or 1 along with the Euler angles if processing succeeded. Our HeadPose Calibration class uses this method and trains a model from the Euler angles and directions.
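The return contract of process_head_pose() and the way a calibration step could consume it can be sketched as below. The function body is a stub, not the real pose estimation. Returning `None` in place of the angles on failure, the (pitch, yaw, roll) ordering and the `collect_training_data` helper are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

EulerAngles = Tuple[float, float, float]  # (pitch, yaw, roll); order is assumed


def process_head_pose(landmarks) -> Tuple[int, Optional[EulerAngles]]:
    """Stub of the described contract: 0 on failure, 1 plus angles on success."""
    if not landmarks:
        return 0, None
    # Placeholder: real code would estimate the pose from face landmarks.
    pitch, yaw, roll = landmarks[:3]
    return 1, (float(pitch), float(yaw), float(roll))


def collect_training_data(frames) -> Tuple[List[List[float]], List[str]]:
    """Keep only the (landmarks, direction) frames that processed successfully."""
    features, labels = [], []
    for landmarks, direction in frames:
        status, angles = process_head_pose(landmarks)
        if status == 1:
            features.append(list(angles))
            labels.append(direction)
    return features, labels
```

Checking the status flag before using the angles means frames where no face was detected are simply skipped rather than fed into training.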

Back-End: MotionInput API

New modes created for facial navigation:

- bedside_nav_face_speech

- bedside_nav_face_gestures

- bedside_nav_face_joystick_cursor_gestures

- bedside_nav_face_joystick_cursor_speech

New modes created for hand navigation:

- bedside_nav_left_touch

- bedside_nav_right_touch

One of the modes listed above will be running at any given time, and a few specific gesture events have been created for it to obtain the desired interaction with the computer.

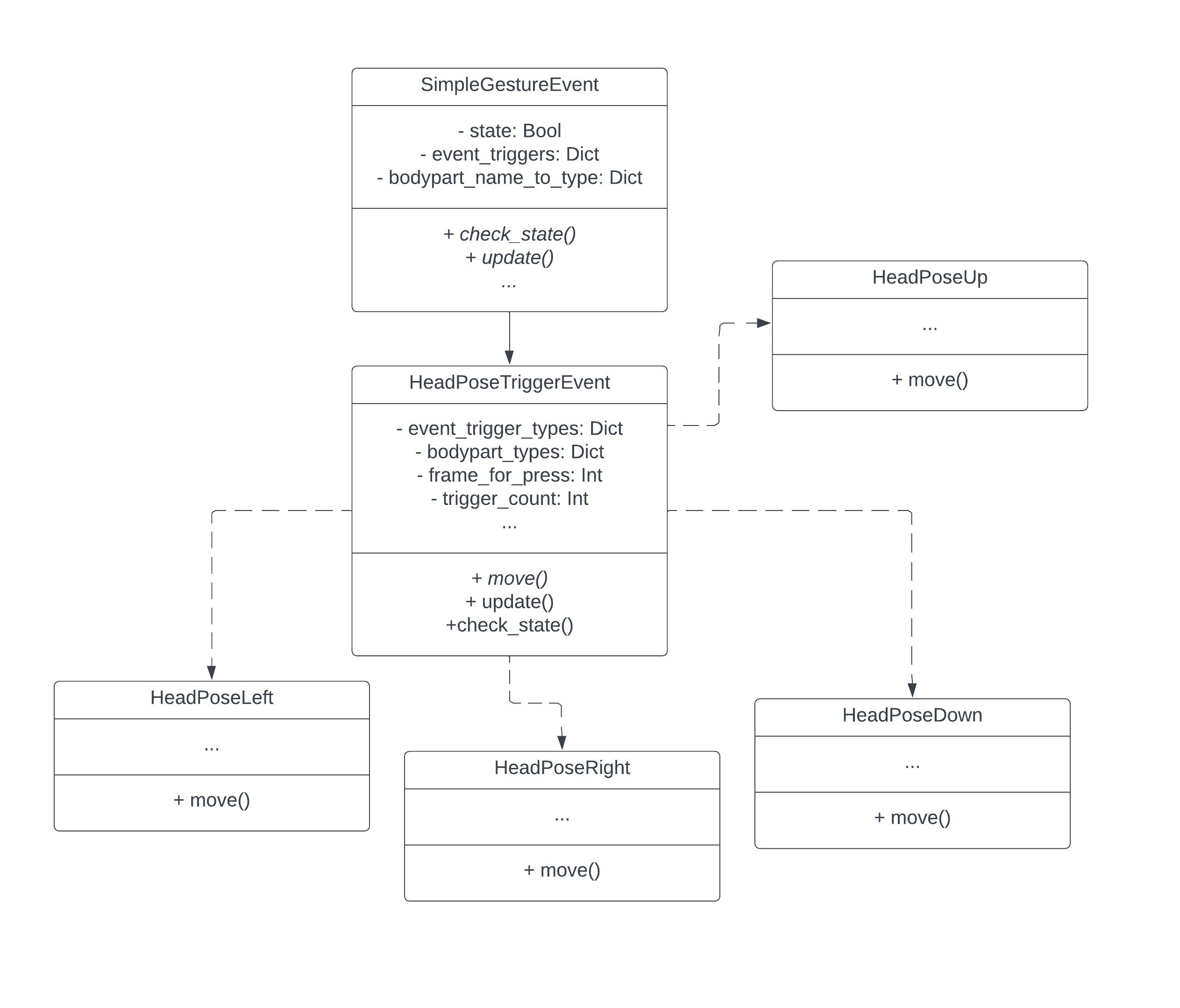

Below is a sub-component of the new head pose navigation mode, illustrating the composition of a head pose gesture event.